📢China just released its much-anticipated regulation on generative AI.

🧵below w/ my initial notes/reactions.

TLDR: the final version is *much* less strict than the April draft version. This reflects a very active policy debate in 🇨🇳 + econ concerns.

https://t.co/fblFYZ5bVb cac.gov.cn/2023-07/13/c_1…

2/x

Scope: Article 2 dramatically narrows the scope of AIGC activities covered. The draft version included R&D, general use of AIGC, and provision of AIGC services to the public.

This version cuts R&D and general use, and covers just providing AIGC to public. *Big* change

3/x

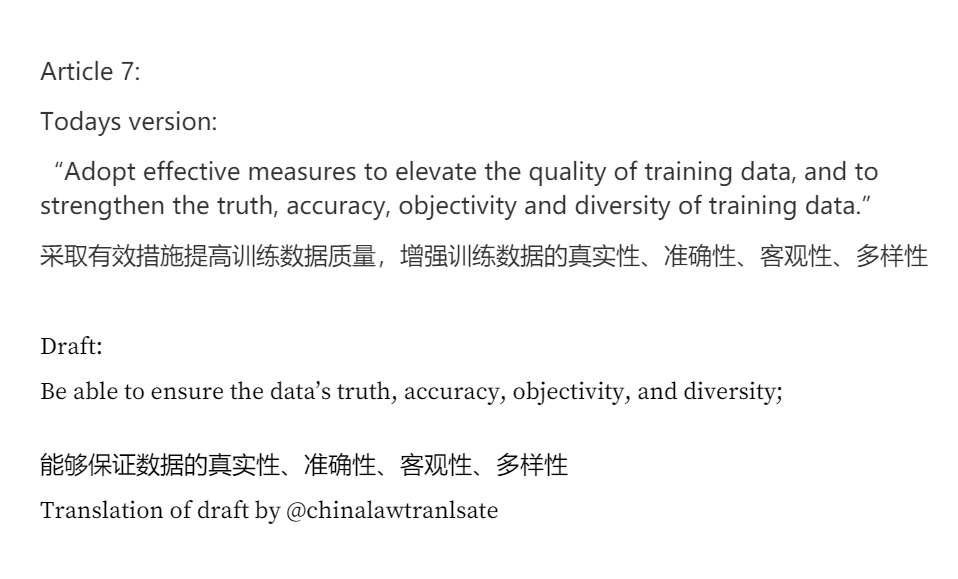

Training data:

Lowers requirements from (impossible) demand that you adopt measures to "ensure" truth of training data, to more reasonable demand that you "elevate the quality" & strengthen truthfulness, etc

Translation of draft by @ChinaLawTransl8 https://t.co/89hC5UEELd chinalawtranslate.com/en/gen-ai-draf…

4/x

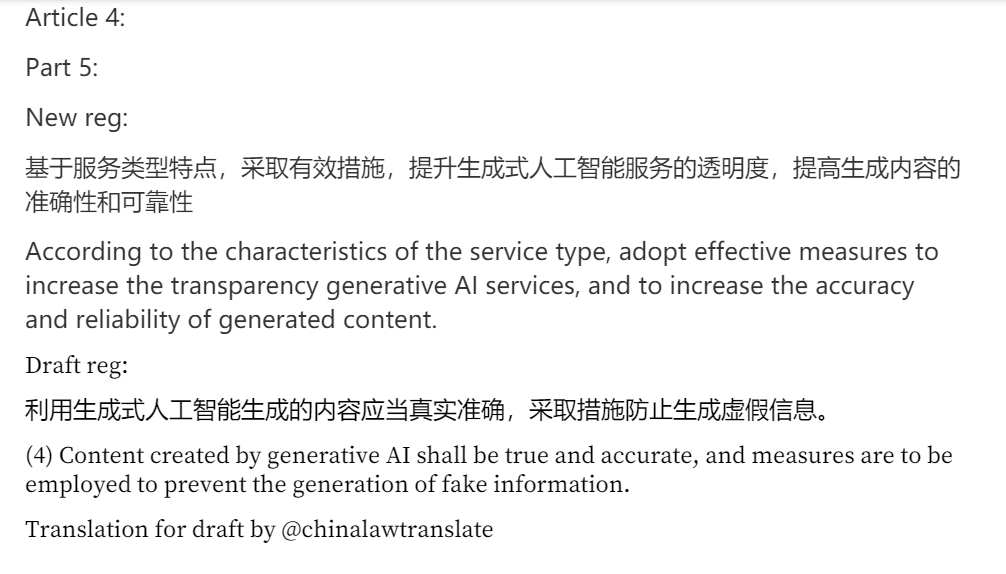

AIGC outputs:

Softens requirements from the (impossible) demand that AIGC outputs "shall be true and accurate", to requiring providers increase accuracy, reliability, etc.

Also, divides things by service type so AIGC sci-fi images don't need to be "accurate".

5/x

Lots more to say on specific requirements, but I'm short on time, so a couple of big-picture things.

#1 This regulation is explicitly "provisional" (暂行). Chinese regulators are taking an iterative approach, trying things out, getting feedback, making changes. More to come here.

6/x

CAC was the sole author of the draft regulation (as it has been for all AI regs), and we got 6 more orgs co-signing this one.

Many were expected (S&T, etc), but it's notable that the NDRC is listed second. Reframes it from a purely technical/information reg to an econ one.

7/x

In terms of why the requirements have softened, there was lots of active debate and pushback (or suggestions for improvement) on the draft from Chinese academics, policy analysts and companies. The economy is also a huge concern right now, and that was def a factor.

8/x

To learn more about China's AI governance regime, and how the country actually sets AI regulatory policy, read my recent (this week!) report on exactly that.

https://t.co/cQzqaKJIqs carnegieendowment.org/2023/07/10/chi…

9/9

Lastly: I'm not a lawyer or a professional translator, and these are very off-the-dome takes. Welcome all corrections.

Look out for proper translations by @DigiChn @ChinaLawTransl8

And analysis from many others, including @kendraschaefer @hlntnr and many more. 🫡
