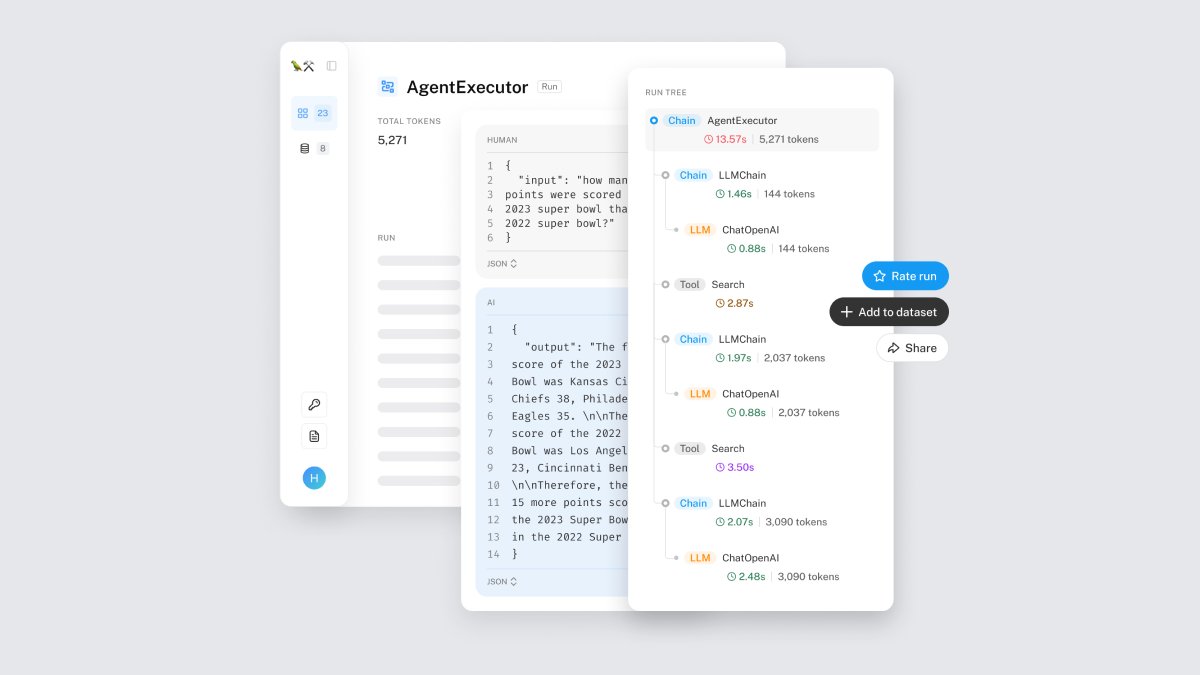

🦜🛠️ Introducing LangSmith 🦜🔗

A unified platform to help developers debug, test, evaluate, and monitor their LLM applications.

Integrates seamlessly with LangChain, but doesn't require it.

✒️Blog

What we built, where we’re going, and how our Alpha partners put LangSmith to use.

🙏🏽 to companies like @klarna, @SnowflakeDB, @streamlit, @BCG, @DeepLearningAI_, @fintual, @mendableai, @multion_ai & @quivr_brain for helping us shape LangSmith

blog.langchain.dev/announcing-lan…

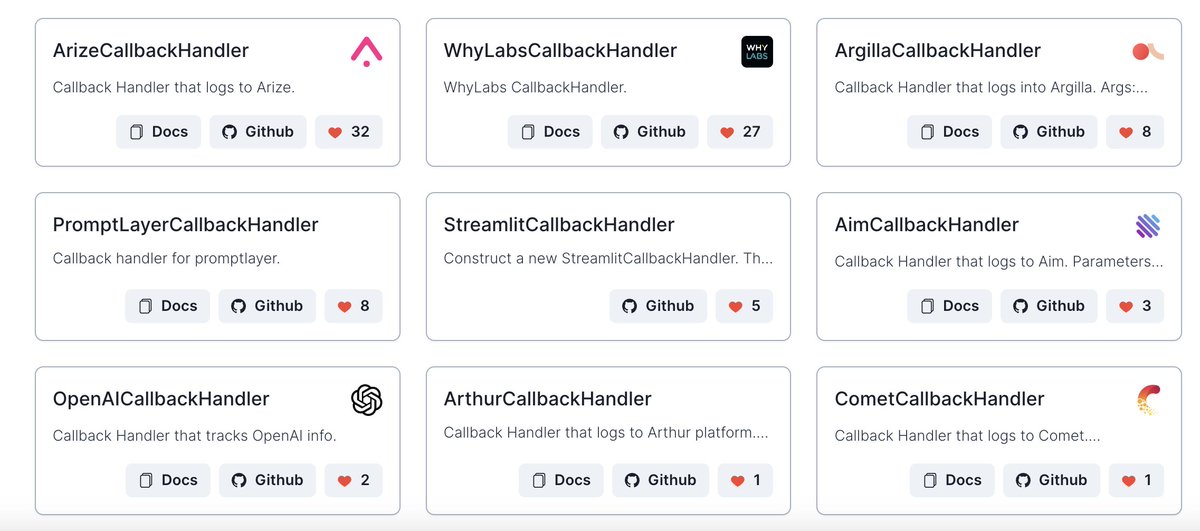

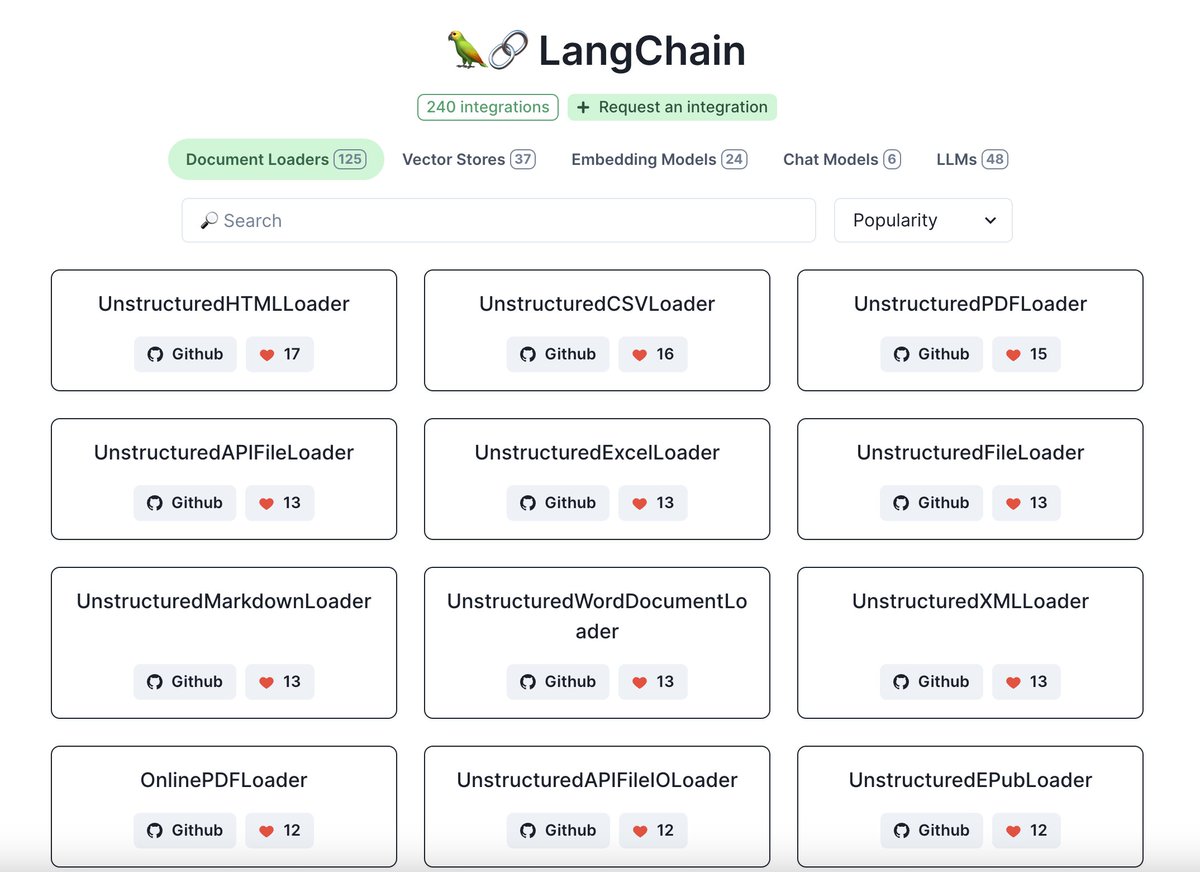

🗺️Comprehensive User Guide

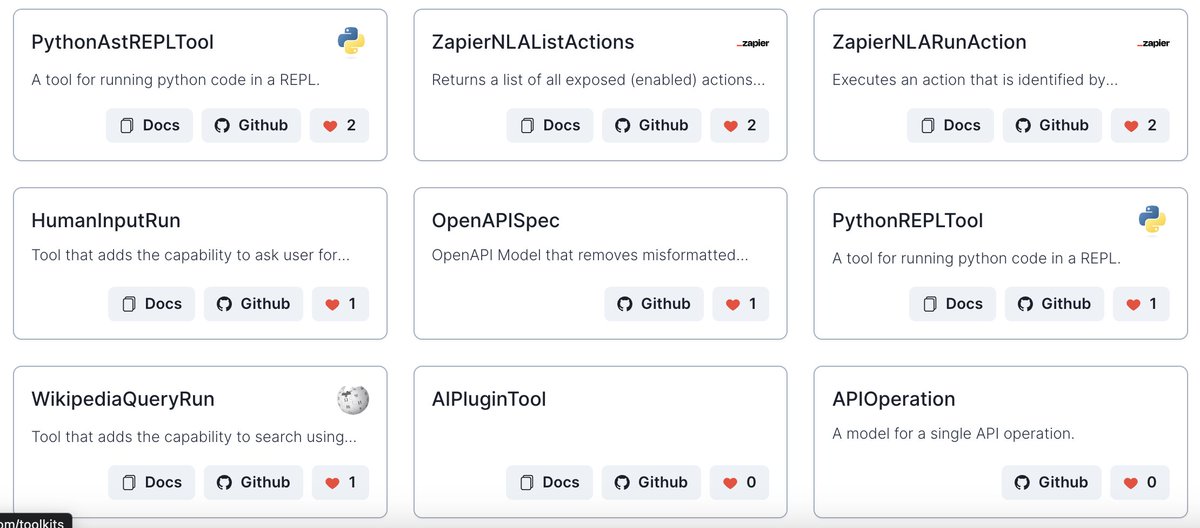

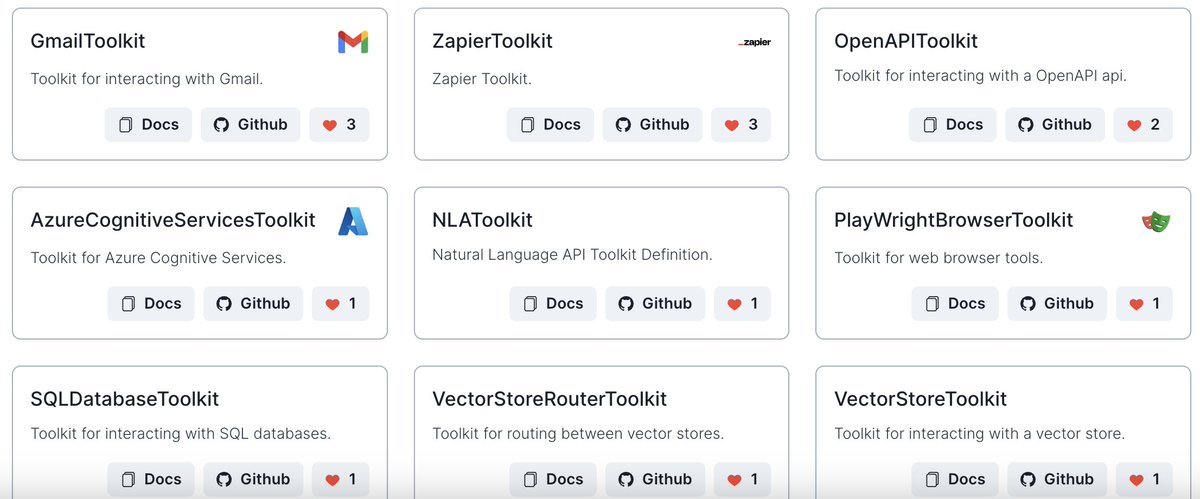

This walks through how to get the most out of the platform, including integrations with other companies, such as:

@openai & @anthropicAI for replaying runs

@thefireworksai for finetuning

@getcontextai for analytics

docs.smith.langchain.com/docs/overview

📄Documentation

For a technical walkthrough of how to interact with the platform via our SDKs (currently in Python and JavaScript), see our documentation below.

It covers how to set up, how to start sending data to the platform, and how to pull data back out.

docs.smith.langchain.com/overview
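To make the "set up" step a bit more concrete, here is a minimal sketch in Python. It is not taken from this thread: the environment variable names and the endpoint URL are assumptions based on the LangSmith docs at the time of writing and should be checked against the documentation linked above. The helpers `langsmith_env` and `apply_env` are hypothetical names used only for illustration.

```python
import os


def langsmith_env(api_key, project="default"):
    """Build the environment variables assumed to enable LangSmith tracing.

    The variable names and endpoint below are assumptions drawn from the
    LangSmith docs, not from this thread; verify them before relying on them.
    """
    if not api_key:
        raise ValueError("api_key must be a non-empty string")
    return {
        "LANGCHAIN_TRACING_V2": "true",  # turn tracing on
        "LANGCHAIN_ENDPOINT": "https://api.smith.langchain.com",
        "LANGCHAIN_API_KEY": api_key,
        "LANGCHAIN_PROJECT": project,  # project that runs are grouped under
    }


def apply_env(env, target=None):
    """Copy the settings into `target` (defaults to the process environment)."""
    target = os.environ if target is None else target
    target.update(env)
    return target


# Pulling data back out is done through the SDK. Assuming the `langsmith`
# package is installed, it might look roughly like this:
#
#   from langsmith import Client
#   client = Client()  # picks up the environment variables set above
#   for run in client.list_runs(project_name="default"):
#       print(run.name)
```

Building the settings as a plain dictionary first keeps the configuration easy to inspect or test before it touches the real process environment.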
