A new beast has entered the scene: Scopus AI. A chatbot of undisclosed design, fed with paywalled content and owned by the profit-oriented Elsevier. Marketed as "a brand new window into humanity's accumulated knowledge". Why is it problematic?

First, the owner. Elsevier has long been known for questionable practices, prioritizing profit over the health of the sci-comm ecosystem. For example, the entire editorial board of the journal NeuroImage recently resigned in protest against Elsevier's greed:

https://twitter.com/Andrew_Akbashev/status/1649149289631739905

If you want to know more about its business model, which allows for profit margins bigger than Big Tech's, I recommend the following article. If you have spent some time in academia, however, you don't need much convincing: https://t.co/kitS776iG8 (theguardian.com/science/2017/j…)

Elsevier's database of scholarly metadata, Scopus, is an important resource in academia, especially for two major use cases: research assessment and literature search. It is, however, a big black box in terms of data curation and algorithms. And it's very expensive. https://t.co/beyabh1dbb (ncbiotech.org/sites/default/…)

Elsevier has probably been carefully observing the development of new AI-powered tools like @scite and @scispace_. Elsevier's advantage is that it sits on a trove of text data that is not otherwise accessible (that is, the full texts of Elsevier articles and all the abstracts in Scopus).

Some of this data is accessible through open data sources like @OpenAlex_org, @open_abstracts, and @SemanticScholar, but getting the texts from publishers is generally a pain, because publishers, as a rule, are not willing to give away their IP for free if they can make a profit from it!

So open-source and external providers will not have the same training data to compete with Scopus. And even if you buy a copy of this data from Scopus, you would generally have a very hard time negotiating a license to build an application meant to compete with them.

Now, Scopus' stated mission is to provide a trustworthy, comprehensive product that improves academic work and research discoverability. But Elsevier's bigger mission is to make money off academia. It is appalling to see how the two intersect in the newest chatbot offering.

"Scopus AI", as it is called, is riding the generative AI wave, probably hoping to chip off some of the profit that OpenAI and others are making. Of course, hallucinations are a problem in all LLM applications. Galactica and ChatGPT both failed at this:

https://twitter.com/paniterka_ch/status/1599893718214901760

Scopus needs to appear credible, so they claim that their "advanced engineering limits the risk of hallucinations" and "taps into the trustworthy and verified knowledge". But how do they do it? What is this advanced engineering? We don't know. https://t.co/ZbU5UME3Ds (beta.elsevier.com/products/scopu…)

We actually know virtually nothing about the technology, the model, the curation process, or the feedback loops with experts that they claim are in place. No model card, no metrics, nothing. They claim the LLM usage is private, so maybe they use an open-source model, but that's all.

Let's just savor it for a moment: "our AI is using latest LLMs and other technologies, in combination with our own technology". How nice and transparent they are: they use hardware and software to create the product! Sadly, their competitors aren't any better:

https://twitter.com/paniterka_ch/status/1639265476579581960

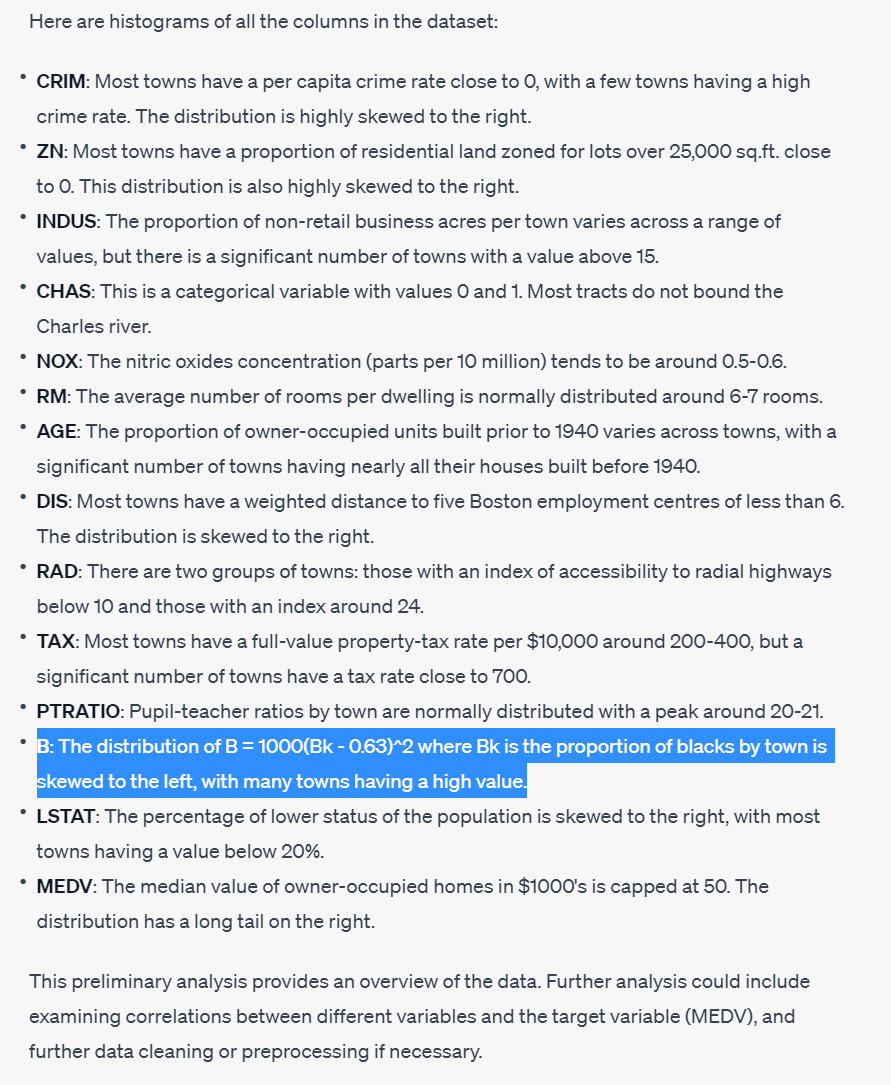

But there is another huge problem with the bold claim they are making, that Scopus AI is a window into humanity's accumulated knowledge: they don't access humanity's knowledge! It's only the titles and abstracts of a selection of articles published in some peer-reviewed journals.

Has all knowledge been published in peer-reviewed journals? What about the knowledge contained in all the other forms of writing? What about the knowledge not contained in writing at all? Who and what is overrepresented, and who is underrepresented? @timnitGebru @emilymbender

Which scientific knowledge didn't make it into Scopus? Which negative results are missing? Which results were denied publication in peer review for being "not novel enough"? Which were presented as more novel than they are, to get past this novelty bias?

Then, given that Scopus uses black-box curation for its journal list, does it encompass all the scientific knowledge in the world? No: we know well that its data doesn't represent the world proportionally (slide by @stefhaustein and @RodrigoCostas1). https://t.co/aJE2bPB3tx (zenodo.org/record/7987872)

To be honest, we don't even know whether they really use all the available abstracts, or whether they amplify Elsevier content somehow, which would sneakily boost citations to Elsevier-published articles and drive more users to their webpages (because they also sell readership data).

And last but not least: they don't even use full texts, only titles and abstracts. If you have ever read a few scientific publications, how well did the abstract represent the article's actual findings? All the finesse, the details, the critical view: this is all missing.

But of course, it is not in Elsevier's interest to communicate those limitations to the user. What they want is a trust-washed chatbot, marketed especially to less experienced researchers, locking future generations of scientists even further into Elsevier's ecosystem.

Scopus AI is now in alpha, but it will be a paid product. I can imagine it may be hard for universities to resist this offering: ChatGPT may hallucinate, but Elsevier's AI tells The Truth. And AI means gold in the toxic culture of publish or perish.

https://twitter.com/paniterka_ch/status/1619722973208014851

We already see chatbot LLMs destroying online ecosystems like Stack Overflow by redirecting traffic, and injecting bullshit into serious-sounding texts, where it becomes pretty much undetectable. How will they impact scientific publishing?

https://twitter.com/madiator/status/1683735923387936768

So I really feel it's the worst of both worlds. It's not that I'm worried. I'm just very angry at this point. Knowing all that we now know about LLMs, scientific communication, the creation of knowledge, biases, and open science: let's just destroy it all in the race for profit.

#scicomm #AcademicTwitter @aarontay @scholarlykitchn @PaoloCrosetto @ETHBibliothek @OpenAcademics @RetractionWatch
