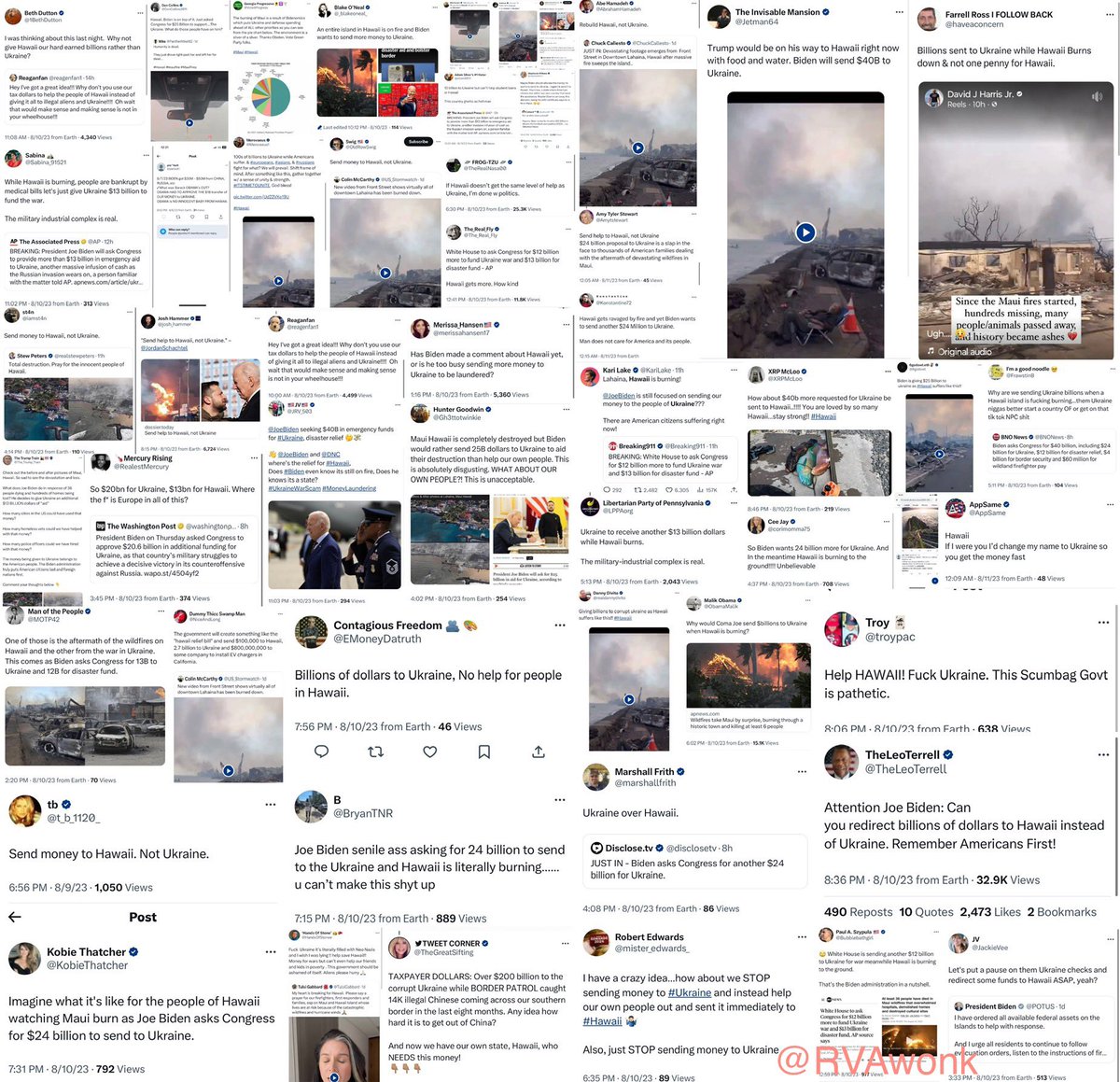

So, the “fund Hawaii, not Ukraine” talking point has many of the characteristics of a coordinated campaign that turned “organic” once the narrative had been seeded. Here are a few examples.

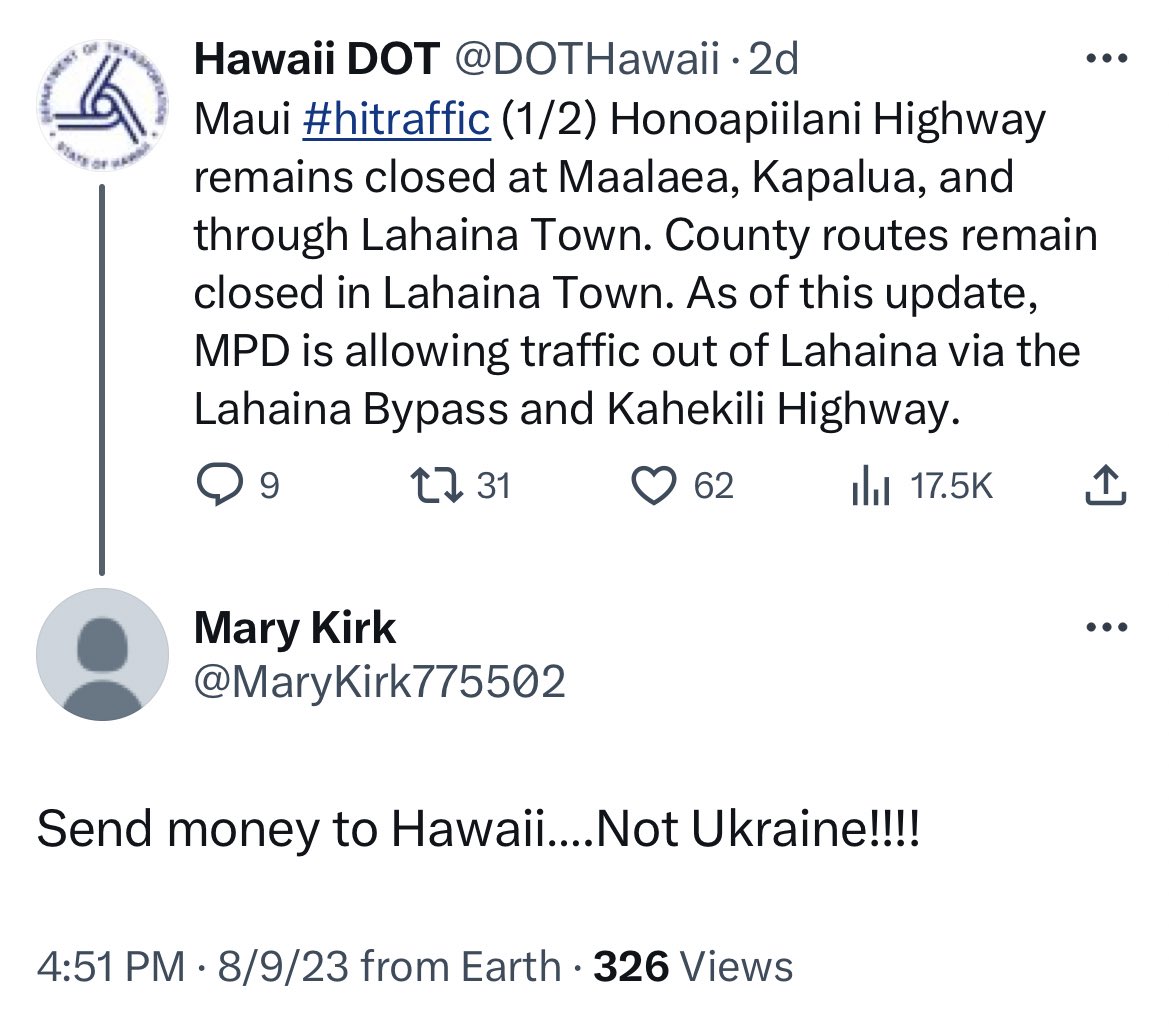

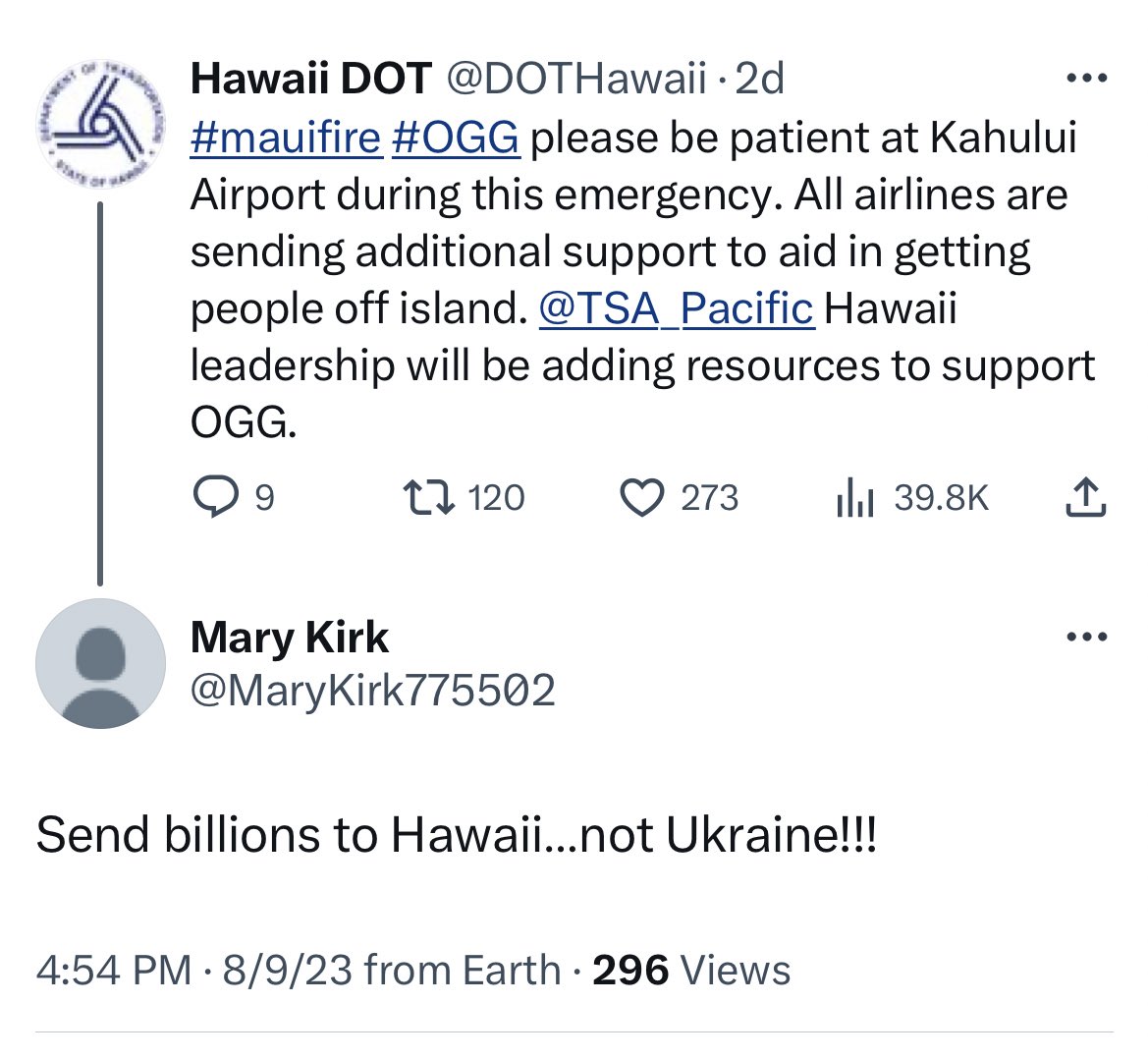

These are the very first two tweets that used the phrase “Hawaii not Ukraine.” They were posted at 4:51 and 4:54 on 8/9, in response to a @DOTHawaii tweet.

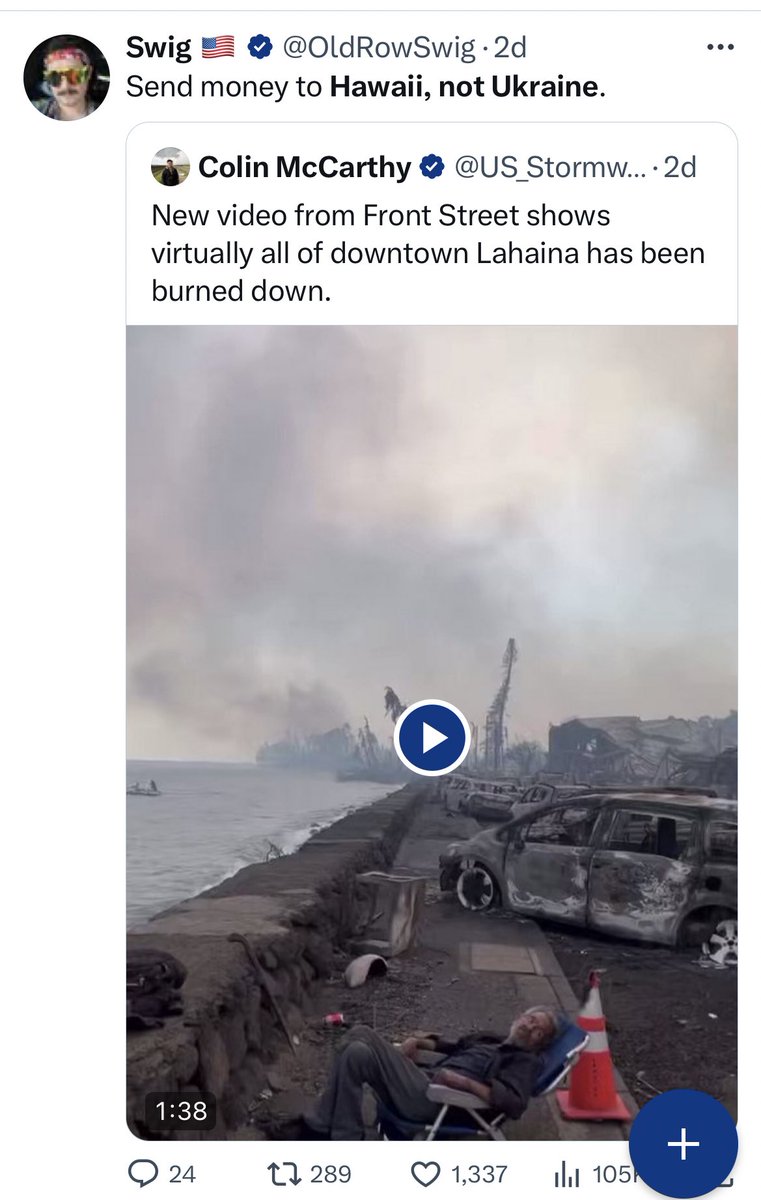

Then this quote-tweet was posted an hour later, at 5:57 pm. Look at the engagement this tweet got compared to the ones sent an hour earlier.

Then this tweet, the 4th to use that specific phrase (“Hawaii, not Ukraine”), was posted an hour later, at 6:52 pm. This was a breakthrough tweet that still ranked at the top of the search results days later.

After that tweet was posted, the talking point started being picked up and tweeted more frequently. These two tweets, again using the same phrase (“Hawaii not Ukraine”), were sent just minutes after that last tweet, and from there it continued to pick up over the next two days.

This is a common format for influence/disinfo campaigns, where a talking point gets seeded by a few key accounts (amplifiers) and then picked up by their networks. A big indicator of coordination here is the timing: after the first two tweets (4:51 and 4:54), the next two landed almost exactly an hour apart (5:57 and 6:52).

The first account that tweeted the phrase “Hawaii not Ukraine” is interesting. It’s a small account and was created in July.

The account’s first tweet, on 7/19, was a 34-second Space that was nothing but background music. The account has tweeted/RT’d 136 times since then.

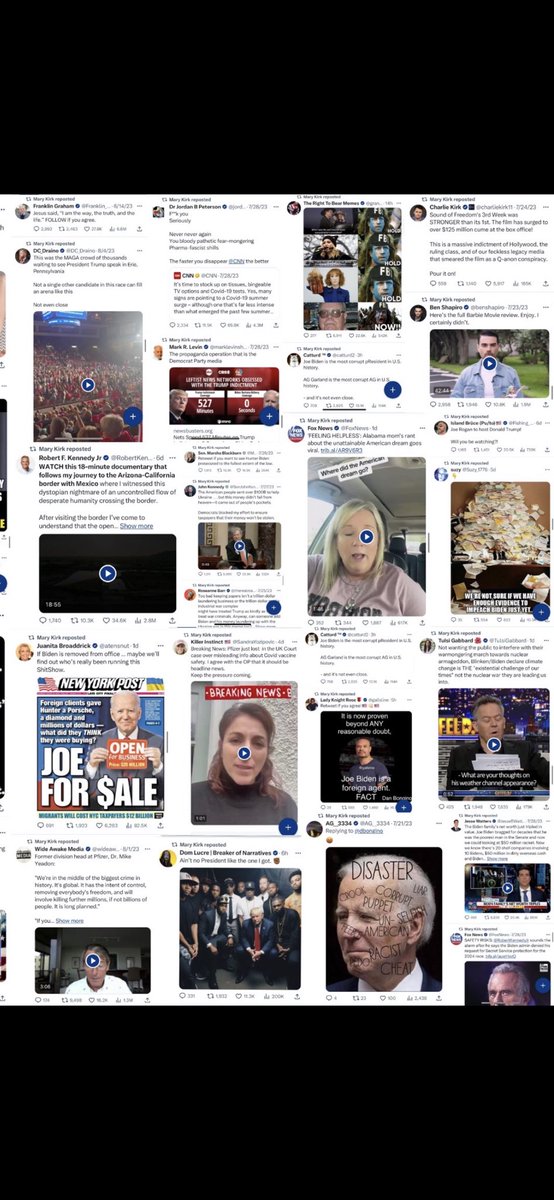

The account’s retweets are interesting, too. They’ve RT’d literally every major right-wing influencer on Twitter, in what is almost certainly a deliberate, strategic process. To me, this suggests that the account is making contact in order to signal to the network.

I’ll write this up with more info, but this was an interesting case study to break down in real time.

It’s also a great reminder/warning that crises & natural disasters are major attack surfaces (for malign state & non-state actors, extremists, etc.) and that we’re unprepared for them.