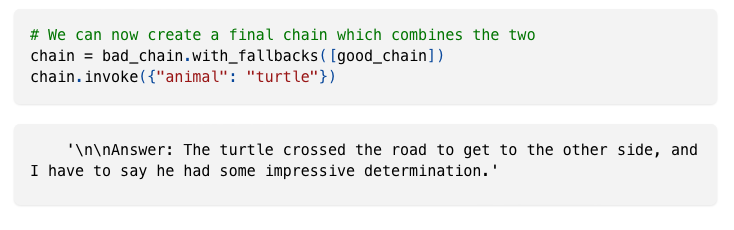

Happy Monday 🌞 Here's what we've added to 🦜🔗 over the weekend:

✅ Pydantic v2 compatibility

🔢 Ernie model embeddings

🚿 Streaming support for text-generation-webui

👆 @SharePoint document loader

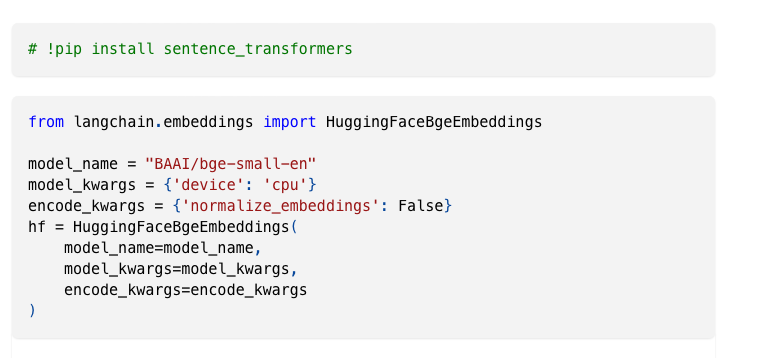

🔢 Ernie model embeddings

You can now use Ernie Embedding-V1, based on Baidu's Wenxin LLM, thanks to GitHub user axiangcoding!

Docs: python.langchain.com/docs/integrati…

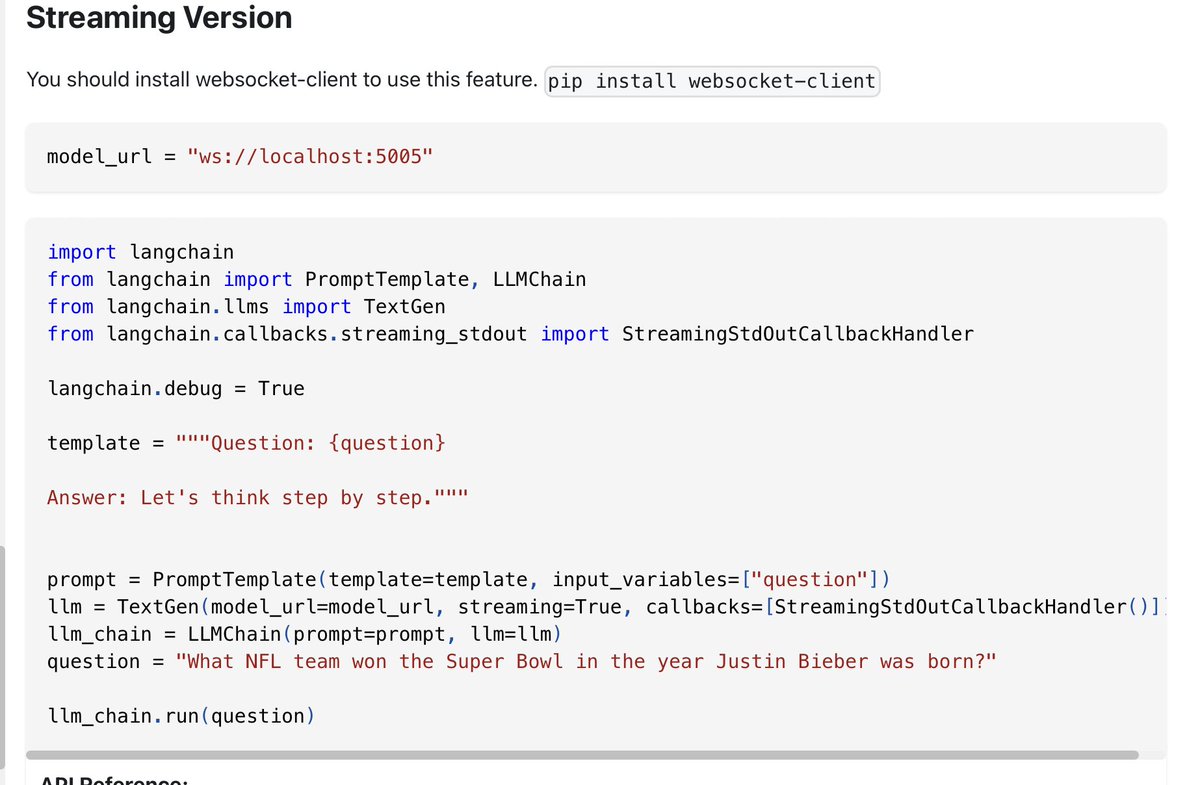

🚿 Streaming support for text-generation-webui

TextGen (text-generation-webui) is a Gradio web UI and API for language models. It now supports streaming model output, thanks to GitHub user uetuluk!

Docs: python.langchain.com/docs/integrati…

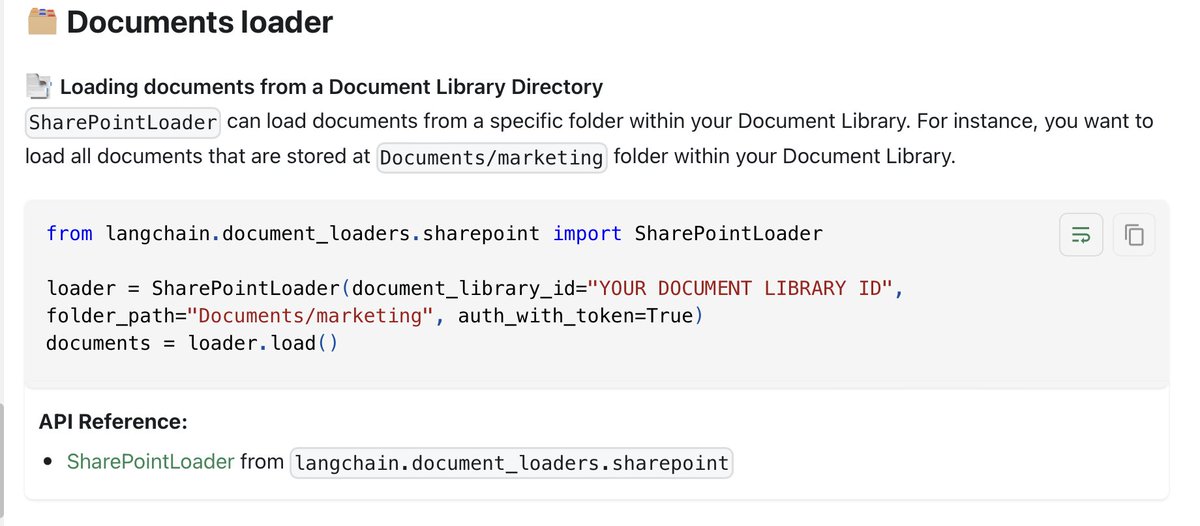

👆 @SharePoint document loader

Microsoft SharePoint makes it easy for teams to share and collaborate on documents.

Thanks to @zeneto, you can now easily load all those documents into your LLM applications 🙌

Docs: python.langchain.com/docs/integrati…

