Are you using @LangChainAI but finding it difficult to debug?

Not anymore, with LangSmith.

It makes tracing each LLM call easy and intuitive.

It's like looking under the hood of your system.

After getting beta access, I explored it over the last week, and below are my 🔑 takeaways:

🧵
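For reference, tracing is switched on through environment variables, not code changes. Here is a minimal sketch, assuming the variable names from LangSmith's docs for LangChain (`LANGCHAIN_TRACING_V2`, `LANGCHAIN_API_KEY`, `LANGCHAIN_PROJECT`). The key and project name are placeholders, and the setup may have changed since the beta.

```python
import os

# Assumption: variable names as documented for LangChain + LangSmith tracing.
os.environ["LANGCHAIN_TRACING_V2"] = "true"                   # turn tracing on
os.environ["LANGCHAIN_API_KEY"] = "<your-langsmith-api-key>"  # placeholder key
os.environ["LANGCHAIN_PROJECT"] = "my-first-project"          # hypothetical project name

# LangChain calls made later in this process are expected to be logged
# to the project named above.
print("Tracing to project:", os.environ["LANGCHAIN_PROJECT"])
```

Set these before your chains run, e.g. at the top of a notebook or in your shell.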

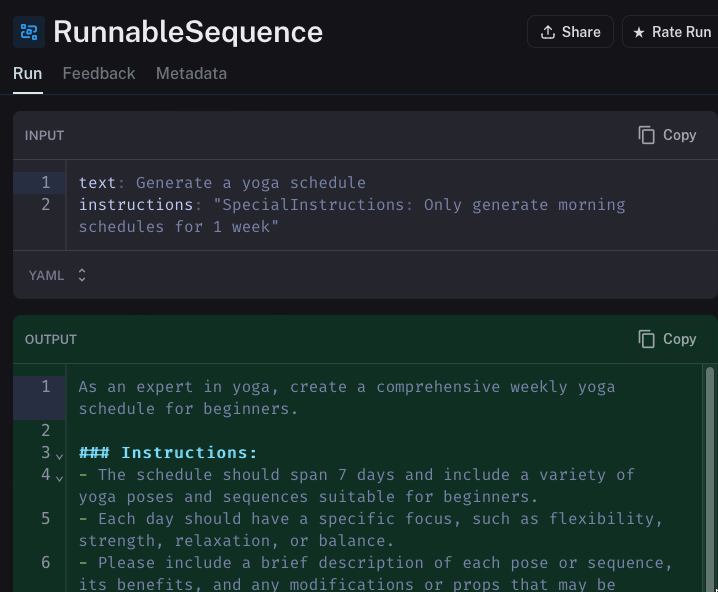

Clear Input / Output:

It gives a clear picture of what goes in and what comes out, from the highest level down to the most granular one, depending on what you want to see.

You can view the input/output of an individual LLM call, or of the whole chain together.

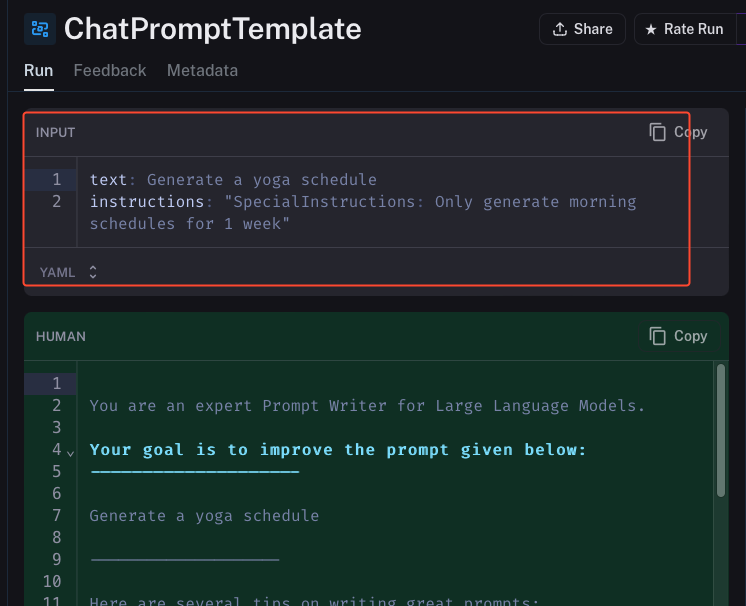

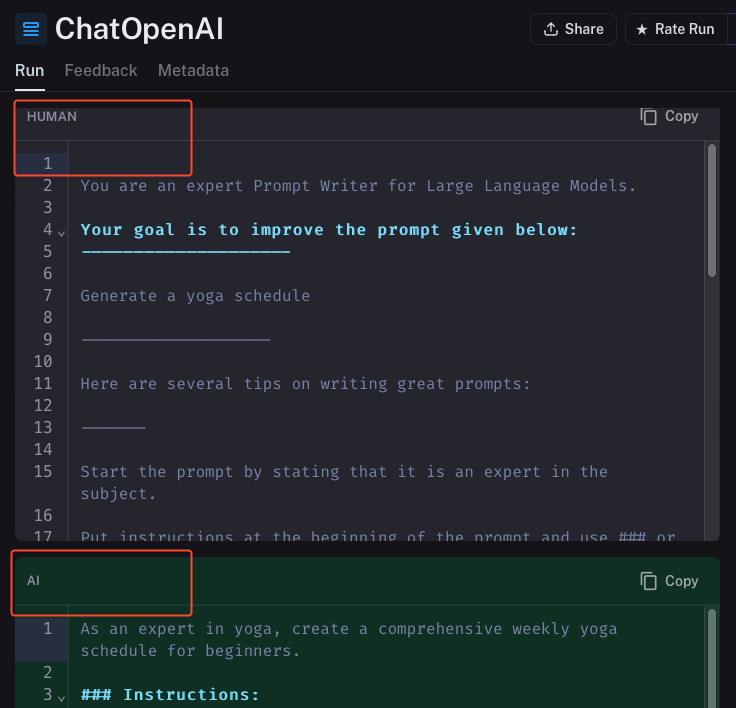

Individual Chain Details:

If you have multiple chains in your definition, it analyzes each of them individually, with details on the input, the output, the prompt used, and any history passed.
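As an illustration of those per-chain details, here is a hypothetical record type and a two-chain run where the second chain receives the first one's output as history. All names here (`ChainRecord`, `run_chain`, `echo_llm`) are made up for the sketch and are not LangChain or LangSmith classes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class ChainRecord:
    """What a per-chain view can show: input, output, prompt, history."""
    chain_name: str
    prompt: str
    inputs: Dict[str, str]
    output: str
    history: List[str] = field(default_factory=list)

def run_chain(name: str, template: str, inputs: Dict[str, str],
              history: List[str], llm: Callable[[str], str]) -> ChainRecord:
    prompt = template.format(**inputs)   # the prompt actually sent
    output = llm(prompt)
    # Copy the history so the record reflects what this chain saw.
    return ChainRecord(name, prompt, inputs, output, list(history))

def echo_llm(prompt: str) -> str:
    # Hypothetical model stand-in so the sketch runs without an API key.
    return f"answer to: {prompt}"

history: List[str] = []
records: List[ChainRecord] = []
for name, template, inputs in [
    ("question_chain", "Ask a question about {topic}", {"topic": "LangSmith"}),
    ("followup_chain", "Follow up on {last}", {"last": "tracing"}),
]:
    record = run_chain(name, template, inputs, history, echo_llm)
    records.append(record)
    history.append(record.output)  # later chains receive earlier outputs

for record in records:
    print(record.chain_name, "| prompt:", record.prompt, "| history:", record.history)
```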

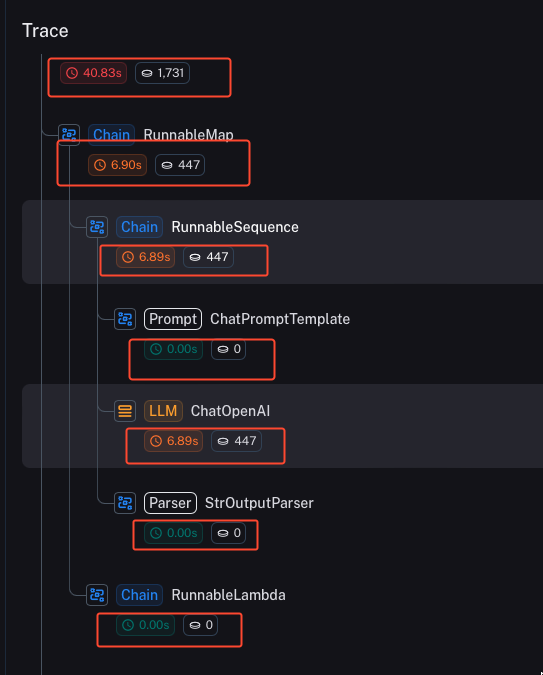

Analytics:

It shows the time spent and tokens used per step, as well as the totals at the top.

This helps us find the bottlenecks in a chain and optimize its performance.

The token count helps in estimating the cost per call.
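Here is a small sketch of the kind of arithmetic those numbers enable: find the slowest step and turn token counts into a cost estimate. The step data is hypothetical, and the per-token prices are placeholders, not real pricing; check your model provider's rates.

```python
from typing import Dict, List

# Hypothetical per-step measurements, shaped like what a trace view reports.
steps: List[Dict] = [
    {"name": "retriever", "latency_s": 0.4, "prompt_tokens": 0, "completion_tokens": 0},
    {"name": "llm_answer", "latency_s": 2.1, "prompt_tokens": 900, "completion_tokens": 150},
    {"name": "llm_summary", "latency_s": 0.9, "prompt_tokens": 300, "completion_tokens": 50},
]

# Placeholder prices in USD per 1,000 tokens (assumption, not real pricing).
PRICE_IN_PER_1K = 0.0015
PRICE_OUT_PER_1K = 0.002

def step_cost(step: Dict) -> float:
    return (step["prompt_tokens"] * PRICE_IN_PER_1K
            + step["completion_tokens"] * PRICE_OUT_PER_1K) / 1000

bottleneck = max(steps, key=lambda s: s["latency_s"])   # slowest step
total_latency = sum(s["latency_s"] for s in steps)
total_tokens = sum(s["prompt_tokens"] + s["completion_tokens"] for s in steps)
total_cost = sum(step_cost(s) for s in steps)

print(f"Bottleneck: {bottleneck['name']} ({bottleneck['latency_s']}s)")
print(f"Total: {total_latency:.1f}s, {total_tokens} tokens, ${total_cost:.4f}")
```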

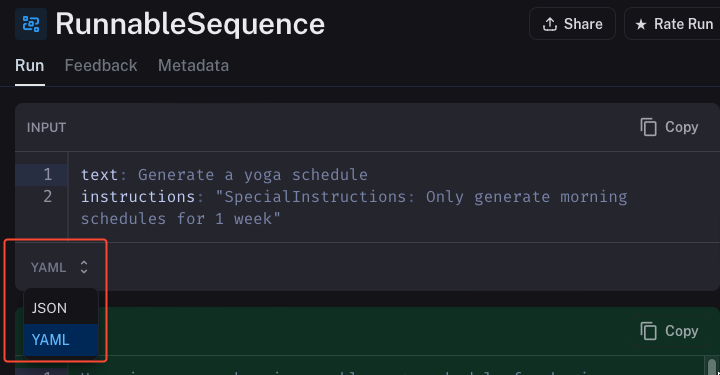

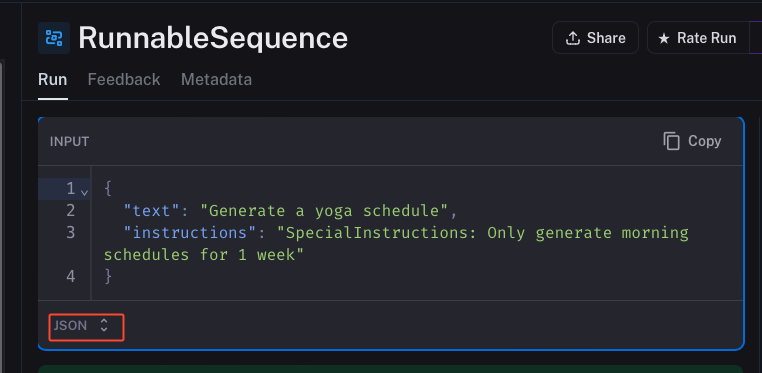

JSON/YAML:

You can toggle the input/output view between JSON and YAML, whichever you find more comfortable to review.

This makes life much easier: review in YAML, then copy/paste the JSON to use in code if needed.
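For the "copy/paste the JSON into code" step, Python's standard `json` module is enough. The pasted inputs below are hypothetical; the point is that the copied JSON becomes a dict you can replay or keep as a test fixture.

```python
import json

# Hypothetical inputs, as they might be copied from a trace's JSON view.
copied = """
{
  "question": "What does LangSmith trace?",
  "chat_history": []
}
"""

inputs = json.loads(copied)   # parse the pasted JSON into a Python dict
print(inputs["question"])

# Pretty-print it back out, e.g. to store as a test fixture.
print(json.dumps(inputs, indent=2))
```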

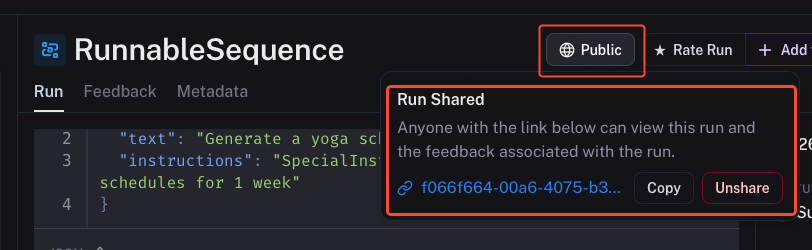

Share:

You can share your trace with anyone using the Share button. It generates a public link that anyone can open to review the trace.

This makes it very easy to collaborate with others and explain to your team what is happening behind the scenes.

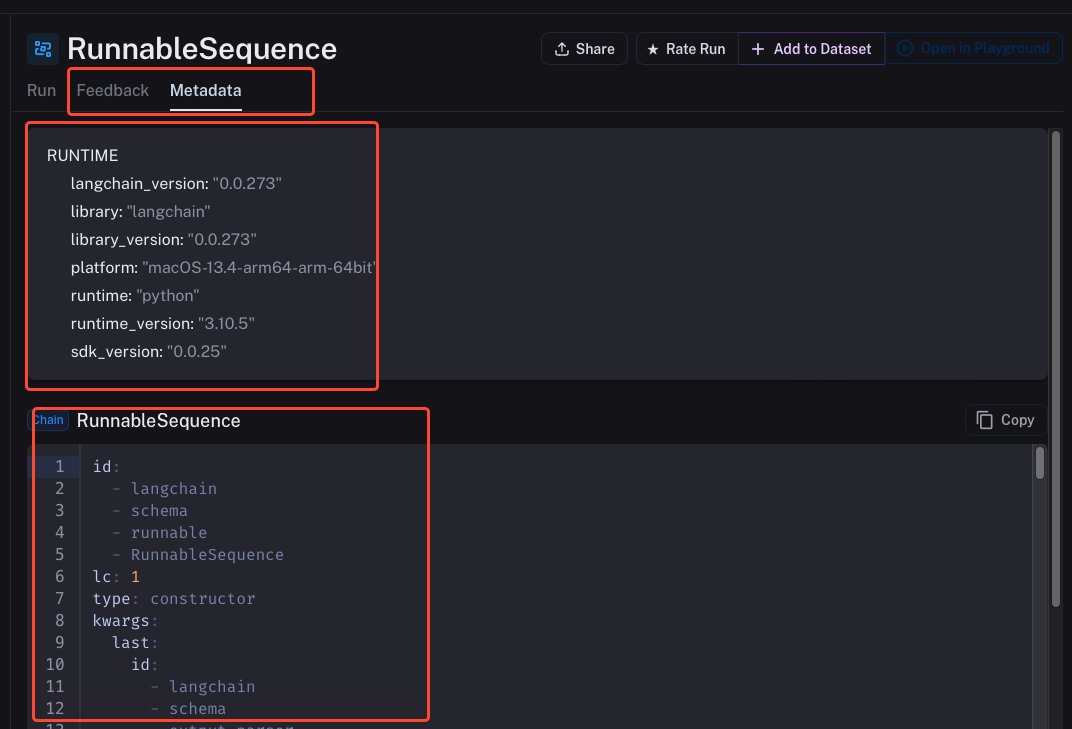

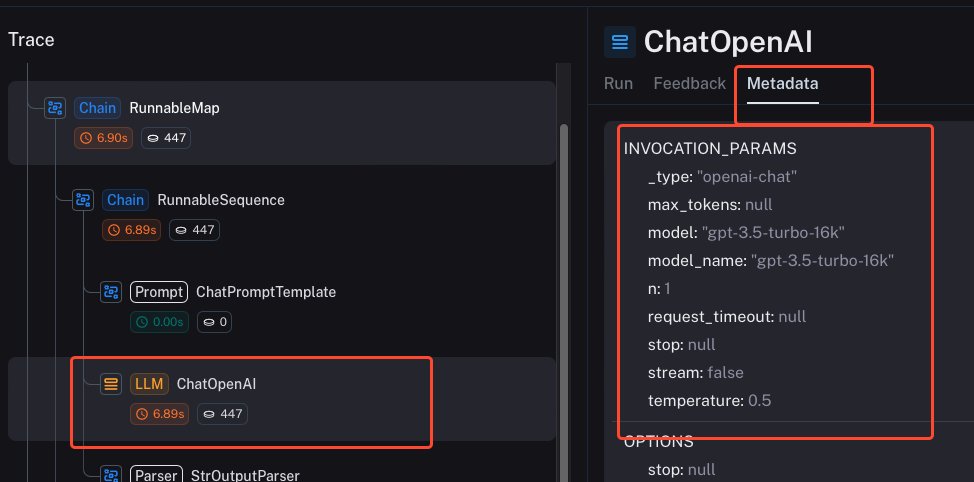

Metadata & Feedback:

It also provides metadata for the whole sequence, for each individual chain, and for each individual LLM call.

Any feedback associated with these elements is shown as well.

Metadata gives you all the information related to each chain, call, or step.
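One way to picture metadata and feedback is as data attached to each run at every level. This is a hypothetical data model for illustration only; the class names, metadata keys and the `"correctness"` feedback key are assumptions, not LangSmith's schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class Feedback:
    key: str       # e.g. "correctness" (hypothetical key name)
    score: float

@dataclass
class TracedRun:
    run_id: str
    level: str     # "sequence", "chain" or "llm"
    metadata: Dict[str, str] = field(default_factory=dict)
    feedback: List[Feedback] = field(default_factory=list)

# Hypothetical runs at each level, each carrying its own metadata.
runs = {
    "r1": TracedRun("r1", "sequence", {"project": "demo"}),
    "r2": TracedRun("r2", "chain", {"chain_type": "qa"}),
    "r3": TracedRun("r3", "llm", {"model": "example-model", "temperature": "0"}),
}

def add_feedback(run_id: str, key: str, score: float) -> None:
    runs[run_id].feedback.append(Feedback(key, score))

def mean_score(run_id: str, key: str) -> Optional[float]:
    # Average all feedback scores for one key; None if there are none.
    scores = [f.score for f in runs[run_id].feedback if f.key == key]
    return sum(scores) / len(scores) if scores else None

add_feedback("r3", "correctness", 1.0)
add_feedback("r3", "correctness", 0.0)
print(runs["r3"].metadata, mean_score("r3", "correctness"))
```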

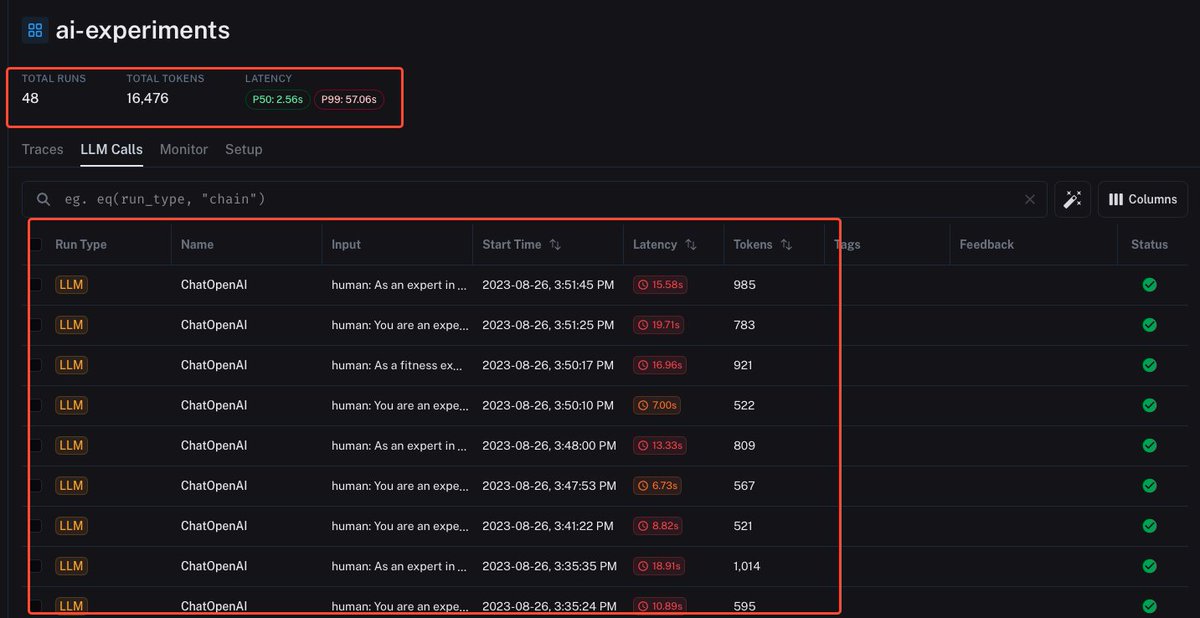

LLM Dashboard:

The Dashboard gives a bird's-eye view of all the calls made in the project: how many calls were made, and how much time and how many tokens they used.

This really helps you keep tabs on the project's cost and the reports associated with it.
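The dashboard numbers are essentially aggregates over every run in a project. Here is a hypothetical sketch of that roll-up. The run log is made up, and the blended per-token price is a placeholder, not real pricing.

```python
from typing import Dict, List, Tuple

# Hypothetical run log: (project, latency in seconds, total tokens).
run_log: List[Tuple[str, float, int]] = [
    ("demo", 1.2, 500),
    ("demo", 0.8, 300),
    ("other", 2.0, 1000),
]

# Placeholder blended price in USD per 1,000 tokens (assumption).
PRICE_PER_1K_TOKENS = 0.002

def project_summary(log: List[Tuple[str, float, int]], project: str) -> Dict[str, float]:
    rows = [row for row in log if row[0] == project]   # runs in this project
    tokens = sum(row[2] for row in rows)
    return {
        "calls": len(rows),
        "total_latency_s": sum(row[1] for row in rows),
        "total_tokens": tokens,
        "est_cost_usd": tokens * PRICE_PER_1K_TOKENS / 1000,
    }

print(project_summary(run_log, "demo"))
```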

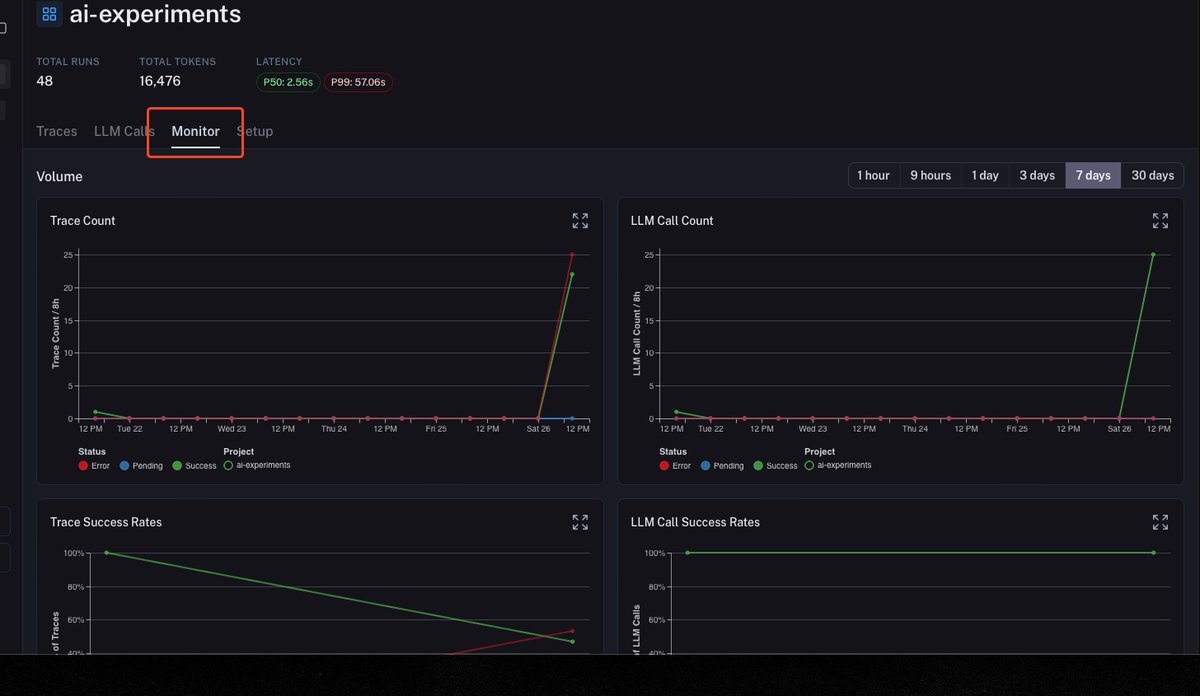

Graphs Dashboard:

Graphs are one of the cool features added to the dashboard.

We all love graphs, and they give a bird's-eye view of everything happening in your project 🙂
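Graphs like "calls over time" boil down to bucketing runs by timestamp. A hypothetical sketch using only the standard library; the timestamps are made up, and the text bars stand in for a real chart.

```python
from collections import Counter
from datetime import datetime
from typing import List, Tuple

# Hypothetical run start times for one project.
starts = [
    "2023-07-10T09:15:00",
    "2023-07-10T17:40:00",
    "2023-07-11T08:05:00",
]

def calls_per_day(timestamps: List[str]) -> List[Tuple[str, int]]:
    # Count runs per calendar day, sorted by date.
    days = Counter(datetime.fromisoformat(t).date().isoformat() for t in timestamps)
    return sorted(days.items())

for day, count in calls_per_day(starts):
    print(day, "#" * count)   # crude text bar chart
```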

If you found this information helpful, follow me @HardKothari for more content like this on AI and Automation.

Show your support by:

- Liking 💕

- Retweeting

- Sharing your thoughts

Thank you! 💬
