How I gained access to 22 unauthorized endpoints across 116 websites (260k endpoints) in about 10 minutes. Use what you're comfy with.

👇

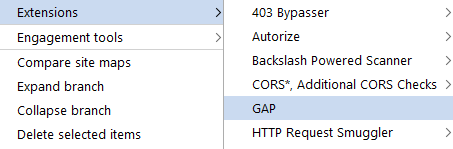

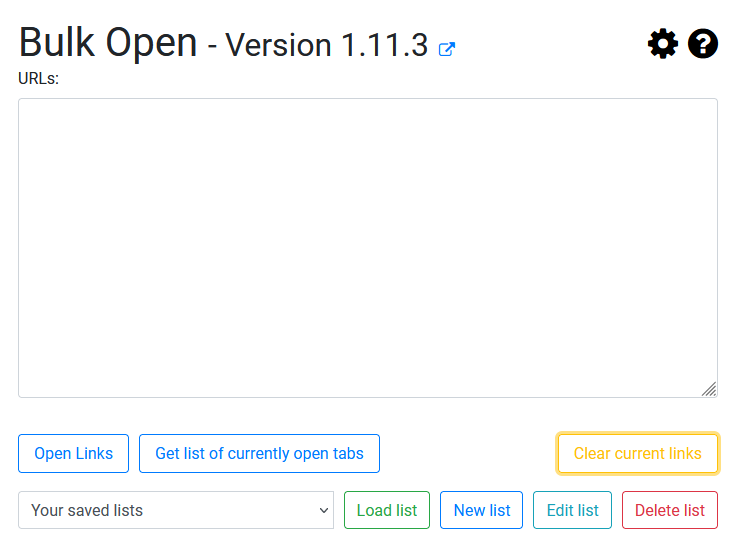

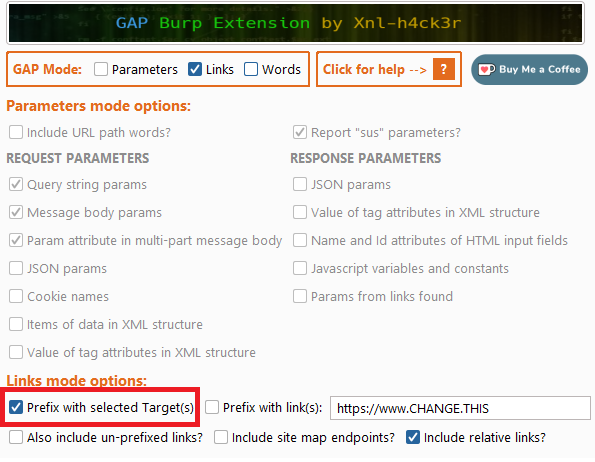

Once I have my list of sites, I load them into my Burp Suite site map by opening them in Firefox through Burp. There are many ways to do this. If it's not thousands of sites, I use the Bulk Open extension, but there are others.

I had Burp crawl a bit for me. You can be as thorough as you want in this phase; for the sake of the demo, just let Burp do its thing.

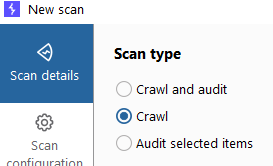

Once that's finished, open up GAP (github.com/xnl-h4ck3r/GAP…). Make sure you set "Prefix with selected Targets".

Once GAP finishes, copy out your links and save them to the file you will run FFUF against. In my case, I filtered for "/api", "/admin" and "/user", since those are usually juicy.
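If you paste GAP's links into a file first, the filtering can be scripted. A minimal sketch, assuming one URL per line on stdin; `filter_juicy` and `gap_links.txt` are hypothetical names. It also drops anything that isn't an absolute URL, since `-u "FUZZ"` makes FFUF use each wordlist line as the whole URL (which is why "Prefix with selected Targets" matters).

```shell
# Hypothetical helper: keep absolute http(s) URLs that contain /api, /admin
# or /user anywhere in them (case-sensitive substring match, so /users and
# /administrator also match), then sort and de-duplicate.
filter_juicy() {
  grep -E '^https?://' | grep -E '/(api|admin|user)' | LC_ALL=C sort -u
}
```

Usage: `filter_juicy < gap_links.txt > GAPoutput.txt`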

We want to run FFUF against all of the URLs that GAP output for you, and make sure you save the full request/response.

Command:

```shell
ffuf -w "GAPoutput.txt" -u "FUZZ" -noninteractive -o "/tmp/results.json" -od "/tmp/bodies/" -of json
```

Then we feed this FFUF data to ffufPostprocessing (github.com/Damian89/ffufP…). It does a decent job of cleaning up the 260k+ endpoints.

Command:

```shell
ffufPostprocessing -result-file "/tmp/results.json" -bodies-folder "/tmp/bodies/" -delete-bodies -overwrite-result-file
```

Finally, have httpx request all of the remaining results and keep the endpoints whose responses have more than 60 lines and a 200, 301 or 302 status code.

```shell
jq -r '.results[].url' "/tmp/results.json" | httpx -title -sc -lc -nc -silent | sed 's/[][]//g' | awk '$NF > 60' | grep -E ' 200| 301| 302'
```
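A stricter variant of the awk/grep stage, as a sketch: matching ` 200` anywhere on the line can also hit a line count like 2004 or a word in the title, so this compares the status and line-count fields directly. It assumes each input line looks like `URL [STATUS] [LINES]`, i.e. httpx run with `-sc -lc` but without `-title`; that field order is an assumption, so check your httpx version's output before relying on it. `keep_interesting` is a hypothetical name.

```shell
# Hypothetical helper: print URLs whose status is 200/301/302 and whose
# line count is greater than the threshold (first argument, default 60).
# Assumed input format per line: URL [STATUS] [LINES]
keep_interesting() {
  min_lines="${1:-60}"
  awk -v min="$min_lines" '
    {
      gsub(/\[|\]/, "")   # strip the brackets; awk re-splits the fields
      status = $2
      lines = $3 + 0
      if ((status == "200" || status == "301" || status == "302") && lines > min + 0)
        print $1
    }'
}
```

Usage: `jq -r '.results[].url' "/tmp/results.json" | httpx -sc -lc -nc -silent | keep_interesting 60`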

Once this finished, I had about 50 endpoints left, which I visited manually. That whittled 260k endpoints down to about 50 with interesting information in them, and 22 of those were sensitive in nature.

Added bonus: check them for leaked tokens/API keys.

Command:

```shell
jq -r '.results[].url' "/tmp/results.json" | nuclei -tags tokens
```
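For a quick offline cross-check alongside nuclei, a few well-known key formats can be grepped for directly. This is a swapped-in and much cruder technique than nuclei's templates, covering only three shapes: AWS access key IDs (`AKIA` + 16 uppercase letters/digits), GitHub classic personal access tokens (`ghp_` + 36 characters) and Google API keys (`AIza` + 35 characters). `find_tokens` is a hypothetical name; it reads response text on stdin.

```shell
# Hypothetical helper: print unique substrings shaped like a few well-known
# API key formats. Plain regex matching, so expect false positives/negatives.
find_tokens() {
  grep -oE 'AKIA[0-9A-Z]{16}|ghp_[A-Za-z0-9]{36}|AIza[0-9A-Za-z_-]{35}' | LC_ALL=C sort -u
}
```

Usage: `jq -r '.results[].url' "/tmp/results.json" | while read -r u; do curl -s "$u" | find_tokens; done`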
