The Digital Services Act is a transparency machine. Every 6 months, platforms have to submit a report describing their content moderation activities in the EU. The first reports are in and there’s a wealth of information in there. Thread

First up, X/Twitter. In terms of total numbers we see that account suspensions are by far the most used measure (2 mil), followed by restricting reach (90k) and removing content (54k). transparency.twitter.com/dsa-transparen…

Fascinating chart detailing why accounts were suspended. If you take away violations of Twitter's policy on spam and platform manipulation, not a great deal seems to be happening. help.twitter.com/en/rules-and-p…

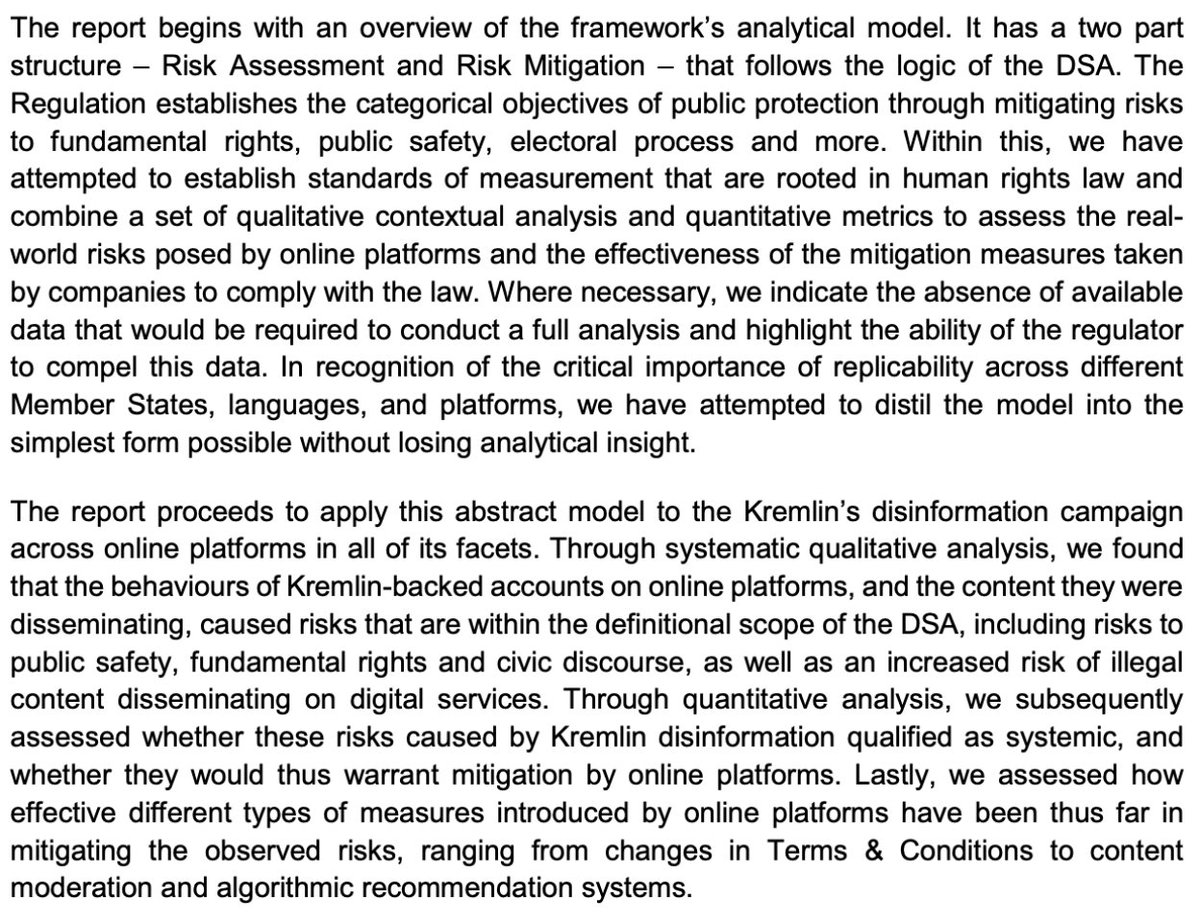

Linguistic expertise of Twitter's EU content moderation team. Will this change in light of the 2024 elections in Finland, Lithuania, Moldova, Romania and Slovakia?

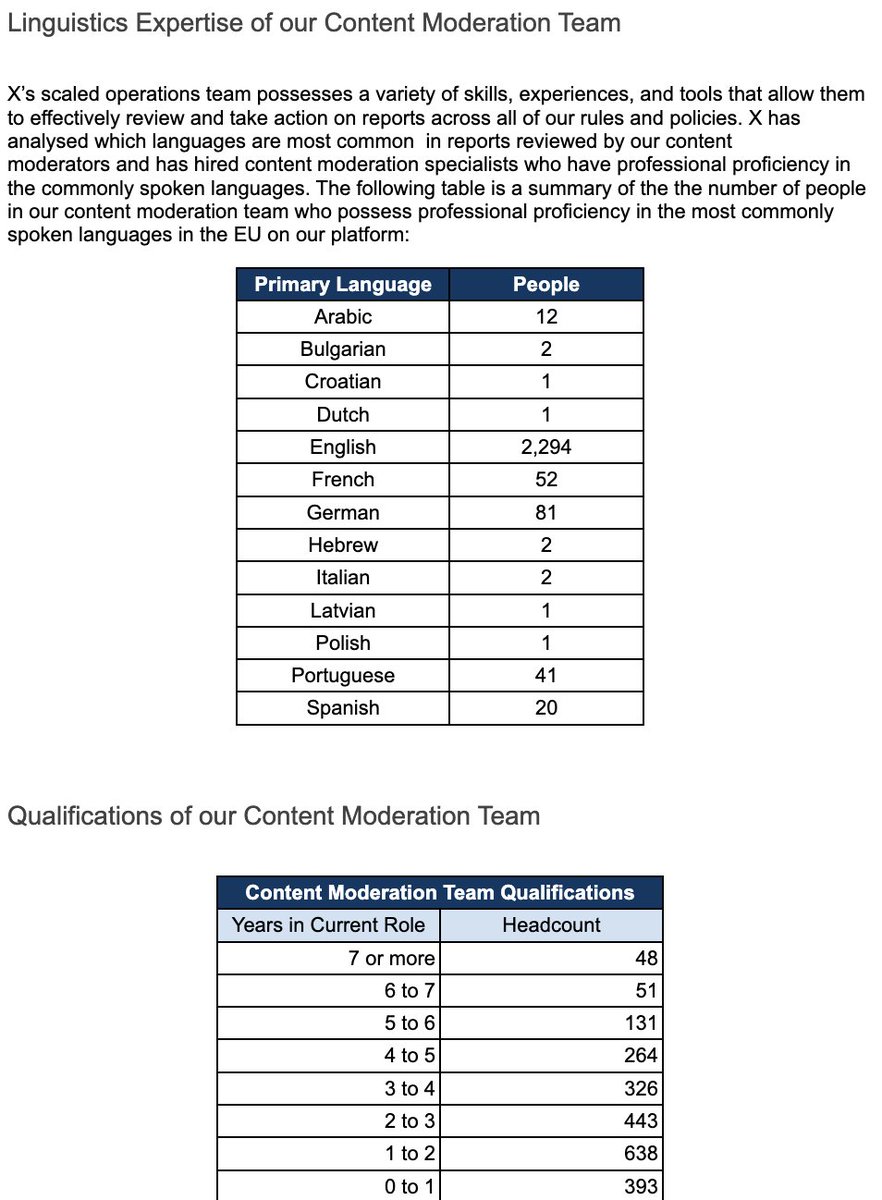

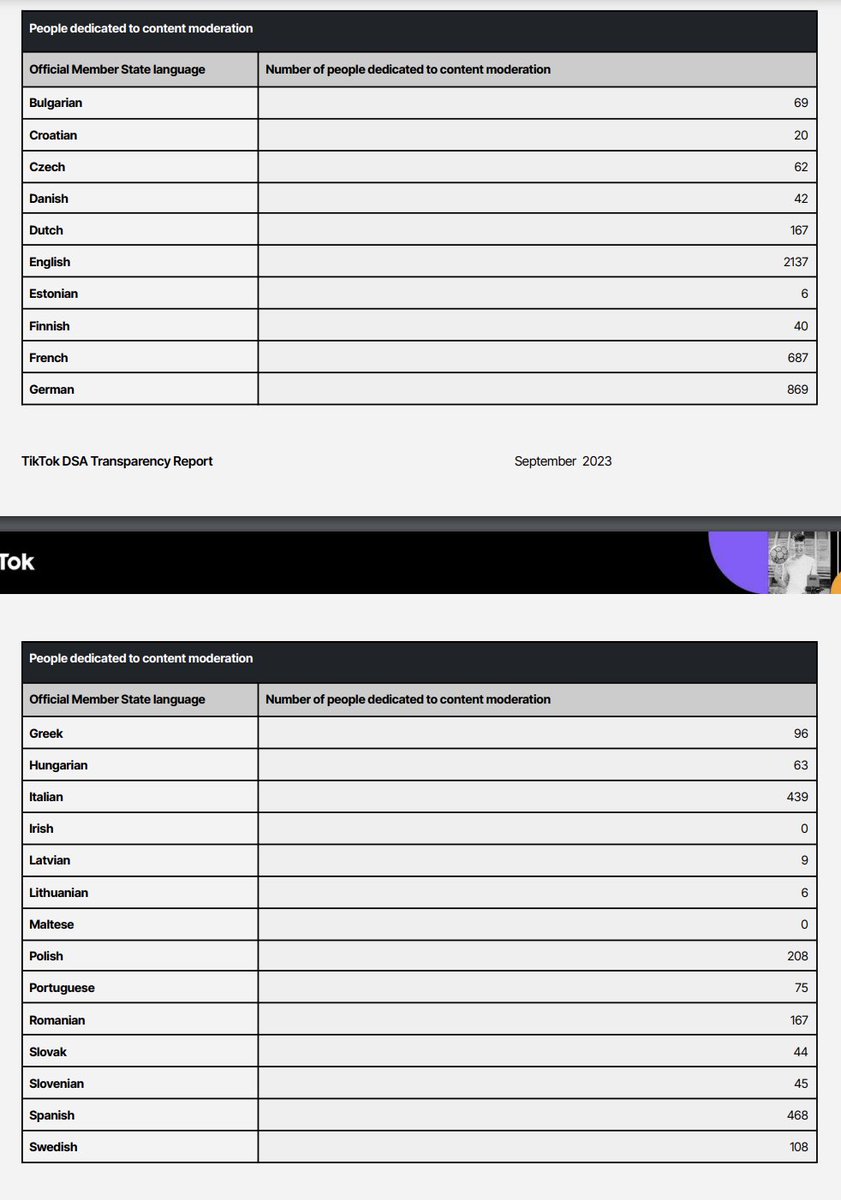

Up next: TikTok (definitely a better layout 🤫). Unfortunately, TikTok doesn't give the same type of breakdown per Member State. sf16-va.tiktokcdn.com/obj/eden-va2/f…

It definitely does have more human moderators (6k) than X. Moderation of content in Irish and Maltese is, just like with X, almost non-existent.

The number of information requests is roughly 25% of what X is getting (452 compared to Twitter's 1,728). TikTok, please provide the aggregated figures!
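Quick sanity check on that ratio, as a minimal Python sketch. The helper name `share_pct` is made up for illustration and isn't from either report; the two counts are simply the ones quoted above.

```python
def share_pct(part: int, whole: int) -> float:
    """Return `part` as a percentage of `whole`."""
    if whole == 0:
        raise ValueError("whole must be non-zero")
    return 100 * part / whole


# Information requests as quoted in this thread (not re-checked against the reports).
tiktok_requests = 452
x_requests = 1728

print(f"TikTok receives {share_pct(tiktok_requests, x_requests):.1f}% of X's volume")
```

That comes out a little above 26%, so "roughly 25%" holds up.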

TikTok's average number of ‘monthly active recipients’ in the EU, broken down per Member State, during the period 1 April 2023 to 30 September 2023, rounded to the nearest hundred thousand: a total of 135.9 million.

Next: Snapchat. Starts off by detailing its 102 million monthly active users. values.snap.com/privacy/transp…

Snap also just gives links to its previous global transparency reports. Again, not what's being asked, Snap. In general, Snap doesn't seem to be very far along with DSA implementation; see also the lack of implementation of article 40.13, for instance.

As of 25 August 2023, LinkedIn had approximately 820 content moderators globally and 180 content moderators located in the EU. Why mention you have 0 moderators in Czech or Danish, but not mention you have 0 in Slovak or Lithuanian?

LinkedIn permanently suspended only 2,047 accounts and received a grand total of 0 requests from governments to remove content. It also receives significantly fewer information requests from governments.

Time for the first search engine: Bing! 119 million average monthly users in the EU. "This information was compiled pursuant to the Digital Services Act and thus may differ from other user metrics published by Bing". Hm query.prod.cms.rt.microsoft.com/cms/api/am/bin…

This is an otherwise v short report compared to the others. Bing received 0 orders from EU Gvts to remove content. One interesting nugget is that Bing took voluntary actions to detect, block, and report 35,633 items of suspected CSAM content provided by recipients of the service.

Pinterest! Pinterest had 124 million monthly active users (MAU) in Europe. For some reason Pinterest was only able to collect data for one month (as opposed to two for the others). policy.pinterest.com/en/digital-ser…
