Currently reading Google's DSA transparency report. Some (unorganised) first impressions below:

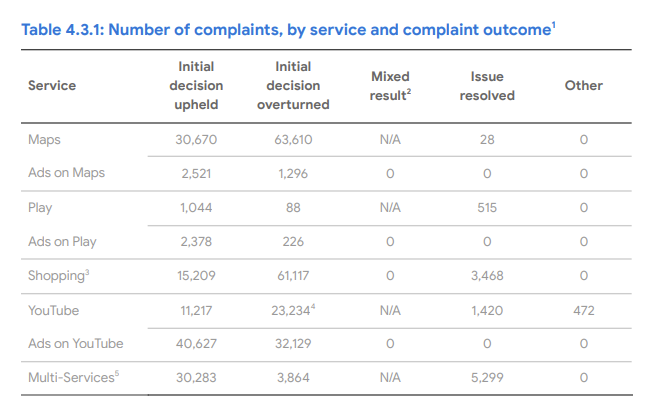

First, the internal appeals process seems to be successful. For starters, it's actually being used by thousands of users (DMCA counternotice *this is not*), and over two thirds are successful!

I wonder if we'll see those numbers rise as users familiarise themselves with this new process...

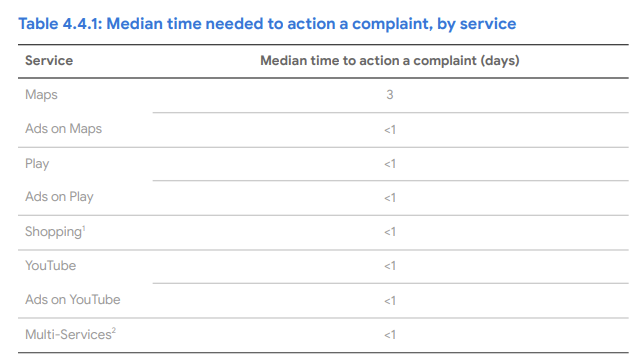

What's more - appeals are fast. The median time to resolve appeals is <1 day on most services.

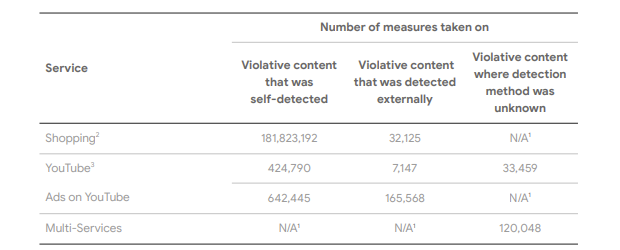

Uptake of the abuse provision seems...modest, if not entirely trivial. I wonder how this compares to other platforms? And what's the delta compared to self-regulation?

On YouTube, it's surprising to me that we see more actions against ads than against user content. What's going on here?

The overall volume of ads must be smaller, but the standards are stricter.

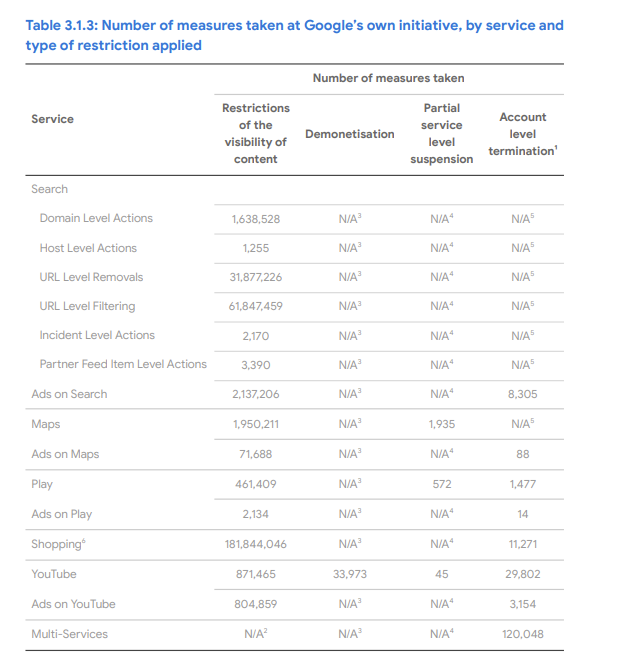

Measures: visibility restrictions *far* outstrip conventional account suspension. This isn't surprising, but it's interesting to see it confirmed and quantified in such stark numbers.

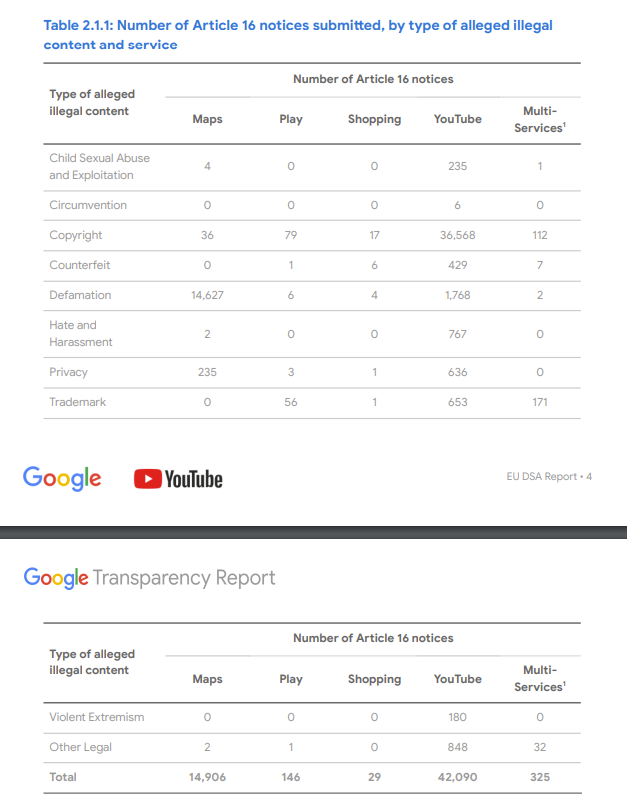

On third-party notices: the bulk is copyright on YouTube and defamation on Maps (presumably: corporate libel of some sort?).

An important clarification for those who associate the DSA with more political & cultural themes.

*Minor correction: I meant to write that *about* two thirds of appeals are successful, not *over*. Haven't run the numbers yet 👼
