I proved a super simple helper lemma.

In English the proof is 1 line.

In lean4, it took me around 50 lines of code.

1/n

In English the proof is 1 line.

In lean4, it took me around 50 lines of code.

1/n

The trickiest things:

1. Can't take basic math -- e.g., commutativity, automatic casting of naturals as reals -- for granted.

2. Tactics feel simultaneously powerful and weak. E.g., sometimes 'linarith' easily proves inequalities, sometimes not.

3. GPT4 is not v helpful

3/n

1. Can't take basic math -- e.g., commutativity, automatic casting of naturals as reals -- for granted.

2. Tactics feel simultaneously powerful and weak. E.g., sometimes 'linarith' easily proves inequalities, sometimes not.

3. GPT4 is not v helpful

3/n

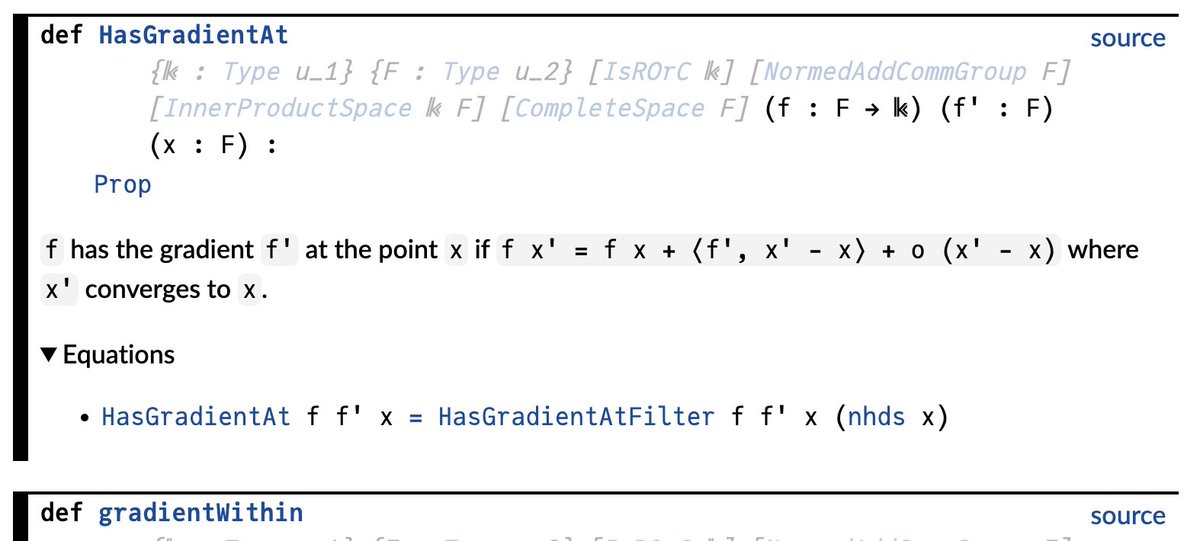

Next up for me is figuring out support for convexity, differentiability, and the fundamental theorem of calculus. I'm also about 7 chapters in to the lean4 tutorial. I'll continue working through that in parallel.

I'm posting my code to a github repo, which i'll link below.

4/n

I'm posting my code to a github repo, which i'll link below.

4/n

Whoops forgot an assumption in the theorem statement above: The sequence a_k should be nonincreasing.

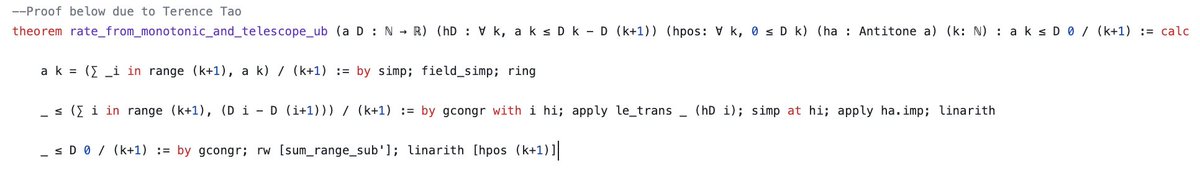

A ~30 line streamlined proof closer to my envisioned 1 line proof in English.

6/n

6/n

https://twitter.com/ericwieser/status/1731084883391344648

Terence Tao kindly sent me the following 3 line lean proof, which is exactly the proof I wanted to write!

Terry’s blog on writing the proof.

https://twitter.com/damekdavis/status/1732369224427807030

• • •

Missing some Tweet in this thread? You can try to

force a refresh