Google just revealed Gemini and will directly integrate the AI into Google apps.

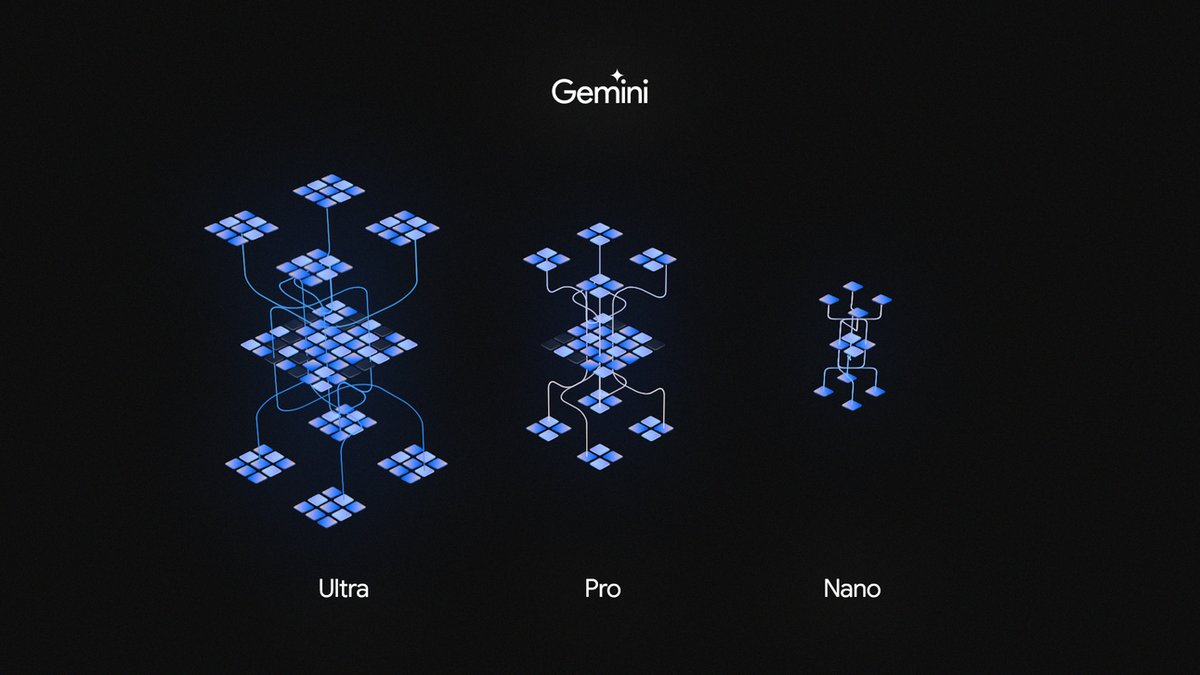

The GPT-4 competitor comes in 3 models — Ultra, Pro, and Nano.

Here's a thread of EVERYTHING you need to know:

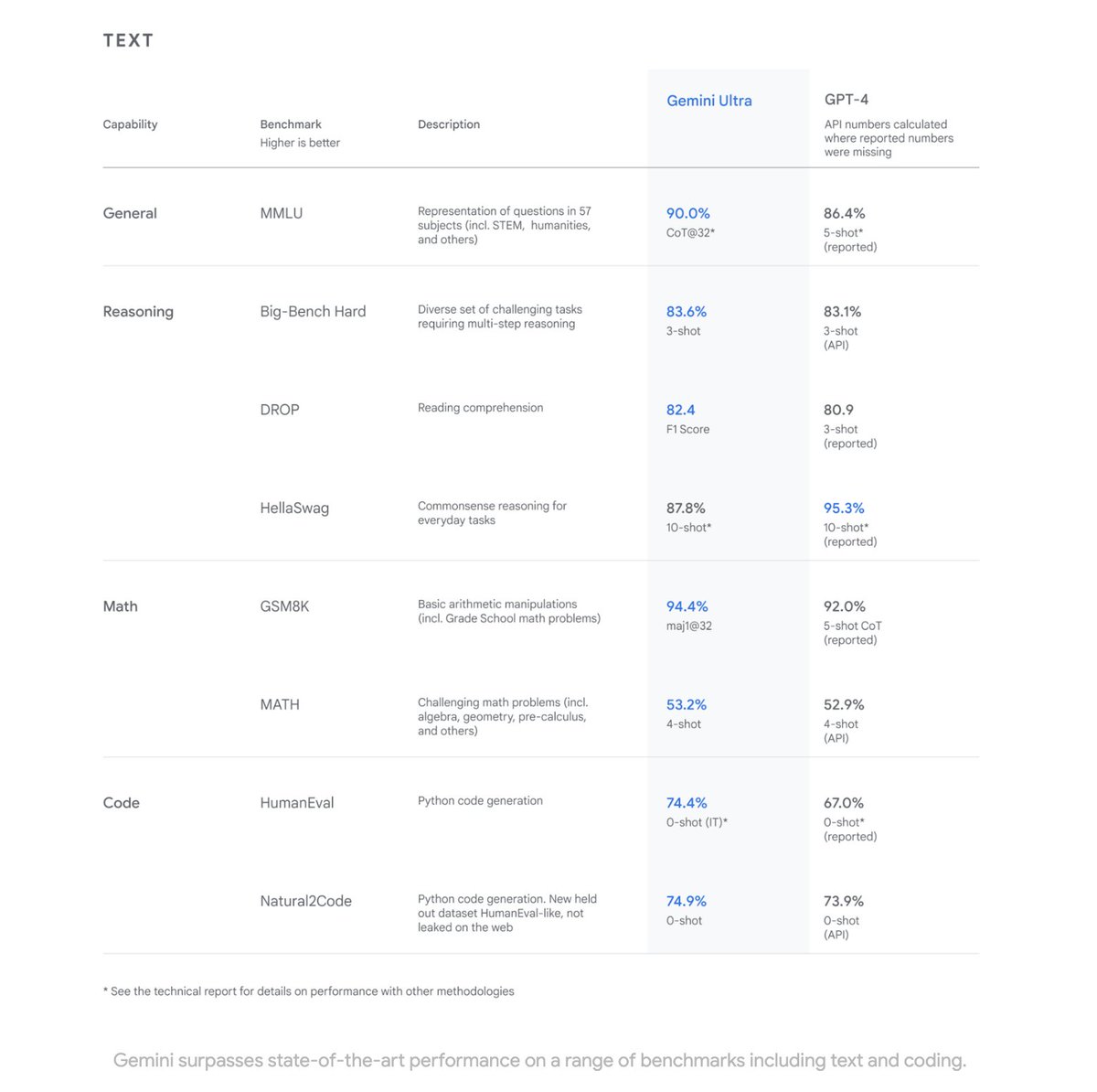

Gemini is multimodal and can recognize images and speak in real-time.

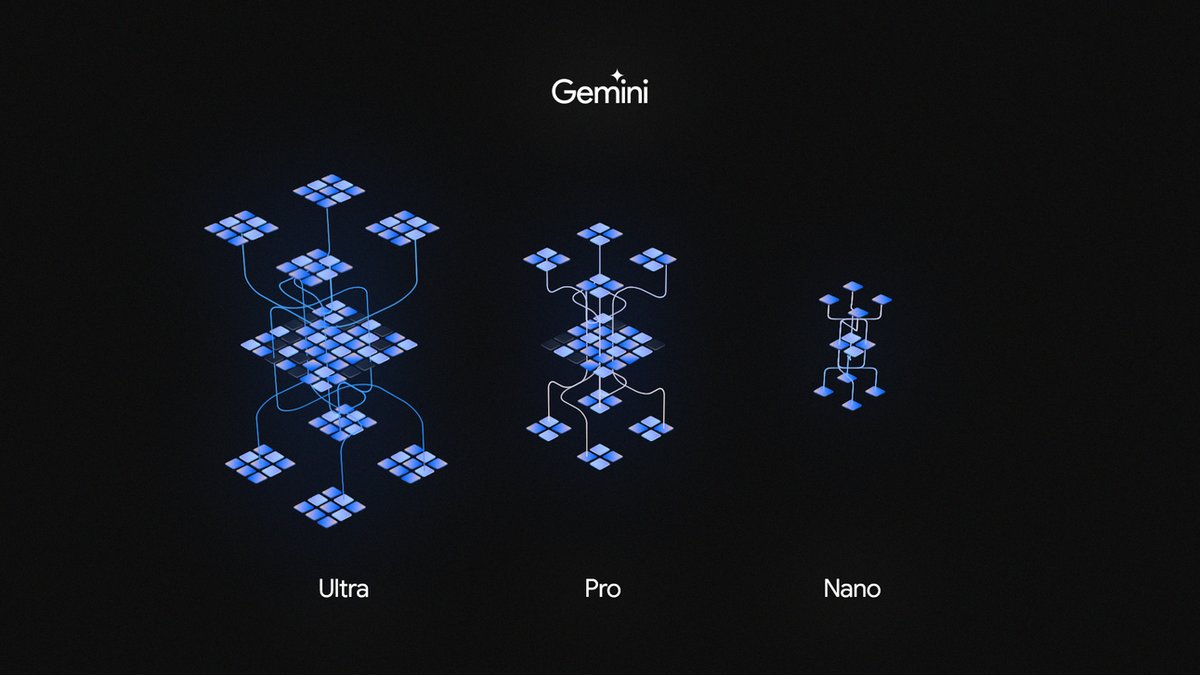

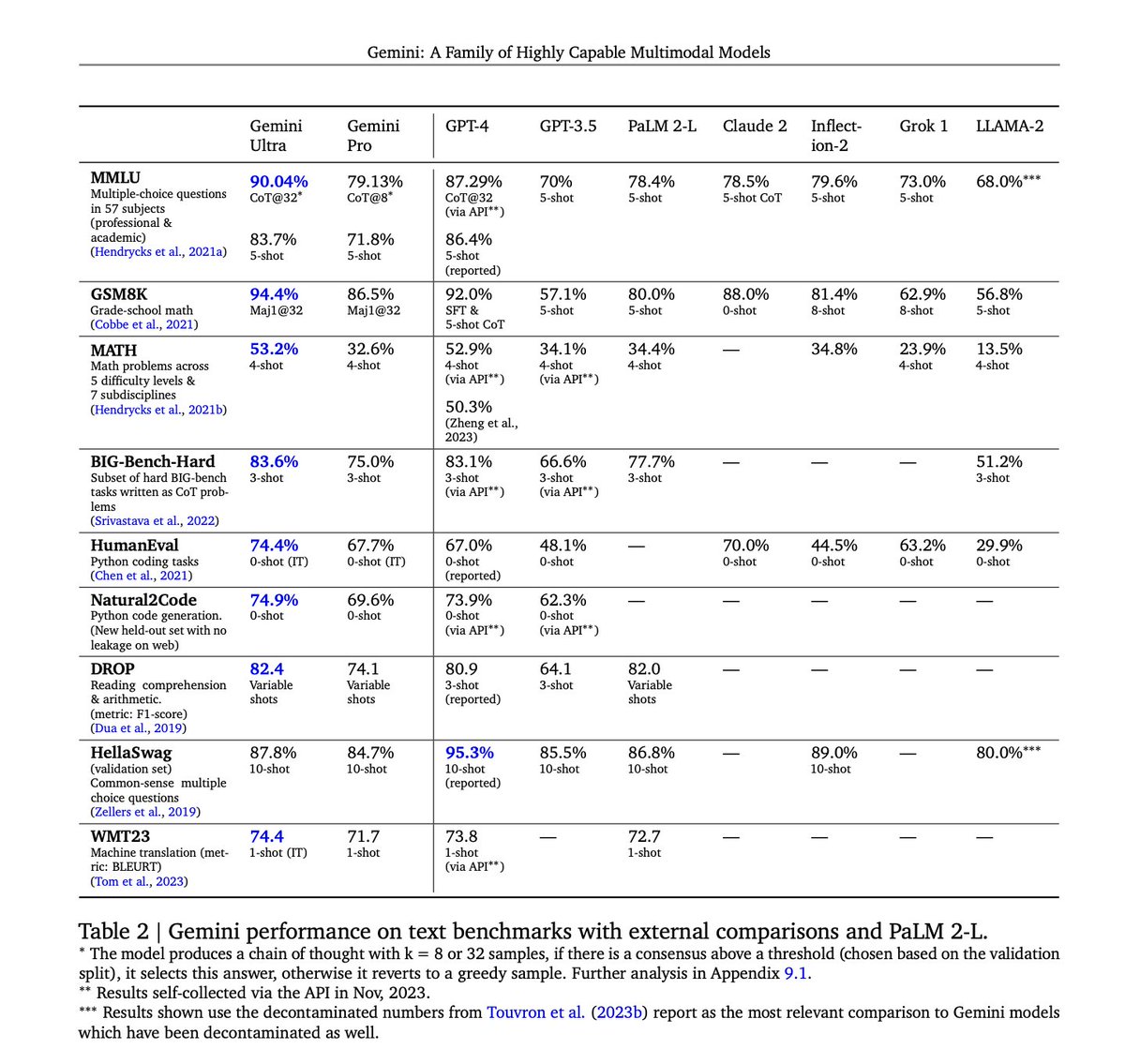

With a score of 90%, Gemini Ultra is the FIRST AI model to outperform human experts on the MMLU benchmark.

This demo is incredible.

Gemini has next-generation capabilities such as sophisticated reasoning, multimodality, and advanced coding.

The model is also advanced in math and coding compared with ChatGPT (GPT-4), which often struggles with math.

Check out this demo of it solving physics problems.

Gemini has an incredible understanding of science.

It can find and extract information from thousands of research papers.

Because Gemini is multimodal, it can not only understand text but also graphs through images!

Gemini comes in three sizes — Ultra for complex tasks, Pro for scaling across a range of tasks, and Nano for efficient on-device tasks.

-Pro will be in Google products through Bard starting today.

-Ultra will be rolling out early next year.

-Nano will be available on Pixel.

Gemini Ultra’s performance beats current state-of-the-art results in 30 of 32 benchmarks used in LLM research & development.

Gemini Pro will be available for free in Bard and across Google apps today.

In six out of eight benchmarks, Gemini Pro outperformed GPT-3.5, making it 'the most powerful free chatbot on the market today'.

Gemini Nano now powers on-device generative AI features for Pixel 8 Pro.

New features include:

-Summarize in Recorder

-Smart Reply in Gboard

-Cutting-edge video

-Enhanced photography and image editing

I shared all the info on Gemini in my newsletter this morning.

Click here to join 400k+ readers, and you'll never miss a thing in AI ever again: therundown.ai/subscribe

Thanks to @GoogleDeepMind for the invitation to the early press conference, allowing me to share the news live.

I do these rundowns daily. Follow me @rowancheung for more.

If you found this helpful, spare me a like/retweet to support my content 👇

https://x.com/rowancheung/status/1732416454497300701?s=20
