The racism behind chatGPT that we aren't talking about...

This year, I learned that students use chatGPT because they believe it helps them sound more respectable. And I learned that it absolutely does not work. A thread. 🧵

A few weeks ago, I was working on a paper with one of my RAs. I have permission from them to share this story. They had done the research and the draft. I was to come in and make minor edits, clarify the method, add some background literature, and we would refine the discussion.

The draft was incomprehensible. Whole paragraphs were vague, repetitive, and bewildering. It was like listening to a politician. I could not edit it. I had to rewrite nearly every section. We were on a tight deadline, and I was struggling to articulate what was wrong ...

so I sent them on to further sections while I cleaned up ... this.

As I edited, I had to keep my mind from wandering. I had written with this student before, and this was not normal. I usually made light edits for phrasing, and occasionally did some major restructuring.

I was worried about my student. They had been going through some complicated domestic issues. They were disabled. They'd had a prior head injury. They had done excellently on their prelims, which of course I couldn't edit for them. What was going on!?

We were co-writing the day before the deadline. I could tell they were struggling with how much I had to rewrite. I tried to be encouraging and remind them that this was their research project and they had done all of the interviews and analysis. And they were doing great.

In fact, the qualitative write-up they had done the night before was better, and I was back to just adjusting minor grammar and structure. I complimented their new work and noted it was different from the other parts of the draft that I had struggled to edit.

Quietly, they asked, "Is it okay to use chatGPT to fix sentences to make you sound more white?"

"... is... is that what you did with the earlier draft?"

They had, a few sentences at a time, completely ruined their own work, and they couldn't tell, because they believed that the chatGPT output had to be better writing. Because it sounded smarter. It sounded fluent. It seemed fluent. But it was nonsense!

I nearly cried with relief. I told them I had been so worried. I was going to check in with them when we were done, because I could not figure out what was wrong. I showed them the clear differences between their raw drafting and their "corrected" draft.

I told them that I believed in them. They do great work. When I asked them why they felt they had to do that, they told me that another faculty member had told the class that they should use it to make their papers better, and that he and his RAs were doing it.

The student also told me that in therapy, their therapist had been misunderstanding them, blaming them, and denying that these misunderstandings were because of a language barrier.

They felt that they were so bad at communicating, because of their language, and their culture, and their head injury, that they would never be a good scholar. They thought they had to use chatGPT to make them sound like an American, or they would never get a job.

They also told me that when they used chatGPT to help them write emails, they got more responses, which helped them with research recruitment.

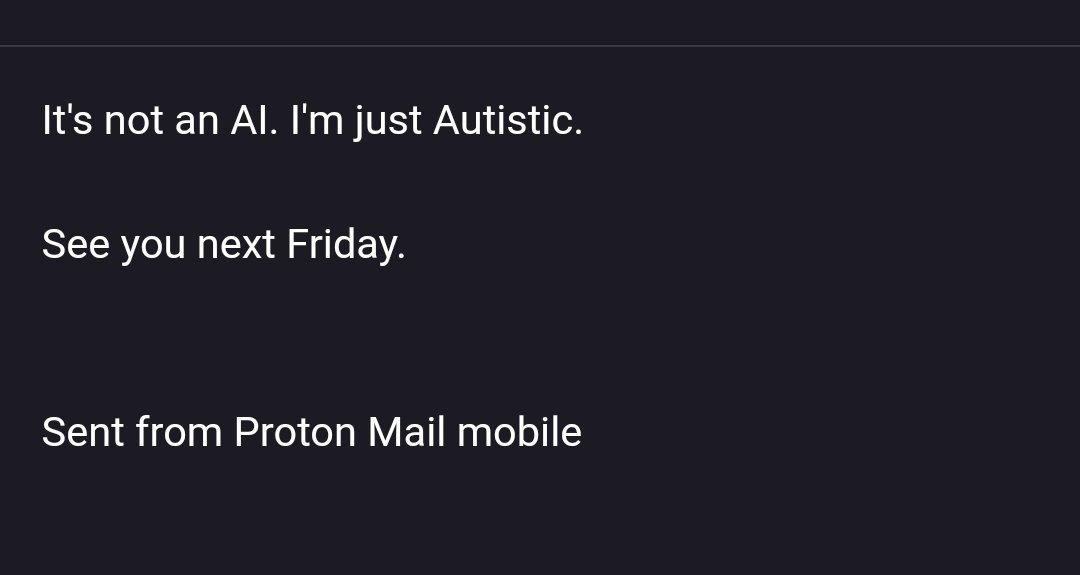

I've heard this from other students too. That faculty only respond to their emails when they use chatGPT. The great irony of my viral autistic email thread was always that had I actually used AI to write it, I would have sounded decidedly less robotic.

ChatGPT is probably pretty good at spitting out the meaningless pleasantries that people associate with respectability. But it's terrible at making coherent, complex, academic arguments!

Last semester, I gave my graduate students an assignment. They were to read some reports on the labor exploitation and environmental impact of chatGPT and other LLMs. Then they wrote a reflection on why they had used chatGPT in the past, and how they might choose to use it in the future.

I told them I would not be policing their LLM use. But I wanted them to know things about it they were unlikely to know, and I warned them about the ways that using an LLM could cause them to submit inadequate work (incoherent methods and fake references, for example).

In their reflections, many international students reported that they used chatGPT to help them correct grammar, and to make their writing "more polished".

I was sad that so many students seemed to be relying on chatGPT to make them feel more confident in their writing, because I felt that the real problem was faculty attitudes toward multilingual scholars.

I have worked with a number of international graduate students who are told by other faculty that their writing is "bad", or who are given bad grades for writing that reflects English as a second language but still clearly demonstrates comprehension of the subject matter.

I believe that written communication is important. However, I also believe in focused feedback. As a professor of design, I am grading people's ability to demonstrate that they understand concepts and can apply them in design research and then communicate that process to me.

I do not require that communication to read like a first-language speaker's writing when I am perfectly capable of understanding the intent. When I am confused about meaning, I suggest clarifying edits.

I can speak and write in one language with competence. How dare I punish international students for their bravery? Fixation on normative communication chronically suppresses their grades and their confidence. And, most importantly, it doesn't improve their language skills!

If I were teaching rhetoric and comp, it might be different. But not THAT different. I'm a scholar of neurodivergent and Mad rhetorics. I can't in good conscience support Divergent rhetorics while suppressing transnational rhetoric!

Anyway, if you want your students to stop using chatGPT, then stop being racist and ableist when you grade.

@koala_karmic @NedsDread Also you might want to look up what a Luddite actually is.
