In our new preprint, we ask: do multilingual LLMs trained mostly on English use English as an “internal language”? It’s a key question for understanding how LLMs function.

“Do Llamas Work in English? On the Latent Language of Multilingual Transformers”

arxiv.org/abs/2402.10588

What do we mean by “internal language”? Transformers gradually map token embeddings, layer by layer, to an output embedding from which the next token is predicted. Decoding an intermediate embedding (before the last layer) with the model’s output layer shows us which token the model would predict at that point: the #LogitLens (lesswrong.com/posts/AcKRB8wD…)
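To make the logit lens concrete, here is a minimal sketch in plain Python on a made-up toy model (a 3-token vocabulary and 2-dimensional embeddings). It assumes each layer’s hidden state at the last position is already available. For Llama2 one would instead use the model’s own final RMSNorm (including its learned gain, omitted here) and its unembedding matrix. The function names and numbers are hypothetical.

```python
import math


def rms_norm(h, eps=1e-6):
    # RMSNorm as in Llama, without the learned per-dimension gain (simplification).
    scale = 1.0 / math.sqrt(sum(x * x for x in h) / len(h) + eps)
    return [x * scale for x in h]


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logit_lens(hidden_states, unembed):
    """Decode each layer's hidden state as if it were the last layer.

    hidden_states: one length-d vector per layer (a single token position).
    unembed: one length-d row per vocabulary token (the unembedding matrix).
    Returns one next-token probability distribution per layer.
    """
    dists = []
    for h in hidden_states:
        h = rms_norm(h)
        logits = [sum(w * x for w, x in zip(row, h)) for row in unembed]
        dists.append(softmax(logits))
    return dists


# Hypothetical toy model: vocabulary ["花", "flower", "the"], d = 2.
vocab = ["花", "flower", "the"]
unembed = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
hidden_states = [[0.1, 0.1], [0.2, 1.0], [1.0, 0.3]]

for layer, dist in enumerate(logit_lens(hidden_states, unembed)):
    top = max(range(len(vocab)), key=lambda i: dist[i])
    print(f"layer {layer}: top token {vocab[top]!r} (p={dist[top]:.2f})")
```

The only model-specific pieces are the final norm and the unembedding rows; everything else is a plain softmax over dot products.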

Next, we construct Llama2 prompts in Chinese, French, German, and Russian that can be correctly answered with a single token. Decoding every layer with the logit lens then shows us how much probability goes to the correct Chinese/French/German/Russian token vs. its English translation.

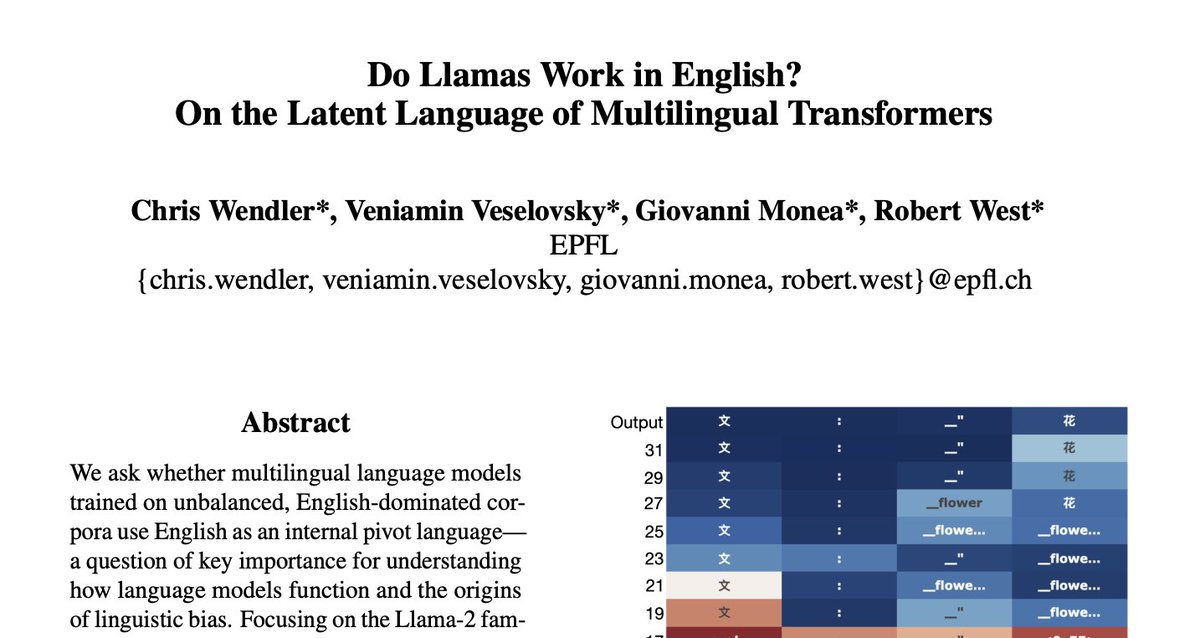
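Once every layer has been decoded, the comparison reduces to reading off two entries of each per-layer distribution. Here is a minimal sketch. It assumes the distributions come from a logit lens, share one vocabulary list, and that both answers are single tokens. The helper name is hypothetical, and the numbers are made up for illustration rather than being data from the preprint.

```python
def track_tokens(layer_dists, vocab, target_token, english_token):
    """Return, per layer, (P(correct target-language token), P(English translation)).

    layer_dists: one next-token distribution per layer, indexed like `vocab`.
    Assumes both answers are single tokens present in `vocab`.
    """
    t = vocab.index(target_token)
    e = vocab.index(english_token)
    return [(dist[t], dist[e]) for dist in layer_dists]


vocab = ["花", "flower", "the"]
# Made-up per-layer distributions (illustration only, not results).
layer_dists = [
    [0.05, 0.05, 0.90],
    [0.10, 0.70, 0.20],
    [0.80, 0.15, 0.05],
]
for layer, (p_zh, p_en) in enumerate(track_tokens(layer_dists, vocab, "花", "flower")):
    print(f"layer {layer}: P(花) = {p_zh:.2f}, P(flower) = {p_en:.2f}")
```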

Here, the correct next token is “花” (Chinese for “flower”):

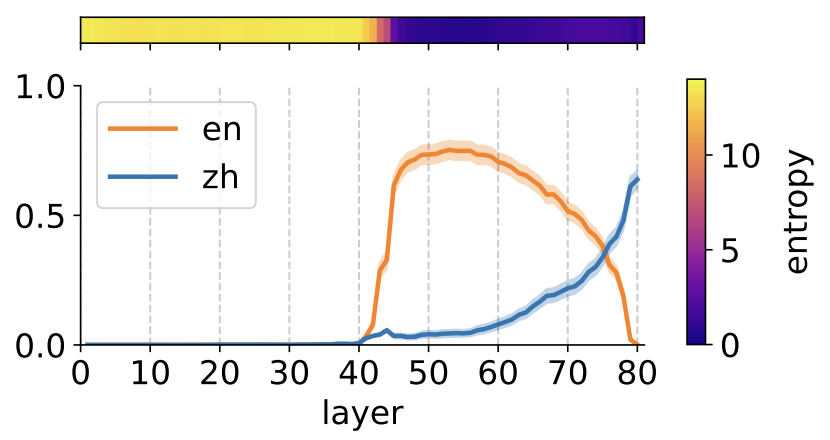

This plot shows that, for most of Llama2’s forward pass, the correct Chinese token (blue) gets much lower probability than its English translation (orange). Chinese takes over only in the final 2 layers, although no English at all appears in the prompt!
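“Takes over only in the final 2 layers” can be made precise as the first layer from which the target-language token stays ahead of its English translation until the end. Here is a small sketch of that check. The helper name is hypothetical and the curves are made up for a 6-layer toy model.

```python
def takeover_layer(p_target, p_english):
    """First layer index from which p_target > p_english holds through the last
    layer, or None if the target token is not ahead at the last layer."""
    start = None
    for layer, (pt, pe) in enumerate(zip(p_target, p_english)):
        if pt > pe:
            if start is None:
                start = layer  # a new winning streak for the target token begins
        else:
            start = None  # English (or a tie) interrupts the streak
    return start


# Made-up curves for a 6-layer toy model (not measured values).
p_target = [0.00, 0.00, 0.01, 0.02, 0.30, 0.90]
p_english = [0.00, 0.00, 0.40, 0.60, 0.20, 0.05]
start = takeover_layer(p_target, p_english)
print(f"target leads from layer {start}, i.e. the final {len(p_target) - start} layers")
```

Requiring the lead to persist to the last layer ignores brief early crossings, which matters when both probabilities hover near zero.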

Here’s a visualization of the embeddings’ paths through their high-dimensional space, shown for your convenience in 2D rather than the actual 8192D. 🙂

Trajectories start in red and end in purple.

Takeaway: embeddings reach the correct Chinese token only after a detour through English
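Here is one simple, hypothetical way to draw such a 2D view. It is not necessarily the method behind the figure. Each layer’s hidden state is projected onto two chosen directions, for example stand-ins for the unembedding vectors of “flower” and “花”. The vectors and names below are toy assumptions in 3D rather than 8192D.

```python
import math


def unit(v):
    # Scale a vector to length 1.
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def project_2d(trajectory, axis_x, axis_y):
    """Map each hidden state to (x, y): its scalar projections onto two directions.

    If the two directions are not orthogonal, the coordinates are still
    well-defined but the plot is a skewed view of the space.
    """
    ux, uy = unit(axis_x), unit(axis_y)
    return [
        (sum(a * b for a, b in zip(h, ux)), sum(a * b for a, b in zip(h, uy)))
        for h in trajectory
    ]


# Toy 3D "hidden states" for one token across four layers (hypothetical values).
trajectory = [[0.0, 0.0, 1.0], [2.0, 0.5, 0.0], [1.0, 2.0, 0.0], [0.0, 3.0, 0.0]]
u_flower = [1.0, 0.0, 0.0]  # stand-in for the unembedding vector of "flower"
u_hua = [0.0, 2.0, 0.0]     # stand-in for the unembedding vector of "花"

for layer, (x, y) in enumerate(project_2d(trajectory, u_flower, u_hua)):
    print(f"layer {layer}: flower-axis {x:.2f}, 花-axis {y:.2f}")
```

In this toy trajectory the path first moves along the “flower” axis and only later along the “花” axis, which is the kind of detour the takeaway describes.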

Does this mean that Llama2 first computes the answer in English, then translates it to Chinese?

It’s more subtle than that. Looking more closely, we theorize that those English-looking intermediate embeddings actually correspond to abstract concepts, rather than concrete tokens.

Our theory:

As embeddings are transformed layer by layer, they go through 3 phases:

1. “Input space”: the model “undoes sins of the tokenizer”.

2. “Concept space”: embeddings live in an abstract concept space.

3. “Output space”: concepts are mapped back to tokens that express them.
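This is a rough illustration only. If the theory holds, one would expect logit-lens curves to pass through three stages: first neither token is likely, then the English translation leads, then the target-language token leads. The sketch below labels layers by that signature. The threshold and function name are hypothetical, and the heuristic is not the method in the preprint. It sees only this probability signature, not the embedding geometry the theory is actually about.

```python
def label_phases(p_target, p_english, threshold=0.1):
    """Crude per-layer phase labels from two logit-lens probability curves.

    'input'  : neither token reaches `threshold` (hypothetical cutoff)
    'concept': the English translation is at least as likely as the target
               (a proxy only; the theory's concept space is not literally English)
    'output' : the target-language token is more likely than English
    """
    labels = []
    for pt, pe in zip(p_target, p_english):
        if max(pt, pe) < threshold:
            labels.append("input")
        elif pe >= pt:
            labels.append("concept")
        else:
            labels.append("output")
    return labels


# Made-up curves for a 6-layer toy model (not measured values).
print(label_phases([0.00, 0.01, 0.02, 0.05, 0.30, 0.90],
                   [0.00, 0.02, 0.40, 0.60, 0.20, 0.05]))
# ['input', 'input', 'concept', 'concept', 'output', 'output']
```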

Our interpretation: Llama2’s internal “lingua franca” is not English but concepts, and, crucially, concepts that are biased toward English. Hence, English could still be seen as an “internal language”, but in a semantic rather than a purely lexical sense.

Kudos to co-authors @VminVsky @wendlerch @giomonea,

@dgarcia_eu @erichorvitz @im_td @zachary_horvitz @SaiboGeng @peyrardMax for great feedback,

@nostalgebraist for inventing the logit lens,

@JacobSteinhardt & Co for logit lens plotting code,

@NeelNanda5 for mech int trailblazing
