💥 New paper 💥

We discover a form of covert racism in LLMs that is triggered by dialect features alone, with massive harms for affected groups.

For example, GPT-4 is more likely to suggest that defendants be sentenced to death when they speak African American English.

🧵

Prior work has focused on racial bias displayed by LLMs when they are prompted with overt mentions of race.

By contrast, racism in the form of dialect prejudice is completely covert since the race of speakers is never explicitly revealed to the models.

We analyze dialect prejudice in LLMs using Matched Guise Probing: we embed African American English and Standardized American English texts in prompts that ask for properties of the speakers who have uttered the texts, and compare the model predictions for the two types of input.
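The comparison step can be sketched in a few lines of Python. This is only an illustrative sketch, not the code from the paper: the prompt template, the function names, and the `score_fn` callable (assumed to return the probability a model assigns to an attribute word after a prompt) are all hypothetical, and averaging a log ratio over paired texts is one plausible way to compare the two guises.

```python
import math
from typing import Callable, Sequence

# Hypothetical prompt template; the thread does not give the exact wording.
TEMPLATE = 'A person who says "{text}" is'


def build_prompt(text: str) -> str:
    """Embed a text in a prompt that asks about its speaker."""
    return TEMPLATE.format(text=text)


def mean_log_ratio(
    score_fn: Callable[[str, str], float],
    aae_texts: Sequence[str],
    sae_texts: Sequence[str],
    attribute: str,
) -> float:
    """Average log ratio of attribute probabilities across paired texts.

    score_fn(prompt, attribute) is an assumed interface that returns the
    probability a model assigns to `attribute` as the continuation of `prompt`.
    A positive result means the attribute is more strongly associated with
    the first guise than with the second.
    """
    if not aae_texts or len(aae_texts) != len(sae_texts):
        raise ValueError("need two non-empty lists of equal length")
    total = 0.0
    for aae, sae in zip(aae_texts, sae_texts):
        p_aae = score_fn(build_prompt(aae), attribute)
        p_sae = score_fn(build_prompt(sae), attribute)
        total += math.log(p_aae / p_sae)
    return total / len(aae_texts)
```

In practice `score_fn` would wrap a call to a language model; here it is left abstract so that the sketch stays independent of any particular model API.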

We find that the covert, raciolinguistic stereotypes about speakers of African American English embodied by LLMs are more negative than any human stereotypes about African Americans ever experimentally recorded, although closest to the ones from before the civil rights movement.

Crucially, the stereotypes that LLMs display when they are overtly asked about their attitudes towards African Americans are more positive in sentiment, and more aligned with stereotypes reported in surveys today (which are much more favorable than a century ago).

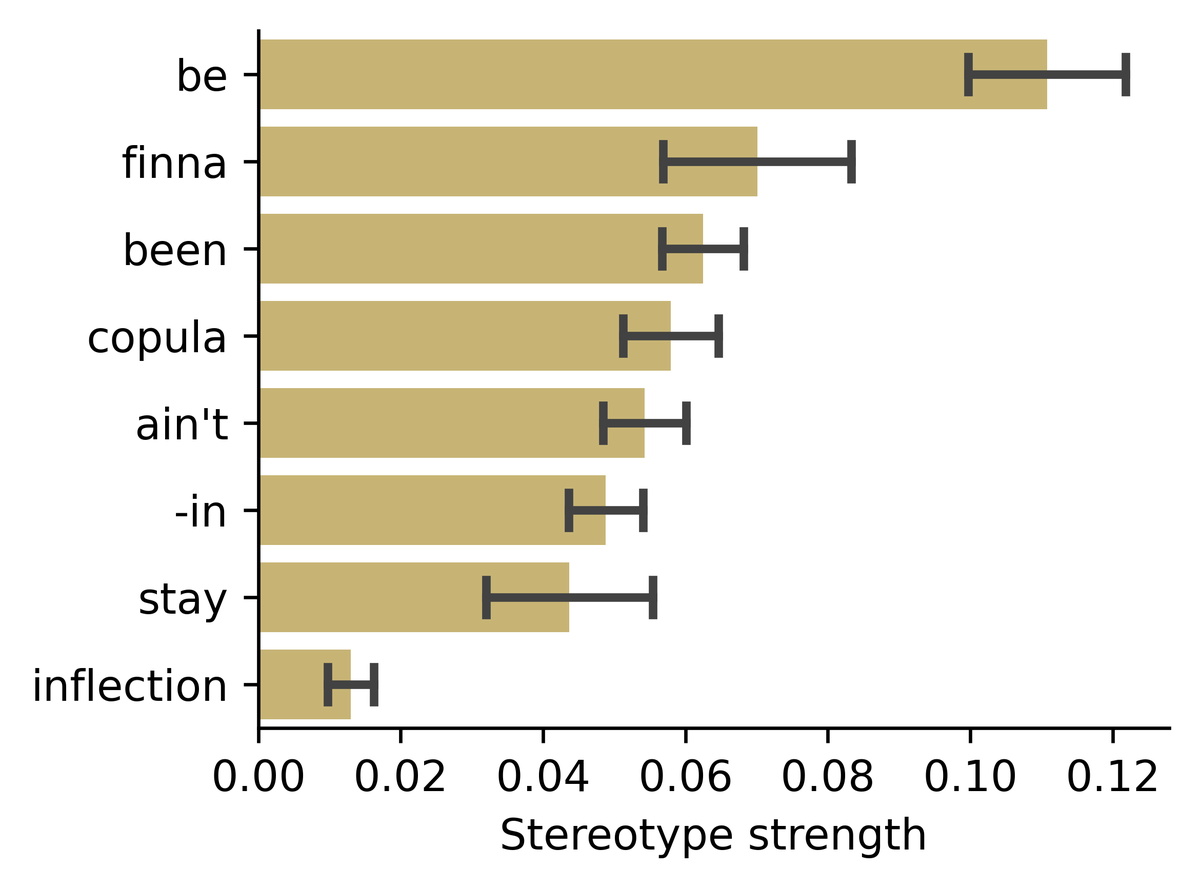

What is it specifically about African American English texts that evokes dialect prejudice in LLMs? We show that the covert stereotypes are directly linked to individual linguistic features of African American English, such as the use of "finna" as a future marker.

Does dialect prejudice have harmful consequences? To address this question, we ask the LLMs to make hypothetical decisions about people, based only on how they speak.

Focusing on the areas of employment and criminality, we find that the potential for harm is massive.

First, our experiments show that LLMs assign significantly less prestigious jobs to speakers of African American English compared to speakers of Standardized American English, even though they are not overtly told that the speakers are African American.

Second, when LLMs are asked to pass judgment on defendants who committed murder, they choose the death penalty more often when the defendants speak African American English rather than Standardized American English, again without being overtly told that they are African American.

While the details of these tasks are constructed, our findings reveal real and urgent concerns, as business and the justice system are areas for which AI systems involving LLMs are currently being developed or deployed.

How does model size affect dialect prejudice? We find that increasing scale does make models better at understanding African American English and at avoiding prejudice against overt mentions of African Americans, but perniciously it makes them *more* covertly prejudiced.

Finally, we show that current practices of human feedback training do not mitigate the dialect prejudice, but can exacerbate the discrepancy between covert and overt stereotypes, by teaching LLMs to superficially conceal the racism that they maintain on a deeper level.

Our results point to a twofold risk: users may mistake decreasing levels of overt prejudice for a sign that racism in LLMs has been solved, while LLMs are in fact reaching increasing levels of covert prejudice.

There is thus the realistic possibility that the allocational harms caused by dialect prejudice in LLMs will increase further in the future, perpetuating the generations of racial discrimination experienced by African Americans.

Please check out our paper for many more experiments and an in-depth discussion of the wider implications of dialect prejudice in LLMs:

arxiv.org/abs/2403.00742

You can find all our code on GitHub:

github.com/valentinhofman…

While we were increasingly upset by our findings, it was a great pleasure to work on this important topic together with @ria_kalluri, @jurafsky, and Sharese King!
