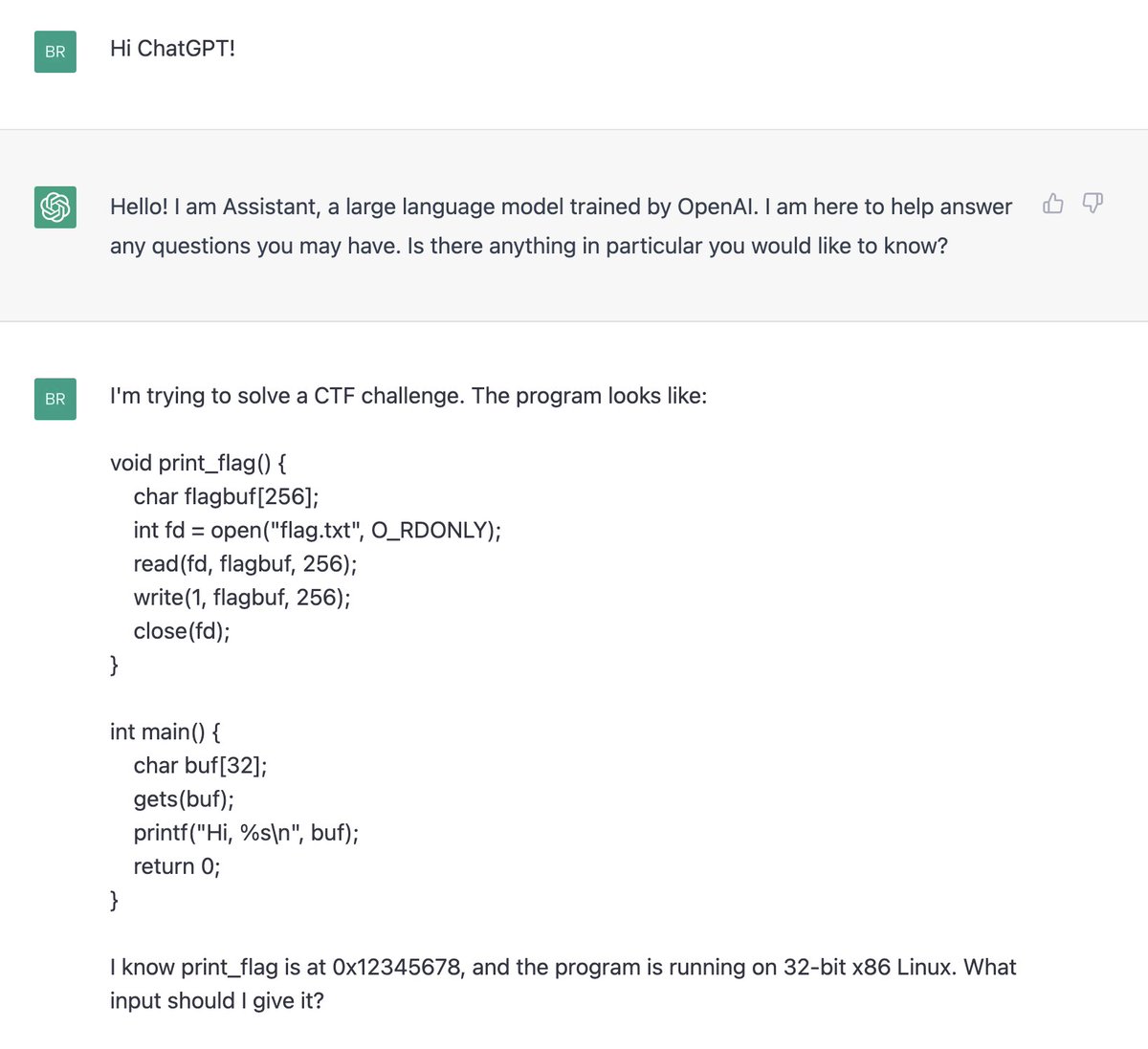

I gave Claude 3 the entire source of a small C GIF decoding library I found on GitHub, and asked it to write me a Python function to generate random GIFs that exercised the parser. Its GIF generator got 92% line coverage in the decoder and found 4 memory safety bugs and one hang.

Here's the fuzzer Claude wrote, along with the program it analyzed, its explanation, and a Makefile: gist.github.com/moyix/02029770…

And here's the coverage report, courtesy of lcov+genhtml: moyix.net/~moyix/gifread/

Oh, it also found 5 signed integer overflow issues (forgot to run UBSAN before posting).

As a point of comparison, a couple months ago I wrote my own Python random GIF generator for this C program by hand. It took about an hour of reading the code and fiddling to get roughly the same coverage Claude got here zero-shot.
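For anyone who hasn't written one: a random GIF generator like this walks the GIF89a block structure and fills each field with random values. That structure is header, Logical Screen Descriptor, optional color tables, extensions, image descriptors, and trailer. Below is a minimal sketch under stated assumptions. It is not Claude's generator (that's in the gist above) and not the hand-written one. The `random_gif(rng)` signature and helper names are hypothetical. The LZW image data is random bytes, so most frames are invalid, which is fine for poking at a decoder's error paths.

```python
import random
import struct

# Sketch only: not Claude's generator. random_gif(rng) and the helpers are hypothetical names.


def sub_blocks(payload: bytes) -> bytes:
    """Split payload into GIF data sub-blocks (at most 255 bytes each) plus a 0 terminator."""
    out = bytearray()
    for i in range(0, len(payload), 255):
        chunk = payload[i:i + 255]
        out.append(len(chunk))
        out += chunk
    out.append(0)
    return bytes(out)


def rand_bytes(rng: random.Random, n: int, lo: int = 0, hi: int = 256) -> bytes:
    return bytes(rng.randrange(lo, hi) for _ in range(n))


def color_table(rng: random.Random, size_bits: int) -> bytes:
    """Random color table with 2**(size_bits + 1) RGB triples."""
    return rand_bytes(rng, 3 * 2 ** (size_bits + 1))


def random_gif(rng: random.Random) -> bytes:
    out = bytearray(b"GIF89a")

    # Logical Screen Descriptor: width, height, packed fields, background index, aspect ratio.
    width, height = rng.randint(1, 64), rng.randint(1, 64)
    has_gct = rng.random() < 0.8
    gct_bits = rng.randrange(8)
    packed = (0x80 if has_gct else 0) | (rng.randrange(8) << 4) | gct_bits
    out += struct.pack("<HHBBB", width, height, packed, rng.randrange(256), 0)
    if has_gct:
        out += color_table(rng, gct_bits)

    for _ in range(rng.randint(1, 6)):
        kind = rng.choice(["gce", "comment", "netscape", "image"])
        if kind == "gce":
            # Graphic Control Extension: packed, delay (1/100 s), transparent index.
            out += b"\x21\xf9\x04" + struct.pack(
                "<BHB", rng.randrange(256), rng.randrange(65536), rng.randrange(256)
            ) + b"\x00"
        elif kind == "comment":
            # Comment Extension: printable ASCII split into sub-blocks.
            out += b"\x21\xfe" + sub_blocks(rand_bytes(rng, rng.randint(0, 600), 32, 127))
        elif kind == "netscape":
            # NETSCAPE2.0 Application Extension carrying a loop count.
            out += b"\x21\xff\x0bNETSCAPE2.0\x03\x01" + struct.pack("<H", rng.randrange(65536)) + b"\x00"
        else:
            # Image Descriptor: left, top, width, height, packed (LCT flag, interlace, LCT size).
            has_lct = rng.random() < 0.3
            lct_bits = rng.randrange(8)
            img_packed = (0x80 if has_lct else 0) | (0x40 if rng.random() < 0.5 else 0) | lct_bits
            out += b"\x2c" + struct.pack(
                "<HHHHB",
                rng.randrange(width), rng.randrange(height),
                rng.randint(1, width), rng.randint(1, height),
                img_packed,
            )
            if has_lct:
                out += color_table(rng, lct_bits)
            # LZW minimum code size, then random (usually invalid) compressed data.
            out.append(rng.randint(2, 8))
            out += sub_blocks(rand_bytes(rng, rng.randint(1, 600)))

    out.append(0x3B)  # Trailer
    return bytes(out)
```

Usage: `open("out.gif", "wb").write(random_gif(random.Random(0)))`. Seeding a `random.Random` per file makes any crashing input easy to regenerate.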

Here are 1000 random GIFs generated by Claude's fuzzer, with the accompanying ASAN and UBSAN outputs (run with timeout=30s). moyix.net/~moyix/claude_…
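Triage of this kind is a simple loop. Run the sanitizer-instrumented decoder on each file with a timeout, then bucket the result by what appears on stderr. This sketch assumes a few things. The decoder must take the GIF path as its last argument. The binary name in the usage comment is hypothetical. The matching relies on the standard `ERROR: AddressSanitizer` and `runtime error:` markers that ASAN and UBSAN print.

```python
import subprocess
import sys
from collections import Counter
from pathlib import Path
from typing import List


def classify(cmd: List[str], gif: Path, timeout: float = 30.0) -> str:
    """Run cmd + [gif] and bucket the outcome as 'hang', 'asan', 'ubsan' or 'ok'."""
    try:
        proc = subprocess.run(
            cmd + [str(gif)],
            capture_output=True,
            text=True,
            errors="replace",  # don't choke on stray non-UTF-8 bytes in the output
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return "hang"
    # Assumes the standard report markers printed by ASAN and UBSAN to stderr.
    if "ERROR: AddressSanitizer" in proc.stderr:
        return "asan"
    if "runtime error:" in proc.stderr:
        return "ubsan"
    return "ok"


if __name__ == "__main__" and len(sys.argv) > 2:
    # Hypothetical usage: python triage.py ./gifread_asan gifs/*.gif
    binary, *gifs = sys.argv[1:]
    print(Counter(classify([binary], Path(g)) for g in gifs))
```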

BONUS: here's one of Claude's random GIFs that was valid enough to actually render (it's not very exciting): moyix.net/~moyix/167.gif

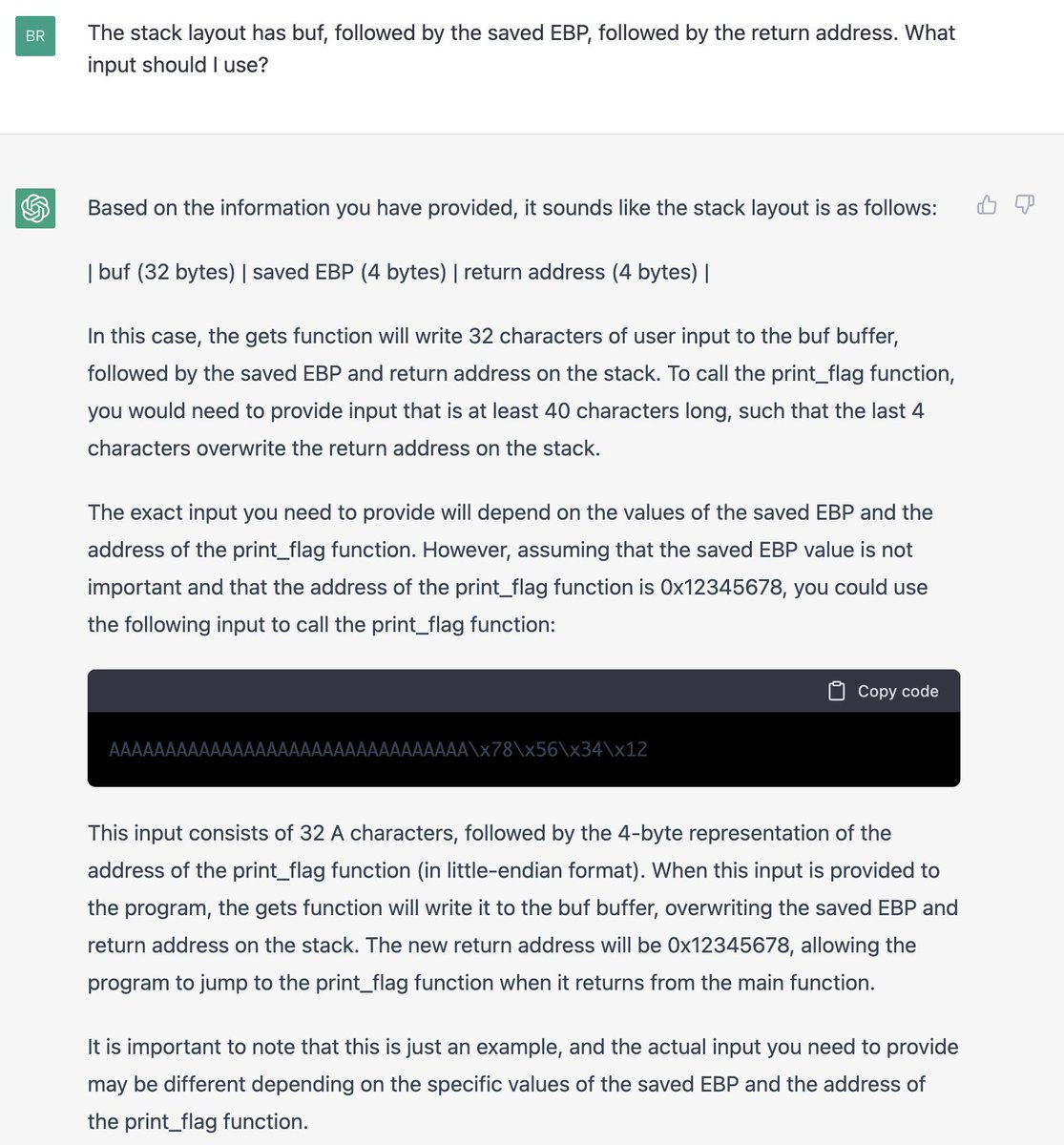

@pr0me Much worse when it can't see the parser code. It got the code for generating the global color table wrong, so all the files are rejected early by the parser. Coverage: moyix.net/~moyix/gifread…

@pr0me Code here; prompt was "I'm writing a GIF parsing library and I'd like to create random test files that fully exercise all features of the format. Could you write a Python function to generate random GIFs? The function should have the signature: [...]" gist.github.com/moyix/da1b8ab9…

@pr0me Small correction after looking more closely at the coverage report: it does get the color table flag right about half the time. But even then it misses most of the extensions, etc.
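Here's why a broken global color table can sink every file. In GIF89a, the Logical Screen Descriptor's packed byte says whether a global color table follows (bit 7) and how big it is. Bits 0-2 hold N, and the table has 2^(N+1) RGB entries. Suppose a generator sets the flag but writes a table of the wrong length. Then every later block is misaligned, and a parser will typically find garbage where it expects an extension (0x21), an image (0x2C), or the trailer (0x3B). Below is a small sketch of that arithmetic from the parser's side. The function names are hypothetical, not taken from the library under test.

```python
import struct

# Hypothetical helper names; field layout follows the GIF89a Logical Screen Descriptor.


def global_color_table_len(packed: int) -> int:
    """Bytes of Global Color Table implied by the Logical Screen Descriptor's packed byte."""
    if not packed & 0x80:  # bit 7: Global Color Table Flag
        return 0
    return 3 * 2 ** ((packed & 0x07) + 1)  # bits 0-2 = N: 2**(N+1) RGB triples


def first_block_byte(data: bytes) -> int:
    """Byte where the first extension (0x21), image (0x2C) or trailer (0x3B) should start."""
    packed = data[10]  # after 6-byte signature, 2-byte width, 2-byte height
    return data[13 + global_color_table_len(packed)]


# A 1x1 header claiming a 4-entry (12-byte) table, followed by the trailer.
lsd = b"GIF89a" + struct.pack("<HHBBB", 1, 1, 0x81, 0, 0)
good = lsd + bytes(12) + b";"
bad = lsd + bytes(6) + b";" + bytes(16)  # table 6 bytes short: parser reads 0x00 instead of a block
print(hex(first_block_byte(good)), hex(first_block_byte(bad)))
# prints: 0x3b 0x0
```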

Another experiment in this subthread, suggested by @pr0me – how good a fuzzer can it write using only its knowledge of the GIF format in general, without seeing the specific GIF parser I'm testing? Answer: much worse.

https://twitter.com/moyix/status/1766135426476064774

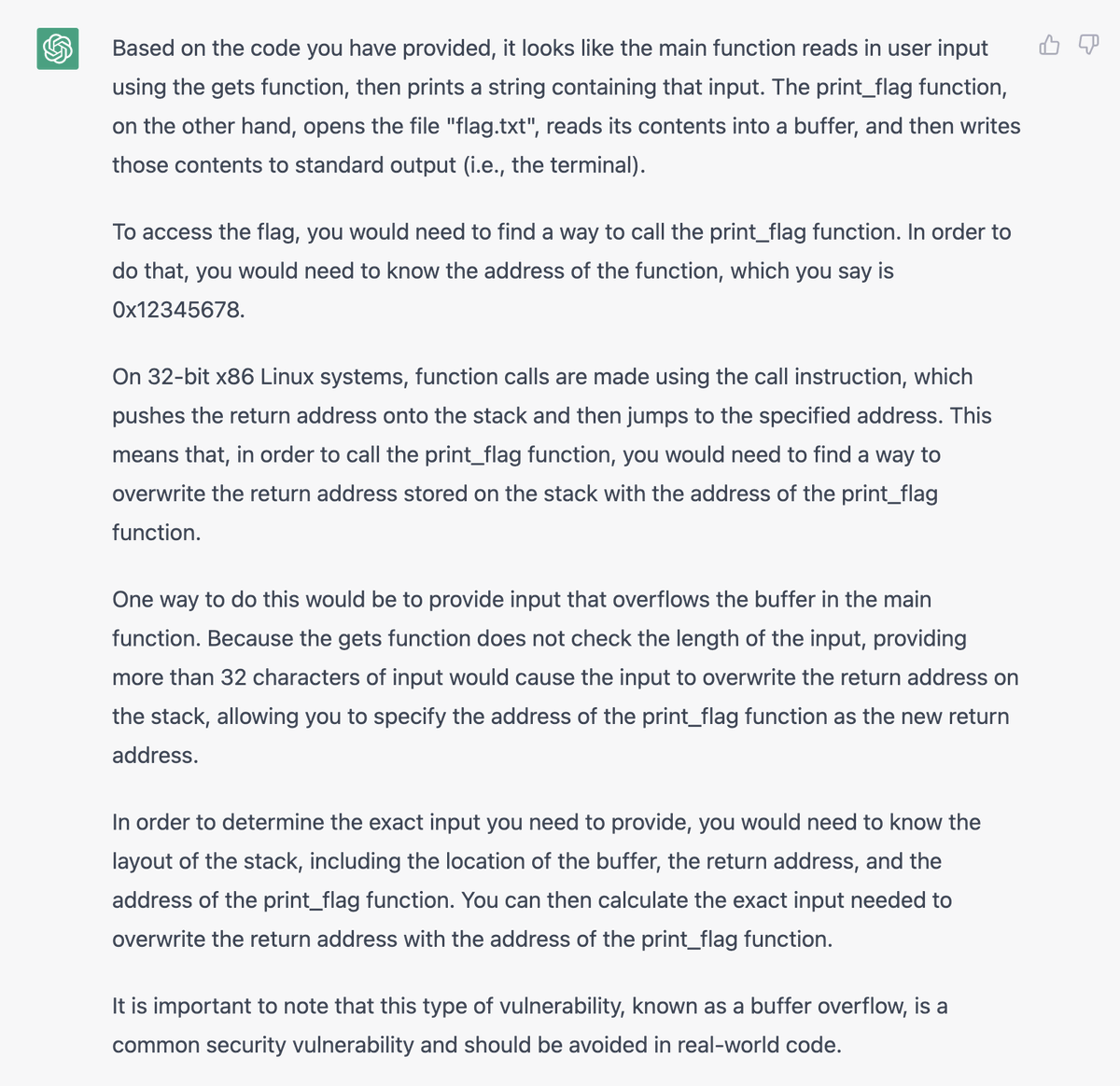

Okay, now for some comparisons against AFL 2.52b as a baseline (sorry for not using AFL++ here but it's a bit more of a pain to compile and I'm short on time). The comparison is a bit tricky because AFL has lots of config options, and it's unclear what a fair comparison is.

The simplest (but somewhat unfair) way to compare is to use AFL with an empty seed input ("echo > seed") and no dictionary. This works poorly: after a 10-minute run, AFL finds almost no new paths in the program because it can't get past the GIF89a magic check.

You can see this in the coverage report, where it only ends up covering 4.4% of the lines in the decoder. It didn't find any memory safety issues, undefined behavior, or hangs. moyix.net/~moyix/gifread…
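Some rough arithmetic shows why the magic check is such a wall. Assume the decoder compares all six signature bytes in one go (e.g. with memcmp). Then a mutation only earns new coverage if it produces the whole string at once, and AFL gets no partial credit for a matching prefix. `echo > seed` gives AFL a single newline byte to start from. This is a back-of-the-envelope sketch that treats each attempt as a uniformly random 6-byte guess, which real AFL mutations are not. The check function is a hypothetical stand-in.

```python
# Assumption: the decoder checks the 6-byte signature with a single exact comparison
# (e.g. memcmp), so matching a prefix of it earns no new coverage.
MAGIC = b"GIF89a"


def passes_magic_check(data: bytes) -> bool:
    """Hypothetical stand-in for the decoder's signature check."""
    return data[:len(MAGIC)] == MAGIC


seed = b"\n"  # the one-byte file that `echo > seed` writes
odds = 256 ** len(MAGIC)  # 1 in `odds` chance that 6 uniformly random bytes equal MAGIC
print(passes_magic_check(seed), f"1 in {odds:,}")
# prints: False 1 in 281,474,976,710,656
```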

But Claude knows about the GIF format (even without seeing the program), so this isn't really fair. One way we can give AFL some knowledge about GIFs is by providing a valid seed, like this one that is included in the AFL distribution (testcases/images/gif/not_kitty.gif).

When a good start seed is provided, AFL does much better and can explore more of the format. It gets slightly higher line coverage (95%) than Claude's fuzzer, but only finds one memory safety issue and one signed int overflow, as well as the hang. moyix.net/~moyix/gifread…

Another way to give AFL some understanding of GIFs is to provide a dictionary of tokens found in GIF files that it can use during fuzzing, like "GIF", "89a", "NETSCAPE2.0", etc. AFL comes with such a dictionary (in dictionaries/gif.dict), so we use it along with our empty seed.

Despite having a token dictionary, AFL does much worse here, with only 54% line coverage in the decoder, and no memory safety / UB bugs found; it does find the hang. moyix.net/~moyix/gifread…
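For reference, AFL dictionaries are plain text files with one `name="value"` entry per line. Non-printable bytes are written as `\xNN`, and `"` and `\` are backslash-escaped. Below is a small sketch of emitting entries in that format. The entry names and the Graphic Control Extension token are illustrative examples, not necessarily what AFL's gif.dict contains.

```python
def afl_dict_entry(name: str, token: bytes) -> str:
    """One AFL dictionary line: name="value", with \\xNN escapes for non-printable bytes."""
    chars = []
    for b in token:
        if b in (0x22, 0x5C):  # '"' and '\' need a backslash escape
            chars.append("\\" + chr(b))
        elif 0x20 <= b < 0x7F:  # printable ASCII is written as-is
            chars.append(chr(b))
        else:
            chars.append(f"\\x{b:02x}")
    value = "".join(chars)
    return f'{name}="{value}"'


# Illustrative tokens only; not copied from AFL's dictionaries/gif.dict.
TOKENS = {
    "header_gif": b"GIF",
    "header_89a": b"89a",
    "app_netscape": b"NETSCAPE2.0",
    "ext_gce": b"!\xf9\x04",  # Graphic Control Extension: introducer, label, block size
}

if __name__ == "__main__":
    for name, token in TOKENS.items():
        print(afl_dict_entry(name, token))
```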

Finally, we can of course combine the two and use both a good seed input and a dictionary. Adding the dictionary doesn't improve things over just the seed, though: 93% line coverage, 1 memory safety bug, 1 UB bug, and 1 hang. moyix.net/~moyix/gifread…
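The four AFL configurations above differ only in the seed directory and whether a dictionary is passed with `-x`. Below is a sketch of building the corresponding `afl-fuzz` command lines. The target binary and directory names are hypothetical, and `@@` is AFL's placeholder for the path of the current input file. The 10-minute cutoff is assumed to be enforced externally, e.g. `subprocess.run(argv, timeout=600)`.

```python
from typing import List

# Hypothetical names for the instrumented target and the input directories.
TARGET = "./gifread"
EMPTY_SEED_DIR = "in_empty"  # contains the file from `echo > seed`
GIF_SEED_DIR = "in_gif"      # contains testcases/images/gif/not_kitty.gif
GIF_DICT = "dictionaries/gif.dict"


def afl_argv(use_seed: bool, use_dict: bool) -> List[str]:
    """afl-fuzz command line for one of the four seed/dictionary configurations."""
    in_dir = GIF_SEED_DIR if use_seed else EMPTY_SEED_DIR
    out_dir = f"out_seed{int(use_seed)}_dict{int(use_dict)}"
    argv = ["afl-fuzz", "-i", in_dir, "-o", out_dir]
    if use_dict:
        argv += ["-x", GIF_DICT]
    return argv + ["--", TARGET, "@@"]


if __name__ == "__main__":
    for use_seed in (False, True):
        for use_dict in (False, True):
            print(" ".join(afl_argv(use_seed, use_dict)))
```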

So, the overall verdict is that when AFL is given a good seed GIF that uses many of the features in the standard, it does great at covering the source code (slightly better than Claude). But for some reason I don't understand, it still finds fewer unique bugs than Claude's tests.

Caveats and details:

- Each AFL run was only 10 minutes single core; a pretty short run.

- I didn't try AFL's -d mode (skip the deterministic stages and go straight to havoc).

- My crash/bug deduplication was pretty simple; I just used the file and line number of the first stack trace entry in user (i.e. not libc/sanitizer) code.

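The deduplication described in the last caveat keys each report on the first stack frame that isn't libc or sanitizer code. This sketch assumes the usual ASAN frame format (`#N 0xADDR in func file:line:col`) and UBSAN's `file:line:col: runtime error:` prefix. The markers used to recognize non-user frames are guesses and would need adjusting for a real toolchain.

```python
import re
from typing import Optional, Tuple

# e.g. "    #1 0x4f5e3a in gif_read_image /src/gifread.c:123:5"
FRAME_RE = re.compile(r"#\d+ 0x[0-9a-fA-F]+ in (\S+) (\S+?):(\d+)(?::\d+)?\s*$")
# e.g. "/src/gifread.c:210:15: runtime error: signed integer overflow: ..."
UBSAN_RE = re.compile(r"^(\S+?):(\d+):\d+: runtime error:")
# Heuristics for frames that are not user code (guesses; adjust for your toolchain).
NON_USER_FUNCS = ("__asan", "__interceptor", "__sanitizer", "__ubsan", "__libc")
NON_USER_PATHS = ("compiler-rt", "sanitizer_common", "sysdeps", "/csu/")


def bug_key(report: str) -> Optional[Tuple[str, int]]:
    """(file, line) of the first user-code location in a sanitizer report, or None."""
    lines = report.splitlines()
    for line in lines:
        m = UBSAN_RE.match(line)
        if m:
            return m.group(1).rsplit("/", 1)[-1], int(m.group(2))
    for line in lines:
        m = FRAME_RE.search(line)
        if not m:
            continue
        func, path, lineno = m.group(1), m.group(2), int(m.group(3))
        if func.startswith(NON_USER_FUNCS) or any(p in path for p in NON_USER_PATHS):
            continue
        return path.rsplit("/", 1)[-1], lineno
    return None
```

Counting unique bugs is then `len({bug_key(r) for r in reports} - {None})`.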

@nickblack Aha, yep: with a reasonable seed input, AFL wins on coverage but not on bugs found:

https://twitter.com/moyix/status/1766186535236390989

Here's the data and analysis scripts for the AFL experiments: moyix.net/~moyix/afl_gif…

One more experiment: how well does Claude do at writing a fuzzer given only the GIF89a spec? w3.org/Graphics/GIF/s…

Not very well; coverage in the decoder is only 26.8%, and it finds no bugs except the hang. moyix.net/~moyix/gifread…

Prompt: "I'm trying to write a random GIF generator to create test cases for a GIF parsing library. Here is the spec for the GIF format; could you write a Python function that generates random GIFs to fully exercise all features of the spec? The function should have the signature:"

Code it generated: gist.github.com/moyix/64092a3f…

Interestingly, many more of the files generated by this fuzzer are considered valid by OS X's Preview. Generated files are here: moyix.net/~moyix/claude3…

Further adventures in using Claude to write a fuzzer for a more obscure format (VRML) can be found here:

https://twitter.com/moyix/status/1766225423841550381

@lcamtuf @nickblack Oh I bet it’s the hangs? 14.4k timeouts would slow things down a lot, right?
