If you are using Postgres for embeddings, JSON or large text columns, or all of the above... then you are using TOAST, whether you know it or no.

TOAST can have massive impact on performance. So lets talk about it a bit: 🧵

TOAST can have massive impact on performance. So lets talk about it a bit: 🧵

Postgres has 8K pages, and you can't spread a row across pages. In fact, Postgres prefers to leave a bit of room for additional versions of rows or additional rows.

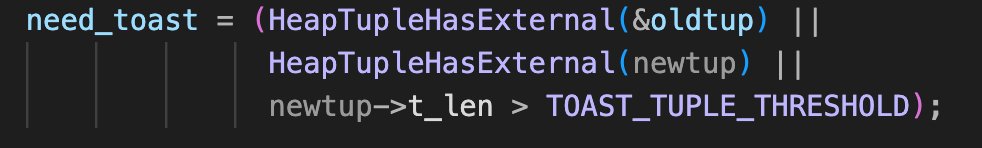

So if a row exceeds 2K (modifiable TOAST_TUPLE_THRESHOLD), Postgres is going to try and do something about it.

So if a row exceeds 2K (modifiable TOAST_TUPLE_THRESHOLD), Postgres is going to try and do something about it.

What does it do? it looks for "TOASTABLE" columns. With variable length type (varlena) like text, jsonb or vector, and that have storage type "extended" or "external". These storage types indicate data that can be stored outside the main table. Extended can also be compressed.

Then it starts with the largest value in one of these columns, it separates it into chunks, compressed them and stores them in another table.

The process continues until the row is under 2K. If it still does not fit, you'll get an error.

The process continues until the row is under 2K. If it still does not fit, you'll get an error.

Every table with TOASTABLE types has a matching pg_toast table. This blog shows you how to find it: medium.com/quadcode-life/…

What's the performance implication? Well, anything that accesses TOAST data will need to "detoast" - read, decompress and process large amounts of data from another table. This can lead to serious IO and much slower and more IO intensive operations than you may expect.

This blog shows a good example: blog.anayrat.info/en/2022/02/14/…

What about pgvector? Embeddings can get really large. OpenAI's small embedding is 1536 points each, which leads to ~6K for the vector alone. Well over the threshold. Oh, and it looks like pgvector doesn't support compressing them.

However, this means that searches through the embeddings table are very IO intensive (putting index strategy aside for a second).

@jkatz05 gave a great talk on all things pgvector, and showed how you can improve performance by using smaller vectors and forcing Postgres to avoid TOAST.

Slide 30 for TOAST stuff, but the entire talk is amazing: postgresql.eu/events/pgconfe…

Slide 30 for TOAST stuff, but the entire talk is amazing: postgresql.eu/events/pgconfe…

Finally, a common practice is to save a join by storing the source chunks for the embedding in the same row as the vector. This of course makes the row even larger, and if you force PG to avoid TOAST, it will reject inserts of too-large rows. So, move them to another table.

• • •

Missing some Tweet in this thread? You can try to

force a refresh