Build an LLM app with RAG to chat with any AI newsletter on Substack in just 30 lines of Python code (step-by-step instructions):

1. Import necessary libraries

• Streamlit for building the web app

• Embedchain for the RAG functionality

• tempfile for creating temporary files and directories

2. Configure the Embedchain App

For this application we will use GPT-4 Turbo; you can swap in Cohere, Anthropic, or any other LLM of your choice.

Use the open-source Chroma DB as the vector database (you are free to choose any other vector database).
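
As a rough sketch of this step, the configuration might be written as a plain dictionary and handed to Embedchain. The key names, the model id `gpt-4-turbo`, the temperature value, and the helper `build_config` are assumptions for illustration and should be checked against the Embedchain docs; `embedchain_bot` is the function name this thread uses in step 4.

```python
def build_config(db_path: str, api_key: str) -> dict:
    """Build a config dict: OpenAI for the LLM and embedder, Chroma for vectors."""
    return {
        # Assumed model id for GPT-4 Turbo; change provider/model to use another LLM.
        "llm": {
            "provider": "openai",
            "config": {"model": "gpt-4-turbo", "temperature": 0.5, "api_key": api_key},
        },
        # Chroma stores its index under the given directory.
        "vectordb": {"provider": "chroma", "config": {"dir": db_path}},
        "embedder": {"provider": "openai", "config": {"api_key": api_key}},
    }


def embedchain_bot(db_path: str, api_key: str):
    """Create the Embedchain app from the config above."""
    # Imported lazily so build_config can be used without Embedchain installed.
    from embedchain import App

    return App.from_config(config=build_config(db_path, api_key))
```

Keeping the dictionary in its own function makes it easy to change the LLM or vector database in one place.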

3. Set up the Streamlit App

Streamlit lets you create a user interface with just Python code. For this app we will:

• Add a title to the app using 'st.title()'

• Create a text input box for the user to enter their OpenAI API key using 'st.text_input()'
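
The two calls above could look roughly like this. The function name `render_header`, the title text, and the input label are placeholders chosen for this sketch; passing the Streamlit module in as `st` is also just a convenience so the function can be exercised without a running app.

```python
def render_header(st) -> str:
    """Draw the app title and the API-key box; return whatever the user typed.

    `st` is expected to be the imported streamlit module.
    """
    st.title("Chat with Substack Newsletter")
    # type="password" masks the key while it is being typed.
    return st.text_input("OpenAI API Key", type="password")
```

In the app script this would be called as `api_key = render_header(st)` after `import streamlit as st`.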

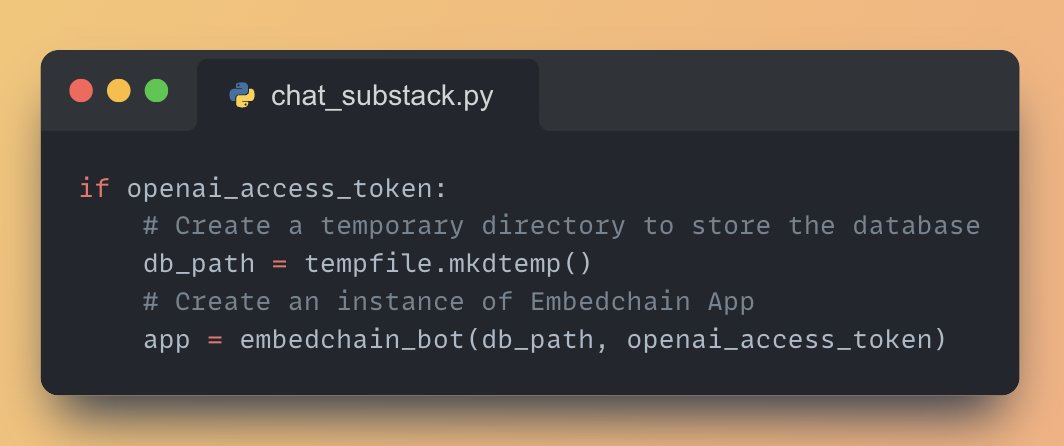

4. Initialize the Embedchain App

• If the OpenAI API key is provided, create a temporary directory for the vector database using 'tempfile.mkdtemp()'

• Initialize the Embedchain app using the 'embedchain_bot' function

5. Get the Substack Newsletter URL from the user and add it to the knowledge base

• Use 'st.text_input()' to get the Substack URL from the user

• Given the Substack URL, add it to the Embedchain application
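
Adding the newsletter to the knowledge base might look like the sketch below. It assumes the Embedchain app exposes `add()` and accepts `data_type="substack"`; verify both against the Embedchain version you install. The helper name `add_newsletter` is made up for this example.

```python
def add_newsletter(app, url: str) -> bool:
    """Add a Substack URL to the app's knowledge base; skip empty input."""
    url = (url or "").strip()
    if not url:
        return False
    # Assumed call: tells Embedchain to load the URL as a Substack source.
    app.add(url, data_type="substack")
    return True
```

In the app this would be wired up as `add_newsletter(app, st.text_input("Enter Substack Newsletter URL"))`, where the label text is a placeholder.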

6. Ask questions about the AI newsletter and display the answer

• Create a text input for the user to enter their question using 'st.text_input()'

• If a question is asked, get the answer from the Embedchain app and display it using 'st.write()'
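
The question-and-answer step can be sketched as follows, assuming the Embedchain app offers a `chat()` method that returns the answer text (worth confirming in the Embedchain docs). The function name `answer_question` and the idea of passing `st` in as a parameter are choices made for this sketch only.

```python
def answer_question(app, st, question: str):
    """If a question was entered, query the app and show the answer."""
    if not question:
        return None
    # Assumed call: retrieves relevant chunks and asks the LLM.
    answer = app.chat(question)
    st.write(answer)
    return answer
```

In the app, `question` would come from `st.text_input(...)`, so nothing is sent to the LLM until the user types something.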

Working Application demo using Streamlit

Paste the above code into VS Code, save it as chat_substack.py, and run the following command: 'streamlit run chat_substack.py'

If you’re interested in:

- ML/NLP

- LLMs

- RAG

Connect with me → @Saboo_Shubham_

My Newsletter →

Every day, I share tips & tutorials on the above topics on X and in my newsletter. unwindai.substack.com

Find all the awesome LLM app demos with RAG in the following GitHub repo.

P.S: Don't forget to star the repo to show your support 🌟

github.com/Shubhamsaboo/a…
