Telegram has launched a pretty intense campaign to malign Signal as insecure, with assistance from Elon Musk. The goal seems to be to get activists to switch away from encrypted Signal to mostly-unencrypted Telegram. I want to talk about this a bit. 1/

First things first: Signal Protocol, the cryptography behind Signal (also used in WhatsApp and several other messengers), is open source and has been intensively reviewed by cryptographers. When it comes to cryptography, this is pretty much the gold standard. 2/

Telegram by contrast does not end-to-end encrypt conversations by default. Unless you manually start an encrypted “Secret Chat”, all of your data is visible on the Telegram server. Given who uses Telegram, this server is probably a magnet for intelligence services. 3/

Signal’s client code is also open source. You can download it right now and examine the code and crypto libraries. Even if you don’t want to do that, many experts have. This doesn’t mean there will never be a bug, but it does mean lots of eyes.

github.com/signalapp/Sign…

github.com/signalapp/Sign…

Pavel Durov, the CEO of Telegram, has recently been making a big conspiracy push to promote Telegram as more secure than Signal. This is like promoting ketchup as better for your car than synthetic motor oil. Telegram isn’t a secure messenger, full stop. That’s a choice Durov made.

When Telegram launched, they had terrible and insecure cryptography. Worse: it was only available if you manually turned it on for each chat. I assumed (naively) this was a growing pain and eventually they’d follow everyone else and add default end-to-end encryption. They didn’t.

I want to switch away from that and briefly address a specific point Durov makes in his post. He claims that Signal doesn’t have reproducible builds and Telegram does. As I said, this is extremely silly because Telegram is unencrypted anyway, but it’s worth addressing.

One concern with open source code is that even if you review the open code, you don’t know that this code was used to build the app you download from the App Store. “Reproducible builds” let you build the code on your own computer and compare the result to the app you downloaded.

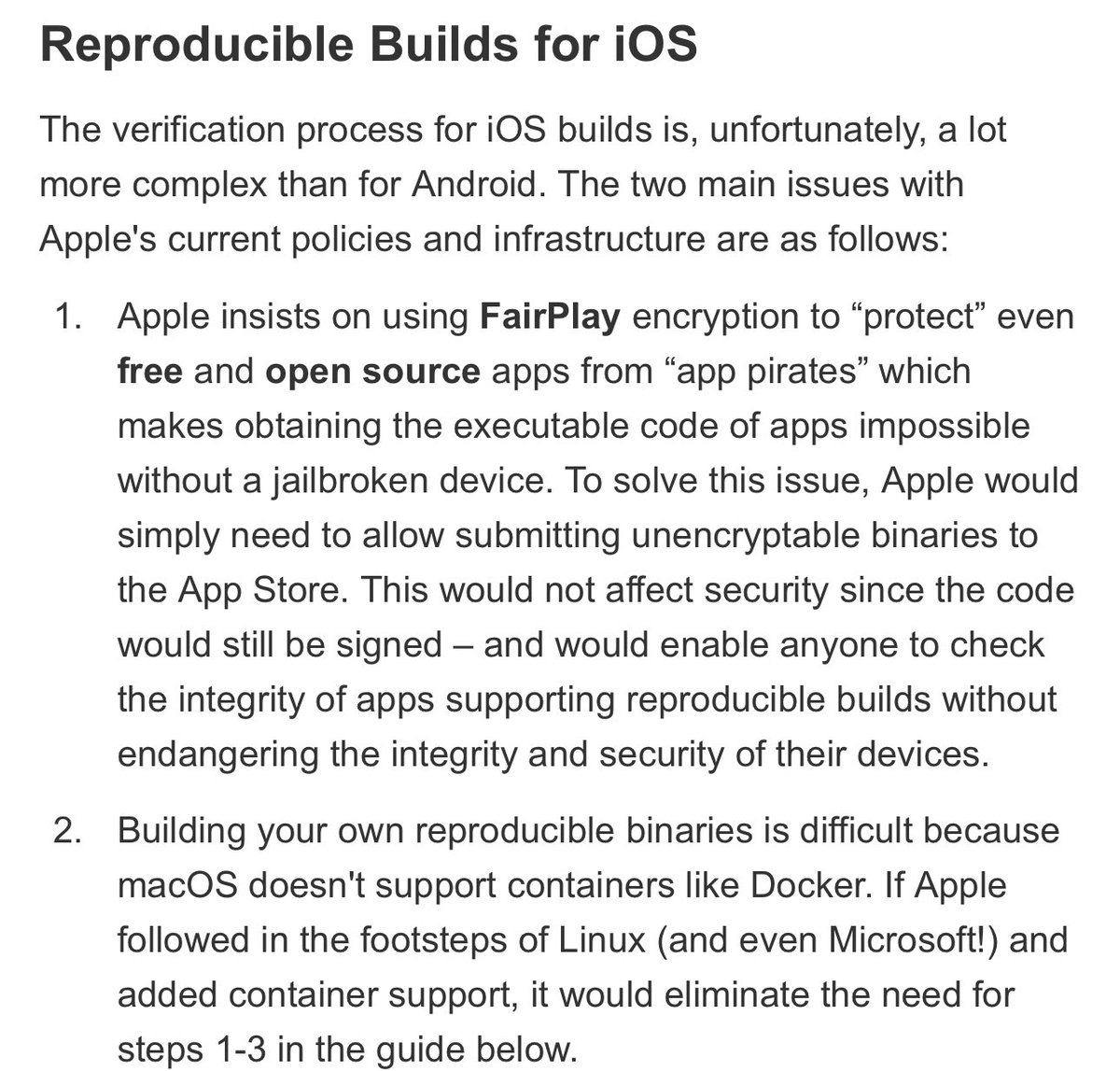

Signal has these for Android, and it’s a relatively simple process, because Android is friendly to this. For various Apple-specific reasons this is shockingly hard to do on iOS, mostly because App Store apps are encrypted. (Apple should fix this.)

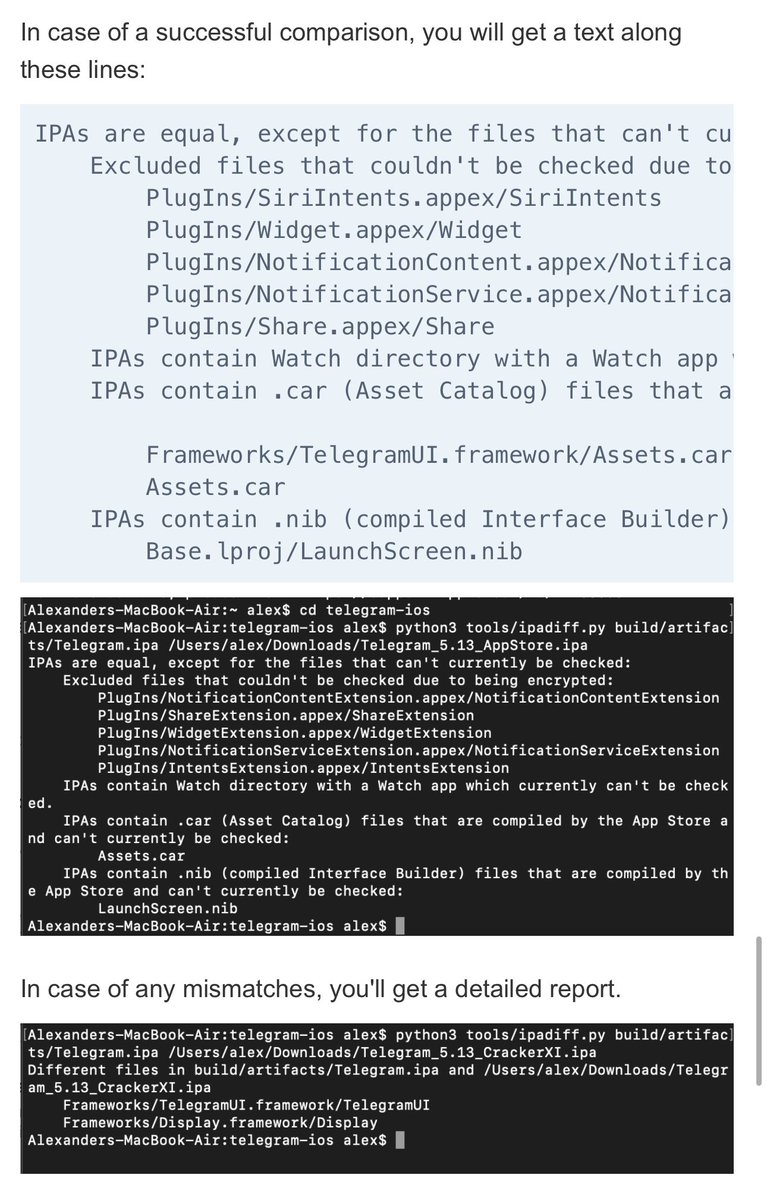
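To make the “compare” step concrete, here is a minimal Python sketch of what checking an Android build boils down to. It is not Signal’s actual verification tooling (their repo documents their own procedure), and the helper names are hypothetical. It assumes an APK is a ZIP archive and that the only legitimate differences between your local build and the store download are the developer’s signature files under `META-INF/`, since only the developer holds the signing key. Everything else should match byte for byte.

```python
import hashlib
import zipfile

# Assumption: signature files live under META-INF/ and are expected to differ,
# because only the developer can sign the store build.
SIGNATURE_PREFIX = "META-INF/"


def entry_digests(apk_path: str) -> dict[str, str]:
    """Map each non-signature entry in the APK (a ZIP archive) to its SHA-256 digest."""
    digests = {}
    with zipfile.ZipFile(apk_path) as apk:
        for name in apk.namelist():
            if name.startswith(SIGNATURE_PREFIX):
                continue  # skip signature/manifest files that the signing step adds
            digests[name] = hashlib.sha256(apk.read(name)).hexdigest()
    return digests


def compare_builds(local_apk: str, downloaded_apk: str) -> list[str]:
    """Return entries that are missing from one build or whose contents differ."""
    local = entry_digests(local_apk)
    downloaded = entry_digests(downloaded_apk)
    mismatches = []
    for name in sorted(local.keys() | downloaded.keys()):
        if local.get(name) != downloaded.get(name):
            mismatches.append(name)
    return mismatches
```

An empty list means the code you reviewed and built is exactly what shipped, apart from the signature. Comparing decompressed entry contents (rather than whole-file hashes) sidesteps harmless differences like ZIP timestamps. On iOS there is no equivalent shortcut, because the downloaded binary is encrypted and you can’t read it to compare.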

I want to give Telegram credit because they’ve tried to “hack” a solution for repro builds on iOS. But reading their instructions shows how bad it is: you need a jailbroken (old) iPhone. And at the end you still can’t verify the whole app. Some files stay encrypted. core.telegram.org/reproducible-b…

It’s not weird for a CEO to say “my product is better than your product.” But when the claim is about security and, critically, *you’ve made a deliberate decision not to add security for most users*, then it exits the domain of competition and starts to feel like malice.

I don’t really care which messenger you use. I just want you to understand the stakes. If you use Telegram, we experts cannot even begin to guarantee that your communications are confidential. In fact at this point I assume they are not, even in Secret Chats mode.

You should do what you want with this information. Think about whether confidentiality matters to you. Think about where Telegram operates its servers and what government jurisdictions they work in. Decide if you care about this. Just don’t shoot your foot off because you’re uninformed.
