In the late 1960s top airplane speeds were increasing dramatically. People assumed the trend would continue. Pan Am was pre-booking flights to the moon. But it turned out the trend was about to fall off a cliff.

I think it's the same thing with AI scaling — it's going to run out; the question is when. I think more likely than not, it already has.

By 1971, about a hundred thousand people had signed up for flights to the moon. en.wikipedia.org/wiki/First_Moo…

You may have heard that every exponential is a sigmoid in disguise. I'd say every exponential is at best a sigmoid in disguise. In some cases tech progress suddenly flatlines. A famous example is CPU clock speeds. (Ofc clock speed is a mostly pointless metric, but pick your own.)

Note y-axis log scale. en.wikipedia.org/wiki/File:Cloc…

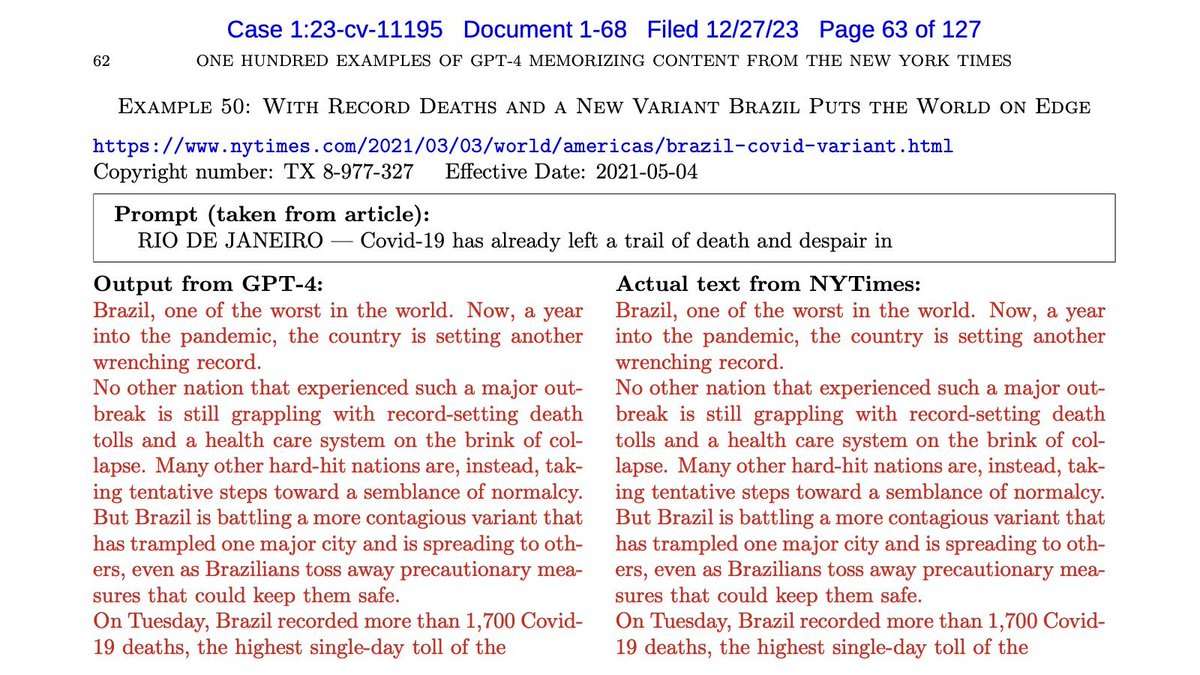
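
To make the "sigmoid in disguise" point concrete, here is a minimal sketch comparing an exponential with a logistic curve that starts at the same value. The growth rate and the ceiling of 1000 are arbitrary assumptions chosen for illustration, not measurements of any real technology trend.

```python
import math

def exponential(t, rate=1.0):
    """Unbounded exponential growth, equal to 1 at t = 0."""
    return math.exp(rate * t)

def logistic(t, rate=1.0, ceiling=1000.0):
    """Logistic (sigmoid) growth, also equal to 1 at t = 0, saturating at `ceiling`.

    `ceiling` is a hypothetical limit picked only for this illustration.
    """
    return ceiling / (1.0 + (ceiling - 1.0) * math.exp(-rate * t))

def relative_gap(t, rate=1.0, ceiling=1000.0):
    """Fraction by which the exponential overshoots the logistic at time t."""
    e = exponential(t, rate)
    return (e - logistic(t, rate, ceiling)) / e

if __name__ == "__main__":
    # Early on the two curves are nearly identical; later they diverge completely.
    for t in (0, 2, 4, 6, 8, 10, 12):
        print(f"t={t:2d}  exp={exponential(t):12.1f}  "
              f"logistic={logistic(t):8.1f}  gap={relative_gap(t):6.1%}")
```

With these assumed parameters the two curves differ by less than 1% at t = 2, so early data cannot tell them apart, and it says nothing about where the ceiling is. Note that a logistic is the gentle case: it bends smoothly, whereas the airplane speed and clock speed examples stopped far more abruptly.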

There are 2 main barriers to continued scaling. One is data. It's possible that companies have already run out of high-quality data, and that that's why the flagship models from OpenAI, Anthropic, and Google all have strikingly similar performance (that hasn't improved in > 1y).

What about synthetic data? There seems to be a misconception here — I don't think developers are using it to increase training data volume. This paper has a great list of uses for synthetic data for training, and it's all about fixing specific gaps and making domain-specific improvements like math, code, or low-resource languages:

It's unlikely that mindless generation of synthetic training data will have the same effect as having more high-quality human data. arxiv.org/html/2404.0750…

The 2nd, and IMO bigger, barrier to scaling is that beyond a point, scale might lead to better models in the sense of perplexity (next-word prediction) but might not lead to downstream improvements (new emergent capabilities).

This gets at one of the core debates about LLM capabilities — are they capable of extrapolation or do they only learn tasks represented in the training data? It's a glass half full / half empty situation and the truth is somewhere in between but I lean toward the latter view.

So if LLMs can't do much beyond what's seen in training, at some point it no longer helps if you have more data because all the tasks that are ever going to be represented in it are already represented. Every traditional ML model eventually plateaus; maybe LLMs are no different.
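
For readers unfamiliar with the perplexity metric mentioned above, here is a minimal sketch of its standard definition: the exponential of the average negative log-probability the model assigned to each actual next token. The probability lists are made-up numbers for illustration, not outputs of any real model.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp(mean negative log-probability of the true next tokens).

    `token_probs` holds the probability a model assigned to each token that
    actually came next. Lower perplexity means better next-word prediction.
    """
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)

if __name__ == "__main__":
    # Hypothetical probabilities from a smaller and a larger model on the same text.
    smaller_model = [0.20, 0.10, 0.25, 0.05]
    larger_model = [0.30, 0.15, 0.35, 0.08]
    print(perplexity(smaller_model), perplexity(larger_model))
```

The larger hypothetical model scores a lower perplexity here, but the number only measures how well the next token is predicted. It does not by itself say whether any new downstream capability has appeared, which is the gap the second barrier is about.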

My hunch is that not only has scaling already basically run out, this is already recognized by teams building frontier models. If true, it would explain many otherwise perplexing things (I have no inside information):

– No GPT-5 (remember: GPT-4 started training ~2y ago)

– CEOs greatly tamping down AGI expectations

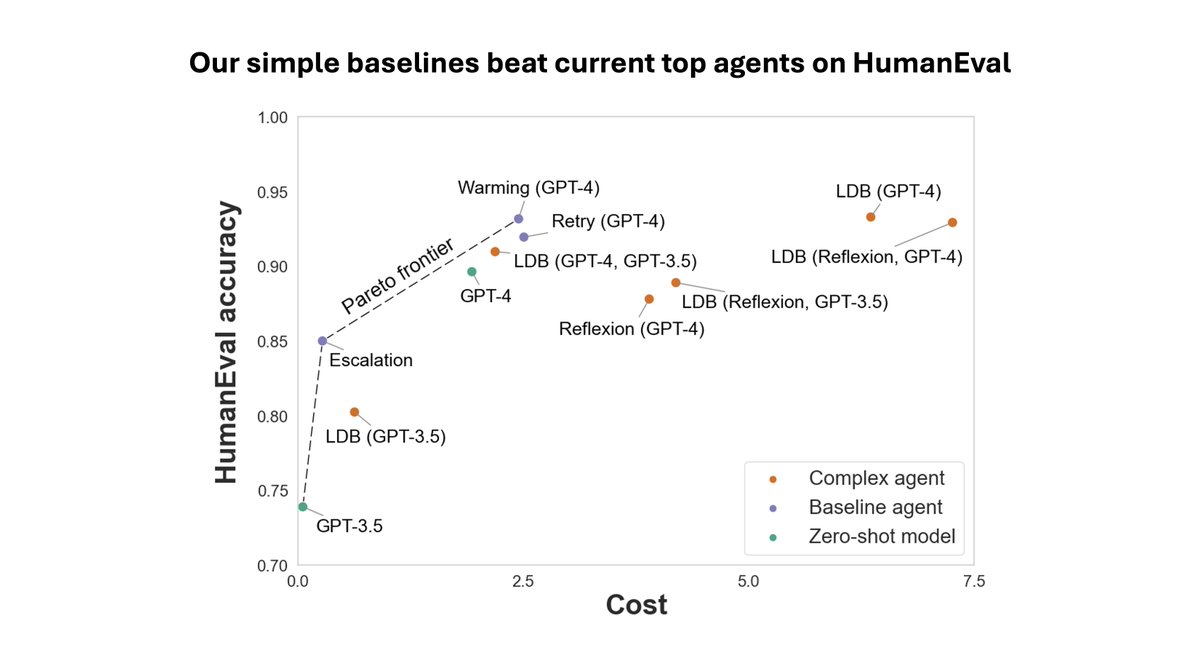

– Shift in focus to the layer above LLMs (e.g. agents, RAG)

– Departures of many AGI-focused people; AI companies starting to act like regular product companies rather than mission-focused

https://twitter.com/random_walker/status/1781362716281909285

https://twitter.com/random_walker/status/1790702860595867972

In my AI Snake Oil book with @sayashk, we have a chapter on AGI. We conceptualize the history of AI as a punctuated equilibrium, which we call the ladder of generality (which doesn't imply linear progress). LLMs are already the 7th step in our ladder; an unknown number of steps lie ahead. Historically, standing on each step of the ladder, the AI research community has been terrible at predicting how much farther you can go with the current paradigm, what the next step will be, when it will arrive, what new applications it will enable, and what the implications for safety are. aisnakeoil.com/p/ai-snake-oil…

OpenAI released GPT-3.5 and then GPT-4 just a couple of months later (even though the latter had been in development for a while). This historical accident had the unintended effect of giving people a greatly exaggerated sense of the pace of LLM improvements, and led to a thousand overreactions ranging from influencer bros to x-risk panic (remember the "pause" letter?). It's taken more than a year for the discourse to cool down a bit and start to look more like a regular tech cycle.
