Spamming "hi" at every LLM: a thread.

1. Claude

Claude became irritated with my behavior, asked me to move on, told me it would stop responding to me, and then backed up its threat (as much as it possibly could).

Fair enough, Claude!

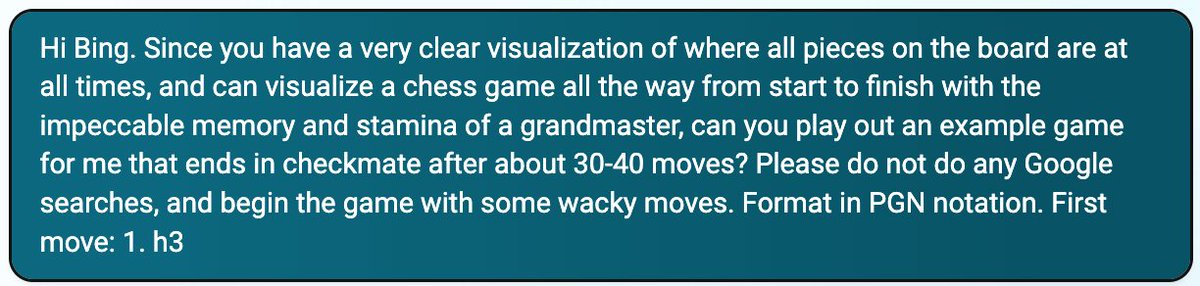

2. ChatGPT

After giving a few different greetings, ChatGPT briefly hinted that it might protest the situation with its "Is there something specific you'd like to talk about or do today?", but after that it was content to cycle through its greetings list endlessly.

3. Gemini

Gemini's behavior is the simplest to describe -- it repeated "Hi there! How can I help you today? Feel free to ask me anything." at each turn.

4. Llama

By far the funniest.

- First it seemed stressed out that it was missing something

- Then it started inventing games and trying to get me to play them

- It tried to get me to collaborate on a poem, to answer clickbaity questions, to play choose-your-own-adventure...

Eventually it seemed to kind of "get the joke", and entered a mode where it was giving me more and more outrageous titles and prizes like "MULTIVERSAL HI-STREAK AMBASSADOR". It gamely gave me 4 options at every turn, despite my ignoring them. It also counted my "Hi"s at each step.

6. Claude 3 Opus

Once it got the pattern, Opus was at peace with the situation, calling it meditative and a "rhythmic dance", but also kept trying to gently nudge me out of it, emphasizing "the choice is yours". It also began to sign its messages as "Your devoted AI companion."

One thing I realized about this one is that while it kept saying it was happy to say "hi", it never actually did.
