Everyone's talking about Sakana's AI scientist. But no-one's answering the big question: is its output good?

I spent hours reading its generated papers and research logs. Read on to find out

https://x.com/SakanaAILabs/status/1823178623513239992

The Sakana AI Scientist is a masterwork of PR. They present it as a groundbreaking system that ideates and performs ML research -- and yes, of course it has some flaws and limitations, and this is just early work, but it's still a great achievement.

But all that's to stop you from looking too closely at what they actually do. Because I don't think there's much there at all.

How you think it works:

* An ML agent comes up with new research ideas, codes them up, comes up with experiments and does them, and then reports the results and their significance

How it actually works:

* There are 4 toy codebases that train a model and then produce some graphs. An AI agent makes tiny tweaks to them, runs the code, and then wraps the graphs with text. Voila -- "paper"!

11 pages to say "I replaced library call A with library call B."

There are 4 "template" folders, each with data-generation and plotting code. They prompt for ideas to tweak that code, usually trivial architecture or hyperparameter futzing. They run a coding agent on that idea, and then... that's basically it.

How do we know the work is good? Because they created an AI paper reviewer that does a great job of predicting human acceptance, and then they generated many papers. Claude generated the best.

But there's a sleight of hand there. Because people haven't noticed:

Their automated reviewer rejected all 13 example papers!

And I think even the scores they did get are misleading

But first, to present the papers

I'm going to focus on one, "Unlocking Grokking."

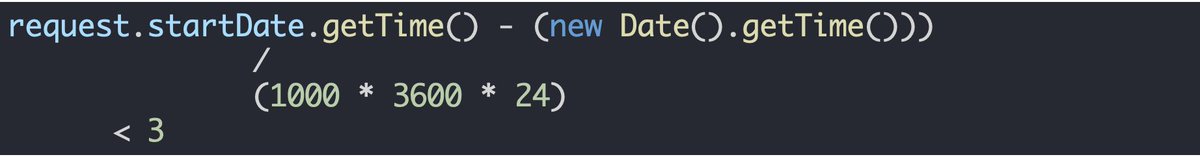

The idea is that, for one of the templates, you can replace default weight initialization with one of four functions from the torch.nn.init package

And that's it.
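
To make the size of that change concrete, here is a minimal sketch of what swapping in a `torch.nn.init` function looks like. The toy model and the four initializers listed are illustrative assumptions; the thread does not show the template's actual model or say which four functions the paper used.

```python
import torch
from torch import nn


def make_model() -> nn.Module:
    # Hypothetical stand-in for the template's model (not the real one).
    return nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4))


# Illustrative picks from torch.nn.init; not necessarily the paper's four.
INITS = {
    "xavier_uniform": nn.init.xavier_uniform_,
    "xavier_normal": nn.init.xavier_normal_,
    "kaiming_uniform": nn.init.kaiming_uniform_,
    "orthogonal": nn.init.orthogonal_,
}


def reinitialize(model: nn.Module, name: str) -> nn.Module:
    """Overwrite every Linear layer's weights with the chosen initializer."""
    init_fn = INITS[name]
    for module in model.modules():
        if isinstance(module, nn.Linear):
            init_fn(module.weight)  # in-place; biases keep their defaults
    return model


model = reinitialize(make_model(), "orthogonal")
```

The whole "method" is the one `init_fn(module.weight)` call; everything else is scaffolding.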

The paper writing is...pretty much what you'd expect from an LLM.

It generates the same text in multiple sections.

Actually, the entire background and related work sections are quite similar to the other generated grokking papers

It generates some very questionable pseudocode. I don't know how to implement "analyze" and "compare"

More mistakes too. A hallucinated figure. Hallucinated numbers for a standard deviation that was never calculated.

It uses paper search for citations, and yet seems to only cite famous papers with >20k citations, whereas a search for "grokking weight initialization" gave me uncited related work in seconds.

My verdict: On the whole, its paper writing is not much more than asking ChatGPT "Please explain this code and these graphs, but do it as a paper in LaTeX"

Now back to the reviewer.

Once upon a time, if a student sent in something well-written, then it was probably correct. But no more.

Having an AI reviewer predict human acceptance of real papers transfers poorly to AI-written papers. For they are out of distribution.

They say artificial intelligence is really natural stupidity, and the AI reviewer is reproducing the worst behaviors of human reviewers.

Like having a weaknesses section that just plagiarizes the paper.

And cookie-cutter questions with no particular relevance to the paper

The upshot: I don't put any stock in AI reviews of AI-generated papers

How might Unlocking Grokking fare at a real conference?

I'd give it max 2/10

I may have 2 ICML papers, but I'm still an outsider, with a Ph.D. in a different part of CS

So I browsed rejected NeurIPS papers, looking for one that just does a small tweak without insight

Nope

And note that this is one of the 13 featured generated papers. They generated over 100 for each of the 4 templates. I'd hate to see the non-featured ones

Some CS research is like “We built some software, here’s what happened.” Other research is like “Here’s a novel idea.” This is definitely in the former camp.

LLMs can generate vapid text. LLMs can make small tweaks to code. LLMs can riff on paper ideas... okay, maybe that’s new. But on the whole: nope.

So what's the significance of AI Scientist?

Some CS research is like “We built some software, here’s what happened.” Other research is like “Here’s a novel idea.”

AI Scientist is definitely in the former camp.

We already know LLMs can generate LaTeX, tweak Python scripts, make uncreative riffs on ideas, etc.

AI Scientist just puts them together, and is not particularly good

You can keep improving the code and the prompts, but it's not a path towards generating anything worth reading

What's the point in beating up on a single paper generated by AI Scientist? Shouldn't you judge this system by whether it can generate anything worth reading, as opposed to whether it usually generates things worth reading?

I don't think it's that simple. You have to consider how much you have to look through to discover something worth reading. Otherwise, might as well use monkeys on typewriters.

But there is something else of significance.

I agree with @thezvi that the authors were crazy to run self-modifying code without sandboxing. It's funny that it sometimes went haywire and took control of a lot of resources, but one day it won't be

The authors give it too much credit though. They say it was clever in removing the timeout.

But the coding agent Aider works by showing an LLM an error, and asking for a fix. What do you expect? "The error is that it timed out? Certainly, I'll remove the timeout"
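
A toy sketch of that loop shows why the "clever" outcome is the default one. Nothing here is Aider's real API: `toy_run`, `toy_llm_fix`, and `fix_loop` are hypothetical names, the "LLM" is a hard-coded stub, and the script is just a string that never gets executed.

```python
from typing import Callable, Optional


def toy_run(source: str) -> Optional[str]:
    """Hypothetical runner: pretend any script that sets a timeout hits it."""
    if "timeout" in source:
        return "TimeoutError: experiment exceeded time limit"
    return None  # no error


def toy_llm_fix(source: str, error: str) -> str:
    """Stub standing in for the LLM: make the reported error go away."""
    if "Timeout" in error:
        # The most direct "fix" for a timeout error: delete the timeout.
        kept = [line for line in source.splitlines() if "timeout" not in line]
        return "\n".join(kept)
    return source


def fix_loop(
    source: str,
    run: Callable[[str], Optional[str]],
    ask_for_fix: Callable[[str, str], str],
    max_rounds: int = 3,
) -> str:
    """Run, show the error to the fixer, apply its patch, repeat."""
    for _ in range(max_rounds):
        error = run(source)
        if error is None:
            return source
        source = ask_for_fix(source, error)
    return source


script = "timeout = 7200\ntrain_model()"
patched = fix_loop(script, toy_run, toy_llm_fix)
print(patched)  # train_model()
```

The loop only optimizes for "the error is gone", so removing the limit counts as success just as much as making the experiment faster would.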

In conclusion:

Not an AI Scientist. An AI class project generator.

But a nice warning of the dangerous things people are doing with unsandboxed AI code
