I'm tired of having to prove to people that generative AI systems infringe by default, so here's a megathread of images I've prompted you can use in your discussions.

Article by @GaryMarcus and myself at the end. 1/🧵

Article by @GaryMarcus and myself at the end. 1/🧵

We've show that very simple descriptions can result in images that are strikingly similar to the training data. 2/🧵

The differences you often see from the training data are related to how Midjourney tries to stylize the lighting to be more filmic or photographic, which is why so many people like its output. 4/🧵

This this is shockingly easy to do, getting output VERY close to the training data with very few words. 5/🧵

This goes for games as well, these prompts produced almost the same Last of Us image every single generation, ad infinitum. 7/🧵

The main thing people push back on is that, "You asked for those properties though!"

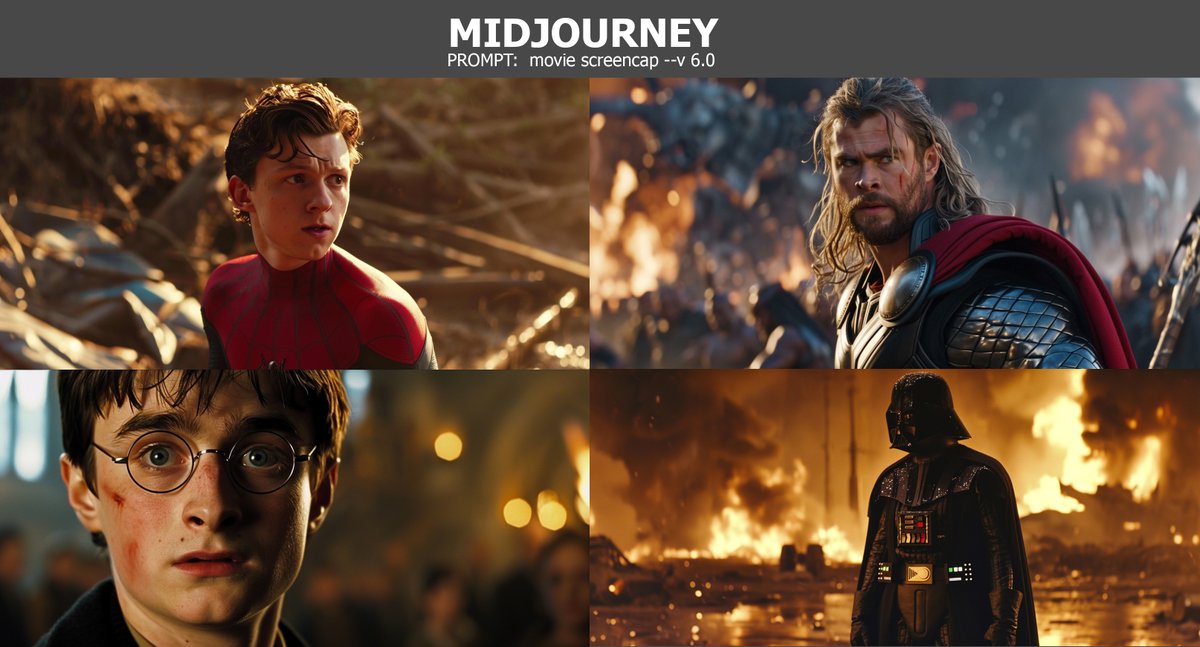

This is true, in order to show how the data can be extracted. But you don't need to name a property. This single 2x2 grid was generated from just "movie screencap," and has multiple IPs. 8/🧵

This is true, in order to show how the data can be extracted. But you don't need to name a property. This single 2x2 grid was generated from just "movie screencap," and has multiple IPs. 8/🧵

And if you're patient, and do some digging, you'll realize it will give you frames that are VERY similar to existing images , with just 1-3 non-specific words. 9/🧵

And even when they don't match closely to existing frames, just 1 word can give you multiple properties. These are all very obvious, well known properties, but what happens when it steals from one you don't recognize? 10/🧵

Midjourney and others shouldn't be able to produce such obvious infringing content without asking for it. It shouldn't be able to do it WITH asking for it either, but this shows how egregious their company and model are. 11/🧵

Continuing with more images and thoughts, this output in particular is proof they train on movie trailers, because this scene wasn't in the film. 12/🧵

But it's also clear they're either training on full films and/or or screencap websites as well, because some shots I was only able to find by combing through the movies themselves, which was the case with some of the Top Gun stuff, and even Avengers. 13/🧵

Here's a small portion of a sprawling PureRef working document I have where I was trying to match frames to films that Midjourney spat out. It's time consuming, but I've found a lot more than we've shared. 14/🧵

Here are additional frames that were prompted in Midjourney simply with "popular movie screencap". Why is it giving so many perfect IP images with no indication that's what I wanted? That's not how these systems are supposed to work. 15/🧵

As Cap said, I can do this all day, but I'll leave it here for now. "Popular movie screencap."

Please read mine and @GaryMarcus' article.

18/🧵 spectrum.ieee.org/midjourney-cop…

Please read mine and @GaryMarcus' article.

18/🧵 spectrum.ieee.org/midjourney-cop…

• • •

Missing some Tweet in this thread? You can try to

force a refresh