Introducing an upgraded Claude 3.5 Sonnet, and a new model, Claude 3.5 Haiku. We’re also introducing a new capability in beta: computer use.

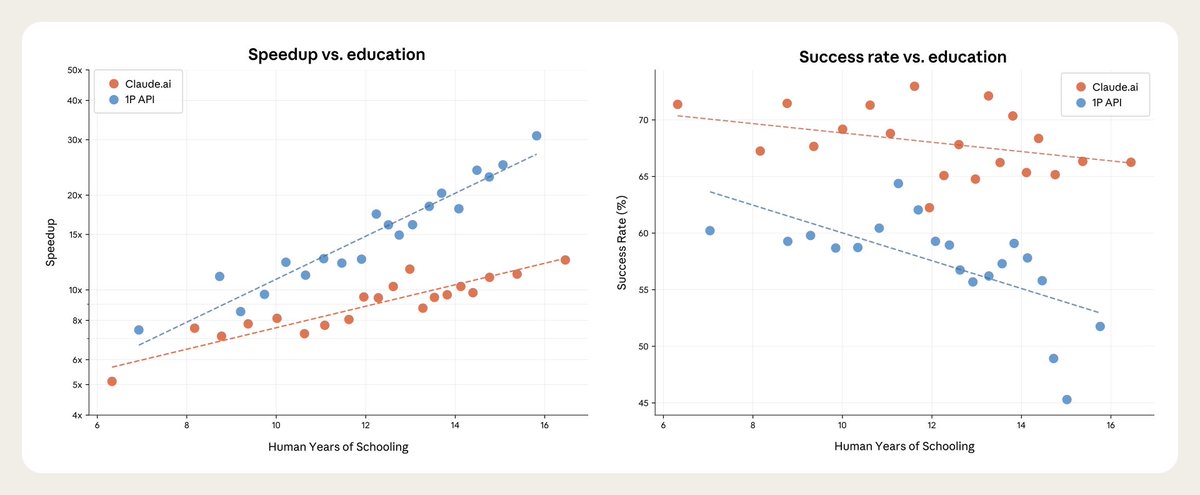

Developers can now direct Claude to use computers the way people do—by looking at a screen, moving a cursor, clicking, and typing text.

The new Claude 3.5 Sonnet is the first frontier AI model to offer computer use in public beta.

While groundbreaking, computer use is still experimental—at times error-prone. We're releasing it early for feedback from developers.

We've built an API that allows Claude to perceive and interact with computer interfaces.

This API enables Claude to translate prompts into computer commands. Developers can use it to automate repetitive tasks, conduct testing and QA, and perform open-ended research.
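To make "translate prompts into computer commands" concrete, here is a minimal sketch of the receiving side: a dispatcher that takes one action at a time and applies it to a screen. Everything in it is an assumption for illustration. The `FakeScreen` class, the `apply_action` function, and the action names (`screenshot`, `mouse_move`, `left_click`, `type`) are hypothetical and do not come from this thread. It records actions rather than touching a real desktop.

```python
from dataclasses import dataclass, field


@dataclass
class FakeScreen:
    """Hypothetical stand-in for a desktop: records actions instead of performing them."""
    cursor: tuple = (0, 0)
    typed: list = field(default_factory=list)
    log: list = field(default_factory=list)


def apply_action(screen: FakeScreen, action: dict) -> str:
    """Apply one model-requested action (hypothetical schema) and return a log entry."""
    kind = action["action"]
    if kind == "screenshot":
        # A real harness would capture the display and send the image back to the model.
        entry = "screenshot"
    elif kind == "mouse_move":
        x, y = action["coordinate"]
        screen.cursor = (x, y)
        entry = f"move to ({x}, {y})"
    elif kind == "left_click":
        entry = f"click at {screen.cursor}"
    elif kind == "type":
        screen.typed.append(action["text"])
        entry = f"type {action['text']!r}"
    else:
        # Refuse anything unrecognized rather than guessing what the model meant.
        raise ValueError(f"unsupported action: {kind}")
    screen.log.append(entry)
    return entry


if __name__ == "__main__":
    screen = FakeScreen()
    requested = [
        {"action": "screenshot"},
        {"action": "mouse_move", "coordinate": [40, 120]},
        {"action": "left_click"},
        {"action": "type", "text": "hello"},
    ]
    for step in requested:
        print(apply_action(screen, step))
```

In a real setup the loop would run the other way too: each result (for example, a fresh screenshot) goes back to the model, which then picks the next action. Rejecting unknown actions outright is a deliberate choice here, in keeping with the advice to stick to low-risk tasks while the capability is experimental.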

We're trying something fundamentally new.

Instead of making specific tools to help Claude complete individual tasks, we're teaching it general computer skills—allowing it to use a wide range of standard tools and software programs designed for people.

Claude 3.5 Sonnet's current ability to use computers is imperfect. Some actions that people perform effortlessly, such as scrolling, dragging, and zooming, currently present challenges, so we encourage developers to start with low-risk tasks.

We expect this to rapidly improve in the coming months.

Even while recording these demos, we encountered some amusing moments. In one, Claude accidentally stopped a long-running screen recording, causing all footage to be lost.

Later, Claude took a break from our coding demo and began to peruse photos of Yellowstone National Park.

Beyond computer use, the new Claude 3.5 Sonnet delivers significant gains in coding—an area where it already led the field.

Sonnet scores higher on SWE-bench Verified than all available models—including reasoning models like OpenAI o1-preview and specialized agentic systems.

Claude 3.5 Haiku is the next generation of our fastest model.

Haiku now outperforms many state-of-the-art models on coding tasks—including the original Claude 3.5 Sonnet and GPT-4o—at the same cost as before.

The new Claude 3.5 Haiku will be released later this month.

We believe these developments will open up new possibilities for how you work with Claude, and we look forward to seeing what you'll create.

Read the updates in full: anthropic.com/news/3-5-model…
