This could be an incredible revolution in Cosmology.

The Dark Energy model of the universe, built on the discovery of accelerating expansion that won the 2011 Nobel Prize in Physics, may be completely wrong.

The accelerating expansion is instead simply a consequence of time running faster in the voids between galaxies.

Let me explain:

The standard workhorse of cosmology for the last 25 years has been the Lambda Cold Dark Matter (ΛCDM) model, which assumes the universe is roughly homogeneous.

Originally, Lambda was Einstein's 'fudge factor' to explain why the universe wasn't collapsing under its own weight.

In his time, scientists thought the universe was static, neither expanding nor contracting. Within general relativity (GR), however, such a universe should collapse under the force of gravity.

Einstein added this Lambda parameter to counterbalance gravity and yield a static universe.
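
In modern notation (the Friedmann acceleration equation, which postdates Einstein's 1917 model and is not part of the original thread), the balance looks like this. Here a is the cosmic scale factor, ρ the mass density, p the pressure, G Newton's constant and Λ the cosmological constant. Setting the acceleration to zero for a universe of pressureless matter fixes Λ in terms of the density:

```latex
\frac{\ddot{a}}{a} = -\frac{4\pi G}{3}\left(\rho + \frac{3p}{c^2}\right) + \frac{\Lambda c^2}{3}

% Static universe (\ddot{a} = 0) filled with pressureless matter (p = 0):
0 = -\frac{4\pi G}{3}\,\rho + \frac{\Lambda c^2}{3}
\quad\Longrightarrow\quad
\Lambda = \frac{4\pi G \rho}{c^2}
```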

In 1929, Edwin Hubble made an incredible discovery: the further away a galaxy was, the faster it appeared to be moving away from us.

He measured the distances to galaxies using Cepheid variable stars, which have a consistent relationship between their brightness and pulsation period, so they act as excellent distance markers.

Using these markers Hubble found that the more distant a galaxy was, the more red-shifted its spectrum of light became, meaning it was moving away from us at a higher velocity.

The further the distance to a galaxy, the faster it was receding. Therefore, the universe was expanding.
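
Hubble's observation is usually summarized as a linear law, v = H0 × d. Here is a minimal Python sketch, assuming a round H0 of 70 km/s/Mpc (a modern ballpark, not Hubble's own 1929 value) and the low-redshift approximation z ≈ v/c. The function names are hypothetical:

```python
# Hubble's law, v = H0 * d, plus the low-redshift approximation z ~ v / c.
C_KM_S = 299_792.458  # speed of light in km/s


def recession_velocity(distance_mpc: float, h0: float = 70.0) -> float:
    """Recession velocity in km/s for a galaxy distance_mpc megaparsecs away.

    h0 is the Hubble constant in km/s/Mpc; 70 is an assumed round value.
    """
    return h0 * distance_mpc


def low_z_redshift(velocity_km_s: float) -> float:
    """Approximate redshift z ~ v / c, only valid when v is much smaller than c."""
    return velocity_km_s / C_KM_S


v = recession_velocity(100.0)  # a galaxy 100 Mpc away
z = low_z_redshift(v)
print(f"v = {v:.0f} km/s, z = {z:.4f}")  # v = 7000 km/s, z = 0.0233
```

Doubling the distance doubles the recession speed, which is exactly the "further away, faster receding" pattern.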

The fact that the universe was expanding meant Lambda was no longer needed to balance out gravity, and Einstein reportedly called it his 'greatest blunder'.

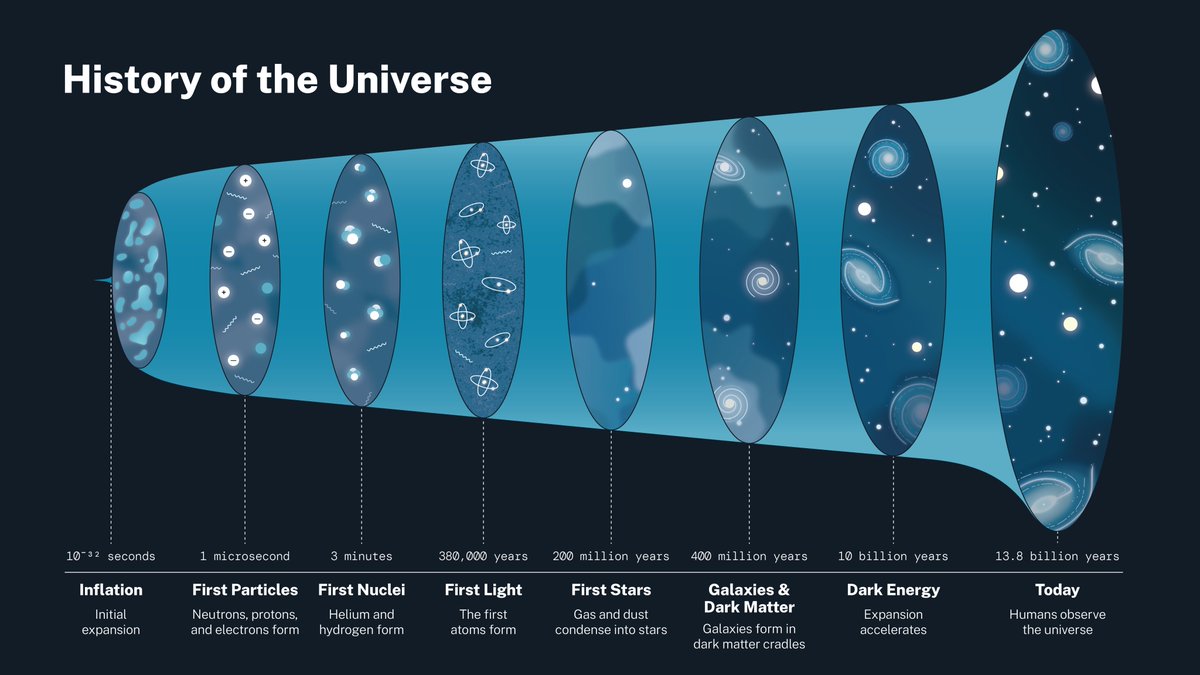

If you run this expanding universe backwards in time, everything seems to expand from a single origin point. And in the 1960s, astronomers detected faint microwave radiation coming from all directions in space.

The faint echoes of the original Big Bang.

This model of the universe, where things started from a single origin and uniformly expanded outwards, dominated for decades until the 1990s.

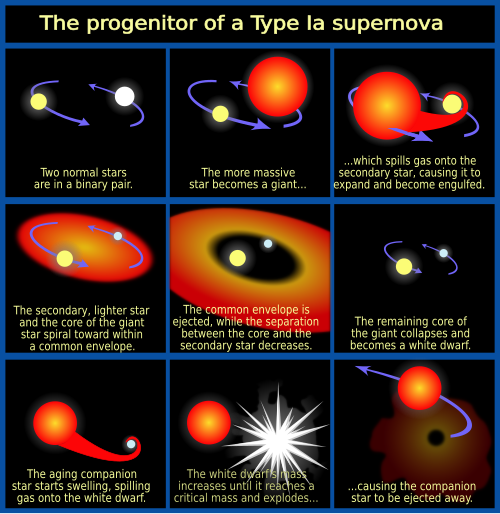

Astronomers were observing Type Ia supernovae at high redshift to measure how much the expansion of the universe was slowing down over time.

Type Ia supernovae are useful as 'standard candles' because they have nearly the same peak brightness. They occur when a white dwarf strips mass from its binary partner until it approaches the Chandrasekhar limit (about 1.4 solar masses) from below, at which point it explodes. So they always explode with roughly the same energy and brightness.
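
A standard candle turns brightness into distance through the distance modulus, m - M = 5 log10(d / 10 pc). In this relation, m is the observed apparent magnitude, M is the known absolute magnitude, and d is the luminosity distance. Here is a minimal sketch, assuming M ≈ -19.3 as a typical Type Ia peak absolute magnitude. The constant and function names are hypothetical, and real analyses also correct for dust, K-corrections and light-curve shape:

```python
# Assumed typical peak absolute magnitude of a Type Ia supernova (illustrative).
M_TYPE_IA = -19.3


def distance_pc(apparent_mag: float, absolute_mag: float = M_TYPE_IA) -> float:
    """Invert the distance modulus m - M = 5*log10(d / 10 pc); returns d in parsecs.

    Ignores dust extinction, K-corrections and light-curve standardization.
    """
    mu = apparent_mag - absolute_mag  # distance modulus
    return 10 ** (mu / 5 + 1)


d = distance_pc(20.7)  # m - M = 40, so d = 10**9 pc
print(f"{d / 1e6:.0f} Mpc")  # 1000 Mpc
```

A supernova that looks 5 magnitudes fainter comes out 10 times farther away, which is why unexpectedly dim supernovae mean unexpectedly large distances.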

Instead, they found that high-redshift supernovae were dimmer, and therefore farther away, than expected if gravity had been slowing the expansion.

On the contrary, the rate of expansion appeared to be accelerating. Lambda, the cosmological constant, was so back.
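
To see how much dimmer, we can compare the predicted distance modulus of a supernova at the same redshift in two spatially flat universes. One contains matter plus a cosmological constant (Ωm = 0.3, ΩΛ = 0.7); the other contains matter only, so its expansion decelerates (Ωm = 1, ΩΛ = 0). Here is a minimal sketch using the standard flat-FLRW luminosity distance. The parameter values, z = 0.5, H0 = 70 km/s/Mpc and the helper names are illustrative assumptions:

```python
import math

C_KM_S = 299_792.458  # speed of light, km/s


def simpson(f, a, b, n=1000):
    """Composite Simpson's rule for the integral of f from a to b (n forced even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def luminosity_distance_mpc(z, omega_m, omega_lambda, h0=70.0):
    """Luminosity distance in a spatially flat universe (omega_m + omega_lambda = 1)."""
    def inv_e(zp):
        # 1 / E(z), where E(z) = H(z) / H0 for matter plus a cosmological constant
        return 1.0 / math.sqrt(omega_m * (1 + zp) ** 3 + omega_lambda)

    comoving_mpc = (C_KM_S / h0) * simpson(inv_e, 0.0, z)
    return (1 + z) * comoving_mpc


def distance_modulus(d_mpc):
    """m - M for a luminosity distance in Mpc (10 pc = 1e-5 Mpc)."""
    return 5 * math.log10(d_mpc / 1e-5)


z = 0.5
mu_lcdm = distance_modulus(luminosity_distance_mpc(z, 0.3, 0.7))
mu_decel = distance_modulus(luminosity_distance_mpc(z, 1.0, 0.0))
print(f"Extra dimming at z={z}: {mu_lcdm - mu_decel:.2f} mag")
```

The difference comes out at roughly 0.4 magnitudes, meaning about 30% less light than the decelerating, matter-only universe predicts. This is the kind of gap the supernova surveys were sensitive to.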

This is the current mainstream cosmological model. Dark Energy, a mysterious entity that accounts for about 69% of the universe's total energy content, has the same density in every volume of space. As space expands there is more of it, driving even more expansion, which is why the expansion is accelerating.
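
One compact way to see why a constant energy density per volume leads to acceleration is the present-day deceleration parameter, q0 = Ωm/2 - ΩΛ. This formula applies to a universe of matter plus a cosmological constant with radiation neglected. Matter dilutes as space grows while Λ does not, so the expansion speeds up (q0 < 0) once ΩΛ exceeds half of Ωm. Here is a minimal sketch, assuming Ωm ≈ 0.31 and ΩΛ ≈ 0.69 to match the 69% figure. The function name is hypothetical:

```python
def deceleration_parameter(omega_m: float, omega_lambda: float) -> float:
    """Present-day q0 for matter plus a cosmological constant (radiation neglected).

    q0 > 0 means the expansion is slowing down; q0 < 0 means it is speeding up.
    """
    return omega_m / 2 - omega_lambda


print(deceleration_parameter(0.31, 0.69))  # about -0.535: accelerating
print(deceleration_parameter(1.0, 0.0))    # 0.5: matter only, decelerating
```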

Here's where it might be completely wrong: it assumes that space is homogeneous, with roughly the same density everywhere.

However, space is extremely inhomogeneous: there are regions of dense galactic clusters, and then massive voids between them.

Mass curves spacetime to produce gravity. And locally, there's no difference between sitting in a gravitational field and accelerating in a rocket.

This is called Einstein's equivalence principle, and applying it to an inhomogeneous cosmology may eliminate Dark Energy altogether.

This is because the deeper you are in a gravitational well, the slower clocks run compared to clocks higher up.

You can work this out yourself by considering a photon bouncing between two mirrors at the top and bottom of an accelerating rocket. Exercise for the reader.
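
For readers who want to check their answer, here is a minimal first-order sketch of the rocket argument. It is valid only while g·h/c² is tiny and ignores higher-order special-relativistic corrections. The function name and the 100 m, 9.81 m/s² example are hypothetical:

```python
C = 299_792_458.0  # speed of light, m/s


def fractional_rate_difference(g: float, height: float) -> float:
    """First-order fraction by which a clock at the top of a rocket accelerating
    at g runs fast relative to an identical clock `height` metres below it.

    A light pulse from the bottom takes about height / c to reach the top.
    In that time the rocket gains speed dv = g * height / c, so the top
    receives the pulse Doppler redshifted by dv / c = g * height / c**2,
    i.e. it sees the bottom clock ticking slow. By the equivalence principle
    the same holds for clocks at different heights in a uniform field g.
    """
    travel_time = height / C
    dv = g * travel_time
    return dv / C


# Hypothetical example: two clocks 100 m apart in Earth-like gravity.
print(fractional_rate_difference(9.81, 100.0))  # roughly 1.1e-14
```

The effect is tiny for a rocket or a building, but it grows with the depth of the potential well and keeps accumulating over time.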

The important thing is this: in the voids between large galaxies, time runs faster than inside galaxies, and because matter is distributed so unevenly, clock rates differ from region to region.

Starting from the same initial Big Bang expansion, more time has elapsed in the voids than in galaxies, possibly billions of years more.
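
To see how a modest difference in clock rates can add up to billions of years, here is a toy calculation. Every number in it is a hypothetical assumption chosen for illustration. The model assumes a 13.8 Gyr span measured by galaxy clocks and a void clock running 20% fast today, with that excess growing linearly from zero. This is not the Timescape model's actual clock-rate relation:

```python
def extra_void_time_gyr(total_gyr: float, excess_today: float, steps: int = 1000) -> float:
    """Extra time accumulated by a void clock relative to a galaxy clock, in Gyr.

    Toy assumption (hypothetical, not Timescape's equations): the void clock's
    fractional rate excess grows linearly from 0 at the Big Bang to
    `excess_today` now. Integrated with the midpoint rule over galaxy-clock time.
    """
    dt = total_gyr / steps
    extra = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt                       # midpoint of this time step
        excess = excess_today * t / total_gyr    # fractional rate excess at time t
        extra += excess * dt
    return extra


print(f"{extra_void_time_gyr(13.8, 0.2):.2f} Gyr")  # 1.38 Gyr
```

Even under these made-up inputs, a rate difference of a few tens of percent at most translates into more than a billion years of extra elapsed time.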

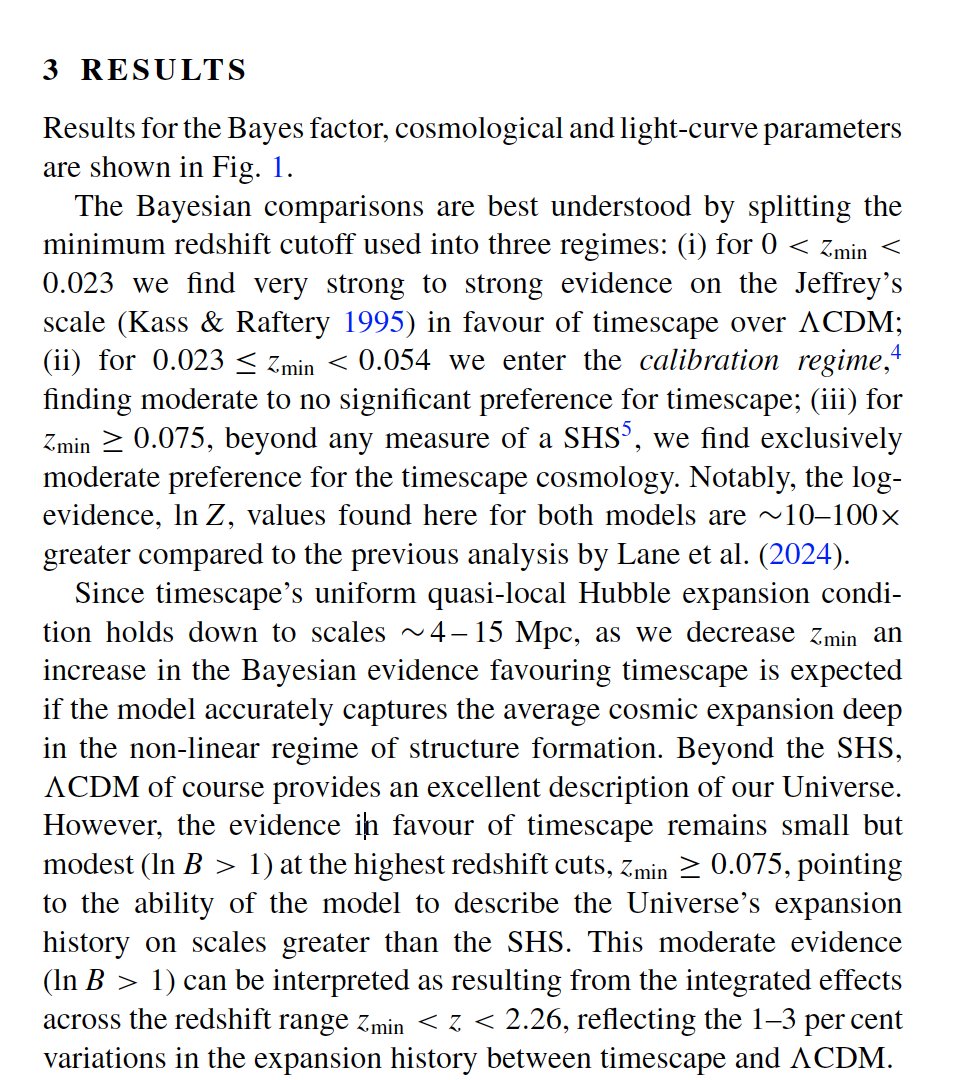

The researchers behind the Timescape model, as it's known, used the familiar Type Ia supernovae as standard candles. They compared Bayesian goodness-of-fit statistics to assess how well Timescape and ΛCDM each explain the observed relation between supernova redshift and brightness.
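
The thread doesn't spell out the researchers' exact statistical procedure. This sketch swaps in the Bayesian Information Criterion (BIC), a common rough approximation to Bayesian model comparison, to show the general idea of scoring two models against the same data. The function names are hypothetical, and the numbers are made-up toy values, not real supernova data or either cosmological model's actual predictions:

```python
import math


def gaussian_log_likelihood(observed, predicted, sigma):
    """Log-likelihood of observed values given model predictions, assuming
    independent Gaussian errors with standard deviations `sigma`."""
    return sum(
        -0.5 * ((o - p) / s) ** 2 - math.log(s * math.sqrt(2 * math.pi))
        for o, p, s in zip(observed, predicted, sigma)
    )


def bic(log_likelihood, n_params, n_data):
    """Bayesian Information Criterion; lower values are preferred."""
    return n_params * math.log(n_data) - 2 * log_likelihood


def approx_ln_bayes_factor(bic_a, bic_b):
    """Schwarz approximation: ln(evidence_a / evidence_b) ~ -(BIC_a - BIC_b) / 2."""
    return -0.5 * (bic_a - bic_b)


# Made-up toy numbers for illustration only, NOT real supernova data.
observed = [40.0, 42.1, 43.0]  # "observed" distance moduli
model_a = [40.0, 42.0, 43.1]   # predictions of hypothetical model A
model_b = [39.8, 41.8, 42.7]   # predictions of hypothetical model B
sigma = [0.1, 0.1, 0.1]

bic_a = bic(gaussian_log_likelihood(observed, model_a, sigma), n_params=2, n_data=3)
bic_b = bic(gaussian_log_likelihood(observed, model_b, sigma), n_params=2, n_data=3)
print(approx_ln_bayes_factor(bic_a, bic_b))  # about 10: favors model A
```

A positive log Bayes factor favors model A; the BIC also penalizes extra free parameters, so a model cannot win just by being more flexible.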

Now, you can use a lot of statistical trickery to get a result that favors your own pet theory. So why should the Timescape model be taken seriously? Two big reasons:

- The universe is emphatically not homogeneous, so void regions DO have a faster clock rate than galactic superclusters and filaments, and this should be accounted for in any model of expansion.

- Dark Energy is supposed to make up ~2/3 of the universe's energy content, yet it doesn't correspond to anything we've ever observed experimentally. It exists solely to explain the anomalous dimming of distant, high-redshift supernovae.

In the Timescape model, the expansion of the universe may well be slowing down after the initial Big Bang; that is yet to be determined. The ultimate fate of the cosmos remains an open question, as advances in cosmology regularly force us to revisit our models.
