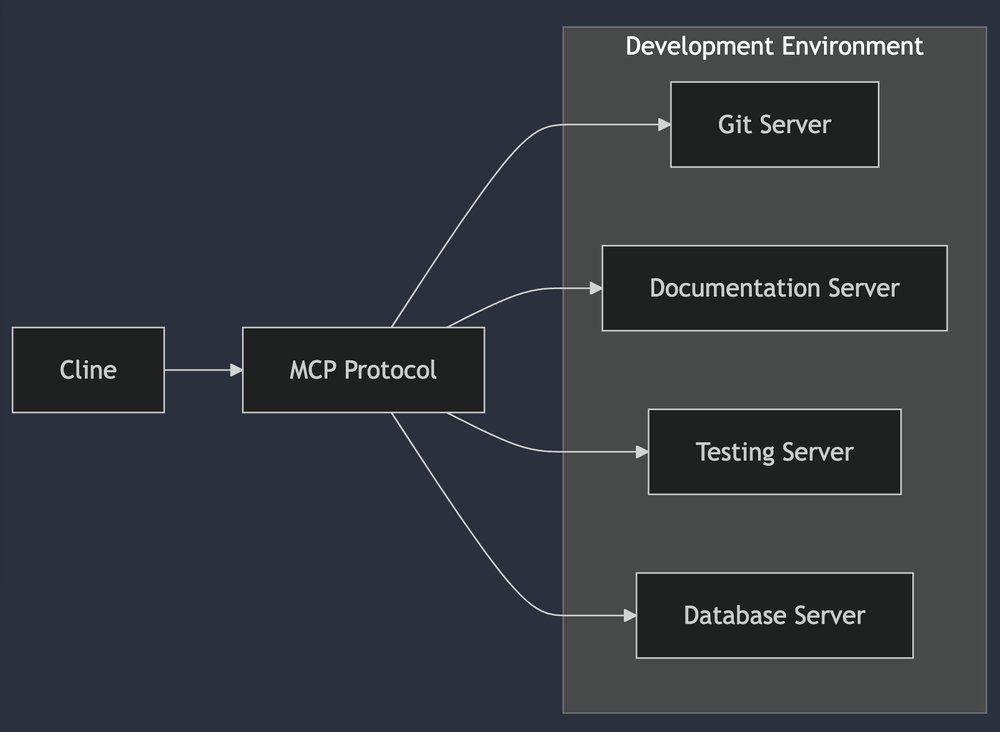

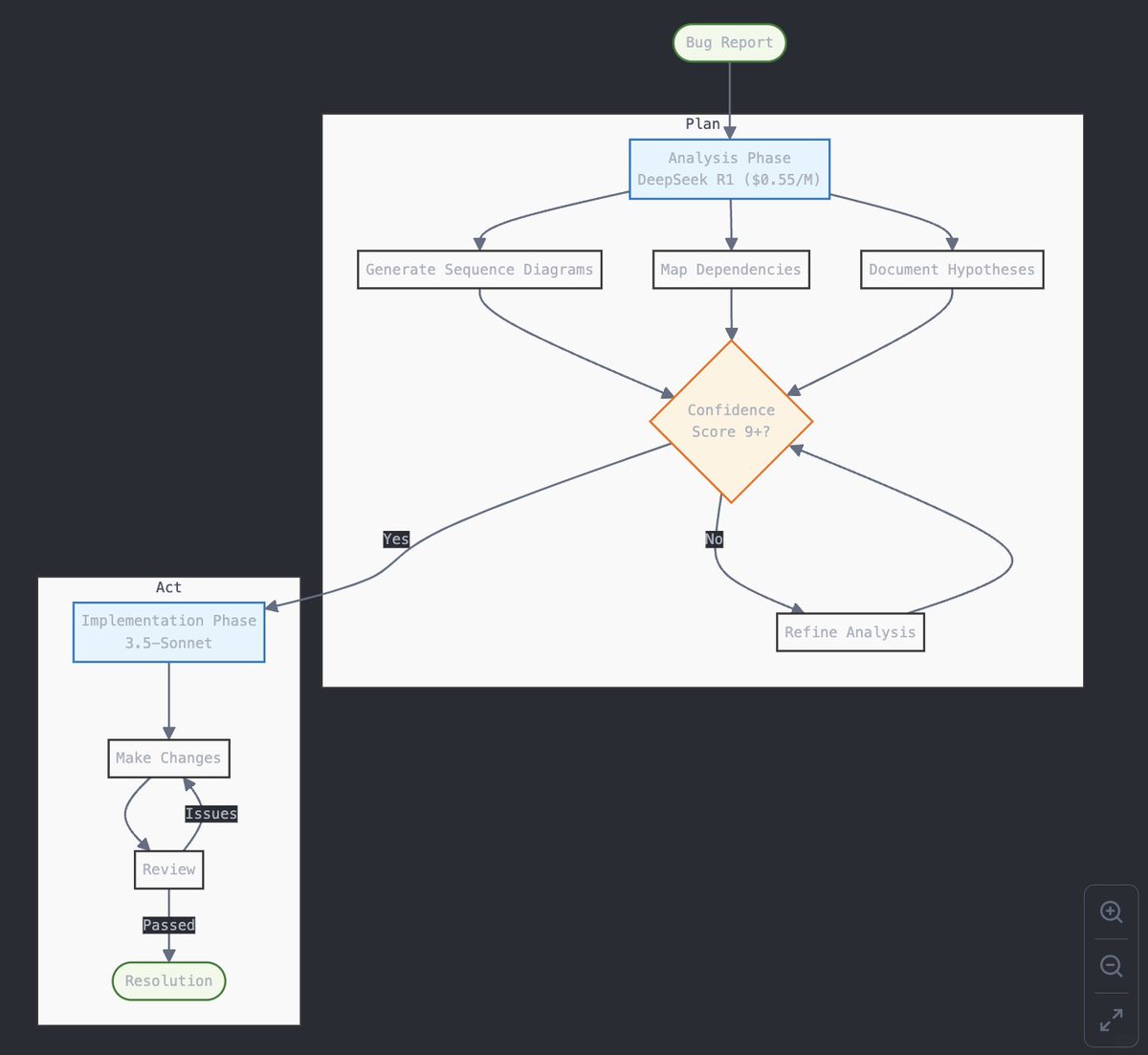

Looking at debugging patterns from our Cline power users, one insight stands out: they use DeepSeek R1 ($0.55/M tokens) as a 'code archaeologist' before touching anything.

🧵

1/ First, they have R1 analyze the codebase architecture and create sequence diagrams. Our most successful users report this step alone catches potential issues before they become problems.

"R1's reasoning is top tier for planning. The more sequence diagrams I fill my context with, the higher the accuracy."

2/ Then they use Plan mode to:

- Map dependencies

- Identify potential edge cases

- Create hypothesis.md with [x][] format

- Generate minimal test cases

"Ask it to create test files that ONLY figure out one issue with minimal logs. You can only output 200 lines to analyze."

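A minimal sketch of what the hypothesis file and the log cap could look like in practice. It assumes "[x][] format" means a GitHub-style task list (`- [x]` for a resolved hypothesis, `- [ ]` for an open one) and that the 200-line limit is enforced by keeping the tail of the log. The helper names `render_checklist`, `open_hypotheses`, and `cap_log` are hypothetical, not part of Cline.

```python
import re

MAX_LOG_LINES = 200  # the cap quoted in the thread


def render_checklist(hypotheses):
    """Render (text, checked) pairs as task-list lines for hypothesis.md.

    ASSUMPTION: "[x][] format" is read as a GitHub-style task list.
    """
    return "\n".join(
        f"- [{'x' if done else ' '}] {text}" for text, done in hypotheses
    )


def open_hypotheses(markdown):
    """Return the text of every unchecked item in the checklist."""
    return re.findall(r"^- \[ \] (.+)$", markdown, flags=re.MULTILINE)


def cap_log(log, limit=MAX_LOG_LINES):
    """Keep only the last `limit` lines of a log before handing it to the model.

    ASSUMPTION: the tail of the log is the most relevant part.
    """
    if limit <= 0:
        return ""
    lines = log.splitlines()
    return "\n".join(lines[-limit:])
```

Keeping one hypothesis per line makes it easy to see what is still unverified before moving on to implementation.
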
3/ Only THEN do they switch to Claude 3.5 Sonnet for implementation. The key insight? 90% of debugging time is spent understanding, not fixing.

"Just having it reflect on what it's done and sanity check its work every 2-3 actions has significantly reduced our error rate."

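One way to picture the "reflect every 2-3 actions" habit is a small generator that interleaves a reflection prompt into a stream of planned actions. This is only a sketch: `with_checkpoints` and `REFLECT_PROMPT` are hypothetical names, and the prompt wording is an assumption, not something Cline ships.

```python
from typing import Iterable, Iterator

# ASSUMPTION: illustrative wording, not a prompt taken from Cline.
REFLECT_PROMPT = (
    "Pause: summarize what you changed and sanity check it before continuing."
)


def with_checkpoints(actions: Iterable[str], every: int = 3) -> Iterator[str]:
    """Yield each action, inserting a reflection prompt after every `every` actions."""
    if every < 1:
        raise ValueError("every must be at least 1")
    for count, action in enumerate(actions, start=1):
        yield action
        if count % every == 0:
            yield REFLECT_PROMPT
```

With `every=2`, five actions produce two reflection prompts, after the second and fourth action.
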
4/ Cost breakdown:

- R1 investigation: $0.55/M tokens

- Sonnet implementation: Standard rates

- Time saved: Users report 50-70% faster resolution

The ROI math is obvious when you're catching architectural issues at R1 prices instead of o1.

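To make the cost comparison concrete, here is a rough sketch of the arithmetic. The $0.55/M figure comes from the thread; `COMPARISON_PRICE` is a placeholder assumption for a pricier reasoning model, so substitute your provider's current rate. The token count in the usage note is also made up for illustration.

```python
R1_PRICE = 0.55  # USD per million tokens, as quoted in the thread
COMPARISON_PRICE = 15.00  # ASSUMPTION: placeholder rate for a pricier model


def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost in USD of processing `tokens` at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million


def savings_usd(tokens: int, cheap: float = R1_PRICE,
                expensive: float = COMPARISON_PRICE) -> float:
    """Difference in cost between the two rates for the same token volume."""
    return cost_usd(tokens, expensive) - cost_usd(tokens, cheap)
```

Under these assumed rates, an investigation that consumes 2M tokens costs about $1.10 at the R1 price versus $30.00 at the placeholder rate.
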
5/ Pro tip from our power users: Always ask for confidence scores.

"Have it rate confidence 1-10 with each message. Don't proceed until you get 9+/10."

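If you want to enforce the 9+/10 rule mechanically, a sketch like the following could gate the next step. It assumes the model has been asked to end each message with a line such as `Confidence: 9/10`; that format and the helper names `parse_confidence` and `should_proceed` are hypothetical.

```python
import re
from typing import Optional

# ASSUMPTION: the model reports its score as "Confidence: N/10".
CONFIDENCE_RE = re.compile(r"confidence\s*:?\s*(\d{1,2})\s*/\s*10", re.IGNORECASE)


def parse_confidence(message: str) -> Optional[int]:
    """Extract a 1-10 confidence score from a message, or None if absent or invalid."""
    match = CONFIDENCE_RE.search(message)
    if not match:
        return None
    score = int(match.group(1))
    return score if 1 <= score <= 10 else None


def should_proceed(message: str, threshold: int = 9) -> bool:
    """Proceed only when a score is present and meets the threshold."""
    score = parse_confidence(message)
    return score is not None and score >= threshold
```

A missing score is treated as "do not proceed", which matches the spirit of waiting for an explicit 9+.
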
Save this thread for when you're debugging your next complex issue🚀

6/ Install Cline here: marketplace.visualstudio.com/items?itemName…

7/ Step-by-step to use Cline in 2 mins:

https://x.com/thankscline/status/1882594411781161319
