In this thread I want to share some thoughts about the FrontierMath benchmark, on which, according to OpenAI, some frontier models are scoring ~20%. This is a benchmark consisting of difficult math problems with numerical answers. What does it measure, and what doesn't it measure?

I'll try to organize my thoughts around this problem. To be clear I don't intend any of this as criticism of the benchmark or of Epoch AI, which I think is doing fantastic work. Please understand anything you'd read as criticism as aimed at the problem's author, namely me.

(FWIW the problem is not exactly as I wrote it--I asked for the precise value of the limit L, not its first 3 digits. The answer is the sum of the reciprocals of the first 3264 positive integers. That said I think the edit made by Epoch did not alter the question substantially.)
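For anyone who wants to see the number itself, here is a minimal sketch that evaluates that sum exactly and then prints a decimal approximation. The helper name `harmonic` is my own for illustration and is not taken from the problem statement or the official solution.

```python
from fractions import Fraction


def harmonic(n: int) -> Fraction:
    """Return 1 + 1/2 + ... + 1/n as an exact rational number."""
    return sum((Fraction(1, k) for k in range(1, n + 1)), Fraction(0))


# The limit L from the problem as originally written: the 3264th harmonic number.
L = harmonic(3264)

# Exact arithmetic avoids any floating-point accumulation error;
# we only convert to a float at the very end for display.
print(float(L))
```

Using exact fractions is overkill for three digits, but it makes it easy to trust the leading digits of the result.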

When I wrote this problem I was convinced it was far out of reach of existing models. It's not clear to me whether it was solved during the internal OpenAI evaluations, but o3-mini-high solves it fairly regularly. When o3-mini-high came out and I discovered this I was shocked.

Here is an example from today of o3-mini-high getting the right answer:

FWIW it took me 3 tries and some prompt manipulation to get this to work, but I find it exceedingly impressive. chatgpt.com/share/67cc9a1c…

The argument is more or less what I outline for the official solution, which you can see here:

It has 4 steps, which I outline below. epoch.ai/frontiermath/b…

(1) Over an algebraically closed field, the number of conics tangent to five general conics is 3264. This was discovered by a number of people in the 1850s and 1860s, correcting an earlier incorrect claim by Steiner.

(2) The "Galois group" of the relevant enumerative problem is S_3264, the full symmetric group on 3264 letters. This was proven by Harris in 1979.

(3) The number of components we are interested in is the number of cycles of Frobenius acting on this 3264-element set. As p gets large Frobenius is equidistributed in the symmetric group, by Chebotarev density.

(4) The expected number of cycles of a uniformly random permutation in S_n is the n-th harmonic number. This is a classical combinatorics fact.
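Fact (4) is the easiest of the four to sanity-check by brute force. The sketch below (my own illustration, not part of the official solution) enumerates every permutation in S_n for small n, averages the number of cycles, and compares the result with the n-th harmonic number. The function names `cycle_count`, `average_cycles`, and `harmonic` are hypothetical helpers.

```python
from fractions import Fraction
from itertools import permutations


def cycle_count(perm) -> int:
    """Count the cycles of a permutation given as a tuple mapping i -> perm[i]."""
    seen = [False] * len(perm)
    cycles = 0
    for start in range(len(perm)):
        if not seen[start]:
            cycles += 1
            j = start
            # Walk around the cycle containing `start`, marking each element.
            while not seen[j]:
                seen[j] = True
                j = perm[j]
    return cycles


def average_cycles(n: int) -> Fraction:
    """Exact average number of cycles over all n! permutations in S_n."""
    total = 0
    count = 0
    for perm in permutations(range(n)):
        total += cycle_count(perm)
        count += 1
    return Fraction(total, count)


def harmonic(n: int) -> Fraction:
    """Return 1 + 1/2 + ... + 1/n as an exact rational number."""
    return sum((Fraction(1, k) for k in range(1, n + 1)), Fraction(0))


# Brute force is only feasible for small n (7! = 5040 permutations).
for n in range(1, 8):
    assert average_cycles(n) == harmonic(n)
    print(n, average_cycles(n))
```

This only checks small cases, of course; it is not a proof, and it says nothing about facts (1)-(3).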

Putting these facts together, we win.

This is more or less what o3-mini-high seems to be doing when it successfully answers the question. To be clear, this is extremely impressive in my view.

What makes this problem hard? I think this is where I made two mistakes when I was writing it. The first thing that makes the problem hard is that it requires a lot of background--you have to know the facts 1-4 above. Most mathematicians don't know these facts.

Indeed, even understanding some of the statements (the relevant form of the Chebotarev density theorem, what the Galois group of an enumerative problem is, etc.) requires a fair amount of background.

The second thing is that proving these statements is hard. And I hadn't internalized that to answer the question YOU DON'T NEED TO PROVE THESE STATEMENTS.

Knowing obscure facts is hard for a person but much easier for an LLM. And if you ask the LLM to prove any of 1-4 above, it will typically whiff.

So what is the benchmark measuring? I think it's something like the following: (a) how much known (if possibly obscure) mathematical knowledge does the LLM have, and (b) can it match a problem to the knowledge it has memorized.

Thinking about this over the past couple of months, I've come to the conclusion that a fair amount of math research actually does have this flavor--one looks up or recalls some known facts and puts them together. This is the 90% of math research that is "routine."

What this suggests to me is that these reasoning models are not too far from being very useful aids in this part of doing math. I expect them to be regularly useful at this kind of thing by the end of the year.

What about the non-routine part of math research--coming up with genuinely new ideas or techniques, understanding previously poorly-understood structures, etc.? First, I think it's worth saying that this (i) is the important part of research, and (ii) happens pretty rarely.

I'm not necessarily skeptical that an AI tool can do this. If this incident has revealed anything to me it is that I don't necessarily fully understand what skills one needs to do non-routine mathematics work.

My sense is that the skills used to do this are quite different from what the AI is using to solve FrontierMath problems--that is, I don't think the primary skill being used is recall. It is, I think, something more like philosophy, and it's less clear how to train for it.

I've been thinking hard about how one would make a benchmark that is more about what I think of as the fundamental skills for math research. Please let me know if you have any thoughts on this.

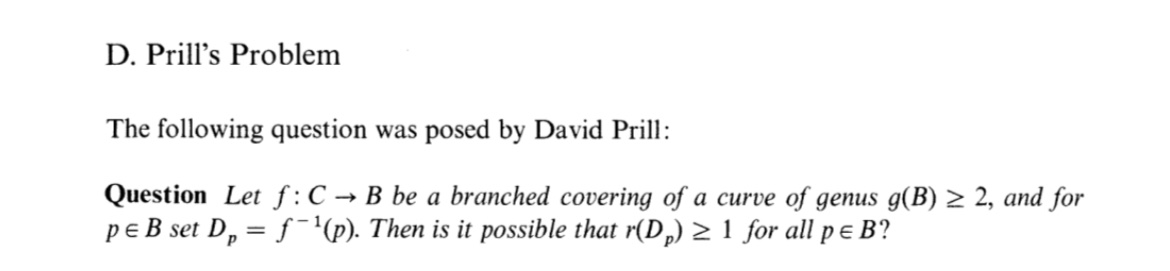

Addendum on a related problem:

https://x.com/littmath/status/1898550947955048743?s=46&t=41cpGRZavPniB0OfiIMsDA
