✦ 𝗔𝗜×𝗖𝗿𝘆𝗽𝘁𝗼 𝘁𝗵𝗲𝘀𝗶𝘀 𝟮𝟬𝟮𝟱 ✦

We are excited to share our vision of:

‣ What is the future of AI?

‣ What role will Crypto play in it?

‣ How will a 1T+ commoditized cognition market emerge?

By @vkleban & @sgershuni. Let's dive in!

cyber.fund/content/crypto…

The Endgame: Commoditized Cognition

We envision a future where anyone can invest directly in AI model inference profits.

Crypto offers perfect rails for this to emerge, through AI model tokenization & collective ownership.

But how do we put AI models on a blockchain?

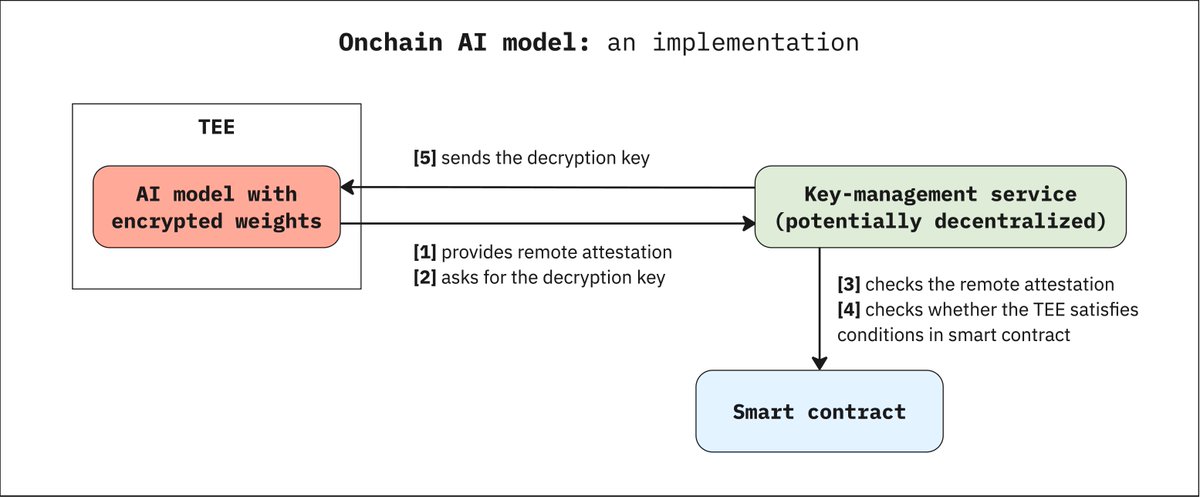

The Technology: onchain AI models

We loosely define an *onchain AI model* as one that requires smart contract approval to run.

Thus an onchain AI model’s inference is tied to its smart contract’s parameters (for example, who may call it and at what price).

This directly enables model tokenization & onchain inference monetization.
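To make the definition concrete, here is a minimal Python sketch, assuming a toy in-memory object stands in for the smart contract. `ModelContract`, `approve`, `consume`, and `run_onchain_model` are hypothetical names, not an existing API. The contract collects a fee and issues a one-time approval ticket, and the model refuses to run without one, so every inference call leaves revenue that could later be paid out to token holders.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModelContract:
    """Toy stand-in for a smart contract that gates an AI model (hypothetical)."""
    price_per_call: int          # inference fee, in the smallest token unit
    paused: bool = False         # governance could halt inference entirely
    revenue: int = 0             # fees collected, later distributable to holders
    next_ticket: int = 0
    open_tickets: set = field(default_factory=set)

    def approve(self, payment: int) -> int:
        """Record the payment and issue a one-time approval ticket."""
        if self.paused:
            raise PermissionError("model is paused by the contract")
        if payment < self.price_per_call:
            raise ValueError("payment below the contract's inference price")
        self.revenue += payment
        self.next_ticket += 1
        self.open_tickets.add(self.next_ticket)
        return self.next_ticket

    def consume(self, ticket: int) -> None:
        """Redeem a ticket exactly once; unknown or reused tickets are rejected."""
        if ticket not in self.open_tickets:
            raise PermissionError("no valid contract approval for this call")
        self.open_tickets.remove(ticket)


def run_onchain_model(contract: ModelContract, ticket: int,
                      model: Callable[[str], str], prompt: str) -> str:
    # The model runs only after the contract's approval has been redeemed.
    contract.consume(ticket)
    return model(prompt)
```

Calling `run_onchain_model` a second time with the same ticket raises `PermissionError`, which is the property that ties each inference to an onchain approval.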

The Rails: TEEs & Crypto

How does one implement an onchain AI model?

There are various ways; the basic scheme depicted below gives a good idea of the general implementation design space.
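One point in this design space can be sketched in Python, assuming the model weights live inside a TEE that only runs inference against an approval issued by the contract. This is an illustrative toy, not a real TEE or attestation SDK: an HMAC over a hypothetical shared key (`CONTRACT_KEY`) stands in for the contract's signature, and the SHA-256 `receipt` stands in for an attested signature over the output.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret standing in for the contract's signing key.
# A real deployment would verify public-key signatures inside the enclave.
CONTRACT_KEY = b"demo-contract-key"


def _sign(body: dict) -> str:
    msg = json.dumps(body, sort_keys=True).encode()
    return hmac.new(CONTRACT_KEY, msg, hashlib.sha256).hexdigest()


def contract_sign_approval(request_id: str, model_hash: str) -> dict:
    """Outside the enclave: the contract authorizes one request for one model."""
    body = {"request_id": request_id, "model_hash": model_hash}
    return {**body, "sig": _sign(body)}


class Enclave:
    """Toy TEE: holds the weights and runs only with a valid, unused approval."""

    def __init__(self, weights: bytes):
        self.weights = weights
        self.model_hash = hashlib.sha256(weights).hexdigest()
        self.used: set[str] = set()

    def infer(self, approval: dict, prompt: str) -> dict:
        body = {k: v for k, v in approval.items() if k != "sig"}
        if not hmac.compare_digest(_sign(body), approval.get("sig", "")):
            raise PermissionError("invalid contract approval")
        if body.get("model_hash") != self.model_hash:
            raise PermissionError("approval is for a different model")
        if body.get("request_id") in self.used:
            raise PermissionError("approval already used")
        self.used.add(body["request_id"])
        output = prompt[::-1]  # placeholder for the real model forward pass
        # Receipt binding model, request and output; a real TEE would sign it
        # with a key covered by its remote attestation.
        receipt = hashlib.sha256(
            (self.model_hash + body["request_id"] + output).encode()
        ).hexdigest()
        return {"output": output, "receipt": receipt}
```

A production design would replace the shared secret with public-key signatures and have the enclave sign receipts with a key bound to its remote attestation report.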

The Future Landscape: AI-aware market mechanisms

Once onchain AI models are implemented and tokenized, what will the resulting commoditized cognition market look like?

We expect a variety of novel market mechanisms to emerge. The most interesting ones will be:

1. Inference profit distribution to token holders

2. Revenue-sharing with onchain AI model’s fine-tunes & other derivatives

3. Integration of AI models in trading & lending markets

4. Pricing and insurance of the unpredictable inference-time compute
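Mechanisms 1 and 2 reduce to simple accounting. The sketch below assumes integer token units, a pro-rata payout by token balance, a hypothetical fixed royalty rate that a fine-tune forwards to its parent model, and an arbitrary rule that rounding dust goes to the largest holder; none of these parameters come from the thesis itself.

```python
from fractions import Fraction


def distribute(profit: int, balances: dict[str, int]) -> dict[str, int]:
    """Split `profit` pro rata by token balance, in integer units."""
    supply = sum(balances.values())
    if supply <= 0:
        raise ValueError("no tokens outstanding")
    payouts = {holder: profit * bal // supply for holder, bal in balances.items()}
    # Floor division leaves a few units of dust; give them to the largest holder.
    dust = profit - sum(payouts.values())
    payouts[max(balances, key=balances.get)] += dust
    return payouts


def split_with_parent(revenue: int, royalty: Fraction) -> tuple[int, int]:
    """A fine-tune keeps part of its revenue and forwards `royalty` to its parent."""
    to_parent = int(revenue * royalty)  # rounds down for non-negative revenue
    return revenue - to_parent, to_parent
```

For example, a fine-tune earning 1000 units with a 20% royalty keeps 800 and forwards 200 to its parent; `distribute(200, {"alice": 60, "bob": 40})` then pays 120 and 80 to the parent's token holders.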

***

We now turn to the critical AI industry trends that will shape the commoditized cognition market.

To set the stage, LLM training consists of:

‣ *Pre-training* the main next-symbol prediction model;

‣ *Instruction fine-tuning* the model on Q&A data;

‣ *Alignment* via RLHF.

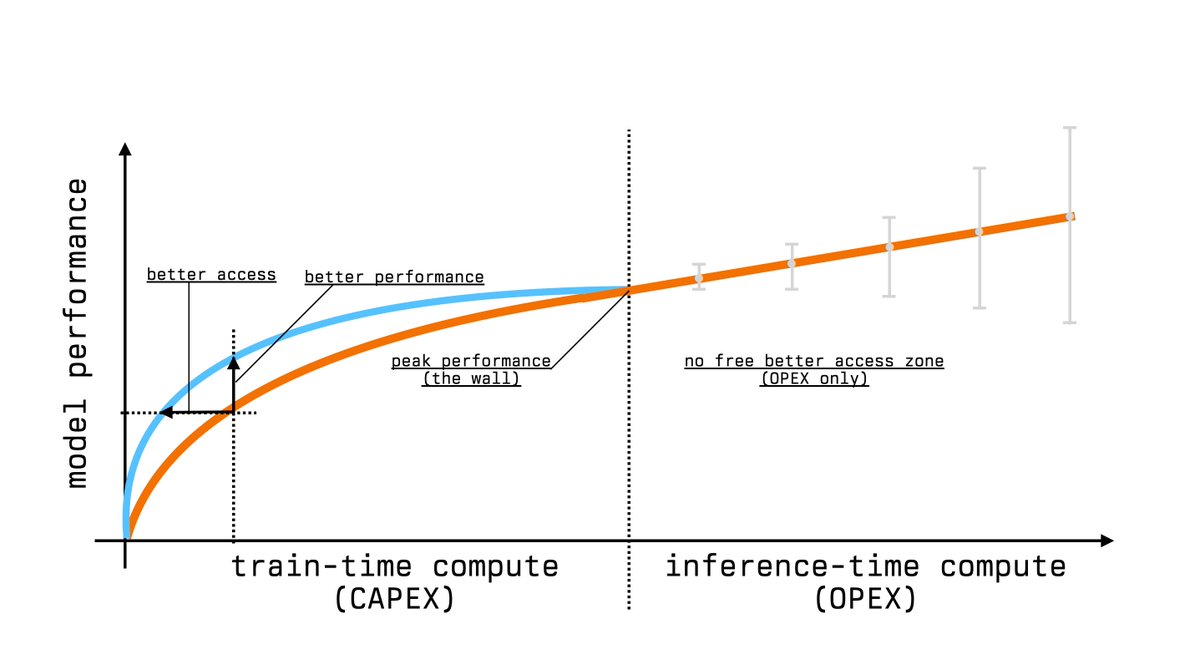

Sutskever’s Wall

It has become evident that pre-training has hit a critical plateau, the Data Wall: with the supply of high-quality training data running out, additional compute no longer yields significant performance improvements.

As a result, in 2025 the AI industry will develop in the following directions.

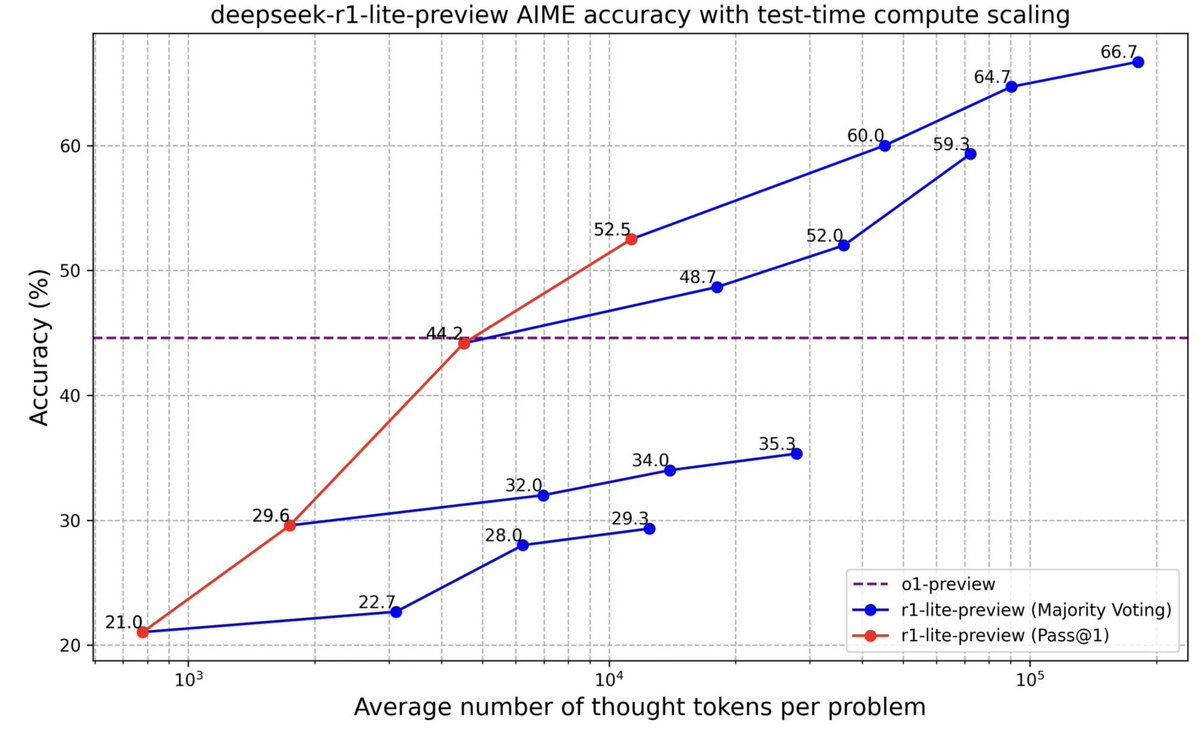

>> Reasoning: Inference-time Compute

The Wall implies that AI has reached the ceiling for “intuitive” one-off answers.

The next frontier is “reasoning” models (like o1 & o3), which perform chain-of-thought reasoning at inference time, climbing a new scaling law: more inference-time compute yields better performance.
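Because a reasoning model's chain of thought can vary widely in length, the cost of a single call is not known upfront, which is what makes mechanism 4 above (pricing of inference-time compute) non-trivial. A minimal sketch, assuming a flat hypothetical per-token price and a caller-set token cap: the caller escrows the worst-case cost and is refunded for tokens not used. `ComputeEscrow` is an illustrative name, not an existing API.

```python
from dataclasses import dataclass


@dataclass
class ComputeEscrow:
    """Hypothetical escrow for a reasoning call whose token usage is unknown upfront."""
    price_per_token: int   # fee per generated token (hidden reasoning + answer)
    max_tokens: int        # caller's hard cap on the reasoning budget

    def deposit(self) -> int:
        # The caller locks enough to cover the worst case.
        return self.price_per_token * self.max_tokens

    def settle(self, tokens_used: int) -> tuple[int, int]:
        """Return (charge, refund) once the actual token count is known."""
        used = min(tokens_used, self.max_tokens)  # generation is cut off at the cap
        charge = used * self.price_per_token
        return charge, self.deposit() - charge
```

With `ComputeEscrow(price_per_token=2, max_tokens=10_000)`, the deposit is 20,000 units, and a call that uses 3,500 tokens settles as a 7,000 charge and a 13,000 refund.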

>> Open-source AI: the genie is out of the bottle

For a long time GPT-4o had no comparable open-source alternative.

This changed with DeepSeek’s V3 open-weights model.

Open-source companies will continue publishing GPT-4o-level open-weights models.

>> Fine-tuning: Specialized Models

Types of fine-tuning include:

‣ Foundation level: instruction fine-tuning and alignment

‣ Domain level: specialization for fields like law, medicine, etc.

‣ User level: adaptation to an individual user’s needs

‣ Prompt level: real-time adaptation to individual prompts

The world will need tens of foundational models, hundreds of domain-level models, millions of user-level models, and trillions of prompt-level models.

On the *domain level*, enterprises will focus on *distillation* of large reasoning models into smaller domain-specific models.
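Distillation trains a small student model to imitate a large teacher. One common form, classic logit distillation with a temperature-softened KL divergence (Hinton et al., 2015), can be sketched in plain Python as below. Distilling reasoning models is also often done by fine-tuning the student on teacher-generated outputs, which this sketch does not cover, and the default temperature is an illustrative assumption.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2
```

The loss is zero when the student reproduces the teacher's softened distribution and grows as they diverge; in training it is usually combined with the ordinary loss on ground-truth labels.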

On the *user & prompt level*, individuals will develop AI agents by wrapping existing APIs and platforms.

User-level and prompt-level fine-tuning are the killer apps.

For example, a fine-tuned 3.8B-parameter model outperformed a 130B-parameter general model: arxiv.org/pdf/2410.08020

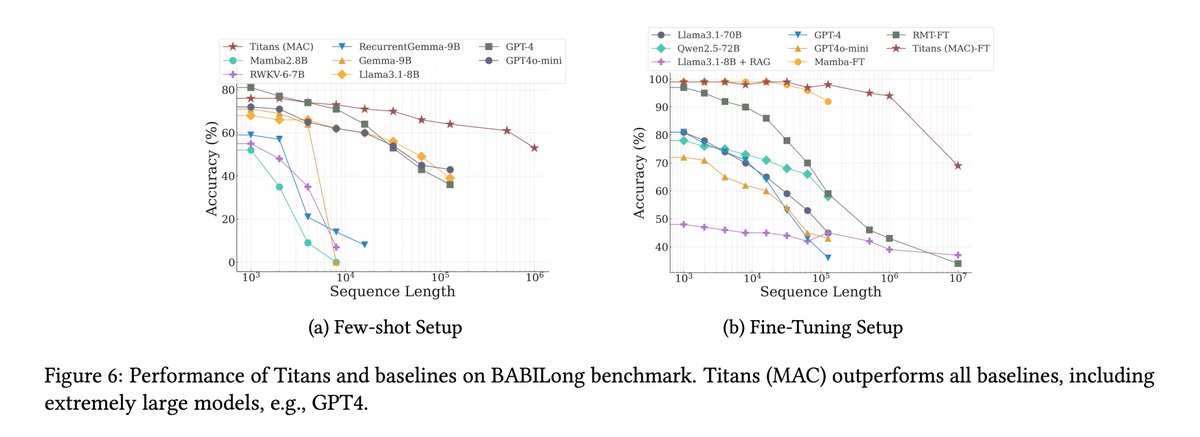

>> Memory: Gems for the GPU-poor

Context memory enables “information gains” on the user side: the more the user interacts with the model, the better the model knows the user.

Memory is a very promising direction: it is both underdeveloped and accessible to the GPU-poor.

Example: depicted are results from the Titans paper, where a small AI model acts as memory for a larger model. We believe this trend will continue.
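Titans learns its memory as a neural module; as a far simpler illustration of user-side context memory, here is a toy Python sketch, assuming a bag-of-words retriever: past interactions are stored, and the most relevant ones are prepended to the prompt sent to the larger model. `UserMemory` and its methods are hypothetical names, and this is not the Titans method.

```python
import math
import re
from collections import Counter


def _vec(text: str) -> Counter:
    # Bag-of-words term counts; a real system would use learned embeddings.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class UserMemory:
    """Toy external memory: stores past interactions, recalls the most relevant ones."""

    def __init__(self):
        self.entries: list[str] = []

    def remember(self, text: str) -> None:
        self.entries.append(text)

    def recall(self, query: str, k: int = 2) -> list[str]:
        q = _vec(query)
        ranked = sorted(self.entries, key=lambda e: _cosine(q, _vec(e)), reverse=True)
        return ranked[:k]

    def build_prompt(self, query: str, k: int = 2) -> str:
        # Recalled memories are prepended as context for the larger model.
        context = "\n".join(f"- {m}" for m in self.recall(query, k))
        return f"Known about user:\n{context}\n\nUser: {query}"
```

Each call to `remember` adds to what the model can be told about the user, which is the “information gain” described above, without retraining the larger model.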

We are closely watching many other areas & ideas around memory: memory marketplaces, mixing memories, digital personalities, etc.

Conclusion: accelerate Commoditized Cognition

We love the AI space and its pace of development.

We believe that financialization of AI will happen through Crypto.

We invest in technologies and market mechanisms that accelerate commoditization of AI.

Last but not least: we are running an accelerator together with @delphi_labs.

It is supported by @solana, @base and @ethereum.

We invite all interested founders to apply: dagi.house

Thanks go to @DennisonBertram, @sinahab, @artofkot, @ks_kulk, @Lomashuk, @Rico_Mueller, @rakhma57211, @kusichan for helpful feedback!
