We built an AI model to simulate how a fruit fly walks, flies and behaves – in partnership with @HHMIJanelia. 🪰

Our computerized insect replicates realistic motion, and can even use its eyes to control its actions.

Here’s how we developed it – and what it means for science. 🧵

To create it, we turned to MuJoCo, our open-source physics simulator – created for robotics and biomechanics – and added features such as:

▪️simulating fluid forces on the flapping wings, enabling flight

▪️adhesion actuators – mimicking the gripping force of insect feet
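For a rough sense of what "fluid forces on the flapping wings" involves, here is a textbook quasi-steady estimate of lift and drag on a thin, flat wing. This is a minimal sketch that assumes an idealized plate, where the aerodynamic force acts normal to the wing with coefficient C_N = 2 sin α. That gives C_L = 2 sin α cos α and C_D = 2 sin² α. It is not MuJoCo's own fluid model, and `flat_plate_forces` and the example inputs are hypothetical.

```python
import math


def flat_plate_forces(rho: float, area: float, speed: float, alpha: float) -> tuple[float, float]:
    """Quasi-steady lift and drag on an idealized thin flat plate.

    Hypothetical illustration (not MuJoCo's fluid model): assumes the
    force is normal to the plate with C_N = 2*sin(alpha), so
    C_L = 2*sin(alpha)*cos(alpha) and C_D = 2*sin(alpha)**2.

    rho   -- fluid density [kg/m^3]
    area  -- wing area [m^2]
    speed -- airflow speed relative to the wing [m/s]
    alpha -- angle of attack [rad]
    Returns (lift, drag) in newtons.
    """
    q = 0.5 * rho * speed ** 2                          # dynamic pressure [Pa]
    c_lift = 2.0 * math.sin(alpha) * math.cos(alpha)    # lift coefficient
    c_drag = 2.0 * math.sin(alpha) ** 2                 # drag coefficient
    return q * area * c_lift, q * area * c_drag


if __name__ == "__main__":
    # Illustrative inputs only (not measured fly data):
    # air density, a 2 mm^2 wing, 2 m/s airflow, 45 degree angle of attack.
    lift, drag = flat_plate_forces(1.2, 2e-6, 2.0, math.radians(45))
    print(f"lift={lift:.2e} N, drag={drag:.2e} N")
```

In this idealization lift peaks at 45° while drag keeps rising toward 90°. A simulator would evaluate forces like these at every timestep as the wing's speed and angle change through each stroke.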

We then trained an artificial neural network on real fly behavior from recorded videos 🎥 and let it control the model in MuJoCo.

This lets the network learn to move the virtual insect in a way that closely matches how a real fly moves.

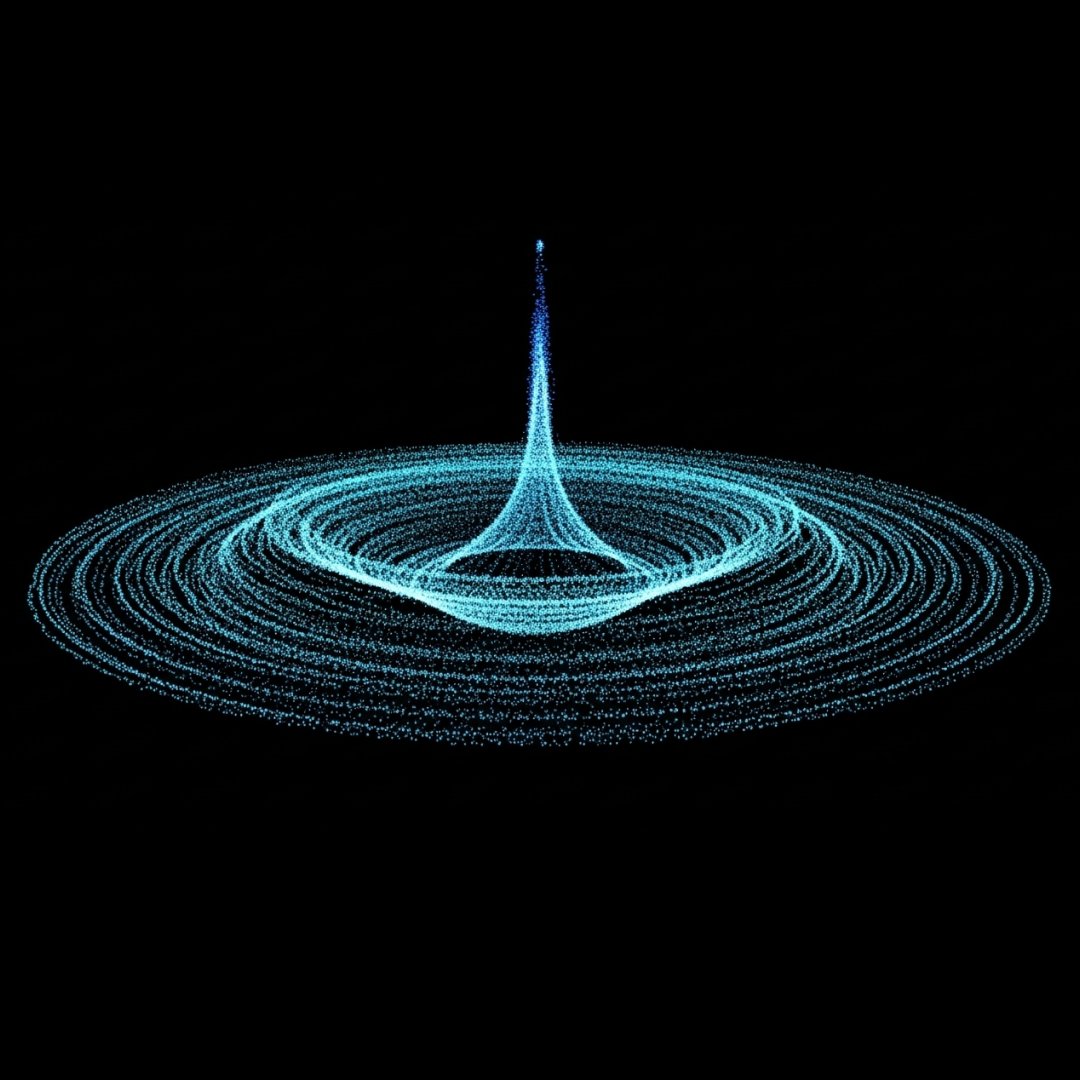
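The thread doesn't say exactly how recorded behavior becomes a training signal. One common approach is a tracking reward, which pays the controller at each simulation step for keeping the virtual body close to a reference pose extracted from video. This is a minimal sketch assuming a hypothetical exponentiated squared-error reward in the style of DeepMimic. `tracking_reward`, `episode_return` and `scale` are illustrative names and are not part of the released code.

```python
import math
from typing import Sequence


def tracking_reward(pose: Sequence[float], ref_pose: Sequence[float], scale: float = 2.0) -> float:
    """Hypothetical per-step imitation reward in (0, 1].

    Equals 1.0 when the simulated pose matches the reference exactly
    and decays toward 0 as the squared joint error grows.
    """
    if len(pose) != len(ref_pose):
        raise ValueError("pose and reference must have the same length")
    sq_err = sum((p - r) ** 2 for p, r in zip(pose, ref_pose))
    return math.exp(-scale * sq_err)


def episode_return(
    poses: Sequence[Sequence[float]],
    ref_poses: Sequence[Sequence[float]],
    scale: float = 2.0,
) -> float:
    """Sum of per-step tracking rewards over one reference clip."""
    if len(poses) != len(ref_poses):
        raise ValueError("trajectory and reference must have the same number of steps")
    return sum(tracking_reward(p, r, scale) for p, r in zip(poses, ref_poses))
```

A reinforcement-learning algorithm would then adjust the network's weights to maximize this return. The exponential keeps each step's reward bounded, so a single badly tracked frame cannot dominate the episode.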

Here, the virtual fly accurately follows complex, natural flight trajectories.

Watch how it’s instructed to follow a real path marked by the blue dots. 🔵🪰🔵

So why build these kinds of models?

We believe they could help scientists better understand how the brain, body and environment drive specific behaviors in an animal – finding connections that labs can't always measure. 🥼

We’ve already applied this approach to multiple organisms – a virtual rodent, and now a fruit fly.

So what comes next for neuroscientists? The zebrafish – a widely studied creature which shares 70% of its protein-coding genes with humans. 🐠

Find out more in @Nature ↓ nature.com/articles/s4158…

To benefit the wider research community, we’ve also open-sourced this model with @HHMIJanelia.

Here’s the code ↓ github.com/TuragaLab/flyb…