TL;DR: We built a transformer-based payments foundation model. It works.

For years, Stripe has been using machine learning models trained on discrete features (BIN, zip, payment method, etc.) to improve our products for users. And these feature-by-feature efforts have worked well: +15% conversion, -30% fraud.

But these models have limitations. We have to select (and therefore constrain) the features considered by the model. And each model requires task-specific training: for authorization, for fraud, for disputes, and so on.

Given the learning power of generalized transformer architectures, we wondered whether an LLM-style approach could work here. It wasn’t obvious that it would—payments is like language in some ways (structural patterns similar to syntax and semantics, temporally sequential) and extremely unlike language in others (fewer distinct ‘tokens’, contextual sparsity, fewer organizing principles akin to grammatical rules).

So we built a payments foundation model—a self-supervised network that learns dense, general-purpose vectors for every transaction, much like a language model embeds words. Trained on tens of billions of transactions, it distills each charge’s key signals into a single, versatile embedding.

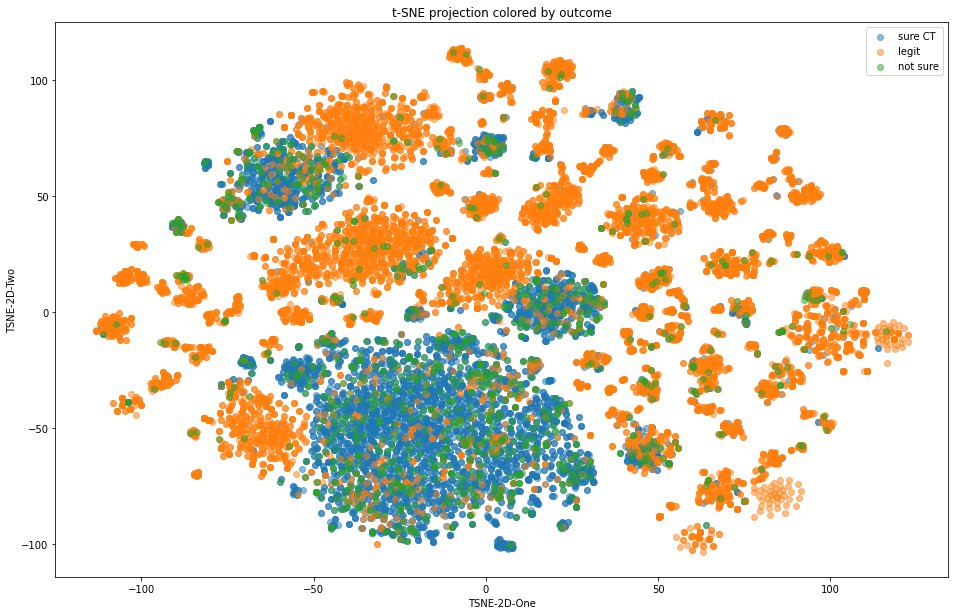

You can think of the result as a vast distribution of payments in a high-dimensional vector space. The location of each embedding captures rich data, including how different elements relate to each other. Payments that share similarities naturally cluster together: transactions from the same card issuer are positioned closer together, those from the same bank even closer, and those sharing the same email address are nearly identical.
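To make the geometry concrete, here is a minimal sketch of how "closeness" between payment embeddings might be measured with cosine similarity. The 4-dimensional vectors and their names are made up for illustration; they are not real model outputs, and the thread does not say which distance metric the model actually uses.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings (assumed values, chosen only to mirror the text).
anchor      = [0.90, 0.10, 0.30, 0.20]    # the payment we compare against
same_email  = [0.89, 0.11, 0.31, 0.19]    # shares an email address
same_bank   = [0.80, 0.25, 0.35, 0.10]    # shares a bank
same_issuer = [0.60, 0.50, 0.40, 0.05]    # shares only a card issuer
unrelated   = [-0.20, 0.90, -0.50, 0.70]  # shares nothing

sims = {
    name: cosine_similarity(anchor, vec)
    for name, vec in [
        ("same_email", same_email),
        ("same_bank", same_bank),
        ("same_issuer", same_issuer),
        ("unrelated", unrelated),
    ]
}

# Print the neighbors from most to least similar.
for name, sim in sorted(sims.items(), key=lambda kv: -kv[1]):
    print(f"{name:12s} {sim:.4f}")
```

With these toy values, the payment sharing an email address scores highest, followed by the same bank, then the same issuer, with the unrelated payment far behind.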

These rich embeddings make it significantly easier to spot nuanced, adversarial patterns of transactions, and to build more accurate classifiers based on both the features of an individual payment and its relationship to other payments in the sequence.

Take card testing. Over the past couple of years, traditional ML approaches (engineering new features, labeling emerging attack patterns, rapidly retraining our models) have reduced card testing for users on Stripe by 80%. But the most sophisticated card testers hide novel attack patterns in the volumes of the largest companies, so they’re hard to spot with these methods.

We built a classifier that ingests sequences of embeddings from the foundation model and predicts whether a traffic slice is under attack. It uses a transformer architecture to detect subtle patterns across transaction sequences. And it does all of this in real time, so we can block attacks before they hit businesses.
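As a rough illustration of the idea (not the production architecture, which the thread does not describe), the sketch below runs one layer of scaled dot-product self-attention over a sequence of embeddings, mean-pools the result, and applies a logistic output. Everything here is an assumption: the identity query/key/value projections, the pooling choice, the hand-picked weights, and the toy 3-dimensional embeddings. A real model would learn its projections and weights from labeled traffic.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(seq):
    """Scaled dot-product self-attention with identity Q/K/V projections
    (a simplification; real transformers learn these projections)."""
    d = len(seq[0])
    out = []
    for q in seq:
        # Score this payment against every payment in the slice.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in seq]
        weights = softmax(scores)
        # Weighted average of all embeddings in the slice.
        out.append([sum(w * v[i] for w, v in zip(weights, seq)) for i in range(d)])
    return out

def attack_score(seq, w, b):
    """Mean-pool the attended sequence and apply a logistic output layer."""
    attended = self_attention(seq)
    d = len(seq[0])
    pooled = [sum(vec[i] for vec in attended) / len(attended) for i in range(d)]
    logit = sum(wi * xi for wi, xi in zip(w, pooled)) + b
    return 1.0 / (1.0 + math.exp(-logit))

# Hypothetical traffic slice: four toy payment embeddings.
traffic_slice = [
    [0.9, 0.1, 0.3],
    [0.8, 0.2, 0.3],
    [0.9, 0.1, 0.2],
    [0.1, 0.9, 0.7],
]
weights = [1.5, -0.5, 0.2]  # assumed, untrained weights
bias = -0.3                 # assumed, untrained bias

score = attack_score(traffic_slice, weights, bias)
print(f"attack probability (toy, untrained): {score:.3f}")
```

The point of attending over the whole slice is that each payment's representation is mixed with those of its neighbors, so the score depends on the pattern across the sequence rather than on any single transaction.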

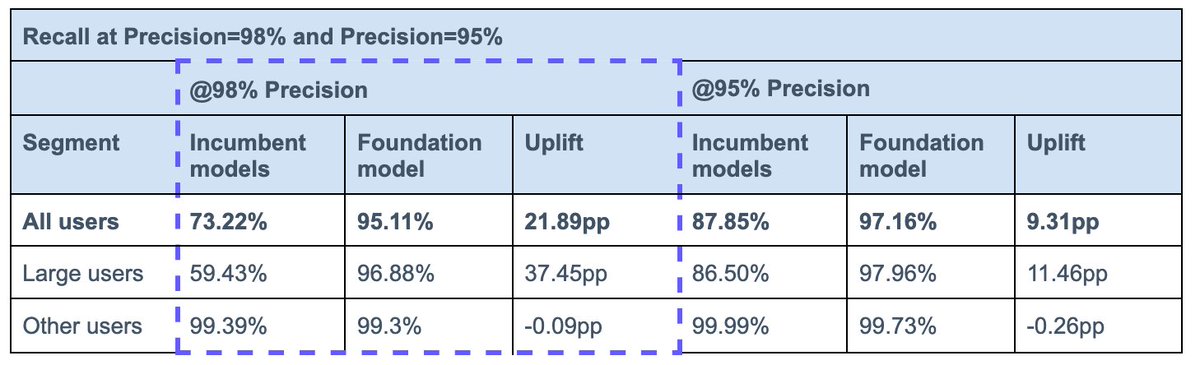

The embedding-based classifier improved our detection rate for card-testing attacks on large users from 59% to 97% overnight.

This has an instant impact for our large users. But the real power of the foundation model is that these same embeddings can be applied across other tasks, like disputes or authorizations.

Perhaps even more fundamentally, it suggests that payments have semantic meaning. Just like words in a sentence, transactions possess complex sequential dependencies and latent feature interactions that simply can’t be captured by manual feature engineering.

Turns out attention was all payments needed!

Here’s a plot identifying waves of fraudulent activity by plotting transactions based on their semantic similarity to previous transactions.

Here’s a plot of embeddings by new cluster patterns observed during a card testing (CT) attack on a business.

If you’re interested in exploring that with us, we’re hiring: .stripe.com/jobs/search?te…
