just shipped taskmaster v0.14 🚀

→ know the cost of your taskmaster calls

→ @ollama provider support

→ baseUrl support across roles

→ task complexity score in tasks

→ strong focus on fixes & stability

→ over 9,500⭐ on @github

follow + bookmark + dive in

👀👇

1

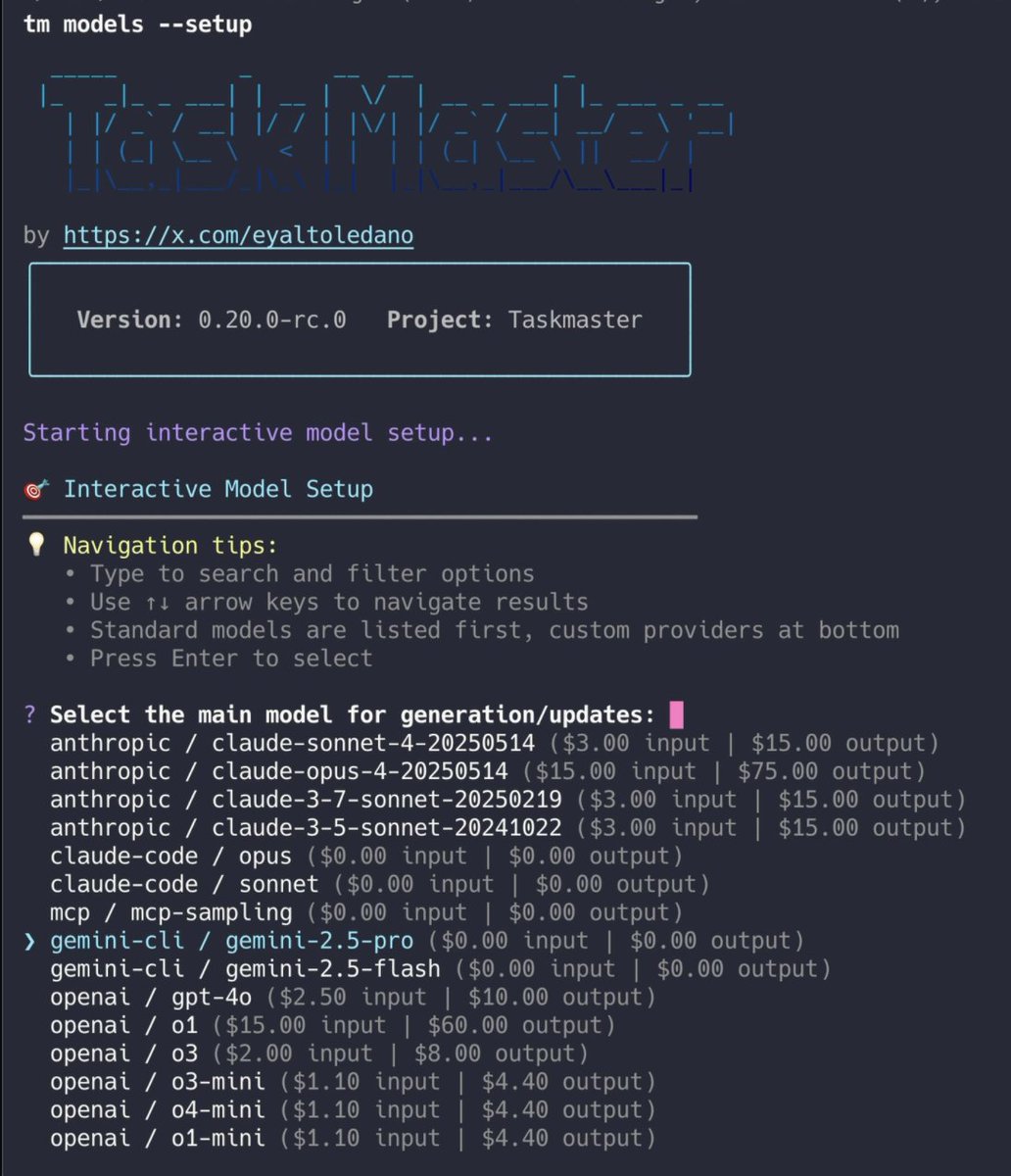

introducing cost telemetry for ai commands

→ costs reported across ai providers

→ breaks down input/output token usage

→ calculates cost of ai command

→ both CLI & MCP

we don't store this information yet

but it will eventually be used to power model leaderboards +++
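here's a rough sketch of how a per-command cost can be derived from the input/output token breakdown. it assumes prices are quoted in USD per million tokens. `ModelPricing`, `TokenUsage` and `calculateCost` are hypothetical names, not taskmaster's actual telemetry code, and the prices are made up:

```typescript
// Hypothetical pricing shape: USD per 1M tokens (assumed, not taskmaster's real schema).
interface ModelPricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

// Token usage reported by a provider for one AI command.
interface TokenUsage {
  inputTokens: number;
  outputTokens: number;
}

interface CostBreakdown {
  inputTokens: number;
  outputTokens: number;
  totalTokens: number;
  inputCost: number;
  outputCost: number;
  totalCost: number;
}

// Cost = tokens / 1M * price-per-1M, computed separately for input and output.
function calculateCost(usage: TokenUsage, pricing: ModelPricing): CostBreakdown {
  const inputCost = (usage.inputTokens / 1e6) * pricing.inputPerMillion;
  const outputCost = (usage.outputTokens / 1e6) * pricing.outputPerMillion;
  return {
    inputTokens: usage.inputTokens,
    outputTokens: usage.outputTokens,
    totalTokens: usage.inputTokens + usage.outputTokens,
    inputCost,
    outputCost,
    totalCost: inputCost + outputCost,
  };
}

// Illustrative prices only: $3 in / $15 out per 1M tokens.
const paid = calculateCost(
  { inputTokens: 1000, outputTokens: 500 },
  { inputPerMillion: 3, outputPerMillion: 15 },
);

// A local model (e.g. served by Ollama) is modeled here as zero-priced, so its cost comes out to $0.
const local = calculateCost(
  { inputTokens: 1000, outputTokens: 500 },
  { inputPerMillion: 0, outputPerMillion: 0 },
);

console.log(`paid: $${paid.totalCost.toFixed(4)}, local: $${local.totalCost}`);
```

splitting input and output matters because most providers price output tokens higher than input tokens.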

2

knowing the cost of ai commands might make you more price-conscious about which providers you use

@ollama support uses your local ai endpoint to power @taskmasterai ai commands at no cost

→ use any installed model

→ models without tool_use support are not ideal

→ telemetry will show $0 cost

3

baseUrl support has been added to let you adjust the endpoint for any of the 3 roles

you can adjust this by adding 'baseUrl' to any of the roles in .taskmasterconfig

this opens up partial support for ai providers that aren't officially supported yet, like @awscloud and @Azure
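a minimal sketch of how a per-role `baseUrl` override could be resolved. the role names `main`, `research` and `fallback`, the `provider`/`modelId` fields, the default-endpoint table and the proxy URL are all assumptions for illustration; only `baseUrl` comes from the thread. the Ollama entry uses Ollama's default local port (11434):

```typescript
// Assumed names for taskmaster's three roles.
type Role = "main" | "research" | "fallback";

// Hypothetical shape of one role entry; only `baseUrl` is named in the thread.
interface RoleConfig {
  provider: string;
  modelId: string;
  baseUrl?: string; // optional override of the provider's default endpoint
}

// Hypothetical per-provider defaults (illustrative values only).
const DEFAULT_BASE_URLS: Record<string, string> = {
  ollama: "http://localhost:11434",
};

// An explicit baseUrl on the role wins; otherwise use the provider default, if one is known.
function resolveBaseUrl(models: Record<Role, RoleConfig>, role: Role): string | undefined {
  const cfg = models[role];
  return cfg.baseUrl ?? DEFAULT_BASE_URLS[cfg.provider];
}

// Example config: main goes through a (made-up) OpenAI-compatible proxy,
// research runs locally, fallback keeps the SDK's built-in endpoint.
const models: Record<Role, RoleConfig> = {
  main: { provider: "openai", modelId: "example-model", baseUrl: "https://proxy.example.com/v1" },
  research: { provider: "ollama", modelId: "example-local-model" },
  fallback: { provider: "anthropic", modelId: "example-model" },
};

for (const role of ["main", "research", "fallback"] as Role[]) {
  console.log(role, resolveBaseUrl(models, role) ?? "(provider default)");
}
```

pointing `baseUrl` at an OpenAI-compatible gateway is the kind of setup that makes otherwise unsupported providers reachable.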

4

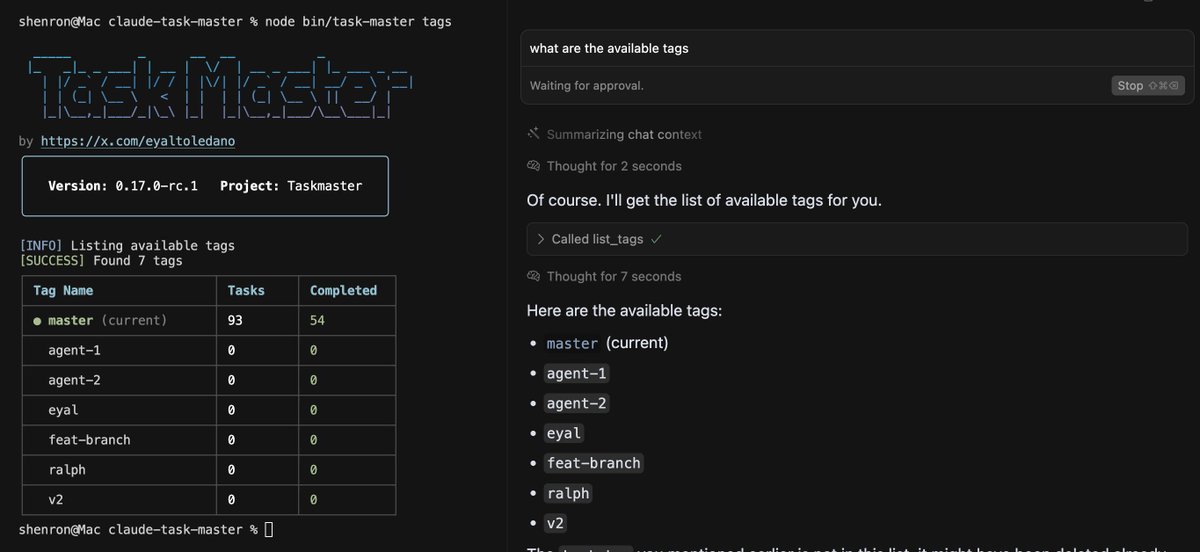

after parsing a PRD into tasks, running analyze-complexity asks ai to score how complex each task is and to estimate how many subtasks it needs based on that score

task complexity scores now appear across task lists, next task, and task details views

s/o @JoeDanz
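a sketch of how complexity scores from a report could be joined onto tasks so list and detail views can show them. the `Task` and `ComplexityEntry` shapes, `withComplexity` and `formatRow` are hypothetical and not taken from the taskmaster codebase. tasks that haven't been analyzed yet show "N/A":

```typescript
// Hypothetical minimal task shape.
interface Task {
  id: number;
  title: string;
}

// Hypothetical shape of one entry in a complexity report.
interface ComplexityEntry {
  taskId: number;
  complexityScore: number;
}

type ScoredTask = Task & { complexityScore?: number };

// Index the report by task id, then attach each task's score (undefined if not analyzed).
function withComplexity(tasks: Task[], report: ComplexityEntry[]): ScoredTask[] {
  const scores: Record<number, number> = {};
  for (const entry of report) {
    scores[entry.taskId] = entry.complexityScore;
  }
  return tasks.map((t) => ({ ...t, complexityScore: scores[t.id] }));
}

// Render one row of a task list, showing "N/A" when no score exists.
function formatRow(t: ScoredTask): string {
  const score = t.complexityScore === undefined ? "N/A" : String(t.complexityScore);
  return `${t.id}  ${t.title}  [complexity ${score}]`;
}

const tasks: Task[] = [
  { id: 1, title: "Set up repo" },
  { id: 2, title: "Build API" },
];
const report: ComplexityEntry[] = [{ taskId: 1, complexityScore: 3 }];

const rows = withComplexity(tasks, report).map(formatRow);
rows.forEach((r) => console.log(r));
```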

5

main focus on bug fixes across the stack & stability is now at an all-time high

→ fix MCP rough edges

→ fix parse-prd --append & --force

→ fix versioning issues

→ fix some error handling

→ remove cache layer

→ adjust default fallbacks

+++

thanks contributors!

we've been cooking some next-level stuff while delivering this release

taskmaster will continue to improve even faster

but holy moly is the future bright and i'm excited to share what that looks like soon

in the meantime, help us cross 10,000 ⭐ on @github

that's it for now, till next time!

v0.14 changelog

github.com/eyaltoledano/c…

→ npm i -g task-master-ai@latest

→ MCP auto-updates

→ founding team is locked-in!

→ join discord.gg/taskmasterai

→ more info task-master.dev

→ hug your loved ones

vibe on friends 🩶

help taskmaster reach more people by retweeting the first tweet and sharing it with your friends who are tired of getting stuck coding with ai

follow if you bookmark!

https://x.com/EyalToledano/status/1924642024566706304
