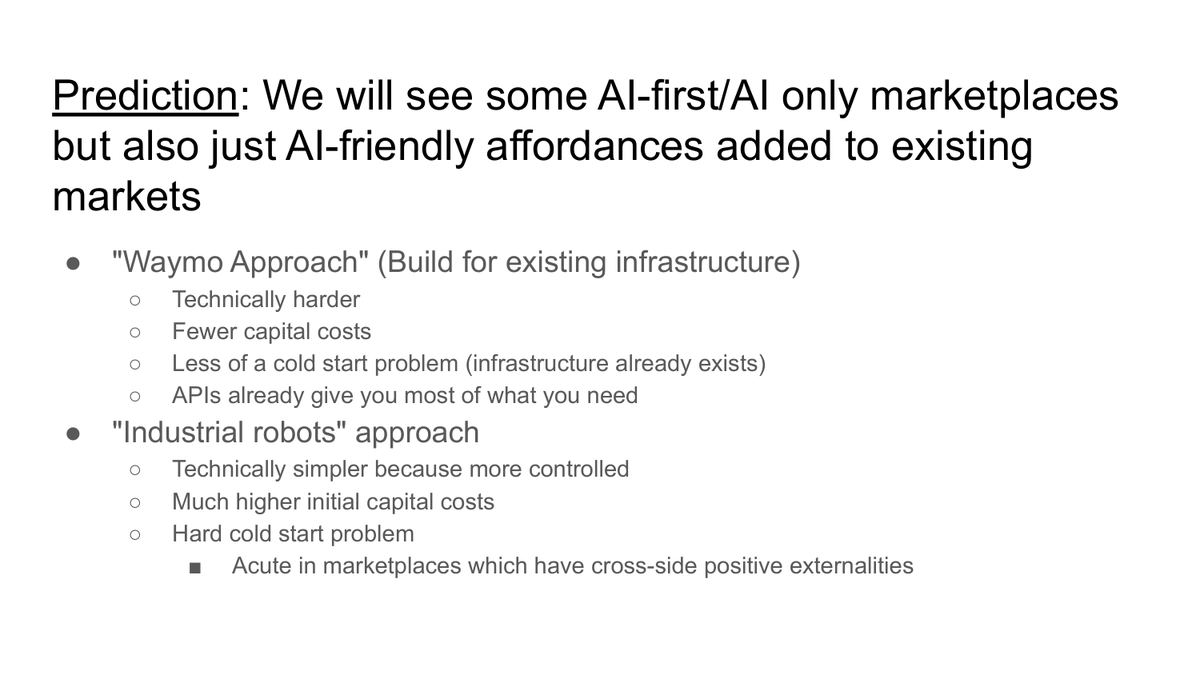

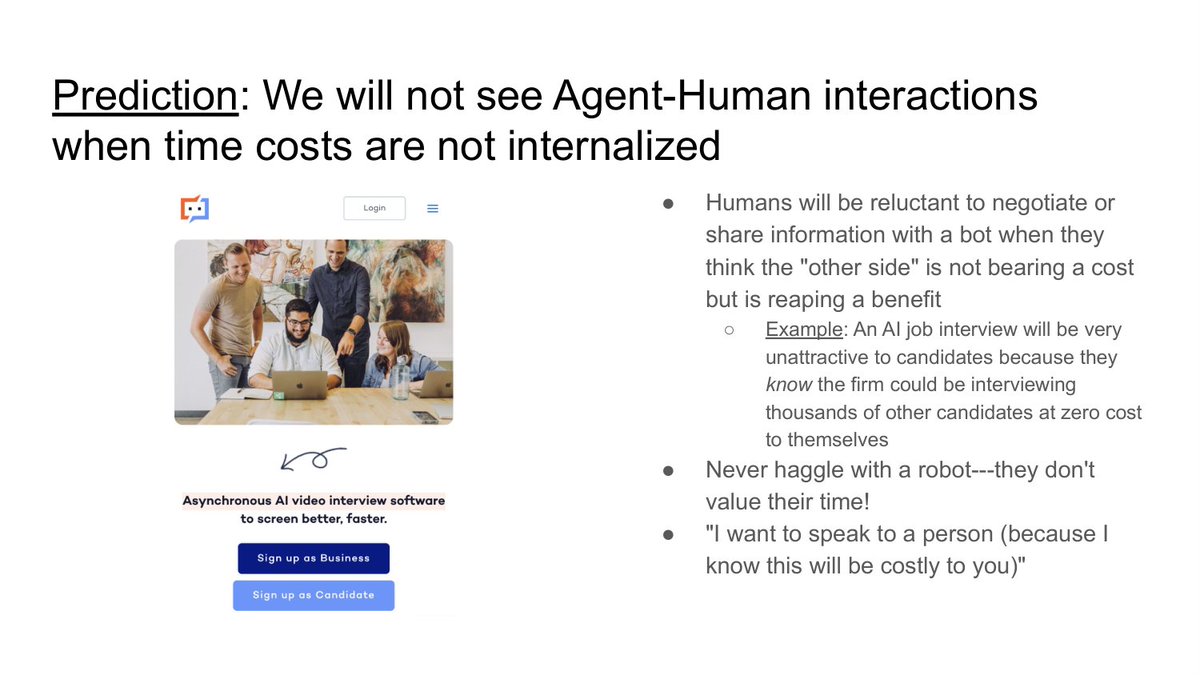

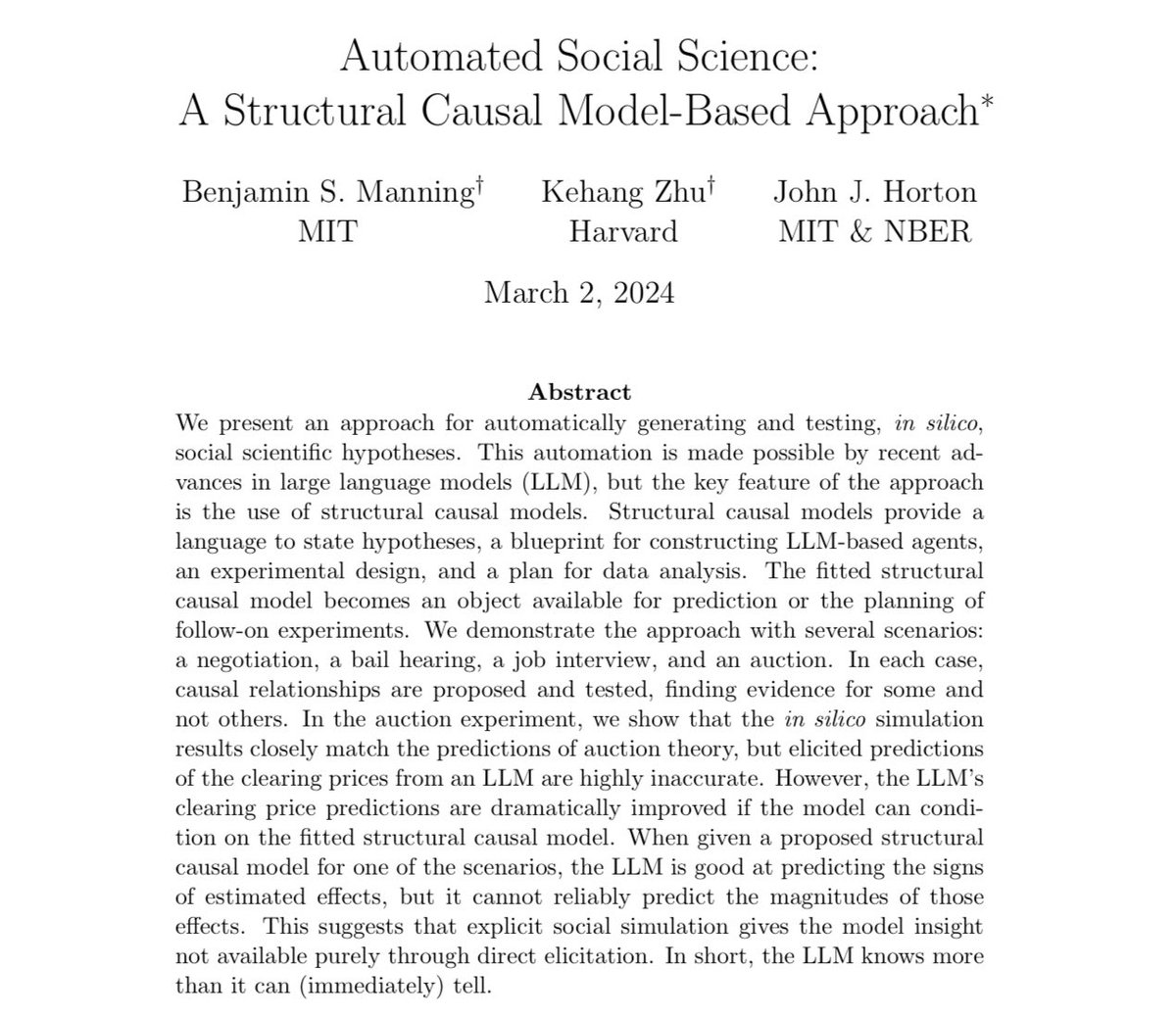

Gave a talk today to @cepr_org, thanks to an invite from @akorinek; great discussion by @Afinetheorem. Here are my slides in PNG form b/c of the link this 1/N
