BREAKING: MIT just completed the first brain scan study of ChatGPT users & the results are terrifying.

Turns out, AI isn't making us more productive. It's making us cognitively bankrupt.

Here's what 4 months of data revealed:

(hint: we've been measuring productivity all wrong)

83.3% of ChatGPT users couldn't quote from essays they wrote minutes earlier.

Let that sink in.

You write something, hit save, and your brain has already forgotten it because ChatGPT did the thinking.

Brain scans revealed the damage: neural connections collapsed from 79 to just 42.

That's a 47% reduction in brain connectivity.

If your computer lost half its processing power, you'd call it broken. That's what's happening to ChatGPT users' brains.

Teachers didn't know which essays used AI, but they could feel something was wrong.

"Soulless."

"Empty with regard to content."

"Close to perfect language while failing to give personal insights."

The human brain can detect cognitive debt even when it can't name it.

Here's the terrifying part: When researchers forced ChatGPT users to write without AI, they performed worse than people who never used AI at all.

It's not just dependency. It's cognitive atrophy.

Like a muscle that's forgotten how to work.

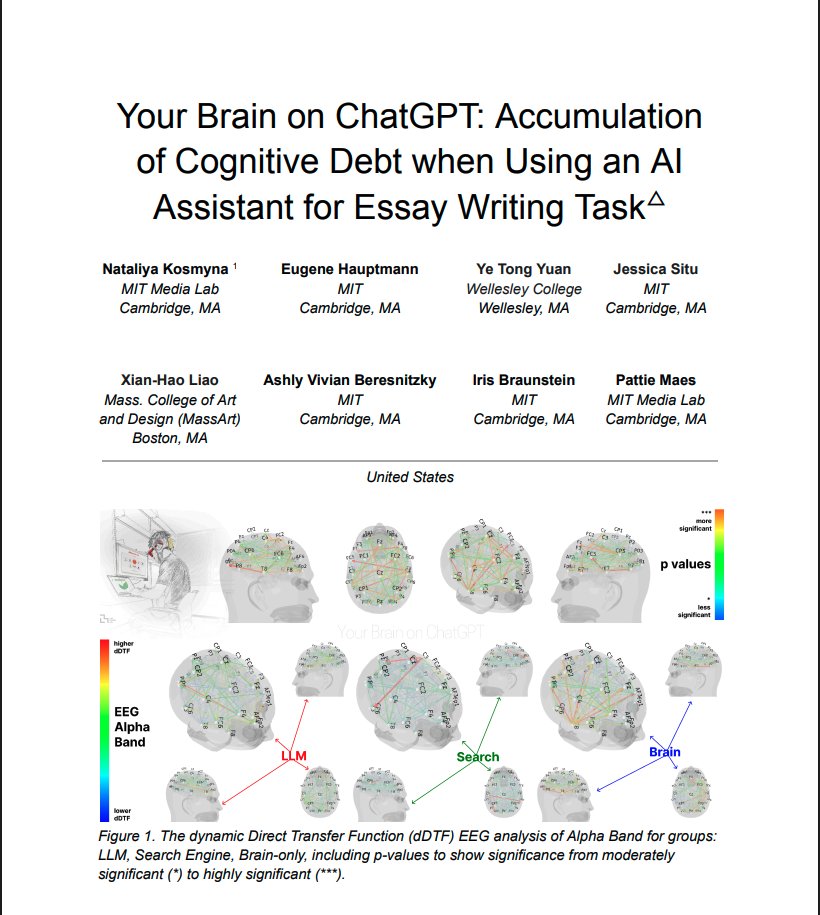

The MIT team used EEG brain scans on 54 participants for 4 months.

They tracked alpha waves (creative processing), beta waves (active thinking), and neural connectivity patterns.

This isn't opinion. It's a measurable change in brain activity linked to AI overuse.

The productivity paradox nobody talks about:

Yes, ChatGPT makes you 60% faster at completing tasks.

But it reduces the "germane cognitive load" needed for actual learning by 32%.

You're trading long-term brain capacity for short-term speed.

Companies celebrating AI productivity gains are unknowingly creating cognitively weaker teams.

Employees become dependent on their tools and less capable of independent thinking.

Other recent studies point to the same problem, including one by Microsoft:

MIT researchers call this "cognitive debt": like technical debt, but for your brain.

Every shortcut you take with AI creates interest payments in lost thinking ability.

And just like financial debt, the bill comes due eventually.

But there's good news...

Because session 4 of the study revealed something interesting:

People with strong cognitive baselines showed HIGHER neural connectivity when using AI than chronic users.

But chronic AI users forced to work without it? They performed worse than people who never used AI at all.

The solution isn't to ban AI. It's to use it strategically.

The choice is yours:

Build cognitive debt and become an AI dependent.

Or build cognitive strength and become an AI multiplier.

The first brain scan study of AI users just showed us the stakes.

Choose wisely.

Thanks for reading!

I'm Alex, COO at ColdIQ. Built a $4.5M ARR business in under 2 years.

Started with two founders doing everything.

Now we're a remote team across 10 countries, helping 200+ businesses scale through outbound systems.

RT the first tweet if you found this thread valuable.

Follow me @itsalexvacca for more threads on outbound and GTM strategy, AI-powered sales systems, and how to build profitable businesses that don't depend on you.

I share what worked (and what didn't) in real time.

https://twitter.com/859850213015597056/status/1935343874421178762
