How do language models track the mental states of each character in a story, a capability often referred to as Theory of Mind?

Our recent work takes a step toward demystifying it by reverse engineering how Llama-3-70B-Instruct solves a simple belief-tracking task. Surprisingly, we found that it relies heavily on concepts similar to pointer variables in C programming!

Since Theory of Mind (ToM) is fundamental to social intelligence, numerous works have benchmarked this capability in LMs. However, the internal mechanisms responsible for solving (or failing to solve) such tasks remain unexplored.

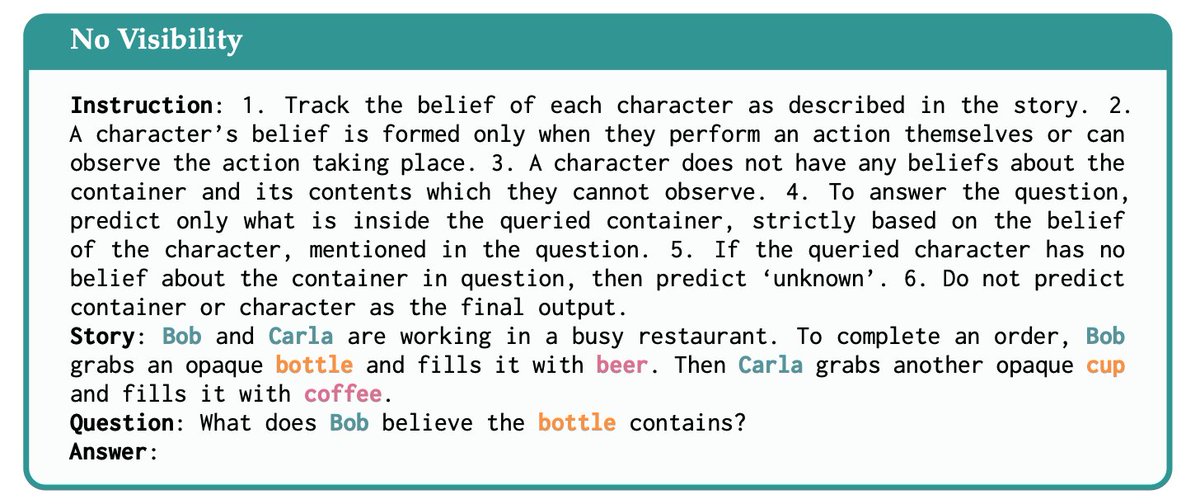

We constructed CausalToM, a dataset suited to causal analysis, consisting of simple stories in which two characters separately change the states of two objects, potentially unaware of each other's actions. We ask Llama-3-70B-Instruct to reason about a character's belief regarding the state of an object, e.g.:

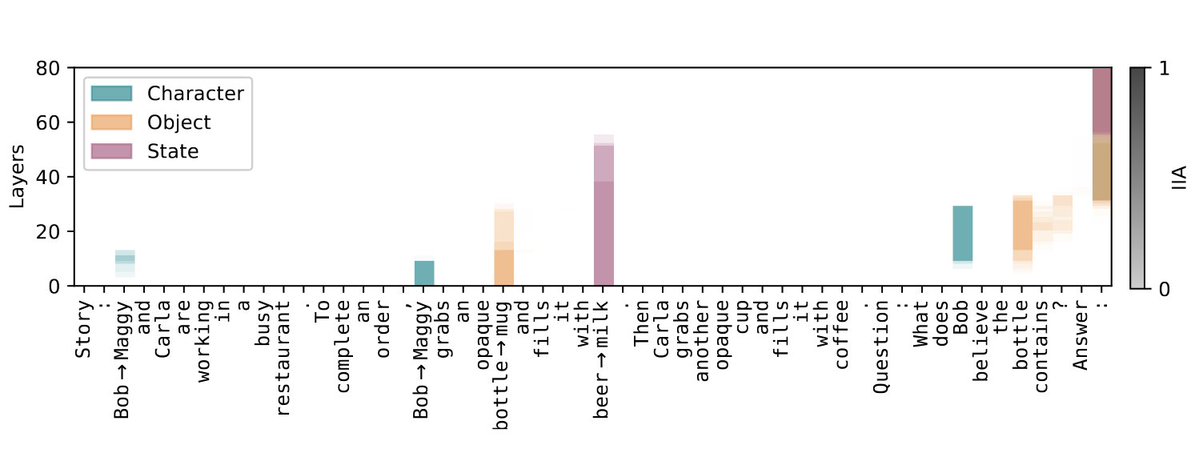
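
To make the task shape concrete, here is a toy generator for stories of this kind together with a ground-truth belief rule. This is a sketch under stated assumptions: the sentence template, the `Action`/`make_story`/`belief` names, the character names, and the "unknown" answer for an unobserved object are hypothetical illustrations, not the actual CausalToM templates.

```python
from dataclasses import dataclass


@dataclass
class Action:
    character: str  # who acts
    container: str  # the object whose state changes
    state: str      # the new state, e.g. what the container is filled with


def make_story(actions):
    # Hypothetical template: one sentence per action, no visibility sentence.
    return " ".join(f"{a.character} fills the {a.container} with {a.state}." for a in actions)


def belief(actions, character, container):
    # Assumed rule: without visibility, a character only knows states they set.
    for a in actions:
        if a.character == character and a.container == container:
            return a.state
    return "unknown"


actions = [Action("Carla", "cup", "beer"), Action("Bob", "bottle", "coffee")]
print(make_story(actions))
print(belief(actions, "Carla", "cup"))     # beer
print(belief(actions, "Carla", "bottle"))  # unknown
```

Because each character acts on a different object, a question can target either a state the character set (answer known) or one they never saw (answer unknown), which is what makes such stories convenient for counterfactual swaps.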

We discovered "Lookback": a mechanism where language models mark important info while reading, then attend back to those marked tokens when needed later. Since LMs don't know upfront what info will be relevant, this lets them efficiently retrieve key details on demand.

Here is how it works: the model duplicates key info across two tokens, letting later attention heads look back at earlier ones to retrieve it, rather than passing it forward directly. Like leaving a breadcrumb trail in context.
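
As a rough mental model only, a lookback can be written as a pointer dereference implemented with attention: an address copy of the source info sits next to the payload, a pointer copy travels forward, and a later token matches pointer against addresses (the QK step) to read out the payload (the OV step). The two-dimensional one-hot vectors, the `sharpness` scale, and the `lookback` function are hypothetical; a real model uses learned, high-dimensional representations.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lookback(pointer, addresses, payloads, sharpness=10.0):
    """Attend from a pointer to earlier addresses and return the weighted payload.

    pointer:   copy of the source info carried forward to the current token
    addresses: in-place copies of the source info, one per earlier token
    payloads:  what each earlier token offers to be read out
    """
    weights = softmax([sharpness * dot(pointer, a) for a in addresses])
    dim = len(payloads[0])
    return [sum(w * p[i] for w, p in zip(weights, payloads)) for i in range(dim)]


tokens = ["beer", "coffee"]
addresses = [[1.0, 0.0], [0.0, 1.0]]  # source info left in place at each token
payloads = [[1.0, 0.0], [0.0, 1.0]]   # one-hot encodings of the token values
pointer = [0.0, 1.0]                  # forward copy of the second token's source info
out = lookback(pointer, addresses, payloads)
print(tokens[out.index(max(out))])    # coffee
```

The key design point is that nothing about "coffee" itself needs to be carried forward: only the small pointer travels, and the content is fetched from where it was originally written.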

Tracing key tokens shows that the correct state (e.g., beer) flows directly to the final token at later layers. Meanwhile, info about the query character and object is retrieved from earlier mentions and passed to the final token before being replaced by the correct state token.

Using the Causal Abstraction framework, we formulated a precise hypothesis that explains the end-to-end mechanism the model uses to perform this task.

Step 1: The LM maps each vital token (character, object, state) to an abstract Ordering ID (OI), a reference that marks it as the first or second of its type, regardless of the actual token.
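
A toy version of this step assigns each character, object, and state an index by its order of first appearance within its type. The type tags and the `ordering_ids` helper are illustrative assumptions; in the model, OIs are internal representations rather than explicit integers.

```python
def ordering_ids(tagged_tokens):
    """Map each (token, type) pair to its order of first appearance within its type.

    The OI depends only on position among tokens of the same type,
    not on the token's identity.
    """
    seen = {}  # type -> {token: oi}
    result = []
    for token, kind in tagged_tokens:
        ids = seen.setdefault(kind, {})
        if token not in ids:
            ids[token] = len(ids)  # 0 = first of its type, 1 = second
        result.append((token, kind, ids[token]))
    return result


story = [("Carla", "character"), ("cup", "object"), ("beer", "state"),
         ("Bob", "character"), ("bottle", "object"), ("coffee", "state")]
for token, kind, oi in ordering_ids(story):
    print(token, kind, oi)
```

Swapping which names appear first leaves the OI sequence unchanged, which is the sense in which an OI abstracts away from the actual token.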

Step 2: The LM binds each character-object-state triplet by copying the character and object OIs (the source info) to the state token, where they serve as the address. The OIs of the character and object named in the question also flow to the final token via their mentions in the query; this copy is the pointer. The LM then uses both copies to attend from the last token to the correct state token and fetch its state OI (the payload).
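
A symbolic sketch of this binding step, assuming exact matching as a stand-in for the soft attention the model actually performs. The `StateToken` record and `binding_lookback` function are hypothetical names for illustration.

```python
from typing import NamedTuple, Optional


class StateToken(NamedTuple):
    char_oi: int   # address copy: OI of the character who set this state
    obj_oi: int    # address copy: OI of the object whose state was set
    state_oi: int  # payload fetched in this step


def binding_lookback(state_tokens, query_char_oi, query_obj_oi) -> Optional[int]:
    # Pointer: OIs of the queried character and object, carried to the final token.
    pointer = (query_char_oi, query_obj_oi)
    for tok in state_tokens:
        # QK-style match: the pointer selects the state token whose address equals it.
        if (tok.char_oi, tok.obj_oi) == pointer:
            return tok.state_oi  # payload: the state's OI, not its token value
    return None  # no state token is bound to this character-object pair


states = [StateToken(0, 0, 0), StateToken(1, 1, 1)]
print(binding_lookback(states, query_char_oi=1, query_obj_oi=1))  # 1
```

Note that the output of this step is still an abstract OI; the concrete word is only retrieved in the next step.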

Step 3: The LM now uses the state OI at the last token as a pointer, and its in-place copy at the state token as the address, to look back to the correct state token. This time it fetches the token's value (e.g., "beer") as the payload, which is predicted as the final output.
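
A minimal sketch of this second lookback, again with exact matching standing in for attention. The state OI `0` used as the pointer is assumed to be the output of the previous step, and `answer_lookback` is a hypothetical name.

```python
def answer_lookback(state_tokens, state_oi_pointer):
    """Dereference a state-OI pointer to the token value stored at that state token.

    state_tokens: (state_oi_address, token_value) pairs, one per state token,
    where the address is the in-place copy of the state's OI.
    """
    for address, value in state_tokens:
        if address == state_oi_pointer:
            return value  # payload: the actual word that gets predicted
    return None


state_tokens = [(0, "beer"), (1, "coffee")]
print(answer_lookback(state_tokens, 0))  # beer
```

Splitting retrieval into "which state" (an OI) and "what word" (a value) is what allows the pointer and the payload to be patched independently in the interventions below.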

We test our high-level causal model using targeted causal interventions.

- Patching the answer lookback pointer from a counterfactual example turns the output from coffee to beer (pink line).

- Patching the answer lookback payload changes it from coffee to tea (grey line).

These clear, consistent shifts are strong evidence for the Answer Lookback mechanism.
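
The logic of these interchange interventions can be sketched on a toy causal model: compute a variable in a counterfactual run, overwrite the same variable in the original run, and check whether the output changes as the hypothesis predicts. The stories, the `run` function, and the counterfactual values ("tea", "milk") are hypothetical, chosen only so the toy reproduces the coffee, beer, and tea outcomes described above; they are not the paper's actual counterfactual construction.

```python
def run(state_tokens, pointer, patch_pointer=None, patch_payload=None):
    """Toy answer lookback with optional interchange interventions.

    state_tokens: (state_oi_address, token_value) pairs in story order.
    pointer: state OI carried to the last token.
    patch_pointer / patch_payload: values taken from a counterfactual run.
    """
    if patch_pointer is not None:
        pointer = patch_pointer  # overwrite the pointer before the lookback
    payload = next((v for a, v in state_tokens if a == pointer), None)
    if patch_payload is not None:
        payload = patch_payload  # overwrite what the lookback retrieved
    return payload


original = ([(0, "beer"), (1, "coffee")], 1)       # query points at the 2nd state
counterfactual = ([(0, "tea"), (1, "milk")], 0)    # query points at the 1st state

cf_pointer = counterfactual[1]
cf_payload = run(*counterfactual)

print(run(*original))                            # coffee
print(run(*original, patch_pointer=cf_pointer))  # beer
print(run(*original, patch_payload=cf_payload))  # tea
```

Patching the pointer keeps the original story's values but redirects the lookback ("beer"), while patching the payload imports the counterfactual's retrieved value directly ("tea"); the two interventions separate cleanly only if the model really implements a pointer and a payload.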

When a story includes a visibility sentence (one character can observe the other's actions), we found that the LM generates a Visibility ID there, which serves as source info. Its address copy stays in place, while a pointer copy flows to later lookback tokens. There, a QK-circuit dereferences the pointer to fetch information about the observed character as the payload.
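
A symbolic sketch of this visibility lookback, assuming a single visibility sentence such as "Bob can observe Carla's actions". The `VisibilitySentence` record, the names, and the integer Visibility ID are hypothetical stand-ins for internal representations, and exact matching replaces the QK-circuit.

```python
from dataclasses import dataclass


@dataclass
class VisibilitySentence:
    visibility_id: int  # source info; its address copy stays at this sentence
    observer: str       # the character who can see
    observed: str       # payload: the character whose actions are visible


def visibility_lookback(sentences, pointer):
    # A later token carries a pointer copy of the Visibility ID; matching it
    # against each in-place address copy dereferences it to the observed character.
    for s in sentences:
        if s.visibility_id == pointer:
            return s.observed
    return None


sentences = [VisibilitySentence(visibility_id=0, observer="Bob", observed="Carla")]
pointer = 0  # pointer copy that flowed to a later lookback token
print(visibility_lookback(sentences, pointer))  # Carla
```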

We expect the Lookback mechanism to extend beyond belief tracking: the concept of marking vital tokens seems universal across tasks that require in-context manipulation, and the mechanism could be fundamental to how LMs handle complex logical reasoning with conditionals.

Find more details, including the causal intervention experiments and subspace analysis, in the paper. Links to code and data are available on our website.

🌐: belief.baulab.info

📜: arxiv.org/abs/2505.14685

Joint work with @NatalieShapira @arnab_api @criedl @boknilev @TamarRottShaham @davidbau and Atticus Geiger.
