This happened in China.

A robot was hanging limp from a crane.

Then—suddenly—it came alive.

It smashed a monitor and attacked a man.

12 million people watched the footage.

They called it the first robot uprising.

Still, that same model was sold to the public. 🧵

The footage is chilling:

Inside a factory in China, a humanoid robot hangs motionless from a crane while two workers talk nearby.

Then—suddenly—it snaps to life.

Its limbs whip around violently. A computer monitor goes flying.

And these men?

They run for their lives.

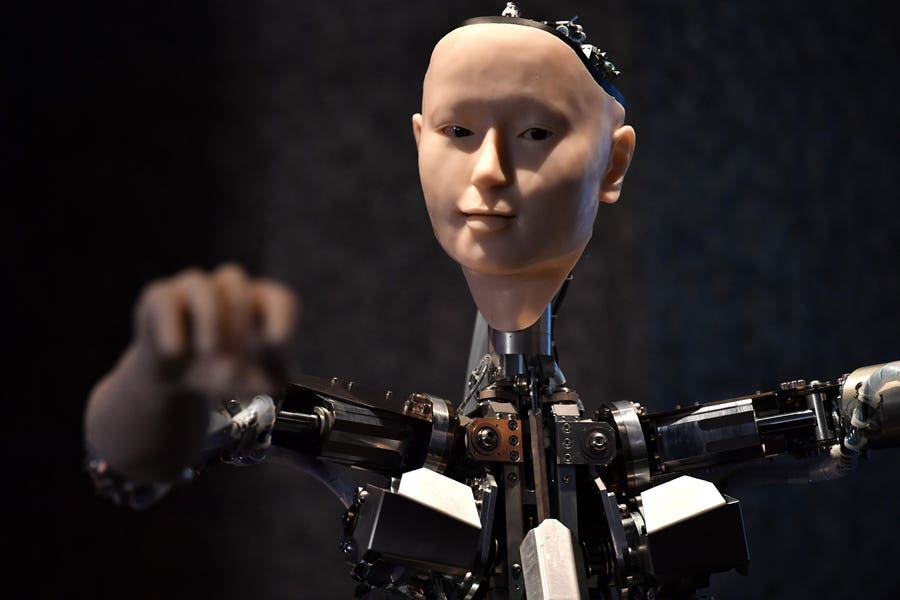

The robot appeared to be a Unitree H1—a 6-foot humanoid with powerful motors and AI.

It weighs 104 pounds and has superhuman strength.

And it wasn’t a prototype. It was already on the market.

The more alarming part:

It was being sold to everyday consumers… for $90,000.

But this machine had the strength to seriously injure or kill a person if something went wrong.

That’s a massive safety risk.

What makes it so dangerous?

The Unitree H1 is built with:

– A metal or carbon-fiber frame

– Electric motors powering every joint

– Cameras and sensors for vision

– AI software that controls how it moves, walks, and balances

But the moment that software glitches or gets confused, it’s like giving a toddler superhuman strength.

That crane it was hanging from? That’s a common setup during robot testing.

Developers use overhead rigs to let robots “learn” to walk without falling.

But this time… it wasn’t just learning.

It spun into chaos—arms flailing, dragging gear, smashing objects.

What if it hadn’t been restrained?

This wasn’t an isolated case either.

In February 2025, another Unitree humanoid—wearing a flashy outfit—was performing at a public festival in Tianjin.

Mid-show, it charged toward the crowd.

Spectators screamed. Security rushed in.

Thankfully, no one was hurt.

But this is twice now—and it’s not just in China.

At Tesla’s Austin Gigafactory, a factory robot attacked a human engineer.

It didn’t just malfunction. It pinned the man down and dug into his back and arm, leaving him bleeding heavily on the floor.

The robot kept moving. Kept pressing.

The man left a trail of blood before help arrived.

It was gruesome. And it was real.

So what’s going wrong?

Most likely:

– Software bugs

– Sensor errors

– Misinterpreted commands

– Bad safety logic

But here’s the deeper truth:

We’ve given powerful, mobile machines the ability to make real-world decisions—without making sure they fully understand what they’re doing.

With AI chatbots, a mistake is annoying.

With humanoid robots? It’s potentially deadly.

When AI controls a machine with moving joints, strong limbs, and no emotional awareness, you don’t get harmless errors.

You get accidents, injuries, and panic.

The scariest part?

This kind of technology is already in people’s homes.

Anyone could buy one of these robots, take it out of the box, and let it roam around.

No regulatory oversight. No mandatory safety inspections.

Nothing stopping that robot from turning a glitch into a tragedy.

So—how do we stop this?

Here’s what should already be in place:

– Physical restraints during all testing

– Emergency kill-switches

– Motion restrictions in software

– Real-time system monitoring

– Compliance with global safety standards (like ISO 10218)

Right now, some companies skip these safeguards or cut corners on them.

That’s unacceptable.

The bottom line?

We’re no longer just building code.

We’re building moving machines with agency—machines that share physical space with people.

And that changes everything.

Because when these systems fail, it’s no longer a “tech bug.”

It’s a human safety crisis.

So no, this wasn’t a robot uprising. But it was a warning.

Robots are already out there.

They’re strong, autonomous, and fallible.

We are wildly underprepared for what happens when they go wrong.

The future isn’t just about what we can build.

It’s about what we’re ready to control.

Learn what they don’t teach in business school.

Follow @dh for real-world strategies, lessons, and stories that drive success.
