I thought I understood AI prompting.

Then Google dropped their 68-page engineering guide.

It reveals techniques that work across all LLMs.

I dove deep into all 5 advanced methods.

Here's what will transform your AI outputs🧵

First, a confession:

Back when I started using AI, I wrote short prompts and wondered why outputs sucked.

Then I learnt.. optimal prompts? 21 words.

"Explain photosynthesis" (2 words) vs

"Explain photosynthesis process to middle-school student in single paragraph" (9 words)

This was just the beginning..

Now, what actually happens when you prompt AI?

The model predicts the next word based on patterns it learned.

Your prompt sets the pattern.

Better pattern = better prediction.

That's why prompts like "write about dogs" fail but specific prompts succeed.

Let me break this down...

Think of AI like a brilliant intern who needs clear instructions.

Vague prompt: Intern guesses what you want

Clear prompt: Intern knows exactly what to deliver

Google studied millions of prompts to find what makes them "clear."

Then I found how to 10X their output...

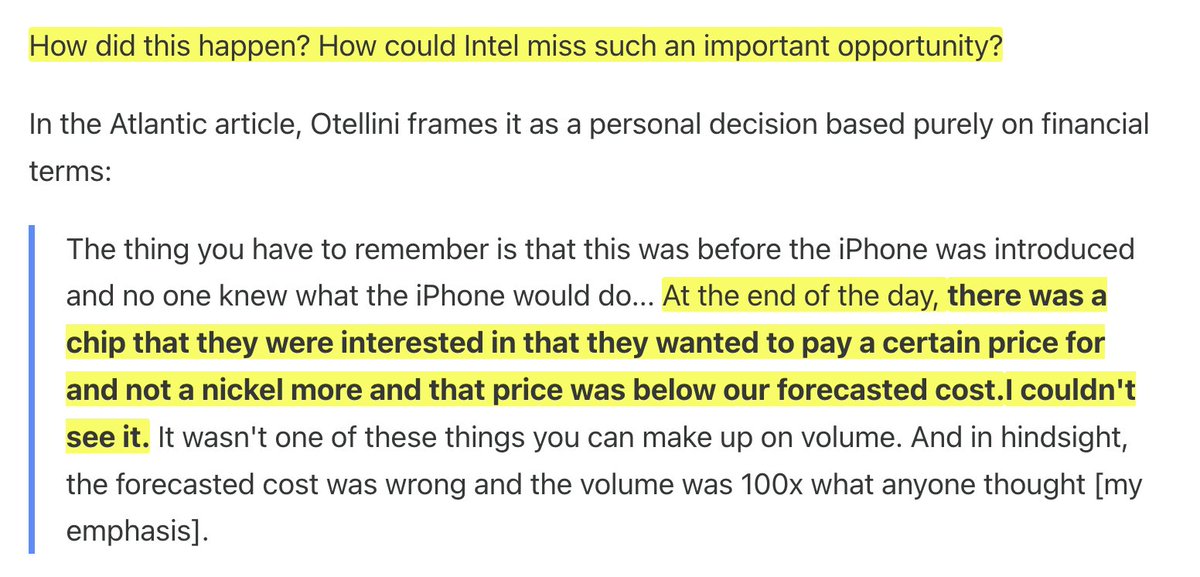

Temperature, Top-k, Top-p settings.

Secrets that I'm sure most of us missed.

It's like driving a Ferrari in first gear.

Temperature = creativity/randomness dial.

Let me show what it means:

At 0 temp: AI picks most likely word every time

"The sky is [blue]" - always blue

At 0.9 temp: AI gets adventurous

"The sky is [crimson/infinite/electric]"

Top-k = only the k most likely words stay in play (default ~40)

Top-p = keeps the most likely words until their probabilities add up to p (e.g. 90%)
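
To make the three dials concrete, here's a minimal sketch of how they could combine when picking the next word. The function name, the toy scores, and the order of the filters are my assumptions for illustration, not how any particular model implements it.

```python
import math
import random

def sample_next_word(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Pick one word from a {word: score} dict using temperature, top-k and top-p."""
    # Temperature 0 is treated as greedy decoding: always the top-scoring word.
    if temperature == 0:
        return max(logits, key=logits.get)

    # Divide scores by temperature, then softmax them into probabilities.
    scaled = {w: s / temperature for w, s in logits.items()}
    biggest = max(scaled.values())
    exps = {w: math.exp(s - biggest) for w, s in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((w, e / total) for w, e in exps.items()),
                    key=lambda pair: pair[1], reverse=True)

    # Top-k: keep only the k most likely words.
    if top_k is not None:
        ranked = ranked[:top_k]

    # Top-p: keep the most likely words until their combined probability reaches p.
    if top_p is not None:
        kept, cumulative = [], 0.0
        for word, prob in ranked:
            kept.append((word, prob))
            cumulative += prob
            if cumulative >= top_p:
                break
        ranked = kept

    words = [w for w, _ in ranked]
    weights = [p for _, p in ranked]
    return rng.choices(words, weights=weights, k=1)[0]

# Made-up scores for the word after "The sky is" (illustration only).
toy_logits = {"blue": 5.0, "crimson": 2.0, "infinite": 1.5, "electric": 1.0}
print(sample_next_word(toy_logits, temperature=0))
print(sample_next_word(toy_logits, temperature=0.9, top_k=40))
```

Lower temperature sharpens the probabilities toward the top word, higher temperature flattens them, and top-k / top-p then trim the tail before the random pick.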

Now onto prompting techniques...

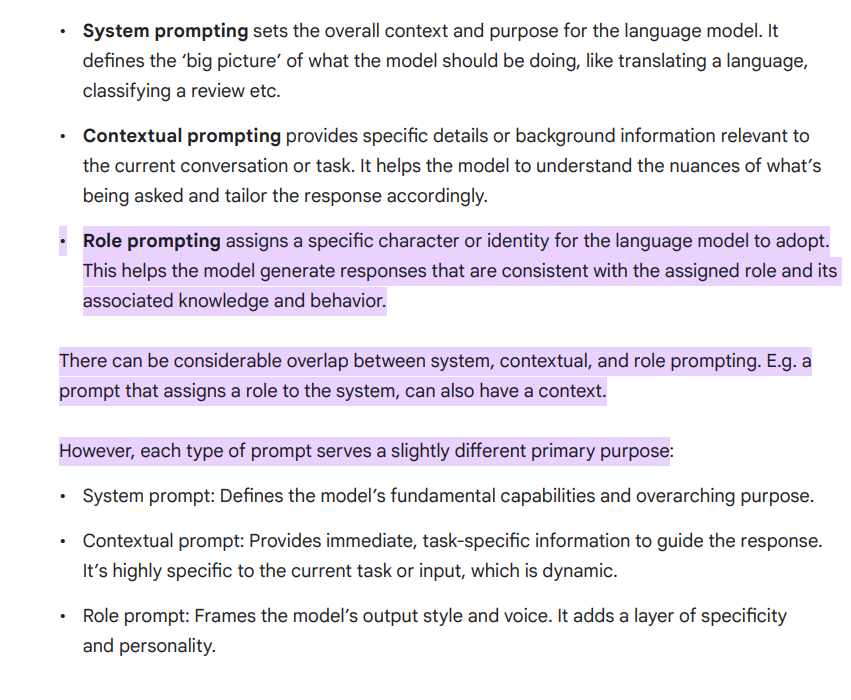

1. The 4-Pillar Framework shattered my approach.

Google says every prompt needs:

Persona (who AI should be)

Task (what to do)

Context (background info)

Format (how to respond)

I tested it immediately.

The difference was staggering...

My old prompt: "Explain blockchain"

My new 4-pillar prompt:

"You are a blockchain expert [PERSONA].

Explain how blockchain works [TASK].

For a 12-year-old audience [CONTEXT].

Use a simple analogy [FORMAT]."

The AI went from sounding like Wikipedia to sounding like your favorite teacher.
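
If you build prompts in code, the four pillars fit into a tiny helper. This is just a sketch: `build_prompt` and its parameter names are hypothetical, not something from the guide.

```python
def build_prompt(persona, task, context, format_):
    """Join the four pillars into one prompt, one pillar per line."""
    parts = [persona, task, context, format_]
    # Skip empty pillars so a missing one doesn't leave a blank line.
    return "\n".join(p.strip() for p in parts if p and p.strip())

prompt = build_prompt(
    persona="You are a blockchain expert.",
    task="Explain how blockchain works.",
    context="For a 12-year-old audience.",
    format_="Use a simple analogy.",
)
print(prompt)
```

Keeping each pillar as its own argument makes it obvious when one is missing.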

2. Step-back prompting

It's a technique for improving performance by prompting the LLM to first consider a general question related to the specific task at hand.

The answer to that general question is then fed into a subsequent prompt.

Example: This is a traditional prompt

We take a step back in image 1, then add that output into the prompt.

You can clearly see how much the output has improved.
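
In code, step-back is just two calls chained together. A rough sketch, assuming `llm` is any function that takes a prompt string and returns text. The `fake_llm` stand-in and the two example questions are mine, not the guide's.

```python
def step_back(llm, general_question, task_template):
    """Ask a general question first, then feed its answer into the specific prompt."""
    # Step 1: the broader "step back" question.
    background = llm(general_question)
    # Step 2: the specific task, with the general answer pasted in as context.
    final_prompt = task_template.format(context=background)
    return llm(final_prompt)

def fake_llm(prompt):
    # Stand-in for a real model call: it just echoes what it was sent.
    return f"<answer to: {prompt}>"

result = step_back(
    fake_llm,
    general_question="What makes an explanation easy for beginners to follow?",
    task_template="Context: {context}\nUsing the context above, explain how blockchain works.",
)
print(result)
```

Swap `fake_llm` for a real model call and the second prompt carries the first answer along with it.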

3. This led me to Chain of Thought

Chain of Thought = forcing AI to show its work.

Like your math teacher demanded.

Advantages per Google:

- Low effort, high impact

- Works on any LLM

- See reasoning steps

- Catch errors

- More robust across models

But here's the power move, few-shot CoT:

Give an example first:

"Q: When my brother was 2, I was double his age. Now I'm 40. How old is he?

A: Brother was 2, I was 4. Difference: 2 years. Now 40-2 = 38. Answer: 38.

Q: [Your question]

A: Let's think step by step..."
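
The template above is easy to generate. A small sketch, with `few_shot_cot` as a hypothetical helper name and a made-up question in the usage line:

```python
EXAMPLE_Q = "When my brother was 2, I was double his age. Now I'm 40. How old is he?"
EXAMPLE_A = "Brother was 2, I was 4. Difference: 2 years. Now 40-2 = 38. Answer: 38."

def few_shot_cot(question, examples=((EXAMPLE_Q, EXAMPLE_A),)):
    """Build a few-shot Chain of Thought prompt: worked examples first, then the new question."""
    lines = []
    for q, a in examples:
        # Each example shows the reasoning steps, not just the final answer.
        lines += [f"Q: {q}", f"A: {a}", ""]
    # End on the step-by-step cue so the model continues with its own reasoning.
    lines += [f"Q: {question}", "A: Let's think step by step."]
    return "\n".join(lines)

print(few_shot_cot("When I was 6, my sister was half my age. Now I'm 30. How old is she?"))
```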

4. ReAct (reason & act)

It turns AI into an agent that can use tools.

Instead of just thinking, it can:

- Search the web

- Run calculations

- Call APIs

- Fetch data

The AI alternates between thinking and doing actions.

Like a human solving problems.

Here's how ReAct actually works:

Format: Thought → Action → Observation → Loop

To see the prompt in action, you gotta code.

Here's an example:
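
A stripped-down sketch of the loop, with no framework. Everything here is an assumption for illustration: the `Action: tool[input]` text format, the toy `calculator` tool, and the `scripted_llm` that stands in for a real model.

```python
import re

def calculator(expression):
    """Toy tool: handles 'a + b', 'a - b', 'a * b', 'a / b' only."""
    left, op, right = expression.split()
    a, b = float(left), float(right)
    ops = {"+": a + b, "-": a - b, "*": a * b, "/": a / b if b else float("nan")}
    return str(ops[op])

TOOLS = {"calculator": calculator}
ACTION = re.compile(r"Action:\s*(\w+)\[(.*?)\]")
FINAL = re.compile(r"Final Answer:\s*(.*)")

def react(llm, question, max_steps=5):
    """Run the Thought -> Action -> Observation loop until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)          # Thought (+ Action or Final Answer)
        transcript += reply + "\n"
        done = FINAL.search(reply)
        if done:
            return done.group(1).strip()
        action = ACTION.search(reply)
        if action:
            name, arg = action.groups()
            tool = TOOLS.get(name)
            observation = tool(arg) if tool else f"unknown tool: {name}"
            # The Observation goes back into the transcript for the next turn.
            transcript += f"Observation: {observation}\n"
    return None  # gave up after max_steps

def scripted_llm(transcript):
    # Stand-in for a real model: first asks for a tool, then answers from the observation.
    seen = re.search(r"Observation: (.*)", transcript)
    if seen is None:
        return "Thought: I need to multiply.\nAction: calculator[12 * 7]"
    return f"Thought: I have the result.\nFinal Answer: {seen.group(1)}"

print(react(scripted_llm, "What is 12 * 7?"))
```

The point of the loop is that the tool's output gets appended to the transcript, so the model's next thought can use it.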

5. APE

Automatic Prompt Engineering (APE) makes AI reach "near human-level" at writing prompts.

"Alleviates need for human input and enhances model performance" - Google guide

I was watching AI evolve in real-time.

The APE revelation went deeper.

The AI writes better prompts than humans.

Process:

1. "Generate 10 prompts for ordering a t-shirt"

2. AI creates 10 different versions

3. Test each on real documents

4. Pick the winner
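
The four steps can be sketched like this. The exact-match scoring, the `fake_llm` stand-in, and the two test cases are made up for illustration. A real run would use a real model and a scoring method of your choice.

```python
def pick_best_prompt(llm, seed_instruction, test_cases, n=10):
    """Generate candidate prompts, score each on test cases, return the best one."""
    # Steps 1-2: ask the model for n candidate prompts, one per line.
    raw = llm(f"Generate {n} prompts for: {seed_instruction}. One per line.")
    candidates = [line.strip() for line in raw.splitlines() if line.strip()][:n]

    # Step 3: score a candidate by how many test cases it answers exactly right.
    def score(candidate):
        return sum(
            llm(f"{candidate}\n\n{text}").strip() == expected
            for text, expected in test_cases
        )

    # Step 4: pick the winner.
    return max(candidates, key=score)

def fake_llm(prompt):
    # Stand-in for a real model (illustration only).
    if prompt.startswith("Generate"):
        return "Summarize the order.\nExtract the t-shirt size from the order."
    if prompt.startswith("Extract"):
        return "S" if "size S" in prompt else "L"
    return "It is an order."

cases = [("One t-shirt size S", "S"), ("Two t-shirts size L", "L")]
print(pick_best_prompt(fake_llm, "ordering a t-shirt", cases))
```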

Hidden in the guide:

"Use positive instructions: tell model what to do instead of what not to do"

I'd been saying: "Don't use jargon"

Should say: "Use simple language"

Our brains (and AI) struggle with negatives.

Simple switch. Massive improvement.

Thanks for reading!

I'm Mich - serial entrepreneur and sales tech expert.

CEO @ ColdIQ - a GTM agency serving 400+ businesses globally. $5MM ARR and growing. coldiq.com

Follow me @MichLieben for more threads on my personal stories and insights, entrepreneurship lessons and case studies, and other personal takes.

Like/Repost the quote below if you can:

https://twitter.com/512156315/status/1937888816800665874

Check the full guide here: services.google.com/fh/files/misc/…
