BREAKING: xAI announces Grok 4

"It can reason at a superhuman level!"

Here is everything you need to know:

Elon claims that Grok 4 is smarter than almost all grad students in all disciplines simultaneously.

100x more training than Grok 2.

10x more compute on RL than any of the models out there.

With native tool calling, Grok 4's performance increases significantly.

Look at those curves!

It's important to give AI the right tools. The scaling is clear. Crazy!

Reliable signals are key to making RL work.

There is still the challenge of data.

Elon: "Ultimate reasoning test is AI operating in reality."

Scaling test-time compute

More than 50% of the text-only subset of the HLE problems are solved!

The curves keep getting more ridiculous.

Grok 4 is the single-agent version.

Grok 4 Heavy is the multi-agent version.

Multi-agent systems are no joke!

Grok 4 is being used to predict the World Series champions for this year.

These are the interesting tasks that reasoning models need to be tested on: actual real-world events.

A visualization of two black holes colliding.

Grok 4 uses all kinds of references like papers, reads PDFs, reasons about the details of the simulation, and what data to use.

The example shows a summary of the timeline/changes and score announcements in the HLE.

That's pretty cool!

Multi-modal performance

Grok 4 Heavy outperforms Grok 4 here, but multi-modal performance still needs to improve. It's one of the weaknesses, according to the team.

Performance on Reasoning benchmarks.

Perfect score on AIME25!

Leaps are crazy compared to the last best model on these tasks.

Where to test the models.

Available as SuperGrok Heavy tier.

$30/month for SuperGrok.

$300/month for SuperGrok Heavy.

Voice updates included, too!

Grok feels snappier and is designed to be more natural.

- 2x faster

- 5 voices

- 10x daily user seconds

ARC-AGI

Grok 4 on ARC-AGI v2 (private subset)

It breaks the 10% barrier (15.9%).

That's about 2x the second-place score, which belongs to Claude Opus 4.

What is next?

Smart and fast will be the focus.

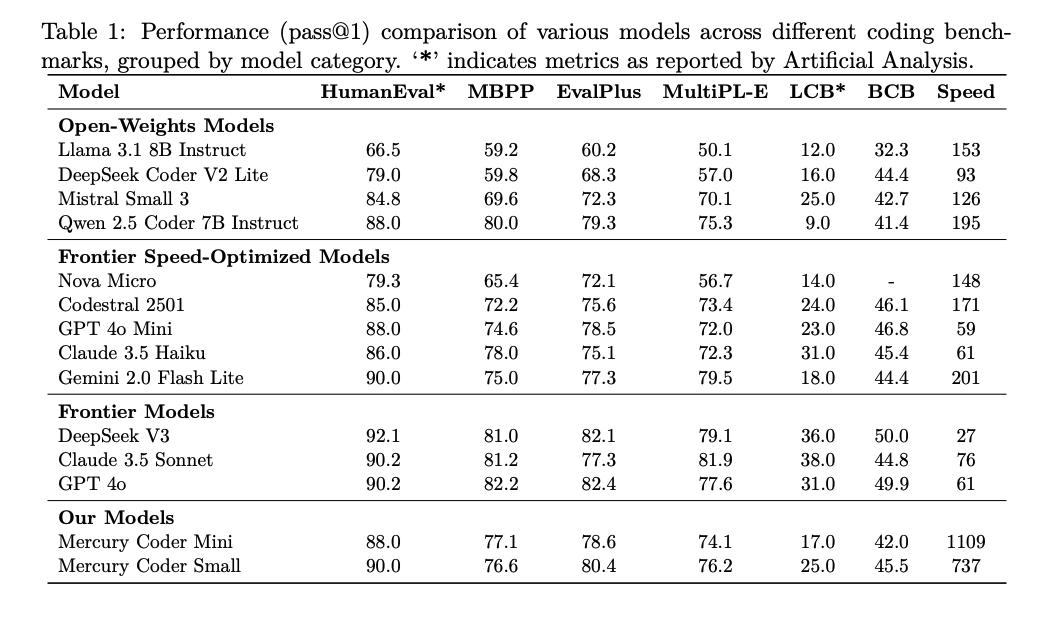

Coding models are also a big focus.

More capable multi-modal agents are coming too.

Video generation models are also on the horizon.

@elonmusk and the @xai team really cooked with Grok 4. All very exciting to see focus on AI for reality, truth-seeking, and unlocking multi-modal agents next.
