Let's build a multi-agent content creation system (100% local):

Before we dive in, here's a quick demo of what we're building!

Tech stack:

- @motiadev as the unified backend framework

- @firecrawl_dev to scrape web content

- @ollama to serve the DeepSeek-R1 LLM locally

The only AI framework you'll ever need to learn! 🚀


Here's the workflow:

- User submits a URL to scrape

- Firecrawl scrapes content and converts it to markdown

- Twitter and LinkedIn agents run in parallel to generate content

- Generated content gets scheduled via Typefully

Now, let's dive into code!


Steps are the fundamental building blocks of Motia.

They consist of two main components:

1️⃣ The Config object: It instructs Motia on how to interact with a step.

2️⃣ The handler function: It defines the main logic of a step.
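To make the two-part idea concrete, here's a minimal TypeScript sketch. The `StepConfig`, `Step` and `runStep` names are hypothetical and don't reproduce Motia's actual types or runtime. The point is only the split: the config declares what a step listens to and what it may publish, while the handler holds the logic. This toy runner also uses the config to reject topics the step never declared.

```typescript
// Hypothetical types for illustration only; Motia's real step types differ in detail.
interface StepConfig {
  type: "api" | "event";
  name: string;
  subscribes: string[]; // topics that trigger this step
  emits: string[]; // topics this step is allowed to publish
}

interface StepEvent {
  topic: string;
  data: unknown;
}

interface StepContext {
  emit: (event: StepEvent) => void;
}

interface Step<Input> {
  config: StepConfig;
  handler: (input: Input, ctx: StepContext) => void;
}

// Toy runner (not Motia's): calls the handler and uses the config to reject undeclared topics.
function runStep<Input>(step: Step<Input>, input: Input): StepEvent[] {
  const emitted: StepEvent[] = [];
  const emit = (event: StepEvent): void => {
    if (!step.config.emits.includes(event.topic)) {
      throw new Error(`${step.config.name} emitted undeclared topic "${event.topic}"`);
    }
    emitted.push(event);
  };
  step.handler(input, { emit });
  return emitted;
}

// Example step: the config says what it listens to and publishes; the handler holds the logic.
const titleStep: Step<{ title: string }> = {
  config: {
    type: "event",
    name: "UppercaseTitle",
    subscribes: ["title.received"],
    emits: ["title.uppercased"],
  },
  handler: ({ title }, { emit }) => {
    emit({ topic: "title.uppercased", data: { title: title.toUpperCase() } });
  },
};

const titleEvents = runStep(titleStep, { title: "hello motia" });
// titleEvents holds one "title.uppercased" event whose data is { title: "HELLO MOTIA" }
```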


With that understanding in mind, let's start building our content creation workflow... 👇

Step 1: Entry point (API)

We start our content generation workflow by defining an API step that takes in a URL from the user via a POST request.
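Here's a rough sketch of what such an entry point could look like. The route path `/generate-content`, the `article.requested` topic and all type names are assumptions for illustration, not taken from the project. The handler validates the POST body and, if the URL looks like an absolute http(s) address, hands it off to the scraping step through an event.

```typescript
// Hypothetical shapes; the route path and topic name are assumptions, not taken from the thread.
interface ApiRequest {
  body: unknown;
}

interface ApiResponse {
  status: number;
  body: { message: string };
}

interface StepEvent {
  topic: string;
  data: unknown;
}

// Config: marks this step as an HTTP endpoint and declares the event it emits.
const generateApiConfig = {
  type: "api",
  name: "GenerateContentApi",
  method: "POST",
  path: "/generate-content",
  emits: ["article.requested"],
} as const;

// Only accept absolute http(s) URLs.
function isHttpUrl(value: unknown): value is string {
  if (typeof value !== "string") return false;
  try {
    const parsed = new URL(value);
    return parsed.protocol === "http:" || parsed.protocol === "https:";
  } catch {
    return false; // new URL throws on malformed input
  }
}

// Handler: validate the POST body, then pass the URL to the scraping step via an event.
function generateApiHandler(req: ApiRequest, emit: (event: StepEvent) => void): ApiResponse {
  const url = (req.body as { url?: unknown } | null | undefined)?.url;
  if (!isHttpUrl(url)) {
    return { status: 400, body: { message: "Expected a JSON body with an http(s) 'url' field" } };
  }
  emit({ topic: generateApiConfig.emits[0], data: { url } });
  return { status: 200, body: { message: `Content generation started for ${url}` } };
}
```

In the real framework, the runtime supplies the parsed request and `emit`. Here both are passed in explicitly so the handler can be called directly, for example with `{ body: { url: "https://example.com/post" } }`.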


Step 2: Web scraping

This step scrapes the article content using Firecrawl and emits an event that triggers the next step in the workflow.

Steps can be chained in sequence, so the output of one step becomes the input of the next.
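The chaining can be sketched with a tiny in-memory event bus. This is a hypothetical stand-in for Motia's runtime, kept synchronous for brevity, and the Firecrawl call is replaced by a hypothetical `fakeScrape` function so the focus stays on how one step's emitted event becomes the next step's input. Topic names are assumptions.

```typescript
interface StepEvent {
  topic: string;
  data: unknown;
}

type Subscriber = (data: unknown, emit: (event: StepEvent) => void) => void;

// Minimal in-memory event bus (not Motia's): emitting a topic runs every step subscribed to it.
class EventBus {
  private subscribers: Record<string, Subscriber[]> = {};
  readonly topicLog: string[] = [];

  subscribe(topic: string, fn: Subscriber): void {
    const list = this.subscribers[topic] || [];
    list.push(fn);
    this.subscribers[topic] = list;
  }

  emit(event: StepEvent): void {
    this.topicLog.push(event.topic);
    for (const fn of this.subscribers[event.topic] || []) {
      // Each subscriber gets an emit function, so it can trigger the next step in turn.
      fn(event.data, (next) => this.emit(next));
    }
  }
}

// Hypothetical stand-in for Firecrawl; a real scrape is asynchronous and returns page markdown.
function fakeScrape(url: string): string {
  return `# Example article\n\nScraped from ${url}`;
}

const bus = new EventBus();

// Scraping step: consumes the API step's output and emits markdown for the next step.
bus.subscribe("article.requested", (data, emit) => {
  const { url } = data as { url: string };
  emit({ topic: "article.scraped", data: { url, markdown: fakeScrape(url) } });
});

// Next step in the chain (stands in for the content agents).
const scrapedMarkdown: string[] = [];
bus.subscribe("article.scraped", (data) => {
  scrapedMarkdown.push((data as { markdown: string }).markdown);
});

bus.emit({ topic: "article.requested", data: { url: "https://example.com/post" } });
// bus.topicLog is now ["article.requested", "article.scraped"]
```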


Step 3: Content generation

The scraped content is fed to the X and LinkedIn agents, which run in parallel and generate curated posts.

We define all our prompting and AI logic in the step's handler, which runs automatically whenever the step is triggered.
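Here's a minimal sketch of the two agents running in parallel. It assumes DeepSeek-R1 has been pulled into Ollama as `deepseek-r1` and is served on Ollama's default port 11434 through its `/api/generate` endpoint. The prompts, the `agents` object and `generatePosts` are hypothetical, and the project's actual handler may be structured differently. `Promise.all` starts both generations at once, and `stripThinking` removes the `<think>...</think>` reasoning block that DeepSeek-R1 may put before its answer.

```typescript
// Injectable text generator, so the agents can be tested without a running model.
type Generate = (prompt: string) => Promise<string>;

// Assumption: Ollama running locally on its default port.
const OLLAMA_URL = "http://localhost:11434/api/generate";

// Calls the locally served model through Ollama's non-streaming /api/generate endpoint.
async function ollamaGenerate(prompt: string): Promise<string> {
  const res = await fetch(OLLAMA_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model: "deepseek-r1", prompt, stream: false }),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const json = (await res.json()) as { response: string };
  return json.response;
}

// DeepSeek-R1 may prefix its answer with a <think>...</think> reasoning block.
function stripThinking(text: string): string {
  return text.replace(/<think>[\s\S]*?<\/think>/g, "").trim();
}

// Hypothetical prompt templates, one per agent.
const agents = {
  twitter: (md: string) => `Write a concise, engaging X thread based on this article:\n\n${md}`,
  linkedin: (md: string) => `Write a professional LinkedIn post based on this article:\n\n${md}`,
};

// Both agents start immediately; Promise.all waits for both drafts.
async function generatePosts(markdown: string, generate: Generate = ollamaGenerate) {
  const [twitter, linkedin] = await Promise.all([
    generate(agents.twitter(markdown)),
    generate(agents.linkedin(markdown)),
  ]);
  return { twitter: stripThinking(twitter), linkedin: stripThinking(linkedin) };
}
```

With Ollama running (after `ollama pull deepseek-r1`), `generatePosts(markdown)` resolves to `{ twitter, linkedin }` drafts. Passing a different `generate` function swaps the model out, which also makes the agents easy to test.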


Step 4: Scheduling

After the content is generated, we save it as a draft in Typefully, where we can easily review our social media posts.

Motia also lets us mix and match languages within the same workflow, which gives us a lot of flexibility.

Check out this TypeScript code 👇


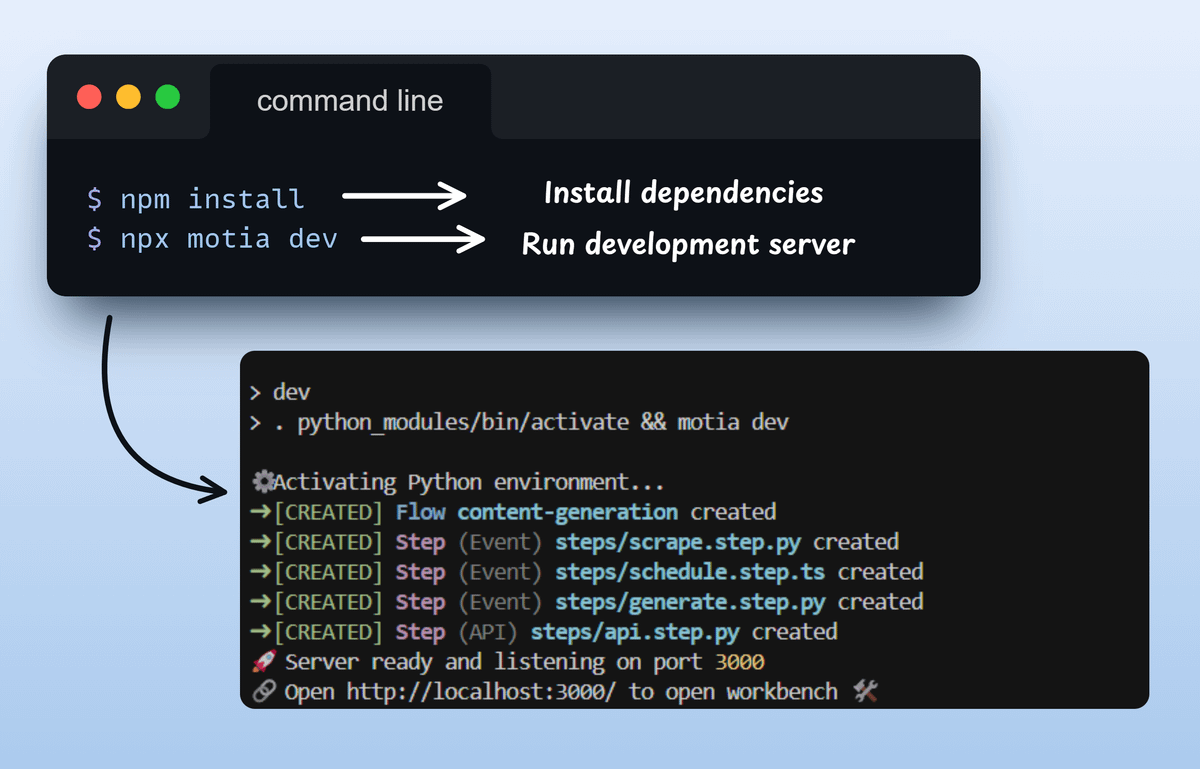
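When steps in different languages share a workflow, the event payload between them effectively becomes a contract. Here's a minimal sketch, assuming the agent steps were written in Python and emitted a JSON payload with hypothetical `url`, `twitter` and `linkedin` fields. It shows how a TypeScript scheduling step could validate that payload before drafting. `isGeneratedContent`, `toDrafts` and the `content.generated` topic are illustrative names, and the actual Typefully API call is left out.

```typescript
// Hypothetical payload a Python agent step might emit; field and topic names are assumptions.
interface GeneratedContent {
  url: string;
  twitter: string;
  linkedin: string;
}

// Payloads cross the language boundary as JSON, so check the shape at runtime.
function isGeneratedContent(value: unknown): value is GeneratedContent {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return typeof v.url === "string" && typeof v.twitter === "string" && typeof v.linkedin === "string";
}

interface Draft {
  platform: "x" | "linkedin";
  content: string;
  source: string;
}

// Turns one validated payload into the drafts a scheduling step would send to Typefully.
function toDrafts(json: string): Draft[] {
  const parsed: unknown = JSON.parse(json);
  if (!isGeneratedContent(parsed)) {
    throw new Error("content.generated payload has an unexpected shape");
  }
  return [
    { platform: "x", content: parsed.twitter, source: parsed.url },
    { platform: "linkedin", content: parsed.linkedin, source: parsed.url },
  ];
}

// What a Python step's json.dumps(...) output could look like.
const pythonPayload = '{"url": "https://example.com/post", "twitter": "Thread text", "linkedin": "Post text"}';
const drafts = toDrafts(pythonPayload);
```

Validating at the boundary means a renamed field on the Python side fails loudly in the scheduling step, rather than producing an empty draft.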

After defining our steps, we install the required dependencies with `npm install` and start the Motia workbench with `npm run dev`.


The Motia workbench provides an interactive UI that helps us build, monitor, and debug our flows.

With one click, you can also deploy it to the cloud! 🚀


This project is built using Motia!

Motia is a unified system where APIs, background jobs, events, and agents are just plug-and-play steps.

It's 100% open-source. Check it out 👇

github.com/MotiaDev/motia


To summarise, here's how it works:

- User submits a URL to scrape

- Firecrawl scrapes the content and converts it to markdown

- Twitter and LinkedIn agents run in parallel to generate content

- Generated content gets scheduled via Typefully


If you found it insightful, reshare with your network.

Find me → @akshay_pachaar ✔️

For more insights and tutorials on LLMs, AI Agents, and Machine Learning!


https://x.com/akshay_pachaar/status/1945469037888307275
