🧵 Explaining AI in a way that even people with no programming background can understand:

https://twitter.com/srchpfrn/status/1945119102860730394

In this thread, I’ll explain:

- Basic Concepts

- Development

- Application Contexts

- Challenges

Is AI just ChatGPT or text/image generators?

No.

Artificial Intelligence is a broad field.

Simply put, it’s the ability to make a machine perform actions that, until now, only humans could do.

But how is that possible if machines don’t understand texts or images?

Because they operate in their own language — binary — made up only of zeros and ones (0 and 1).
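
To make "zeros and ones" concrete, here is a minimal sketch of how a short piece of text looks once it is stored as bits. It assumes UTF-8 encoding, and the helper name `to_binary` is made up for this example:

```python
def to_binary(text: str) -> str:
    """Return the UTF-8 bytes of `text` as groups of 8 bits."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


print(to_binary("AI"))  # 01000001 01001001
```

Each group of eight digits is one byte: the letter "A" is stored as the number 65, and "I" as 73.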

Programming languages work as the “interpreter” between humans and machines.

We write code, it’s translated into binary, and the processor (the device’s “brain”) can execute it.

This brings us to Machine Learning.

The name says it all: it’s the process of teaching a computer to recognize patterns and reproduce them in new outputs.

The difference from humans? Machines need MUCH more data to learn a pattern, and huge computational power to store and process all of it.

It’s as if your phone needed infinite memory — and never crashed.

That’s why, when we use Netflix or Spotify, we get recommendations.

Based on your usage, and that of millions of other users, the system guesses what you’ll probably like.

Same for shopping sites.

They “know” what to suggest because they analyze your behavior.
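
Real recommendation systems are far more elaborate, but the core idea of "people with similar taste liked this" fits in a few lines. This is only a toy sketch: the users, titles, and the `recommend` function are all made up, and similarity is simply the number of shared likes.

```python
from collections import Counter

# Hypothetical viewing history: user -> set of titles they liked.
history = {
    "ana": {"Dark", "Black Mirror", "Stranger Things"},
    "bruno": {"Dark", "Black Mirror", "Severance"},
    "carla": {"Bridgerton", "The Crown"},
}


def recommend(user: str, history: dict) -> list:
    """Suggest titles liked by users whose taste overlaps with `user`."""
    mine = history[user]
    scores = Counter()
    for other, theirs in history.items():
        if other == user:
            continue
        overlap = len(mine & theirs)  # shared likes act as a similarity score
        for title in theirs - mine:   # only titles the user has not liked yet
            scores[title] += overlap
    return [title for title, score in scores.most_common() if score > 0]


print(recommend("ana", history))  # ['Severance']
```

Ana and Bruno share two titles, so Bruno's remaining favorite is suggested. Carla shares nothing with Ana, so her titles are not.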

That’s also why websites ask you to accept cookies — so your access, usage, and browsing data can be stored and used for things like recommendation systems.

There are also classification systems:

Does your email go straight to spam?

Machine Learning.

Facial recognition?

Machine Learning + Computer Vision.

Auto-correct?

Models predicting words based on previous ones.
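
The auto-correct case is the easiest one to sketch. A very old and very simple approach counts which word most often follows another (a "bigram" model). The tiny corpus and function names below are invented for illustration, and real keyboards use far larger models:

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for previous, following in zip(words, words[1:]):
        counts[previous][following] += 1
    return counts


def predict_next(counts: dict, word: str):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None


corpus = "good morning team . good morning everyone . good night team"
model = train_bigrams(corpus)
print(predict_next(model, "good"))  # morning
```

Note that this model only ever looks at one previous word, which is exactly the limitation discussed next.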

But there’s a problem…

If you ask a machine to read letter by letter, word by word, without considering the whole, you’re asking it to forget everything at every step — like when you read distractedly and have to flip back pages to remember what you read.

That’s when, in 2017, Google scientists asked:

“What if a machine could pay attention?”

And that’s how Transformers, models built around attention layers, were born.

Instead of predicting just 2 or 3 words, Transformers allow the machine to look at the entire text and understand what makes sense.

Example:

“The girl, who hurt her knee running from a dog, is crying…”

Before:

The AI might suggest “is crying tears.”

Now:

It can suggest “and ran to her mother.”

This was a game changer. It paved the way for Generative AI — a field focused on models capable of creating new things.
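
For the curious, the "paying attention" step can be sketched in plain Python. This is scaled dot-product attention for a single query, the basic building block of a Transformer. The two-number "word meanings" are made up, and a real model learns them and works with thousands of dimensions:

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # How well does the query match each word in the text?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the values, giving more weight to the better matches.
    size = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(size)]
    return weights, output


# Made-up vectors standing in for three words of a sentence.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = keys
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights)  # the first and third words get more weight than the second
```

Every word gets some weight, so nothing in the text is forgotten. The model just decides how much each part matters for the word it is currently working on.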

🧵 Part 2 — Models, Market, and Computational Power

What are AI “models”?

Simply put, models are specialists trained on specific datasets — like teachers:

• One who studied History all their life

• One who specialized in Math

• One in Physics…

This is why machine behavior is often compared to the human brain.

Neural networks learn because, like humans, they adjust the “weights” of information — giving more weight to important data and minimizing less important ones.

That’s why each AI is good at specific tasks.
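
Here is a minimal sketch of what "adjusting a weight" means, assuming the simplest possible model: one weight, a hidden rule of y = 3 * x, and plain gradient descent. The data and function name are invented, and real networks do the same thing with billions of weights at once:

```python
# Toy data that follows the hidden rule y = 3 * x.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]


def train(data, steps=200, learning_rate=0.01):
    """Learn a single weight so that weight * x is close to y."""
    weight = 0.0  # start knowing nothing
    for _ in range(steps):
        # Average slope of the squared error with respect to the weight.
        gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        # Nudge the weight in the direction that reduces the error.
        weight -= learning_rate * gradient
    return weight


weight = train(data)
print(round(weight, 3))  # 3.0
```

Nobody told the program the rule. It found the weight by repeatedly making a guess, measuring the error, and correcting itself.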

Today, one of the best generalist models — the kind that knows a bit of everything — is ChatGPT.

Other examples:

• Claude and Gemini — great for coding

• Llama (Meta): popular for conversation (its chat versions are fine-tuned on dialogue)

But why do we call these models “accessible”?

Because training an AI requires enormous computational power, and using one requires significant processing too.

Who has that?

Big Tech: companies with the structure to train and maintain these models.

They dominate the market.

And why do some let you use AI for free?

Because, in practice, it’s not free.

When you use a free service, your data may be used to further train the models.

If it’s free — you are the product.

If you pay, you may have the option to opt out of data sharing, or at least be clearly informed.

These are Closed Models — paid or commercial.

On the other hand, there are Open Source Models, released openly by companies, research labs, or independent communities, so you can use them on your own device, without corporate data collection.

But realistically, most people won’t easily use them. Why?

Because they require:

• Powerful hardware

• Technical knowledge

• Complex setup and adjustments

Remember those chips causing trade wars between countries?

Yep — the same ones used for AI applications.

We’re talking GPUs — like those in gaming PCs — but for AI, they need to be special (and way more expensive).

Today in Brazil, a basic AI-capable GPU costs around R$ 7,000 — and that’s far from the best option.

A decent one for starters? Rarely under R$ 20,000.

And it’s not just the GPU — the whole setup must handle the demand, plus the high energy consumption.

🧵 Part 3 — Why Does AI Fail?

Overfitting, Hallucination, and the Role of the Prompt

Even trained on billions of data points, AI still fails. Why?

Two main reasons:

1) AI Learning (and Overfitting Risk)

It’s like memorizing a text without really understanding it.

You read it so many times you can recite it — but can’t explain it.

AI does the same when it gives too much weight to certain info, being “over-trained” on it — that’s overfitting.

If you ask for A but it’s overfitted on B — it will always give you B.
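
A toy way to see the difference between memorizing and understanding. Both "models" below are hand-written stand-ins, not trained networks: `Memorizer` plays the overfitted model that stored its training answers, and `RuleLearner` plays a model that captured the actual pattern (even vs. odd).

```python
# Examples of a hidden rule: "is the number even?"
train_set = {2: True, 3: False, 4: True, 7: False}
test_set = {10: True, 11: False, 12: True}  # numbers never seen in training


class Memorizer:
    """Stand-in for an overfitted model: it recites what it saw."""

    def __init__(self, examples):
        self.table = dict(examples)

    def predict(self, x):
        # Anything outside the memorized table gets the same fallback answer.
        return self.table.get(x, False)


class RuleLearner:
    """Stand-in for a model that captured the underlying pattern."""

    def predict(self, x):
        return x % 2 == 0


def accuracy(model, dataset):
    """Share of examples the model answers correctly."""
    correct = sum(model.predict(x) == y for x, y in dataset.items())
    return correct / len(dataset)


memorizer = Memorizer(train_set)
print(accuracy(memorizer, train_set))     # 1.0 on what it memorized
print(accuracy(memorizer, test_set))      # much lower on new numbers
print(accuracy(RuleLearner(), test_set))  # 1.0
```

A perfect score on the training data says nothing by itself. What matters is how the model behaves on data it has never seen.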

2) The Prompt — Your Communication with AI

The prompt is the command you give.

And AI can’t read your mind.

If the prompt is vague or poorly structured, you’ll likely get random or nonsensical results.

It’s like your boss asking for a project without details — you’ll try… but probably deliver something wrong.

AI does the same — that’s hallucination.

It tries to fulfill the task even if it doesn’t know what it is — often guessing wrong.

That’s why Prompt Engineering is crucial.

Example:

📝 “Draw a sky.”

vs.

📝 “Draw a blue sky at noon on a summer day in Rio de Janeiro.”

The second will get you a much better result.
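
One practical habit is to treat a prompt as a set of fields to fill in rather than a single sentence. A small sketch of that idea, where the `build_prompt` helper and its fields are just one possible breakdown and not a standard:

```python
def build_prompt(subject, *, color=None, time_of_day=None, season=None, place=None):
    """Assemble a drawing prompt from optional details."""
    parts = [f"Draw a {color} {subject}" if color else f"Draw a {subject}"]
    if time_of_day:
        parts.append(f"at {time_of_day}")
    if season:
        parts.append(f"on a {season} day")
    if place:
        parts.append(f"in {place}")
    return " ".join(parts) + "."


print(build_prompt("sky"))
# Draw a sky.
print(build_prompt("sky", color="blue", time_of_day="noon",
                   season="summer", place="Rio de Janeiro"))
# Draw a blue sky at noon on a summer day in Rio de Janeiro.
```

Every field you leave empty is a decision you are handing over to the model.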

But even with billions of data points and anti-overfitting techniques… Why does AI still seem “dumb” sometimes?

Because it all depends on the model, training, and — most of all — context of use.

🧵 Part 4.1 — Image Generation: It’s Not Just Pushing a Button

Generating images with AI isn’t about pushing a button.

It’s not about typing random stuff and expecting amazing results.

It requires:

• Knowledge

• Intention

• Control

Why?

Because the model doesn’t think.

It only responds to what you ask — within the limits you know how to control.

If you don’t know what to ask, how to ask, and how to adjust — you’ll always get:

✅ Something random

✅ Something irrelevant

✅ Or something generic like everything else

Using AI for image generation takes work.

It’s trial, error, adjustment.

You test, redo, tweak details, adjust again.

And you need to understand:

• What you’re asking for

• Why you’re asking for it

• What result to expect

Without this control, all you create is another pile of random pixels.

The control is never in the AI.

It’s in the user.

People who don’t get this confuse “using AI” with “pushing a button and hoping for the best.”

🧵 Part 4.2 — What Happens Behind Image Generation? The Invisible Math

AI works with mathematical probabilities — not inspiration.

When you ask for an image, the model goes through millions of possible pixel, shape, and color combinations — trying to deliver what “makes sense” based on its training.

But… makes sense to whom?

To math.

AI doesn’t understand aesthetics, doesn’t feel, doesn’t truly create.

It calculates the probability that a combination fits your prompt.

All within a random space called noise, which gets “cleaned up” during the process.
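
The "start from noise and clean it up step by step" idea can be shown with a deliberately fake example. In a real image model, a neural network predicts what to remove at each step. Here that prediction is faked by peeking at a known target, so this only illustrates the loop, not an actual diffusion model. All names are made up.

```python
import random


def denoise(target, steps=20, seed=0):
    """Move a random 'image' toward `target` a little at every step."""
    rng = random.Random(seed)
    image = [rng.random() for _ in target]  # start from pure noise
    for step in range(steps):
        remaining = steps - step
        # Remove an equal share of the remaining distance to the target.
        image = [
            pixel + (goal - pixel) / remaining
            for pixel, goal in zip(image, target)
        ]
    return image


target = [0.0, 0.5, 1.0]  # a tiny three-pixel "image"
print(denoise(target))    # lands (almost exactly) on the target values
```

With fewer steps, each correction is bigger and coarser. This is one reason the number of steps matters when you generate an image.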

That’s why we use mathematical parameters to control:

• Guidance/CFG: how much the prompt influences the result

• Denoise: how much the AI can change or stick to the input

• Sampler/Scheduler: how the model processes each step
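
Two of these parameters boil down to very small formulas. The sketch below assumes the common "classifier-free guidance" formulation for CFG, and a simple "fraction of the steps" reading for denoise, which is how some image-to-image tools treat it (others may differ). The function names are invented for this example.

```python
def apply_guidance(unconditional, conditional, guidance_scale):
    """Blend the 'no prompt' and 'with prompt' predictions.

    scale 0 ignores the prompt, scale 1 follows it,
    and higher values push even further in the prompt's direction.
    """
    return [
        u + guidance_scale * (c - u)
        for u, c in zip(unconditional, conditional)
    ]


def steps_to_run(total_steps, denoise):
    """How many steps actually change the input image (assumed mapping)."""
    if not 0.0 <= denoise <= 1.0:
        raise ValueError("denoise must be between 0 and 1")
    return min(int(total_steps * denoise), total_steps)


print(apply_guidance([0.0, 1.0], [1.0, 1.0], 2.0))  # [2.0, 1.0]
print(steps_to_run(30, 0.5))                        # 15
```

A very high guidance scale exaggerates the prompt's influence, and a low denoise value leaves most of the original image untouched.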

Each one radically changes the outcome.

If you don’t adjust these well:

• The AI ignores your prompt

• The result is meaningless noise

• Or the image is generic and incoherent

This entire process follows a logic called a pipeline.

You build a flow, define the order of operations, and apply the correct adjustments.

If you mess up the order or parameters, everything falls apart.
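
A pipeline is just a list of steps where each one depends on what the previous ones produced. This toy version uses three made-up stages (no real model is loaded) to show why the order matters:

```python
from functools import reduce


def run_pipeline(stages, state):
    """Pass `state` through each stage, in order."""
    return reduce(lambda current, stage: stage(current), stages, state)


def load_model(state):
    return {**state, "model": "hypothetical-base-model"}


def encode_prompt(state):
    if "model" not in state:
        raise RuntimeError("encode_prompt needs a loaded model")
    return {**state, "encoded": state["prompt"].lower().split()}


def generate(state):
    if "encoded" not in state:
        raise RuntimeError("generate needs an encoded prompt")
    return {**state, "image": f"<image for {' '.join(state['encoded'])}>"}


result = run_pipeline([load_model, encode_prompt, generate], {"prompt": "Blue sky"})
print(result["image"])  # <image for blue sky>
```

Swapping the order, for example running `generate` first, fails immediately because the data that step expects does not exist yet.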

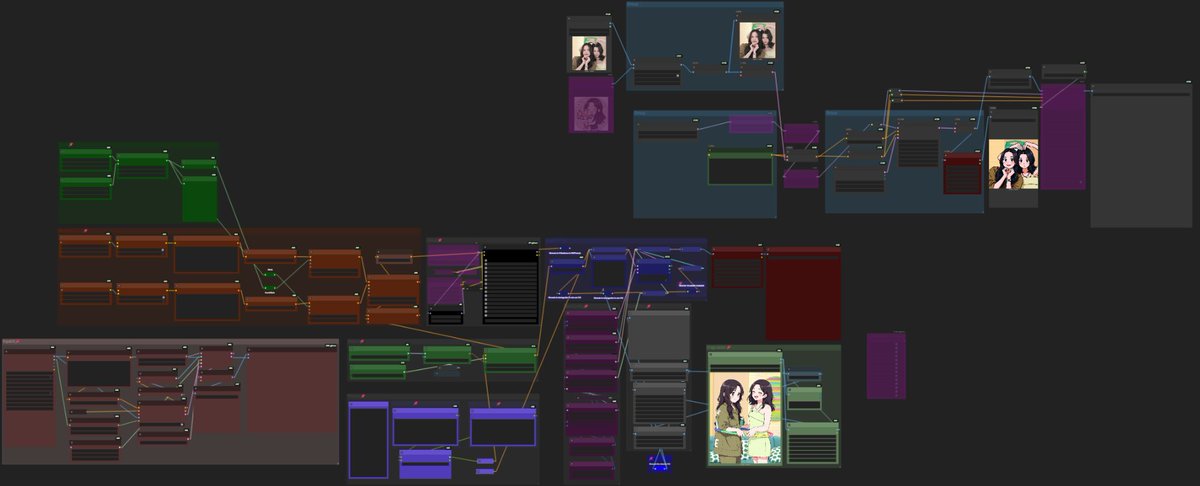

Example of a structured pipeline — from left to right:

• Inpaint (Basic editing)

• Mask

• Regional LoRA Application

• Setup

• Style Model

• Prompt

• Generation Config

• ControlNet

• Generation Control

• Context Flow

• Result Upscale

(personal image)

🧵 Part 4.3 — Combining Styles, LoRAs, and the Real Challenge of Control

You can use multiple LoRAs (small add-on models, often trained to capture a style) at once, creating a “style soup.”

Example:

I want Van Gogh’s strokes, an impressionist touch, and a charcoal vibe.

A style that maybe doesn’t even officially exist.

But for the AI to handle this, I need total control of my request.
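
Under the hood, a LoRA is a small correction added on top of the base model's weights, and mixing several LoRAs means adding several corrections, each with its own strength. A minimal sketch with tiny 2x2 matrices, where all the numbers are invented and a real model has millions of weights per layer:

```python
def outer(b, a):
    """Low-rank update: the outer product of two small vectors."""
    return [[bi * aj for aj in a] for bi in b]


def merge_loras(base, loras):
    """Return base weights plus each LoRA update scaled by its strength."""
    merged = [row[:] for row in base]  # copy, so the base stays untouched
    for strength, b, a in loras:
        delta = outer(b, a)
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += strength * value
    return merged


base = [[1.0, 0.0], [0.0, 1.0]]
loras = [
    (0.5, [1.0, 0.0], [0.0, 2.0]),  # e.g. a "brush stroke" style at half strength
    (1.0, [0.0, 1.0], [1.0, 0.0]),  # e.g. a "charcoal" style at full strength
]

print(merge_loras(base, loras))  # [[1.0, 1.0], [1.0, 1.0]]
```

Because the corrections are simply added together, two strong LoRAs can fight over the same weights, which is one reason not every combination blends well.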

And with paid models?

You don’t have that control.

On customization websites, you must be careful with what you upload.

I don’t share sensitive images or data — because I don’t control what they’ll do with them.

For me: If I don’t own the process, I don’t own what I create.

To create something truly unique, I need:

• The right LoRAs

• A fine-tuned prompt

• The correct model

And even so… it might not be enough.

Each model reacts differently — and not everything blends well.

🧵 Part 4.4 — Code, Control, and Responsibility

Let’s go back to the core: Code.

The computer only understands 0 and 1.

And even if AI “understands” pixels, everything relies on math applied to code.

Thousands of lines of code — hard to follow — dictate every step of the process.

The Open Source community created tools that work like apps but run 100% locally, with no external server connection.

This means:

✅ More control

✅ More responsibility

There are also machine rental services — but they’re expensive and not always stable.

It’s like using a virtual cybercafé — you don’t control everything.

Many Open Source models ship with built-in filters against illegal or harmful content (pornography, racism, etc.).

But a bad actor with technical skills can bypass those.

In the end?

The problem isn’t AI. It’s character.

Do low-code or no-code platforms help?

Yes — but without logic, organization, and correct calculations…

You won’t generate anything meaningful.

That applies to everything:

• Image size

• Number of steps

• Batch settings
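
A rough way to reason about these three settings together is to count "pixel-steps". This is only a first-order estimate with an invented helper: actual cost depends on the model and hardware, and usually grows faster than this for large images. The multiple-of-8 check reflects a common constraint in latent diffusion tools, assumed here rather than universal.

```python
def generation_cost(width, height, steps, batch_size):
    """Rough work estimate: pixels x steps x images per batch."""
    if width % 8 or height % 8:
        raise ValueError("width and height should be multiples of 8")
    return width * height * steps * batch_size


small = generation_cost(512, 512, steps=20, batch_size=1)
large = generation_cost(1024, 1024, steps=20, batch_size=1)
print(large / small)  # 4.0
```

Doubling both sides of the image quadruples the work, before you even touch the step count or batch size.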

🧵 Part 4.5 — Advanced Control: Beyond the Prompt

Besides basic parameters, there are tools for even more control:

• ControlNet: to guide poses and structures

• Regional Control: to define actions in each image area

Plus specific controls for:

• Light

• Emotion

• Style

• Facial expressions

• 3D work (Blender)

The possibilities are almost endless — but only if you know what you’re doing.

Without creativity and the ability to guide AI, generating authenticity is impossible.

Same for audio and video.

The model reads waves or frames, recognizes patterns, and generates new outputs.

But again:

The secret is always training + computing power + technical mastery.

🧵 Part 5 — Ethics, Risks, and Impacts

Why the AI debate goes way beyond “copying” and “creativity”

Why so much intolerance towards AI-generated content?

First — misinformation.

Second — because many people don’t understand (or pretend not to) that AI doesn’t copy ready-made images or patch others together.

It creates based on mathematical patterns.

What AI “sees” is numbers — not artworks.
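
To make "numbers, not artworks" tangible: this is what a tiny grayscale image looks like to a program. The 3x3 image and helper names are made up for illustration.

```python
# A hypothetical 3x3 grayscale image: 0 is black, 255 is white.
image = [
    [0, 255, 0],
    [255, 255, 255],
    [0, 255, 0],
]


def normalize(image):
    """Scale pixel values to the 0..1 range models usually work with."""
    return [[pixel / 255 for pixel in row] for row in image]


def brightness(image):
    """Average pixel value: one simple 'pattern' a program can measure."""
    pixels = [pixel for row in image for pixel in row]
    return sum(pixels) / len(pixels)


print(normalize(image)[0])  # [0.0, 1.0, 0.0]
print(brightness(image))
```

A human sees a white cross on a black background. The program sees nine numbers and the statistics it can compute from them.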

Yes, it can resemble human styles.

Yes, this raises serious questions about copyright, ethics, and transparency.

But no — it’s not direct plagiarism.

“Does using AI mean people don’t make an effort?”

Let’s think:

When everything was drawn by hand, work took way longer.

Then editing software came along — but that didn’t make creative work worthless.

It’s like autotune in music:

Some singers use it — and they’re still artists.

Using tools doesn’t devalue anyone’s work.

Is it possible to use AI ethically?

It depends.

Creating with transparency and awareness?

Totally valid.

Faking authorship or copying someone else’s style without credit?

Serious problem.

And worse — using AI for crimes or to harm people.

AI doesn’t feel. It has no intention. It has no context.

It’s just a tool.

What turns a prompt into real art is human perspective.

Creativity isn’t stolen. It’s expanded — if you know how to use it.

And what about Deepfakes?

Bad actors have always existed — with or without AI.

What can we do as users?

• Avoid oversharing online

• Don’t answer suspicious calls

• Don’t share personal data randomly

• Change passwords regularly

My example:

I don’t post face pictures on Instagram.

Anyone could take an image and — even without advanced tools — use face swap apps or manipulate it.

Public figures are even more exposed.

It’s pointless to just say “don’t use the artist’s photo with AI” — because if the AI doesn’t know it yet, it can learn eventually.

What matters is conscious and respectful use.

My personal view:

If something illegal happens, report it to authorities, platforms, and — if necessary — fans.

Transparency is essential.

What about environmental impact?

AI models consume:

• Energy

• Water (for cooling)

• Natural resources

Real example:

ChatGPT consumes around 2 liters of water every 50 queries, just for cooling.

When the Studio Ghibli trend went viral, in 1 hour, 1 million people signed up to use ChatGPT — generating around 3 million images per day.

Water consumption?

About 7,500 liters per day — equivalent to what 100 people use daily.
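
These figures come from different estimates, so they do not have to line up. A quick sketch using only the numbers quoted in this thread makes the assumptions visible. The helper names are made up, and treating one image as one text query is an assumption:

```python
# Figures quoted in this thread (estimates, not measurements).
LITERS_PER_50_QUERIES = 2
IMAGES_PER_DAY = 3_000_000
QUOTED_LITERS_PER_DAY = 7_500
PEOPLE_EQUIVALENT = 100


def liters_at_query_rate(images):
    """Daily water use if every image cost as much as one text query."""
    return images * LITERS_PER_50_QUERIES / 50


def implied_ml_per_image(liters, images):
    """Per-image water use implied by a daily total, in milliliters."""
    return liters * 1000 / images


print(liters_at_query_rate(IMAGES_PER_DAY))                         # 120000.0
print(implied_ml_per_image(QUOTED_LITERS_PER_DAY, IMAGES_PER_DAY))  # 2.5
print(QUOTED_LITERS_PER_DAY / PEOPLE_EQUIVALENT)                    # 75.0
```

The per-query figure works out to 40 ml per request, while the daily total implies about 2.5 ml per image, so treat all of these as rough orders of magnitude rather than exact measurements.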

And CO2 emissions?

Estimates range from 5 g to 50 g per generated image. Added up over a viral trend, that has been compared to driving 5,000 km or charging 150,000 smartphones.

My perspective as a professional, student, and AI researcher:

The future of AI will belong to those who make it more sustainable.

💬 This is just an introduction.

I’ll keep sharing practical examples of workflows, images, and code.

I’m open to questions, criticism, and debate.

And no — this series was NOT written by ChatGPT.
