Got back last night from the World AI Conference in Shanghai. Megathread with photos/videos/thoughts from the conf itself + giant expo next door (ended up going back to the expo 3 times bc there were so many interesting booths)

First up: robots robots robots

(yes, inc Unitree)

The first couple of robots I saw were not very impressive: this fella (from Zhiyuan 智元机器人) had pride of place in the main conference venue, but his handshake game could use some work

This dog was notably more of a scamper-er than I've seen before, though either the dog or its human controller was not great at judging backflip distance

And this robot was on display very prominently near the main entrance, but didn't exactly blow me away. Its job is to move the red containers onto the shorter loop, but it struggled with the highly complex situation of two containers arriving in close succession

Robots doing basic choreography is usually 🥱, but these ones have pompoms!

CHINA POISED TO WIN THE RACE TO AI CHEERLEADERS, ONCE BASTION OF US DOMINANCE, you heard it here first

And their K9 friends seem to be better at backflips than the scamperer above...or rather, their human operators are better at judging when to hit the “do a backflip” button

I was more interested in the (minority of) robots that were operating autonomously. This guy could play hacky sack tic tac toe, sorta

Great product placement from Pepsi on this robot, which could autonomously stack the cans as well as (slowly) find your hand, put a can in it, and then release the can once you closed your hand

Zhipu (apparently rebranded Z.AI in English?) is not very widely known outside China, but they’re a company to watch. Here they’re showing off how their AutoGLM model can steer this robot to operate a (simple hold-cup-press-button) coffee machine. Not a very hard robotics task, but if the model didn’t need too much tuning for this use case then it’s neat.

(The coffee was not good, but that’s not the robot’s fault.)

One more autonomous robot before Unitree: this package labeler was solid. Not super fast, but 2 separate arms flexibly working together on a more industrial task. There were a handful of other industrial-style robots on display in the expo—less flashy, but more useful (for now)

OK, Unitree! Their booth was absolutely mobbed: 5 people deep on all sides, with almost everyone filming or taking photos. Much of the time there didn’t seem to be anything too thrilling going on, e.g. this lady rolling around (ride-on dog = the new ride-on mower?)

But Unitree did have the demo that impressed me most at the expo—two humanoids boxing. I’m actually not sure how much of this was autonomous vs. remote operated, but at a minimum the balance/stabilization must be automatic, and that’s impressive. Not perfect though (see 0:50)

Also notable in ⬆️—how they led the robots out into the crowd at the end of the demo. Even if they were being remotely operated, it stuck out to me whenever robots were allowed to move through the (at times quite thick) crowds

More robots in the crowd, though these dogs are less surprising to see there, since 1) they’re definitely being remotely operated, 2) being 4-legged, they’re much less likely to fall on someone, and 3) they’re not really doing much

And now for something completely different, some highly realistic robots, starting with this one, which I’m like… 80% sure is a robot rather than a human in costume? (At the end you glimpse a man in a gray shirt who I think might be holding a controller.)

Final 2 robots for now are from another front in the AI wars that China is definitely winning: highly realistic robots dressed as Chinese historical figures

(Anyone recognize whether these are supposed to be specific people? I'm not sure)

A few broader thoughts:

1) It was very fun to get to be around so many robots in person, and in slightly less controlled settings than you see in demo videos. But at a technical level, I was impressed rather than blown away. I expect Google, π, Figure, and of course Boston Dynamics could put on a better show if they wanted to.

(Links:

deepmind.google/discover/blog/…

physicalintelligence.company/blog/pi05

figure.ai

bostondynamics.com )

2) I was intrigued by the number of booths advertising a “DeepSeek 一体机”/“all-in-one machine”: a ready-to-go server with an AI chip and DeepSeek preloaded for you to use. With amazing timing, @jjding99's ChinAI newsletter this week was on exactly this 👀

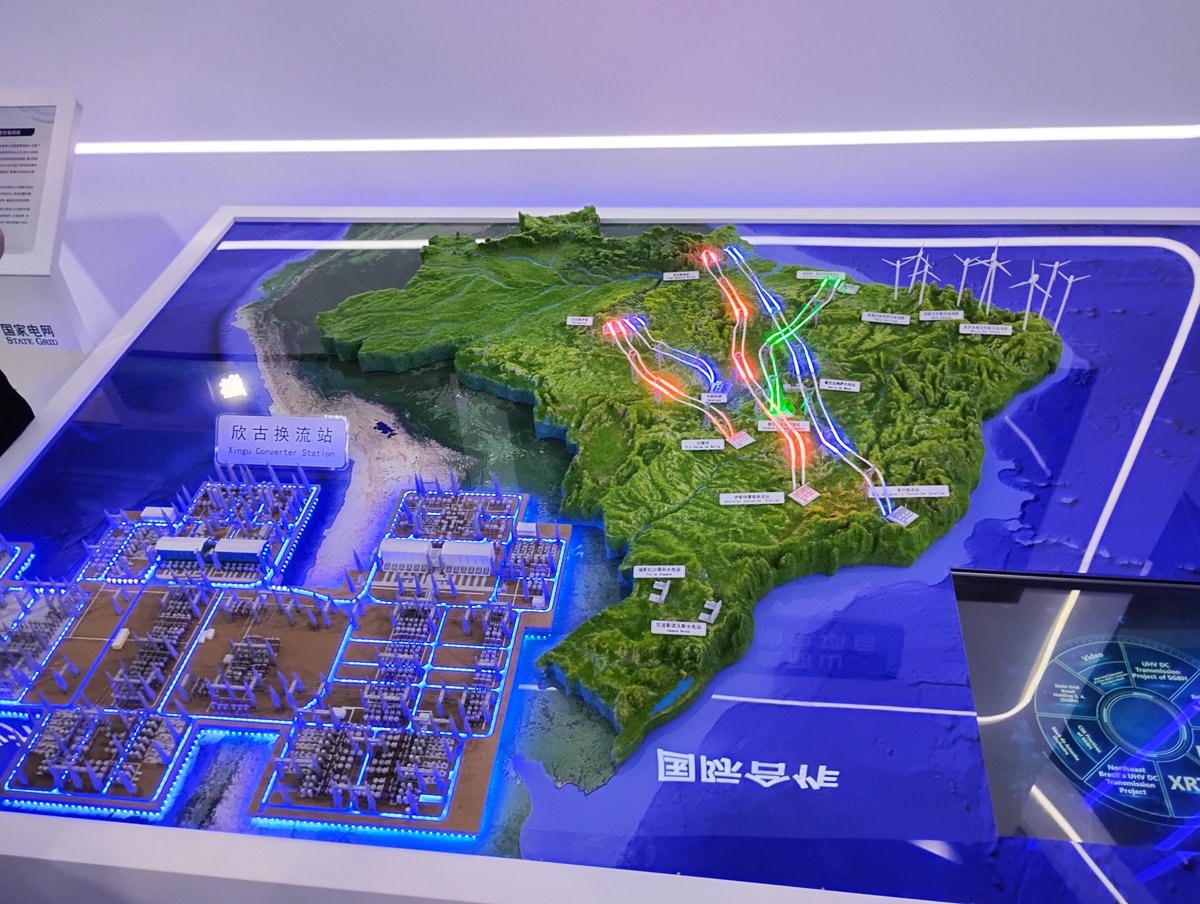

3) Maybe the exhibit that interested me most of all was this shiny diorama of a huge energy project China’s State Grid has in Brazil, building ultra-high-voltage transmission lines. They’re using AI in several ways in the project, including to identify failed pieces of equipment.

4) Walking around the exhibits, I couldn’t help but notice how many of the UIs were basically exact copies of ChatGPT. This isn’t a knock on China—most US AI companies do this as well. Cursor, the hugely popular AI coding tool, seems to be successful in large part due to UI choices (building around the IDE interface coders are used to). There’s prob a ton of alpha in figuring out what UIs come next for different kinds of AI products.

5) I found the conference itself—supposedly the main event—less intriguing than the expo hall, though there were some nuggets. There were rumors Xi himself might speak, but in the end Li Qiang (his #2) spoke for the 2nd year in a row. Entrance to that opening session was tightly controlled—certainly no foreigners allowed, and I heard that even Chinese conference attendees couldn’t go unless they were government officials. I find it amusing that China adopted the US’ “action plan” language to describe what they’re doing—they released a “global governance action plan” to go along with Li’s speech.

6) The conference’s theme was “Global Solidarity in the AI Era.” Classic Chinese messaging, but in a week where the US released a plan focused on US “dominance” and “winning the race,” it was easy to see which message would appeal more to most 3rd countries.

7) Of the 3 conf sessions I attended, one was huge (hundreds of people), one medium (dozens), one small (~30). The small one was the most interesting, though it was under the Chatham House Rule, so I can’t share too much. It did suffer from the classic AI meeting problem of not knowing how broadly scoped it wanted to be: one organizer said the 3-hour discussion should focus specifically on loss of control risks (!), i.e. a specific subset of safety concerns, then another immediately said they wanted to talk about both safety and development, i.e. everything.

Much of the conference seemed like this: some people were quite switched-on and in the weeds, while others still seemed to be approaching AI from a very broad perspective.

Still v jetlagged so I’ll leave it there for now. To close out, it’s a robot that… isn’t a robot? This booth’s audience was riveted, but these are definitely just human women pretending vaguely to be robots. I guess if it worked for Elon Musk, why not.

Top of the thread for easy retweeting:

https://x.com/hlntnr/status/1950734147099435037

Oh, and if you like this then you may also enjoy Rising Tide, my substack. Subscribe!

helentoner.substack.com