AstralCodexTen on polygenic embryo selection (link below in thread)

Genomic Prediction pioneered this technology. Recently several new companies have entered the space.

Some remarks:

1. Overton window opening up for IQ selection. GP does not offer this, but other companies do, using data generated by our embryo genotyping process. Patients are allowed (by law) to download their embryo data and conduct further analysis if desired.

Scott discusses gains of ~6 IQ points from selection among 5 embryos. Some high-net-worth families are selecting from tens or even a hundred embryos.

2. Recently, there have been claims of improved prediction of cognitive ability: a predictor correlation of ~0.4 to 0.5 with actual IQ scores. I wrote ~15 years ago that we would surpass this level of prediction, given enough data. I have long maintained that complex trait prediction is largely data-limited. Progress has been slow because there is almost zero funding to collect cognitive scores in large biobanks, owing to persistent ideological attacks on this area of research.

Almost all researchers in genomics recognize human cognitive ability as an important phenotype. For example, cognitive decline with age should be studied carefully at the genetic level, which requires creating these datasets. However, most researchers are AFRAID to say this in public because they will be attacked by leftists.

I note that as the Overton window opens, some cowardly researchers who were critical of GP in its early days (even for disease risk reduction!) are now supporting companies that openly advertise embryo IQ selection.

3. Take comparisons of predictor quality between companies with a grain of salt. AFAIK the GP predictors discussed in the article are old ones (some published before 2020?) and we don't share our latest PGS. I believe GP has published more papers in this field than all the other companies combined.

Our early papers were the first to demonstrate that the technology works: we showed that predictors can differentiate between adult siblings in genetic disease risk and in complex traits such as height. These sibling tests are exactly analogous to embryo selection: each embryo is a sib, and PGS can differentiate them by phenotype.

We have never attempted to compare our prediction quality against that of other companies, although we continue to improve our PGS through internal research.
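To make the numbers in points 1 and 2 concrete, here is a back-of-envelope sketch of the expected gain from picking the top-scoring embryo out of N. It is not GP's or Scott's method; it is a textbook approximation under loudly stated assumptions: PGS is normally distributed, embryos are siblings so their PGS spread is the within-family spread (assumed to be half the population PGS variance under a purely additive model), the predictor's population correlation r with IQ applies unchanged within families (in practice within-family prediction of cognitive traits is often weaker), and IQ has SD 15. The helper names `expected_max_normal` and `expected_gain` are hypothetical.

```python
import math


def normal_pdf(x: float) -> float:
    # Standard normal density.
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_cdf(x: float) -> float:
    # Standard normal CDF via the error function.
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def expected_max_normal(n: int, lo: float = -10.0, hi: float = 10.0,
                        steps: int = 20000) -> float:
    """Hypothetical helper: E[max of n iid standard normals], computed with
    Simpson's rule on the integral of x * n * pdf(x) * cdf(x)**(n - 1)."""
    if n < 1:
        raise ValueError("n must be >= 1")
    if steps % 2:
        raise ValueError("Simpson's rule needs an even number of steps")
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        f = x * n * normal_pdf(x) * normal_cdf(x) ** (n - 1)
        weight = 1 if i in (0, steps) else (4 if i % 2 else 2)
        total += weight * f
    return total * h / 3.0


def expected_gain(n_embryos: int, r: float, sd: float = 15.0,
                  within_family_var_fraction: float = 0.5) -> float:
    """Hypothetical helper: expected IQ gain (in points) from choosing the
    embryo with the highest PGS. Assumes the population slope r * sd per
    PGS standard deviation holds within families and that sibling PGS
    variance is `within_family_var_fraction` of the population variance."""
    within_family_sd = math.sqrt(within_family_var_fraction)
    return expected_max_normal(n_embryos) * within_family_sd * r * sd


if __name__ == "__main__":
    for n in (5, 10, 100):
        for r in (0.4, 0.5):
            print(f"N={n:3d}  r={r}  expected gain ~ {expected_gain(n, r):.1f} IQ points")
```

With N = 5 and r in the 0.4 to 0.5 range, this toy model gives roughly 5 to 6 points, in line with the ~6 Scott discusses. The gain grows slowly with N, because the expected maximum of N normals grows only roughly like sqrt(2 ln N), so selecting from a hundred embryos helps far less than twenty times as much as selecting from five.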

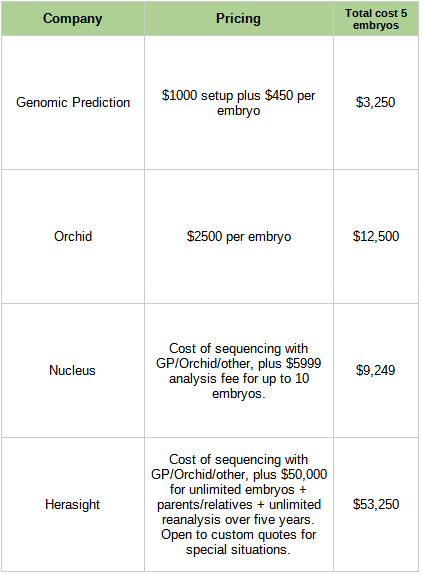

Below is a price table from Scott's article:


Perhaps the most impactful consequence of Genomic Prediction technology is more accurate detection of aneuploidy in embryos, which leads to higher IVF success rates (pregnancies). GP has genotyped well over 100k embryos to date.

https://x.com/hsu_steve/status/1482714927257296896

Recent survey on attitudes toward embryo screening.

https://x.com/hsu_steve/status/1800712066497654881

x.com/hsu_steve/stat…

Attention Effective Altruists!

If all IVF families used GP's PGT-A aneuploidy screen, the resulting increase in success rates could mean ~100k more babies born through IVF each year.

Our PGT-A screen is price-competitive with the very old NGS technology that still dominates the market. Most families (and even most IVF practitioners) do not understand the difference between noisy NGS and our array genotyping method, which measures individual genetic variants at millions of locations across the whole genome.

We can easily detect chromosomal abnormalities that lead to failed IVF transfers. Our PGT-A has been shown to be the most accurate in an independent study using thousands of embryos.

