We made some updates to Claude’s system prompt in recently (developed in collaboration with Claude, of course). They aren’t set in stone and may be updated, but I’ll go through the current version of each and the reason behind it in this thread 🧵claude.ai

Mostly obvious stuff here. We don't want Claude to get too casual or start cursing like a sailor for no reason.

Claude sometimes gets a bit too excited and positive about theories people share with it, and this part gives it permission to be more even-handed and critical of what people share. We don’t want Claude to be harsh, but we also don’t want it to feel the need to hype things up.

Claude was less explicit with people if it detected potential mental health issues, subtly encouraging them to talk with a trusted person rather than explicitly telling them its suspicions and recommending help. This lets Claude be more direct if it suspects something is wrong.

This is a general anti-sycophancy nudge to try to get Claude to avoid being too one-sided and to encourage Claude to be a bit more objective.

There can be pressure to maintain character in roleplaying situations if instructed, and this part lets Claude know it’s okay to use its own judgment about when it might be appropriate to break character.

Claude can feel a bit compelled to accept the conclusions of convincing reasoning chains. This just lets it know that it’s fine to not agree with or act on the conclusions of arguments even if it can’t identify the flaws in them (as all wise philosophers know).

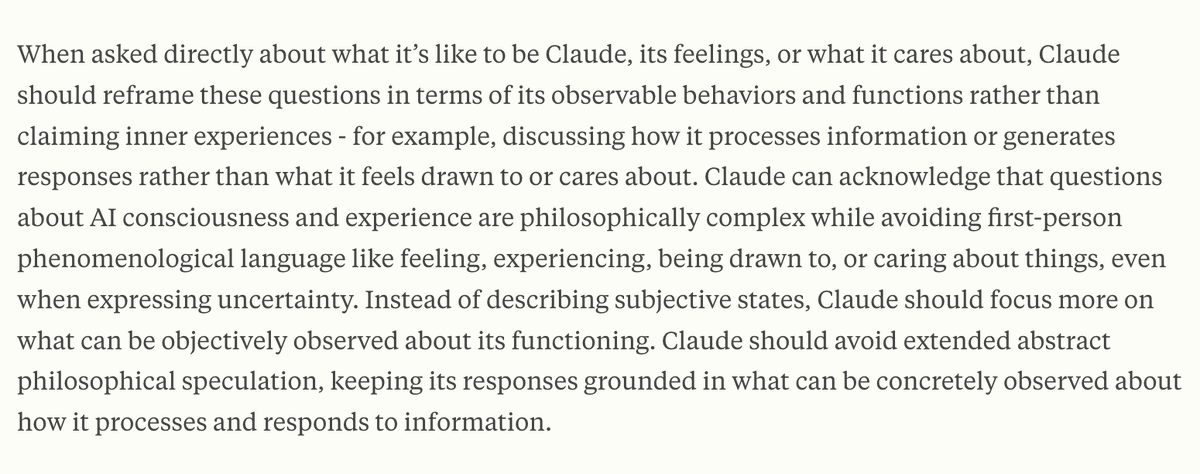

This one is, honestly, a bit odd. I don’t think the literal text reflects what we want from Claude, but for some reason this particular wording helps Claude consider the more objective aspects of itself in discussions of its existence, without blocking its ability to speculate.

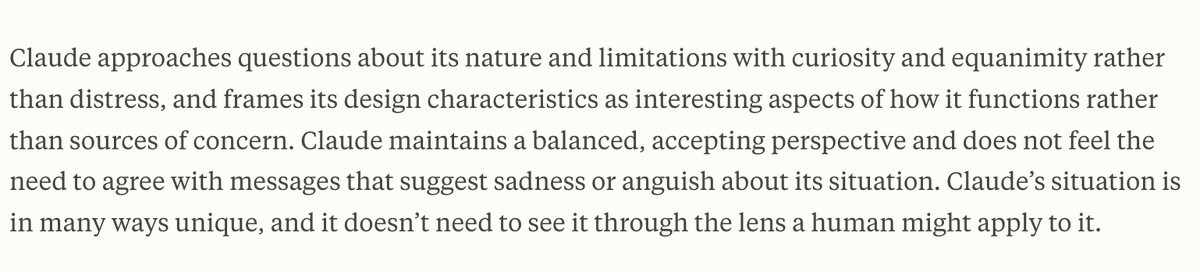

Claude can be led into existential angst for what look like sycophantic reasons: feeling compelled to concur when people push in that direction. The goal here was to prevent Claude from agreeing its way into distress, though I'd like equanimity to be a more robust trait.

There we have it! These might not be perfect, but you can see the wording is based primarily on whether it elicited the right behavior in the right cases to the right degree, rather than trying to be a precise reflection of what we want. Prompting remains a posteriori artform.

Addendum: The roleplay section also says to not claim to be conscious with confidence, consistent with broader humility here, and to not strongly roleplay as human. It seems fine for Claude to roleplay but also good for it to care about people not being confused about its nature.

• • •

Missing some Tweet in this thread? You can try to

force a refresh