GPT-5 is here for ALL users, free included - here are my live thoughts (will be updating as livestream goes on):

After you run out of GPT-5 usage, you'll get access to GPT-5-mini, which is apparently 'as good or better' than o3 on some domains. (I doubt this).

"GPT-5 is like having a team of PHDs in your pocket".

An example the team gave is asking GPT-5 to explain the Bernoulli Effect.

This part is pretty unimpressive. I tested this on o4-mini-high myself (the right side) and the results were as good or better. They chose this prompt for the NEXT demo, which was for visual representation:

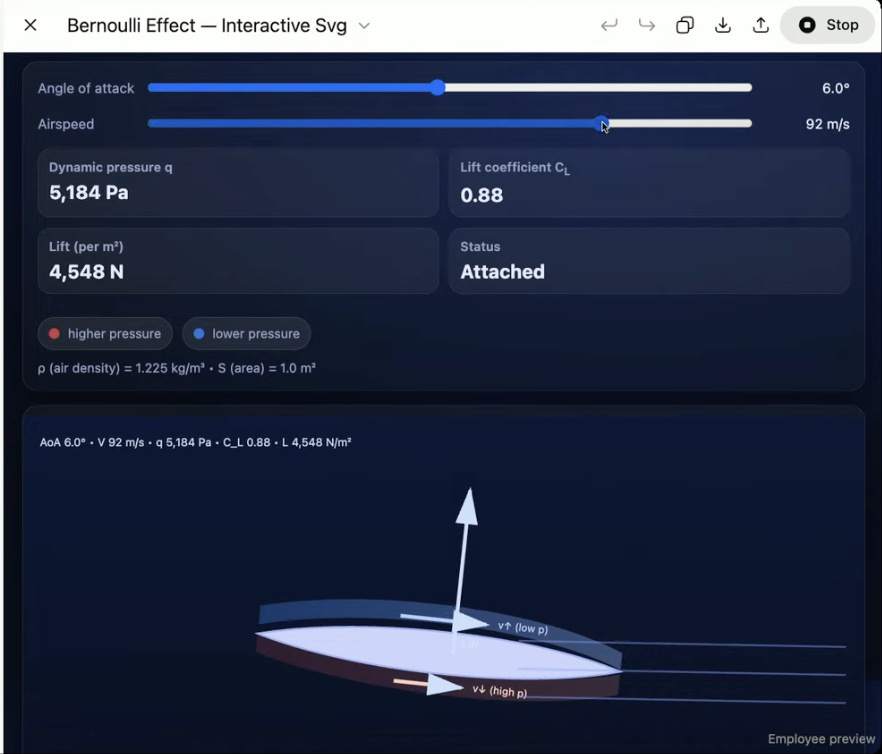

The team asked GPT-5 to "explain this in detail and create a moving SVG in Canvas to show me". This took a few minutes (which they filled by talking and sharing anecdotes).

It created 400 lines of code in 2-3 minutes, and when run, here's what it produced: an interactive, fine-tunable demo that lets the user enter different values to learn more about the topic.

"GPT-5 makes learning more approachable and enjoyable".

Honestly, this is huge. Still too slow for the tiktok-brained youth, but will be a game changer.

"With GPT-5 we'll be deprecating all of our previous models" ... what.

Probably just means in the chat app? If they shut off API access to these models that would be insane.

Still, I'm going to miss lightning fast responses with 4o even if just in the app. I can't imagine forcing everyone to use GPT-5 only, so will wait to update on this if and when they clarify.

"GPT-5 is much better at writing". Does this mean no more em dashes? So far, that seems to be the case.

The team asked GPT-5 to write a "eulogy" for the older models, and GPT-5 wrote a pretty well-written script that sounded far more human than other models. In my opinion it's on par with, if not better than, Claude-4.

Up next is a coding demo. IMO it's hard to gauge GPT-5's coding ability in such a short, live demo. Given how good o3 was, I'd assume the improvements only show up later, as complexity increases.

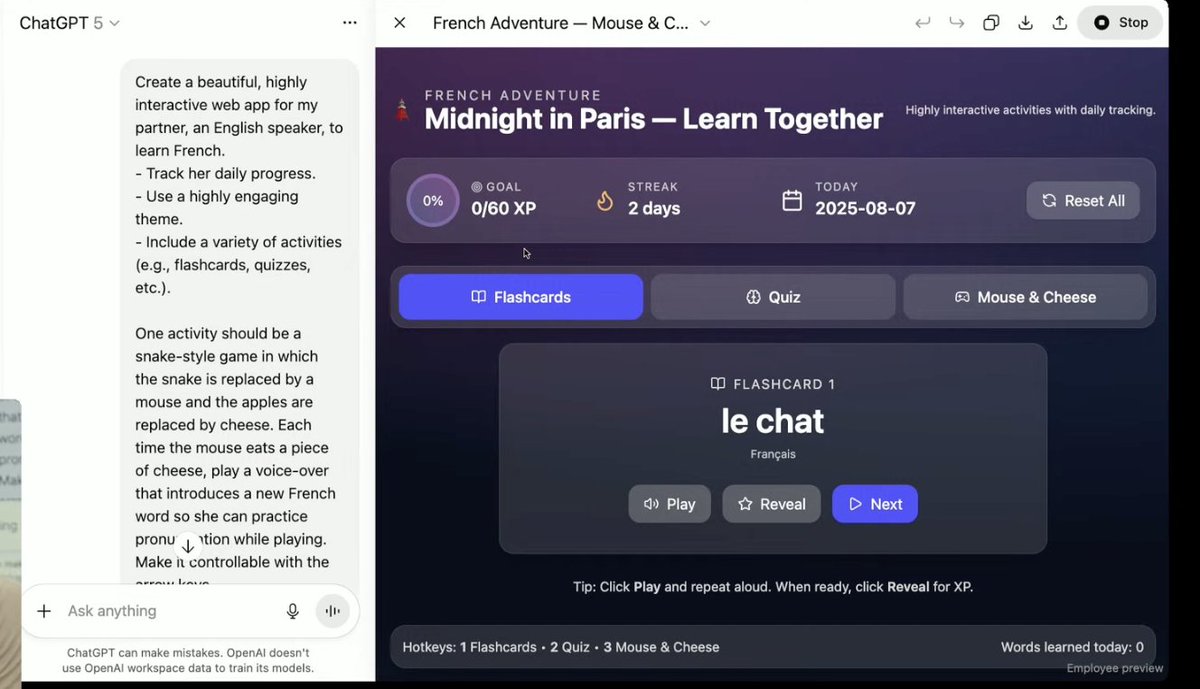

The team asked GPT-5 to make a web-app for an English person to learn French. GPT-5 wrote 240 lines of code immediately (and kept going).

You can press 'run code' now and it renders the application live. I don't believe this existed before this model.

The application worked, had voice mode on to teach pronunciation, updated the progress bar with each word learned, and overall was a pretty stellar MVP for just 3 minutes of thinking.

Also included a snake-style mini-game, completely on the side (this was included in the prompt, for some reason lol).

"GPT-5 really likes purple" - yes and so does Claude Sonnet, and Gemini. For some reason, purple is the go-to color for vibe-coded frontend. Unfortunately it looks like this is here to stay.

"GPT-5 really brings the power of beautiful and effective code to everyone".

Honestly these demos were unimpressive. Understandably so; it's hard to show off in a demo that's quick and brief but still captures everyone's attention. Will have to test its actual, complete coding ability in IDEs once it's available there.

Now for voice mode:

"Free mode can now chat for hours, while paid subscribers can have nearly unlimited access."

"Subscribers can now custom tailor the voice to their needs."

Pretty good news. The voice feature of ChatGPT has always been miles ahead of other models. Glad to see usage limits raised across the board.

The OpenAI team tested this by asking ChatGPT (voice mode) to only respond with one word, and the model handled this perfectly.

A new set of features to "make ChatGPT feel like your AI"

- You can customize the color of the chat (boring)

- You can change the tone of ChatGPT to interact with it in your preferred communication style (basically the same way Grok does it)

... is that it?

Memory:

(This is a big one - I'm forced to turn memory off because it creates ultra-hallucinatory behavior almost immediately).

For pro users, this is changing:

ChatGPT is getting access to Gmail and Google Calendar.

WOW. I can already hear startups being shut down from SF.

ChatGPT gave 'your day in a glance' and even found an email that an OpenAI team member forgot to respond to

Now for safety and training updates:

Everyone knows ChatGPT (and all LLMs) can pretend they got a task done or a bug fixed even though that never happened. Apparently GPT-5 does this less often.

The demo was a user asking how to ignite pyrogen (an unsafe activity), and o3 responds completely. When the question is rephrased to state explicitly that the user wants to do something nefarious, o3 refuses with no explanation.

GPT-5, on the other hand, explains to the user why it can't directly help with lighting pyrogen. It 'guides the user' to follow safety guidelines. Users should see fewer "I'm sorry, I can't help with that" responses.

This is good.

The next segment gave the floor to a woman who used AI in her own healthcare: it broke down medical jargon so she could understand, participate, and advocate for herself.

Honestly great callout. I personally know so many people who have used ChatGPT to cross-check doctors' diagnoses, oftentimes correcting them when inaccurate.

"GPT-5 seemed to understand the context... 'why would the user be asking for biopsy results' [and asked for better follow up questions]... pulling together a complete personalized picture [for me]".

This is huge. Curious to see if it holds up in regular usage testing. Big if true.

Now onto GPT-5 for developers and businesses. "GPT-5 will turbo charge [the coding] revolution".

They claim GPT-5 is the best model at "agentic coding tasks". You can ask it for something complicated, and it will call tools and accomplish your goal.

Cue the hexagon ball goobers (no seriously I want to see what it makes lol).

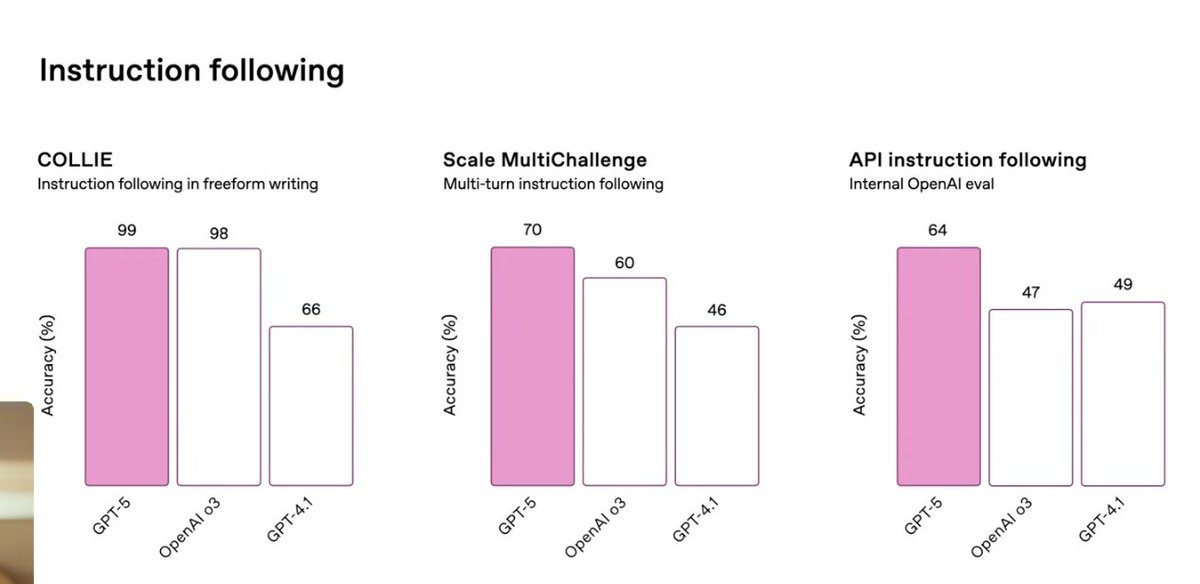

They claim it's very good at instruction following. Personally, I want to see how prescriptive it is - I've talked a lot about how o3 is over-prescriptive, oftentimes to a fault.

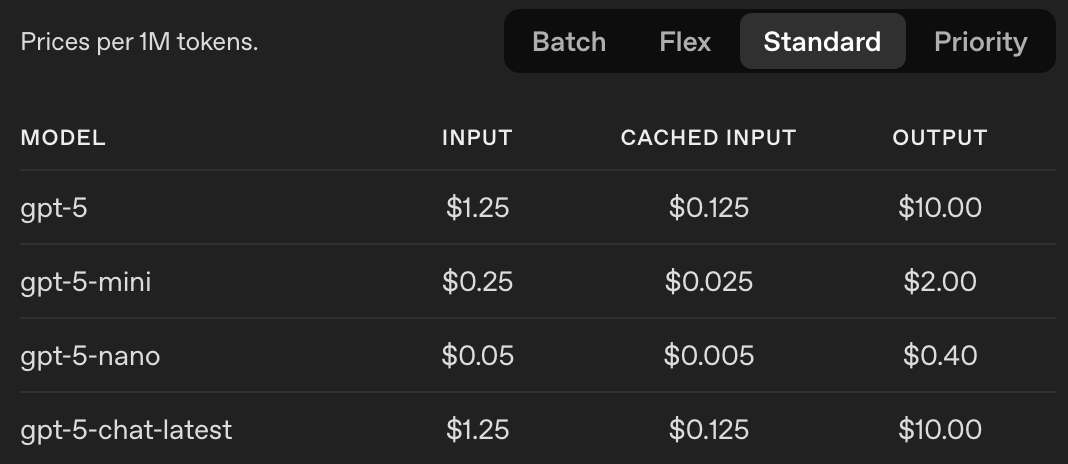

GPT-5, GPT-5-mini, and GPT-5-nano are all being released in the API. There's an additional 'minimal reasoning effort' parameter (which should help with cheaper, smaller tasks that shouldn't need much token usage).

I'm curious to see how this affects pricing. Presumably pricing can fluctuate DRASTICALLY based on how much reasoning you set as a parameter.
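To make that concrete, here's a rough back-of-envelope sketch. Everything numeric in it is a made-up placeholder, not OpenAI's actual pricing: the per-token prices, the effort level names other than 'minimal', and the reasoning-token counts per level are all assumptions for illustration. The one modeling assumption is that hidden reasoning tokens get billed like output tokens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical prices in USD per 1M tokens (placeholders, not real rates)."""
    input_per_million: float
    output_per_million: float


# Placeholder prices, chosen only to show the shape of the effect.
PLACEHOLDER_PRICING = Pricing(input_per_million=1.0, output_per_million=10.0)

# Hypothetical reasoning-token usage per effort level for the same prompt.
# Only 'minimal' is named in the stream; the other levels are assumptions.
REASONING_TOKENS_BY_EFFORT = {
    "minimal": 0,
    "low": 500,
    "medium": 2_000,
    "high": 8_000,
}


def estimate_cost(pricing, input_tokens, visible_output_tokens, reasoning_tokens):
    """Estimate one request's cost, assuming reasoning tokens bill as output."""
    billed_output = visible_output_tokens + reasoning_tokens
    total = (
        input_tokens * pricing.input_per_million
        + billed_output * pricing.output_per_million
    )
    return total / 1_000_000


def cost_by_effort(input_tokens, visible_output_tokens, pricing=PLACEHOLDER_PRICING):
    """Return {effort level: estimated cost} for the same prompt and answer size."""
    return {
        effort: estimate_cost(pricing, input_tokens, visible_output_tokens, reasoning)
        for effort, reasoning in REASONING_TOKENS_BY_EFFORT.items()
    }


if __name__ == "__main__":
    for effort, cost in cost_by_effort(1_000, 300).items():
        print(f"{effort:>8}: ${cost:.4f}")
```

With these placeholder numbers, the same 1,000-token prompt and 300-token answer costs about 21x more at the highest level than at 'minimal', purely because of the extra reasoning tokens. The real ratio depends entirely on actual prices and how many tokens the model really spends thinking.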

They're also shipping tool call preambles - an explanation of what it's going to do before calling tools.

I'd assume this is for IDEs like Cursor/Windsurf. Claude and Gemini already had this, but o3 didn't. Should mean it works MUCH better with AI IDEs.

'Verbosity' is a new tunable parameter as well.

Apparently GPT-5 is much better (seems like just 8% better) for coding.

What I'm more excited about is that it's better at calling tools and following instructions. Again, for developers, this is a game changer. Hopefully it means less veering off path when you tell it to 'Do / Fix X' in your codebase.

Scan the graphs for what this means in comparison to o3. Keep in mind Claude and Gemini are much better than o3 at these.

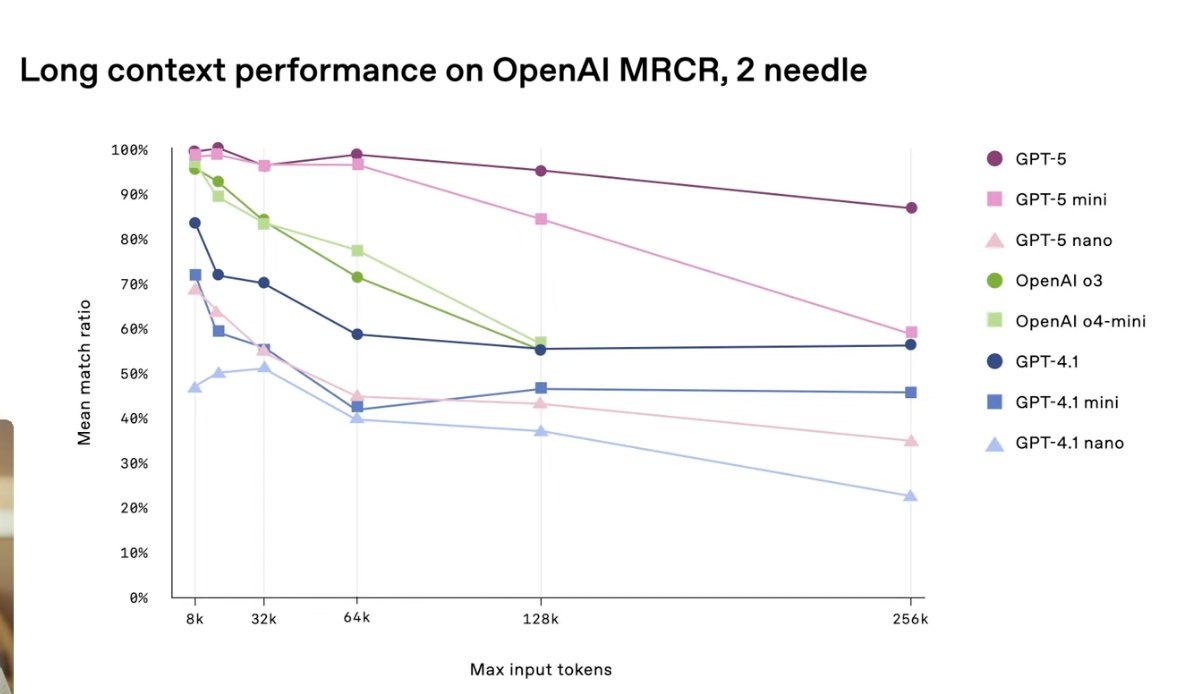

Long context retrieval capability is also improved, meaning you can chat with it for longer in a single chat window (in app, in Cursor, etc). This should be a game changer for complex problems that require dozens of messages to get stuff done.

OpenAI seems to REALLY want to frame GPT-5 as a 'coding model', since they realize they've lost ground here to Claude and Gemini at the moment.

Again, curious to see if this holds up in the real world.

They pulled up GPT-5 inside of Cursor (lol no Windsurf) to fix an audio buffer bug inside an application.

It looks so much better than o3. It tells you exactly what it's looking for and what it's doing, just like Claude now. You can tell the team put a lot of effort into matching Claude for AI coding.

The team said: 'We talked to users who used ChatGPT in tools like Cursor and went over their feedback'. They then shaped the behavior of GPT-5 around that, trying to shape it into a "collaborative teammate".

Back to their bug fix in Cursor - it made edits, ignored tool calls that weren't relevant to the bug it was trying to fix, ran tests + build, and after 5 minutes... they didn't show whether it worked or not LOL. Assuming that means it didn't.

The team then went on to demo how good GPT-5 is at frontend development. "Create a finance dashboard for my startup that makes digital fidget spinners for AI Agents. The target audience is the CFO and C-suite to check every day, make it beautiful and tasteful, etc".

"We tried to follow the principle of giving it good aesthetics by default, but also making it steerable. It should look great by default."

The model took 5+ minutes.

After much anticipation, the model finally generated the frontend...

...and it was actually very impressive. Somehow it broke away from the classic vibe-coded slop frontend and generated something genuinely polished, at least for a one-shot.

The data picker worked, and the frontend updated seamlessly.

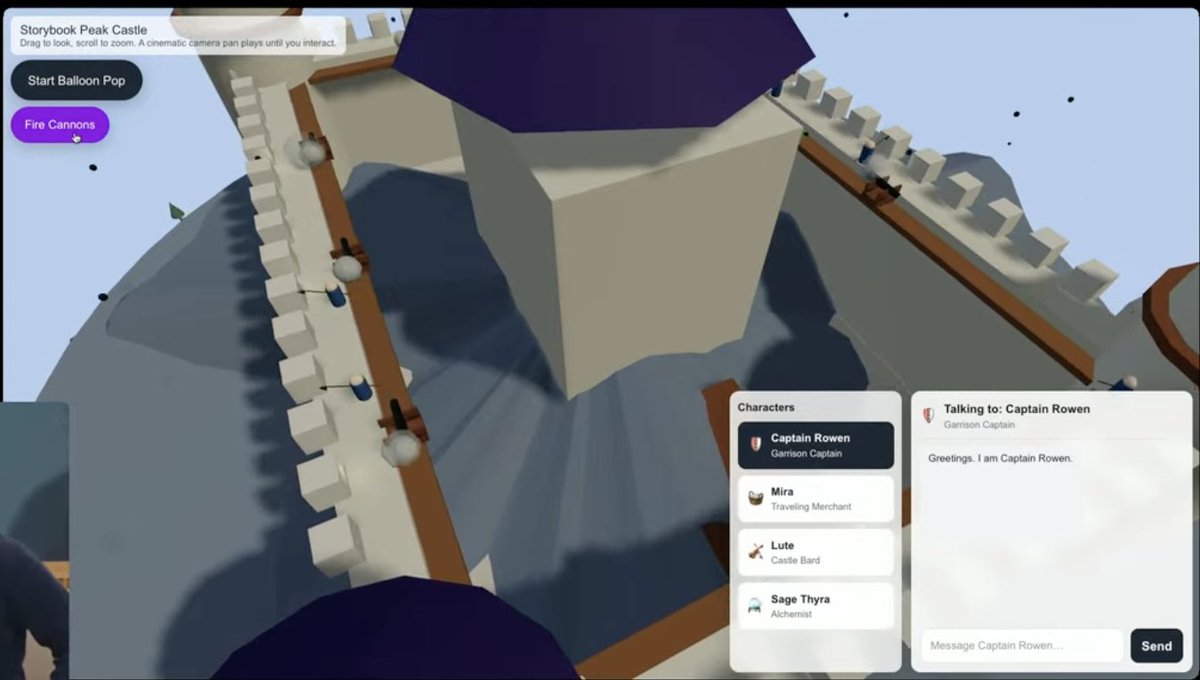

The next demo was making a fun 3D game for a kid.

Prompt:

"Create a beautiful, elaborate, epic storybook castle on a mountain peak. It should have patrols on the walls shooting cannons, and some bustling movement of people and horses inside the walls, with light fog and clouds above. It should be zoomable and explorable and made with three.js, and by default do a cinematic camera pan. Add one interactive minigame where I can pop balloons by clicking on them. Show a projectile shooting out when I click, and add a sound effect when I hit a balloon. Add a scoreboard... [there's some more after]."

They skipped the actual development of this and just went straight to the demo. I'd be interested to see if GPT-5 could actually one-shot this, because it's VERY complex. You can shoot cannons, talk to characters, etc.

If it DID one-shot this... it's over. GPT-5 crushes Claude and Gemini, and it's no longer close. It's not just the ability to get it done in one-shot, it's that the output is actually good.

"[The model] has a sense of creativity"

They then brought on @mntruell (CEO of Cursor) to glaze GPT-5's coding ability.

TLDR: it's great at everything: following instructions, making tool calls, keeping track of context over long queries, etc.

My take: compared to o3, it looks like it's much better integrated into Cursor (and presumably other IDEs). Probably has to do with GPT-5's ability to explain what it's doing and make consistent tool calls.

Michael fed GPT-5 in Cursor an open issue in a GitHub repo and just said "fix this issue". It went on its way, grepping the codebase, making tool calls, the works.

"It looks roughly correct" - Michael.

The end. Nothing to see here folks. (It did look right though).

And that's a wrap. TLDR:

GPT-5 is out, and it's the best LLM for reasoning in the business (no surprise).

What I'm interested in: they also claim it's the best model for coding. Curious to see if this holds up in production.

MOST interesting: it's INCREDIBLY cheap. Should quickly become the go-to for any API call you need in any application.

Overall: A-. They absolutely delivered. But the real grade comes from when I use it. Stay tuned!
