🚨 New @a16z thesis: AI x commerce

AI will change the way we shop - from where we find products to how we evaluate them, when we buy, and much more.

What types of purchases will be disrupted, and where does opportunity exist in the age of AI?

More from me + @arampell 👇

To start - what are the categories of consumer commerce?

We divide them by level of consideration, from impulse buys to life purchases.

These have vastly different processes - you don't buy a new backpack and a car in the same way - which means the way AI touches each purchase category will vary.

Some thoughts on how this might shake out:

1) Impulse buys - the candy bars you pick up at the checkout counter (or their digital equivalent).

You don't do a lot of research in advance, so it's tough for AI to play a role in your shopping process.

But the algorithms on social apps will continue to improve + target you with more relevant impulse purchases (like that cat-shaped water bottle or $15 t-shirt from your favorite show).

2) Routine essentials - things you buy regularly (groceries, household supplies, pet food).

You have products you know and love. But AI can help find where you can get the best price - and maybe even purchase on your behalf if it spots an incredible deal.

Products like @camelcamelcamel, which alerts you to price drops on Amazon items, are early examples of this.

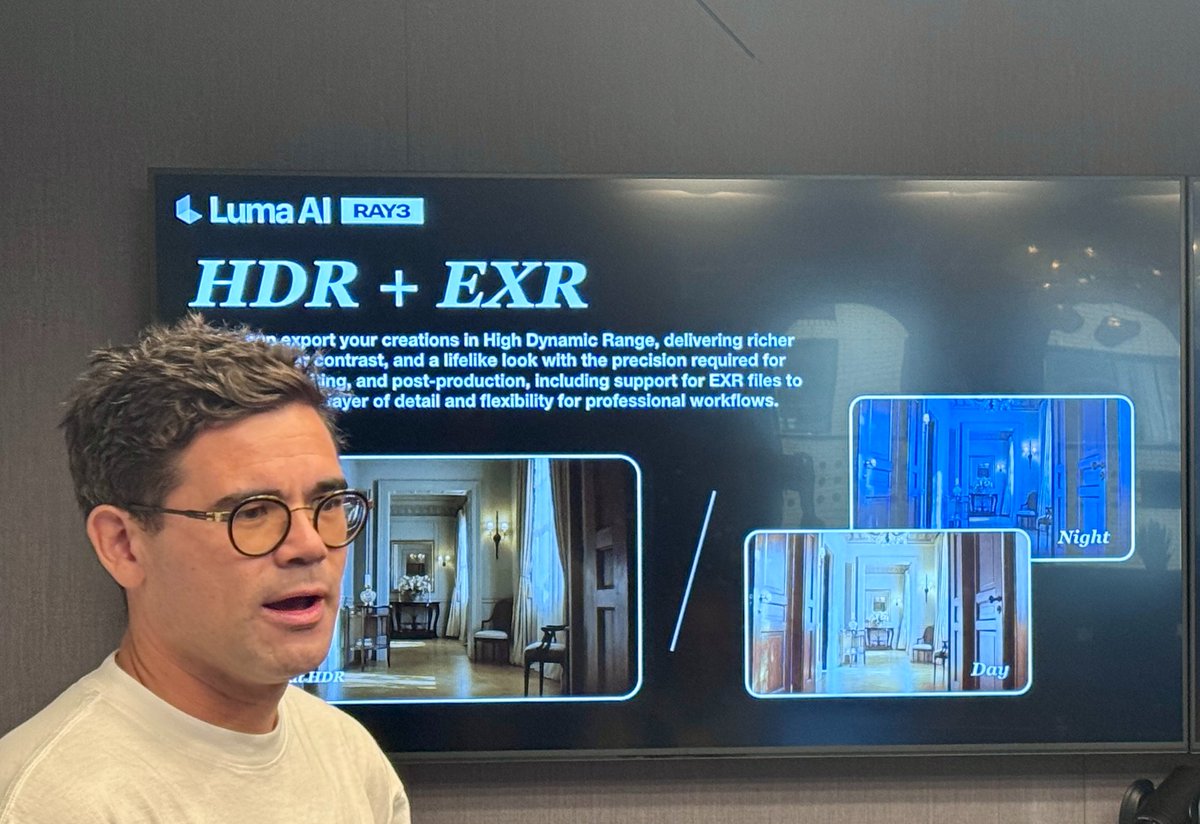

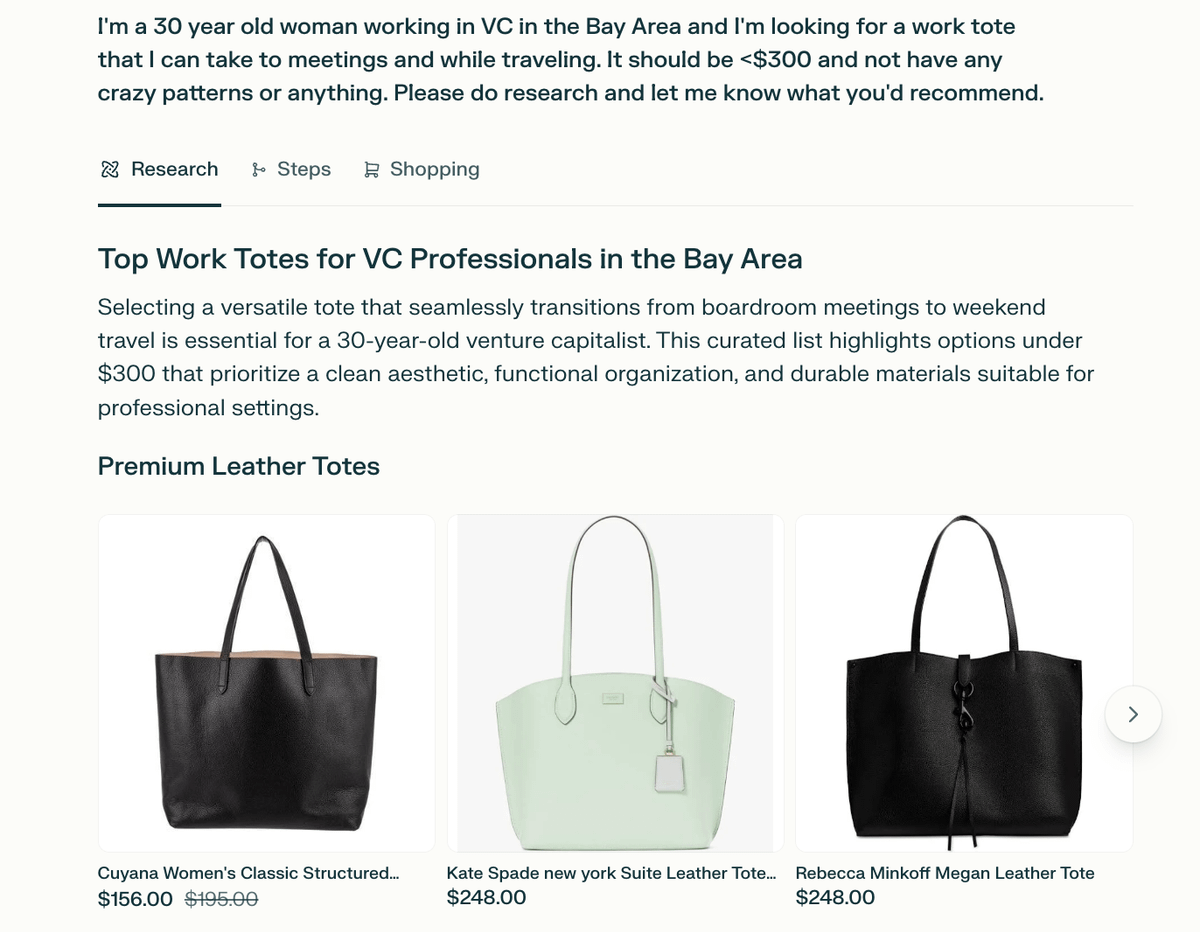

3) Lifestyle purchases - things you don't buy every week, like a wedding guest dress or a nice briefcase.

You're going to want to research and consider a few options. What if an AI agent did the grunt work for you and came back with a summary of what it recommends and why?

Products like @perplexity_ai Shopping are making progress here.

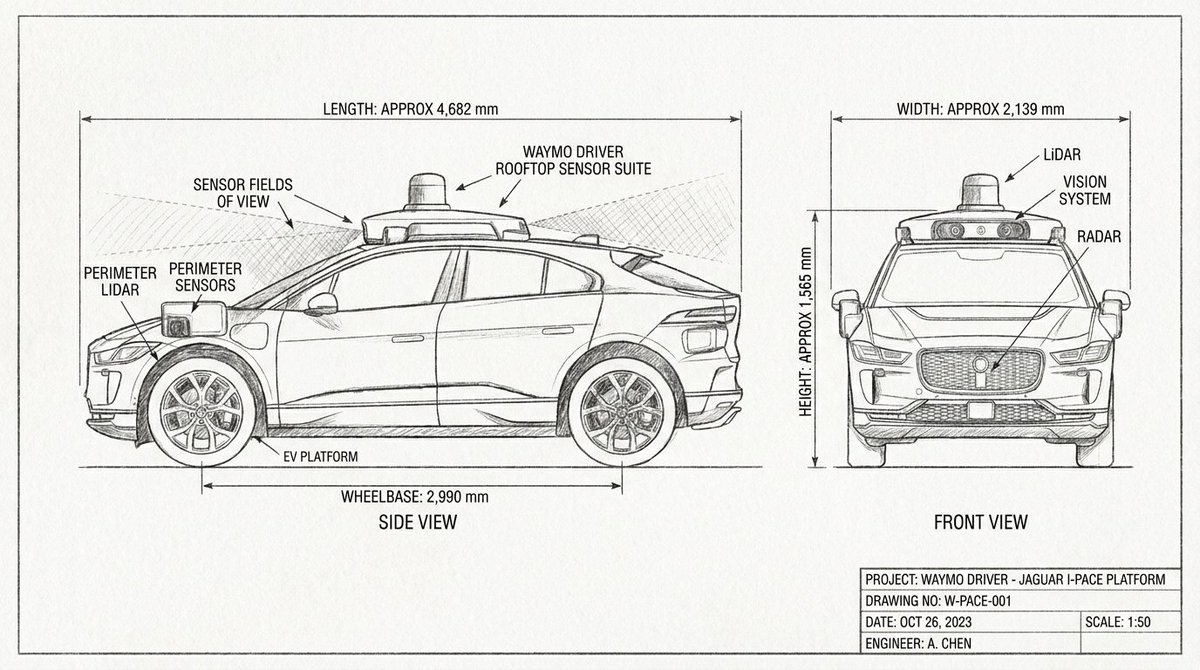

4) Functional purchases - things you use regularly that serve a practical purpose in your life.

Think of a couch in your living room, your laptop, or a bike you use for commuting. These things need to hold up!

In addition to having an AI agent do research, you'll also probably want to talk to an expert about your unique needs or debate the pros and cons of various options.

This "expert" could also be AI... imagine an LLM trained on conversations about a specific product category, with integrations that give it access to the most up-to-date data.

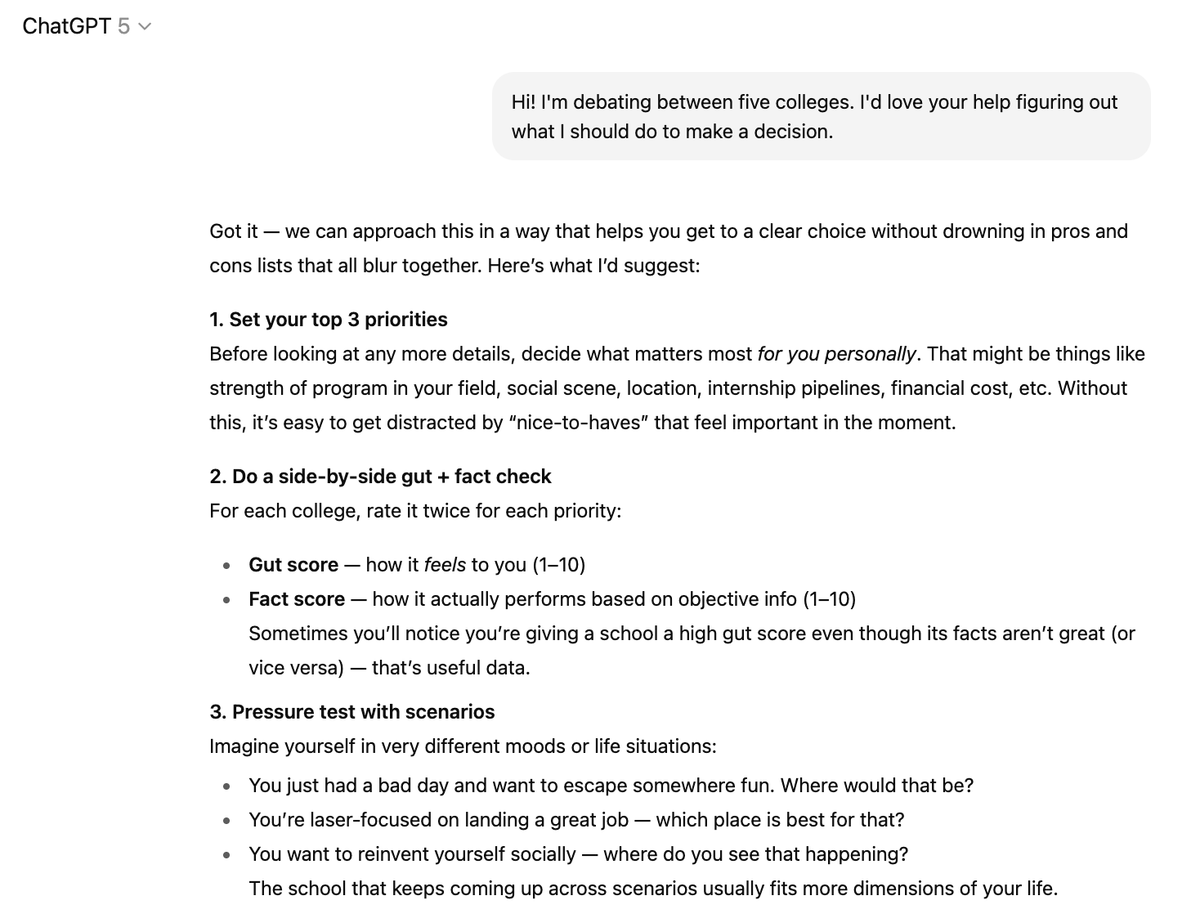

5) Life purchases - think buying a house or a car. Or picking a college.

These are highly considered purchases that happen only a few times in your life. And it's unlikely you'll fully outsource these decisions to AI.

However, it's very possible you'll use an AI coach to guide you through the process - from structuring how you make the decision to debating various options.

Thanks for reading!

If you're building in this space, we'd love to hear from you - you can DM us here (@venturetwins, @arampell) or email me (jmoore@a16z.com).

And check out our full blog post: a16z.com/ai-x-commerce/