Parsing PDFs has slowly driven me insane over the last year. Here are 8 weird edge cases to show you why PDF parsing isn't an easy problem. 🧵

PDFs have a font map that tells you what actual character is connected to each rendered character, so you can copy/paste. Unfortunately, these maps can lie, so the character you copy is not what you see. If you're unlucky, it's total gibberish.

PDFs can have invisible text that only shows up when you try to extract it. "Measurement in your home" is only here once...or is it?

Math is a whole can of worms. Remember the font map problem? Well, math is almost always random characters - here we get some strange Tamil/Amharic combo.

Math bounding boxes are always fun - see how each formula is broken up into lots of tiny sections? Putting them together is a great time!

Once upon a time, someone decided that their favorite letters should be connected together into one character - like ffi or fl. Unfortunately, PDFs are inconsistent with this, and sometimes will totally skip ligatures - very ecient of them.
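The easy half of the ligature problem can be sketched in a few lines of Python: when the extracted text contains the single-character Unicode ligatures, expand them back into plain letters. This is a minimal sketch, and the `LIGATURES` table and `expand_ligatures` name are just illustrative. It can't recover ligatures that were dropped entirely; that would probably need something like a dictionary check.

```python
# Map the common Latin ligature code points to their plain-letter spellings.
LIGATURES = {
    "\ufb00": "ff",
    "\ufb01": "fi",
    "\ufb02": "fl",
    "\ufb03": "ffi",
    "\ufb04": "ffl",
}


def expand_ligatures(text: str) -> str:
    """Replace single-character ligatures with the letters they stand for."""
    return "".join(LIGATURES.get(ch, ch) for ch in text)


if __name__ == "__main__":
    # "e" + U+FB03 (ffi ligature) + "cient"
    print(expand_ligatures("e\ufb03cient"))
```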

Not all text in a PDF is correct. Some PDFs are digital, and the text was added on creation. But others have had invisible OCR text added, sometimes based on pretty bad text detection. That's when you get this mess:

Overlapping text elements can get crazy - see how the watermark overlaps all the other text? Forget about finding good reading order here.

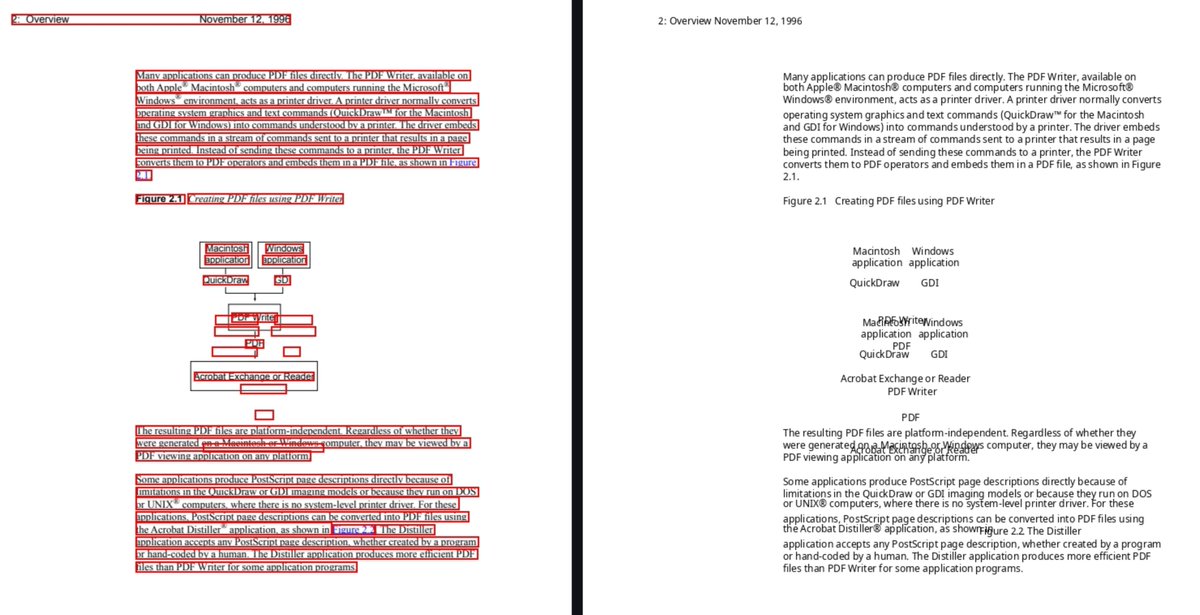

I've been showing you somewhat nice line bounding boxes. But PDFs just have character positions inside - you have to postprocess to join them into lines. In tables, this can get tricky, since it's hard to know when a new cell starts:
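To give a rough idea of that postprocessing, here's a minimal Python sketch that groups characters into lines by vertical position and inserts a space when the horizontal gap is large. The `Char` type, the `group_into_lines` name, and both thresholds are assumptions for illustration, not how any particular library does it.

```python
from dataclasses import dataclass


@dataclass
class Char:
    text: str
    x0: float  # left
    y0: float  # top
    x1: float  # right
    y1: float  # bottom


def group_into_lines(chars, y_tol=2.0, gap_ratio=0.3):
    """Join characters into text lines.

    y_tol: max vertical distance between midpoints to count as the same line.
    gap_ratio: a horizontal gap larger than this fraction of the character
               height is treated as a space.
    """
    lines = []
    # Sort top to bottom, then left to right.
    for ch in sorted(chars, key=lambda c: ((c.y0 + c.y1) / 2, c.x0)):
        mid = (ch.y0 + ch.y1) / 2
        if lines and abs(mid - lines[-1]["mid"]) <= y_tol:
            lines[-1]["chars"].append(ch)
        else:
            lines.append({"mid": mid, "chars": [ch]})

    out = []
    for line in lines:
        row = sorted(line["chars"], key=lambda c: c.x0)
        parts = [row[0].text]
        for prev, cur in zip(row, row[1:]):
            height = prev.y1 - prev.y0
            if cur.x0 - prev.x1 > gap_ratio * height:
                parts.append(" ")
            parts.append(cur.text)
        out.append("".join(parts))
    return out
```

In a table, the same gap check is where things go wrong: a wide gap might be a space, or it might be a cell boundary, and a single threshold can't always tell them apart.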

You might be wondering why you should even bother with the text inside PDFs. The answer is that a lot of PDFs have good text, and it's faster and more accurate to just pull it out.

This is what we do with marker - we only OCR if the text is bad. github.com/datalab-to/mar…
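One way to decide whether embedded text is "bad" is to count suspicious characters. To be clear, this is a toy heuristic in Python and not marker's actual logic; the `looks_bad` name and the 10% threshold are made up for illustration.

```python
import unicodedata

# Unicode categories that rarely appear in real text:
# Cc = control, Co = private use, Cn = unassigned.
SUSPICIOUS_CATEGORIES = {"Cc", "Co", "Cn"}


def looks_bad(text: str, max_bad_ratio: float = 0.1) -> bool:
    """Return True if the text is empty or has too many suspicious characters."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return True  # nothing extractable, so OCR is the only option
    bad = sum(
        1
        for c in chars
        if c == "\ufffd" or unicodedata.category(c) in SUSPICIOUS_CATEGORIES
    )
    return bad / len(chars) > max_bad_ratio
```

A check like this only catches obvious garbage. A font map that lies convincingly, mapping glyphs to the wrong but valid letters, would pass straight through.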

Anyways, back to fixing more crazy edge cases. Let me know if you've come across any other PDF weirdness.
