The “controversy” over Sydney Sweeney is absurd and largely fake, but there’s one thing worth paying attention to — the tried and tested formula used by the right-wing outrage machine to manufacture liberal fury and then bait the left into making it a reality.

Here’s how it works:

First, invent the outrage. This usually involves picking a neutral or mildly provocative event and finding something about it to frame as being offensive to the left. In this case, the slogan (“Sydney Sweeney has great jeans”).

Second, flood the zone. Carry out a social media blitz and manufacture the appearance of outrage by gaming the algorithm with repetitive content, which will then get pushed into trending feeds and recommended videos — creating the perception that people actually care about it.

Third, bait the reaction. Tie the “outrage” to a hot-button topic (in this case, fascism and white supremacy) that will provoke a response. Then, when a few people inevitably respond, screenshot their posts and circulate them as “evidence” that liberals really are outraged.

Finally, close the loop. Manufacture the reality that you are claiming already exists. In this case, it centers around a narrative that liberals are humorless, hypertensive, and obsessed with identity politics. With enough bait, you can make the narrative look like reality.

This works in part because it’s activating two systems at once:

1) the algorithmic brain of the internet

2) the emotional brain of the audience.

If you learn how to hack these two systems, you can pretty much manufacture any bullsh*t into reality.

The key here is defensiveness. That’s the trap that you want to bait the other side into. If you can get your opponent to always stay on defense, then you are setting the agenda and they are simply responding to it.

This probably sounds quite familiar, and for good reason. We have seen this cycle play out over and over again: when Mr. Potato Head was supposedly “canceled”; when Dr. Seuss books allegedly got “banned” by the “woke mob”; when Starbucks apparently “declared war on Christmas.”

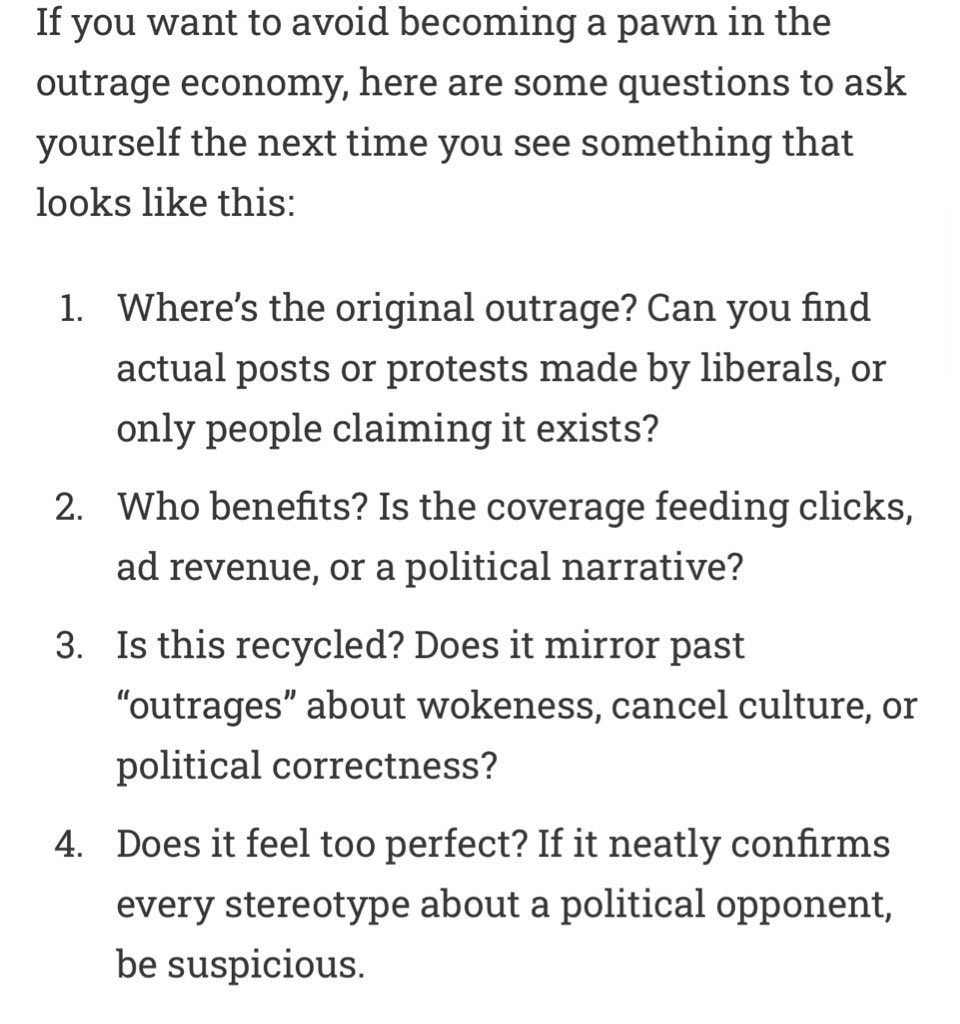

The next time you see something that looks like this, pause and ask yourself a few questions:

-where is the original outrage? Can you find it?

-who benefits from this narrative?

-is this recycled?

-does it seem too perfect or too good to be true? If yes, it probably is.

Ultimately, these manufactured outrage cycles are really a battle over control — control over what you see, how you feel, and which stories dominate your mental bandwidth.

Every time the cycle repeats, you lose a little more control over these things.

The only winning move here is not to play the game at all.

You don’t have to be a participant in the right-wing cycle. In fact, you can stop the cycle altogether. But you have to stop responding to the bullsh*t that is manufactured specifically to provoke a response from you.

The full article laying out how the right-wing outrage machine manufactures liberal fury is available here, free to read. This is a lesson that needs to be learned, so please share it.

weaponizedspaces.substack.com/p/how-the-righ…

If you find my work helpful, please like, share, and subscribe to my Substack. And if you have suggestions for future article topics, I’m always open to hearing them.

Thank you, as always, for your support! I appreciate every one of you.

weaponizedspaces.substack.com

lmao this should say “hypersensitive” not “hypertensive”.

As far as I’m aware, there is no effort to manufacture the appearance of a blood pressure crisis on the left.

https://twitter.com/rvawonk/status/1955638279036125545

I don’t know why this is so funny to me but I’m cry-laughing at the idea of Fox News running a 5-day media blitz trying to convince us of a secret blood pressure crisis on the left.
