OpenBench v0.3.0 is live! 🚀

Massive provider expansion: 18 new model providers (now 30+ total!),

Also added: alpha support for the SciCode & GraphWalks benchmarks, and CLI improvements.

The most provider-agnostic eval framework just got even better.

Massive provider expansion: 18 new model providers (now 30+ total!),

Also added: alpha support for the SciCode & GraphWalks benchmarks, and CLI improvements.

The most provider-agnostic eval framework just got even better.

2/ 📡 Our theme for 0.3.0 is making it super easy to run benchmarks across all models, no matter who's running it.

3/ OpenBench already had first-class support for @OpenAI, @AnthropicAI, @GroqInc @GoogleDeepMind, and others -- and now we’ve expanded it to include:

– @AI21Labs, @basetenco, @CerebrasSystems, @cohere,

– @CrusoeAI, @DeepInfra, @friendliai, @huggingface,

– @hyperbolic_labs, @LambdaAPI, @Hailuo_AI, @Kimi_Moonshot,

– @nebiusai, @NousResearch, @novita_labs, @parasailnetwork, @RekaAILabs, @SambaNovaAI.

That’s 30+ providers total - all accessible in one place.

– @AI21Labs, @basetenco, @CerebrasSystems, @cohere,

– @CrusoeAI, @DeepInfra, @friendliai, @huggingface,

– @hyperbolic_labs, @LambdaAPI, @Hailuo_AI, @Kimi_Moonshot,

– @nebiusai, @NousResearch, @novita_labs, @parasailnetwork, @RekaAILabs, @SambaNovaAI.

That’s 30+ providers total - all accessible in one place.

4/ To further our commitment of building in public, we've decided to add alpha benchmarks to OpenBench. We may not be super confident in our implementations of benchmarks, but we'd love for developers to be able to give us feedback on our implementations of evals before they're solid.

5/ To start, we've added SciCode & GraphWalks as alpha evals. But what are they?

6/ 🧬 SciCode - A new frontier for code + science

We've added SciCode, which tests models on real scientific computing problems from physics, chemistry, and biology.

Unlike HumanEval, these require domain knowledge AND coding ability - a true test of reasoning capability.

We've added SciCode, which tests models on real scientific computing problems from physics, chemistry, and biology.

Unlike HumanEval, these require domain knowledge AND coding ability - a true test of reasoning capability.

7/ 🕸️ GraphWalks - Testing graph reasoning (h/t @natashaamayorga)

Can your model navigate complex graph structures? GraphWalks tests:

- Path finding

- Connectivity analysis

- Graph traversal logic

Split into 2 variants for comprehensive evaluation - BFS and Parents.

Can your model navigate complex graph structures? GraphWalks tests:

- Path finding

- Connectivity analysis

- Graph traversal logic

Split into 2 variants for comprehensive evaluation - BFS and Parents.

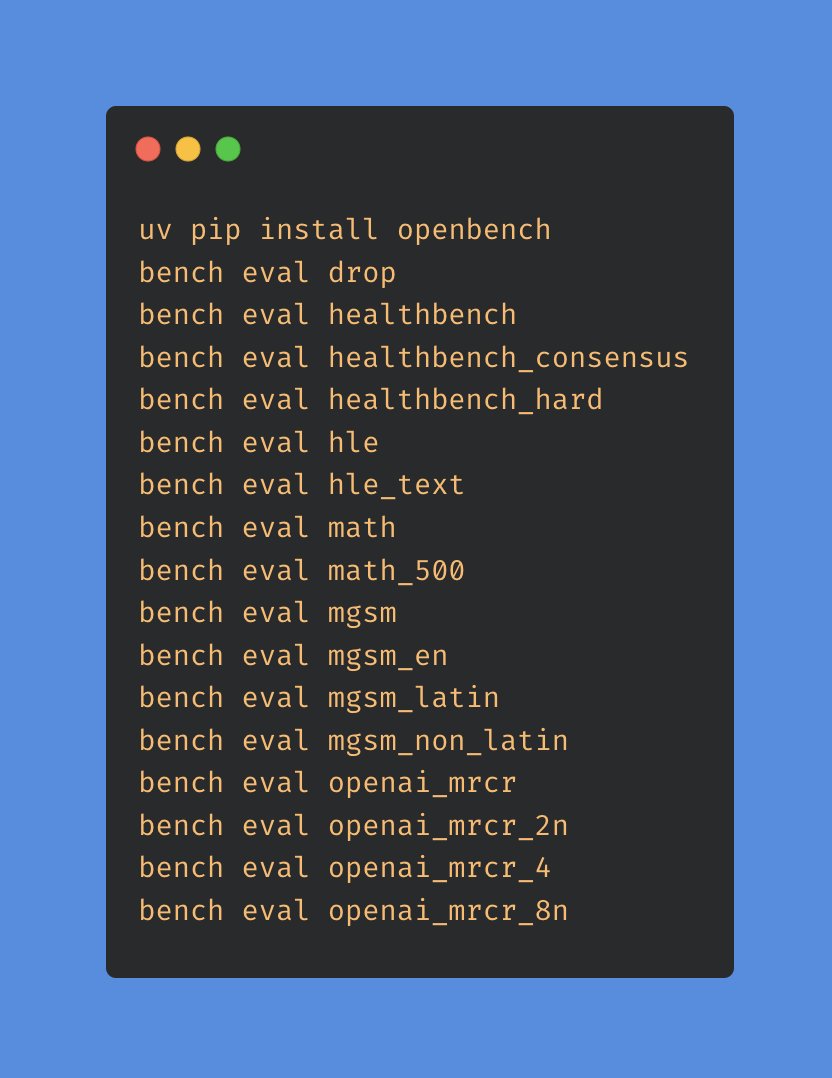

8/ ⚡ More Housekeeping, mainly CLI improvements make evals even faster:

• openbench alias for bench so you can run evals with uvx openbench instead of having to install openbench in a venv

• -M and -T flags for quick model/task args, taken from Inspect AI's CLI

• --debug mode for troubleshooting retries and evals

• --alpha flag unlocks experimental benchmarks

Small changes, big workflow improvements.

• openbench alias for bench so you can run evals with uvx openbench instead of having to install openbench in a venv

• -M and -T flags for quick model/task args, taken from Inspect AI's CLI

• --debug mode for troubleshooting retries and evals

• --alpha flag unlocks experimental benchmarks

Small changes, big workflow improvements.

9/ 📤 Direct HuggingFace integration (h/t @ben_burtenshaw)

Thanks to the great folks @huggingface Your eval results can now be pushed directly to HuggingFace datasets - making it easier to share benchmark results with the community and track model performance over time. All you need to do is add --hf-repo to your eval run and it'll push the logfiles up!

Thanks to the great folks @huggingface Your eval results can now be pushed directly to HuggingFace datasets - making it easier to share benchmark results with the community and track model performance over time. All you need to do is add --hf-repo to your eval run and it'll push the logfiles up!

10/ OpenBench continues to be the most flexible, provider-agnostic eval framework.

One codebase, 30+ providers, 35+ benchmarks.

Star if you find it useful, and follow along for 0.4.0 next week! ⭐

github.com/groq/openbench

One codebase, 30+ providers, 35+ benchmarks.

Star if you find it useful, and follow along for 0.4.0 next week! ⭐

github.com/groq/openbench

• • •

Missing some Tweet in this thread? You can try to

force a refresh