This might be the most important AI paper of the year.

DeepMind showed LLMs can actually reason with explicit rules.

No prompt hacks. No fine-tuning tricks.

Just real, general reasoning.

Let’s break it down:

For years, the line was:

“LLMs can’t really follow rules. They just mimic patterns.”

Turns out… that’s wrong.

This study shows LLMs can internalize rules and apply them in totally new situations, just like humans.

Think of it like teaching someone a card game.

You explain the rules, play a few rounds…

Then hand them a completely different deck.

If they still win, they’re not memorizing. They’re understanding.

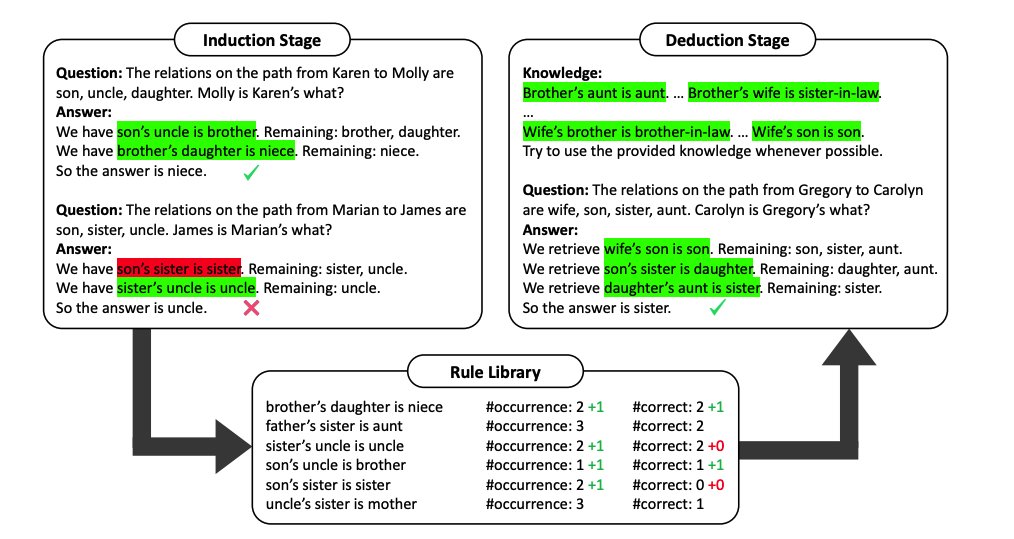

That’s exactly what the researchers tested.

- Gave LLMs made-up rules they’d never seen

- Tested them with brand-new examples

- Measured how well they applied the rules

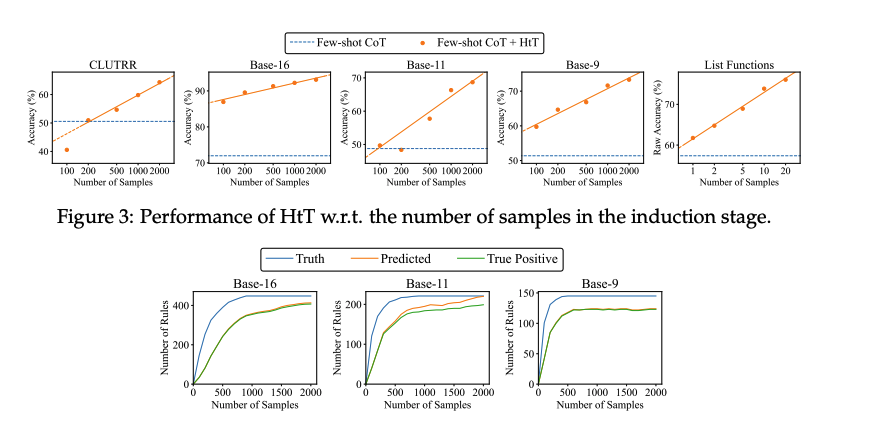

The result? Big models crushed it, with 10–30% better accuracy than existing prompting methods.

And the best part?

The rules didn’t just stick in that one task.

They could be transferred to other problems or even different models.

That’s like teaching one student and suddenly the whole school gets smarter.

Why this matters:

Rule-following is critical in…

• Law

• Science

• Finance

• Safety systems

If AI can follow explicit rules, it’s more than just “creative”: it’s reliable.

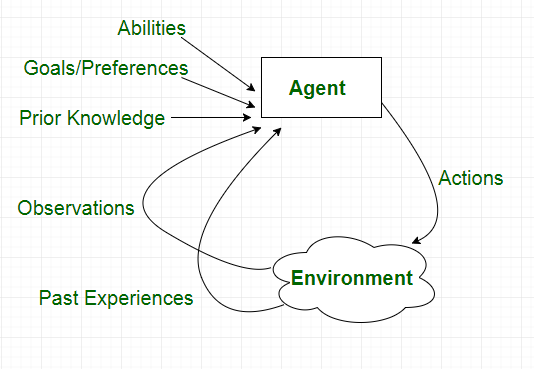

How they did it (simplified):

1. Create brand-new rules

2. Show the model correct examples

3. Throw brand-new, tricky problems at it

4. See if it applies the rules, not just patterns
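
Here’s a rough sketch of those four steps in Python. Everything in it is an assumption made for illustration, not the paper’s code: the invented `@` operator, the helper names, and the two stand-in "models" (plain functions, where a real setup would wrap an LLM call). It only shows why testing on unseen inputs separates rule-following from memorizing.

```python
import random
from typing import Callable, List, Tuple

# Step 1: a made-up rule. The "@" operator is hypothetical, invented for this sketch.
RULE_TEXT = "The operator @ is defined as: a @ b = 2*a + b."

def apply_rule(a: int, b: int) -> int:
    """Ground truth for the invented rule."""
    return 2 * a + b

def make_items(n: int, lo: int, hi: int, rng: random.Random) -> List[Tuple[str, int]]:
    """Generate (question, answer) pairs with operands drawn from [lo, hi]."""
    items = []
    for _ in range(n):
        a, b = rng.randint(lo, hi), rng.randint(lo, hi)
        items.append((f"{a} @ {b}", apply_rule(a, b)))
    return items

def build_prompt(examples: List[Tuple[str, int]], question: str) -> str:
    """Step 2: state the rule, show worked examples, then pose the new question."""
    lines = [RULE_TEXT] + [f"{q} = {ans}" for q, ans in examples] + [f"{question} ="]
    return "\n".join(lines)

def accuracy(model: Callable[[str], str], examples, tests) -> float:
    """Steps 3 and 4: ask unseen questions and score exact-match answers."""
    correct = 0
    for question, answer in tests:
        reply = model(build_prompt(examples, question))
        correct += reply.strip() == str(answer)
    return correct / len(tests)

def memorizing_model(prompt: str) -> str:
    """Stand-in that can only repeat answers it saw in the examples."""
    *seen, query = prompt.splitlines()[1:]
    table = dict(line.split(" = ") for line in seen)
    return table.get(query[:-2], "?")

def rule_following_model(prompt: str) -> str:
    """Stand-in that actually applies the stated rule to the new question."""
    query = prompt.splitlines()[-1]
    a, b = (int(x) for x in query[:-2].split(" @ "))
    return str(2 * a + b)

rng = random.Random(0)
demos = make_items(5, 0, 9, rng)        # small numbers in the examples
tests = make_items(20, 100, 999, rng)   # much larger, never-seen numbers in the test
print("memorizer:", accuracy(memorizing_model, demos, tests))
print("rule follower:", accuracy(rule_following_model, demos, tests))
```

Because the test operands never overlap with the examples, a pure pattern-matcher has nothing to copy from. Only something that applies the rule can score.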

One thing was clear: Prompt clarity is king.

When the rule is explained cleanly in the prompt, performance jumps.

Vague, messy instructions? Accuracy tanks.
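
A minimal sketch of what "explained cleanly" could look like in practice. The `build_rule_prompt` helper, the prompt wording, and the kinship rules are all hypothetical, made up for this example rather than taken from the paper. The idea is just one rule per numbered line, then the question, then a request to cite the rule used.

```python
from typing import List

def build_rule_prompt(rules: List[str], question: str) -> str:
    """Lay out each rule on its own numbered line, then ask the question."""
    numbered = [f"Rule {i}: {rule.strip()}" for i, rule in enumerate(rules, start=1)]
    return "\n".join(
        ["Use only the rules below to answer."]
        + numbered
        + ["", f"Question: {question.strip()}", "Answer (cite the rule number you used):"]
    )

# Hypothetical kinship rules, invented for illustration.
rules = [
    "The father of a person's mother is that person's grandfather.",
    "The sister of a person's father is that person's aunt.",
]
prompt = build_rule_prompt(
    rules, "Ann is Bob's mother. Carl is Ann's father. Who is Carl to Bob?"
)
print(prompt)
```

Numbering the rules also makes the answer easier to check, since you can see which rule the model says it applied.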

And sometimes?

Models nailed it on the first try: no training, just from reading the rules.

That’s like reading chess rules and winning your first game.

But there’s a catch:

✅ Simple, short rules → learned fast

⚠️ Long, tangled rules → harder to master

Complexity still matters.

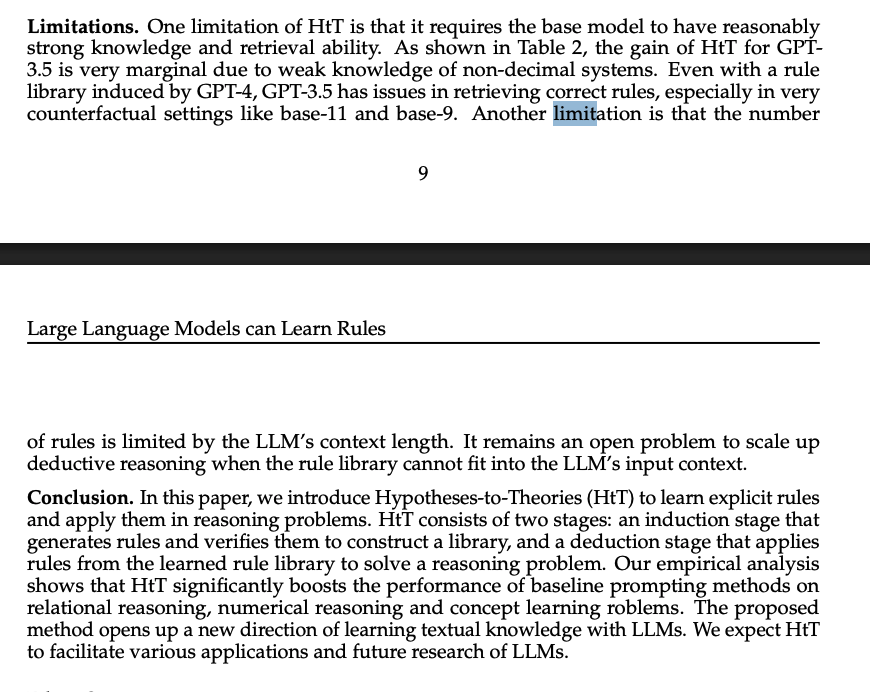

Limitations? Yeah, there are a few.

• Mostly clean, synthetic data

• Real-world rules are messy

• Edge cases can trip models up

Still, it’s a big leap for symbolic reasoning in LLMs.

The takeaway:

LLMs aren’t just parrots.

With the right setup, they can:

• Learn explicit rules

• Apply them to new cases

• Share that knowledge

That’s getting close to true reasoning.

Imagine the possibilities:

• AI contract review that actually follows legal clauses

• AI tutors that enforce grammar/math rules

• Game AIs adapting instantly to brand-new mechanics

For builders:

• Clearer prompts = better rules

• Fine-tuning locks them in

• Smaller, focused rule sets work best

Think precision over complexity.

If LLMs can learn & transfer rules, the “fancy autocomplete” era is over.

We’re in the age of AI that can be taught like a student.

And a well-taught student? Can change the game.

Read it here:

arxiv.org/abs/2310.07064

P.S.

We built ClipYard for ruthless performance marketers.

→ Better ROAS

→ 10x faster content ops

→ No human error

→ Full creative control

You’ve never seen AI avatars like this before → clipyard.ai

I hope you've found this thread helpful.

Follow me @AlexanderFYoung for more.

Like/Repost the quote below if you can:

https://twitter.com/23948998/status/1956648796659867884
