This is really exciting and impressive, and this stuff is in my area of mathematics research (convex optimization).

I have a nuanced take. 🧵 (1/9)

I have a nuanced take. 🧵 (1/9)

https://twitter.com/SebastienBubeck/status/1958198661139009862

There are 3 proofs in discussion:

v1. ( η ≤ 1/L, discovered by human )

v2. ( η ≤ 1.75/L, discovered by human )

v.GTP5 ( η ≤ 1.5/L, discovered by AI )

Sebastien argues that the v.GPT5 proof is impressive, even though it is weaker than the v2 proof. (2/9)

v1. ( η ≤ 1/L, discovered by human )

v2. ( η ≤ 1.75/L, discovered by human )

v.GTP5 ( η ≤ 1.5/L, discovered by AI )

Sebastien argues that the v.GPT5 proof is impressive, even though it is weaker than the v2 proof. (2/9)

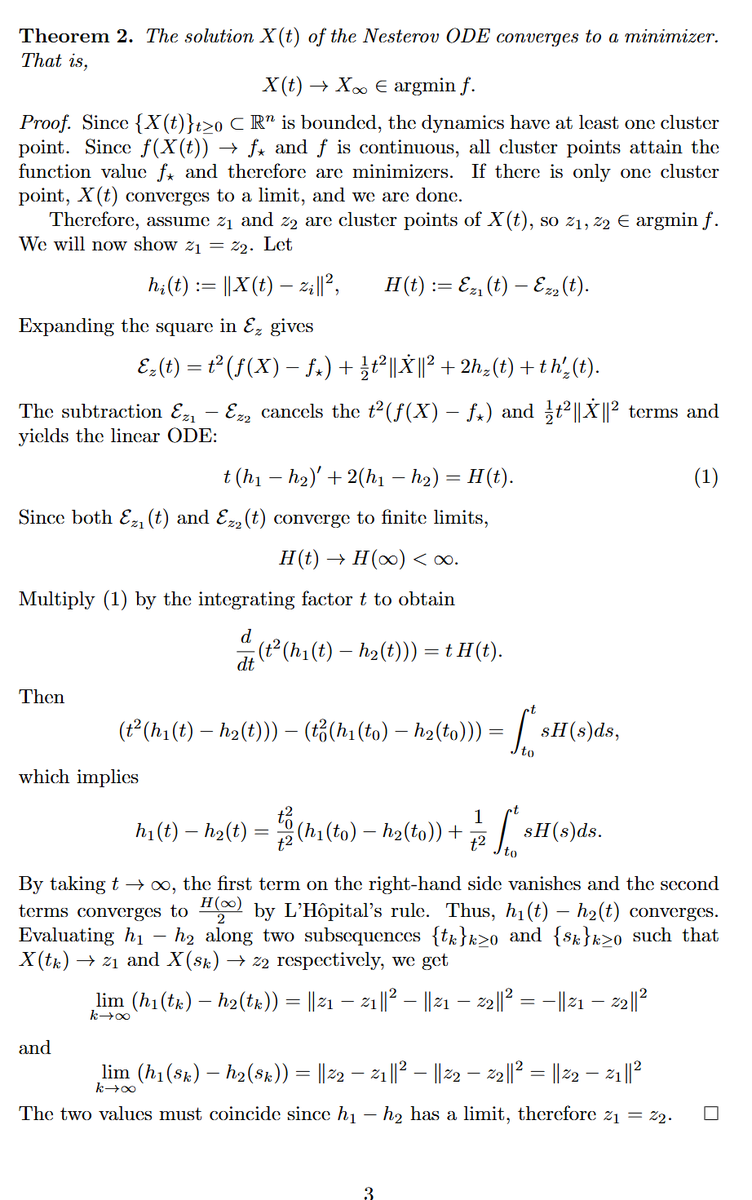

The proof itself is arguably not very difficult for an expert in convex optimization, if the problem is given.

Knowing that the key inequality to use is [Nesterov Theorem 2.1.5], I could prove v2 in a few hours by searching through the set of relevant combinations. (3/9)

Knowing that the key inequality to use is [Nesterov Theorem 2.1.5], I could prove v2 in a few hours by searching through the set of relevant combinations. (3/9)

(And for reasons that I won’t elaborate here, the search for the proof is precisely a 6-dimensional search problem. The author of the v2 proof, Moslem Zamani, also knows this. I know Zamani’s work enough to know that he knows.)

(4/9)

(4/9)

(In research, the key challenge is often in finding problems that are both interesting and solvable. This paper is an example of an interesting problem definition that admits a simple solution.)

(5/9)

(5/9)

When proving bounds (inequalities) in math, there are 2 challenges:

(i) Curating the correct set of base/ingredient inequalities. (This is the part that often requires more creativity.)

(ii) Combining the set of base inequalities. (Calculations can be quite arduous.)

(6/9)

(i) Curating the correct set of base/ingredient inequalities. (This is the part that often requires more creativity.)

(ii) Combining the set of base inequalities. (Calculations can be quite arduous.)

(6/9)

In this problem, that [Nesterov Theorem 2.1.5] should be the key inequality to be used for (i) is known to those working in this subfield. (7/9)

So, the choice of base inequalities (i) is clear/known to me, ChatGPT, and Zamani. Having (i) figured out significantly simplifies this problem. The remaining step (ii) becomes mostly calculations. (8/9)

The proof is something an experienced PhD student could work out in a few hours. That GPT-5 can do it with just ~30 sec of human input is impressive and potentially very useful to the right user.

However, GPT5 is by no means exceeding the capabilities of human experts. (9/9)

However, GPT5 is by no means exceeding the capabilities of human experts. (9/9)

• • •

Missing some Tweet in this thread? You can try to

force a refresh