Two big updates to the Responses API today.

🖇️ Connectors — Pull context from Gmail, Google Calendar, Dropbox, and more in a single API call.

💬 Conversations — Persist chat threads for your users, without running your own database.

More below:

Build rich experiences with connectors to:

- Read emails

- Fetch calendar events

- Search files and chats

Gmail, Google Calendar, Drive, Dropbox, Teams, Outlook Calendar + Email, and SharePoint are available now.

They work with deep research, too!

platform.openai.com/docs/guides/to…
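Here is a minimal sketch of what a single multi-connector call might look like. It builds the request as a plain Python dict, so it runs without network access or credentials. It assumes, based on my reading of the connectors guide, that each connector is attached as an `mcp` tool with a `connector_id` and the user's OAuth access token in `authorization`. The ID strings, the `connector_tool` and `build_request` helpers, and the model name are assumptions to check against the docs; this thread does not confirm them.

```python
"""Sketch: one Responses API request body that attaches several connectors.

Assumption: connectors are passed as `mcp` tools carrying a `connector_id`,
and the IDs below match the current connectors guide. Verify before use.
"""
from typing import Any

# Assumed connector IDs; check the docs for the authoritative list.
CONNECTOR_IDS = {
    "gmail": "connector_gmail",
    "google_calendar": "connector_googlecalendar",
    "google_drive": "connector_googledrive",
    "dropbox": "connector_dropbox",
    "teams": "connector_microsoftteams",
    "outlook_calendar": "connector_outlookcalendar",
    "outlook_email": "connector_outlookemail",
    "sharepoint": "connector_sharepoint",
}


def connector_tool(service: str, oauth_token: str) -> dict[str, Any]:
    """Return one tool entry for `service`, authorized with the user's token."""
    if service not in CONNECTOR_IDS:
        raise ValueError(f"unknown connector: {service!r}")
    return {
        "type": "mcp",
        "server_label": service,
        "connector_id": CONNECTOR_IDS[service],
        # Your app obtains this token through the provider's own OAuth flow.
        "authorization": oauth_token,
        # Skip per-call approval prompts; assumed acceptable for read-only lookups.
        "require_approval": "never",
    }


def build_request(model: str, prompt: str, tokens: dict[str, str]) -> dict[str, Any]:
    """Combine several connectors into a single request body."""
    return {
        "model": model,
        "tools": [connector_tool(name, tok) for name, tok in tokens.items()],
        "input": prompt,
    }


if __name__ == "__main__":
    body = build_request(
        model="gpt-4.1",  # placeholder model name
        prompt="What meetings do I have tomorrow, and are there related emails?",
        tokens={"google_calendar": "<calendar token>", "gmail": "<gmail token>"},
    )
    print(body["tools"][0]["connector_id"])  # → connector_googlecalendar
```

With the official `openai` Python package, a body like this would be sent with `client.responses.create(**body)`. Getting the OAuth token from Google, Microsoft, or Dropbox is left to your app.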

Here’s a demo app that shows how you can connect your app to Google Calendar and fetch upcoming events: github.com/openai/openai-…

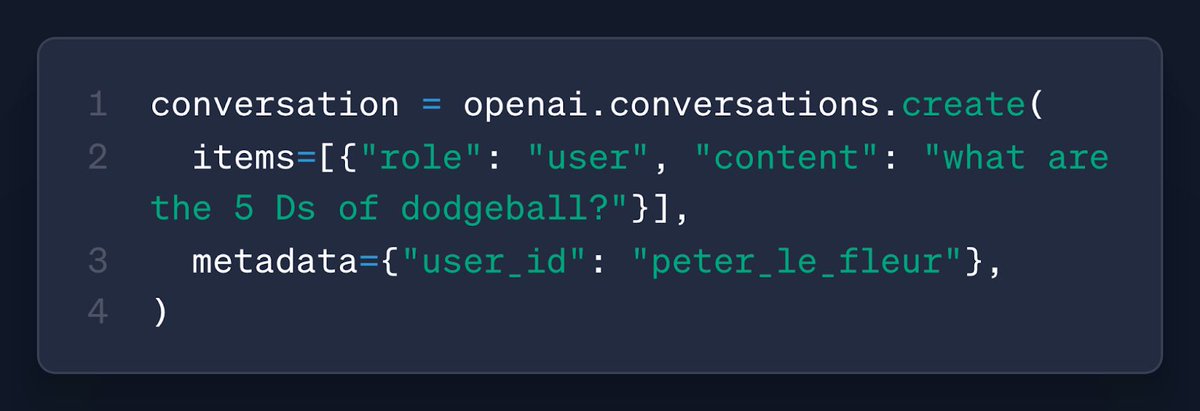

With the Conversations API, you can now store context from Responses API calls (messages, tool calls, tool outputs, and other data). Easily render past chats, then let your users pick up where they left off (just like in ChatGPT).

platform.openai.com/docs/guides/co…
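Here is a rough sketch of both halves of that flow: a request that continues a stored conversation, and a helper that turns stored items back into chat lines for a UI. It assumes the Responses API accepts a `conversation` ID and that stored message items carry `input_text` or `output_text` content parts. `continue_request`, `render_transcript`, the `conv_...` ID, and the sample items are hypothetical.

```python
"""Sketch: continue a stored conversation and render its history.

Assumptions: the Responses API takes a `conversation` ID, and stored items are
dicts where messages hold `input_text`/`output_text` parts in `content`.
"""
from typing import Any


def continue_request(model: str, conversation_id: str, user_text: str) -> dict[str, Any]:
    """Request body that appends a new user turn to an existing conversation."""
    return {
        "model": model,
        "conversation": conversation_id,  # prior items are loaded server-side
        "input": [{"role": "user", "content": user_text}],
    }


def render_transcript(items: list[dict[str, Any]]) -> list[str]:
    """Turn stored conversation items into 'role: text' lines for a chat UI."""
    lines = []
    for item in items:
        if item.get("type") != "message":
            # Tool calls and tool outputs are stored too; show a compact marker.
            lines.append(f"[{item.get('type', 'unknown')}]")
            continue
        text = "".join(
            part.get("text", "")
            for part in item.get("content", [])
            if part.get("type") in ("input_text", "output_text")
        )
        lines.append(f"{item['role']}: {text}")
    return lines


if __name__ == "__main__":
    # Hypothetical stored history: a user turn, a tool call, an assistant reply.
    stored = [
        {"type": "message", "role": "user",
         "content": [{"type": "input_text", "text": "Plan my week."}]},
        {"type": "mcp_call", "name": "search_events"},
        {"type": "message", "role": "assistant",
         "content": [{"type": "output_text", "text": "You have 3 meetings."}]},
    ]
    for line in render_transcript(stored):
        print(line)
    print(continue_request("gpt-4.1", "conv_123", "Move the Friday one.")["conversation"])
```

In a real app the items would come from the Conversations API's item-listing endpoint (`client.conversations.items.list(...)` in recent versions of the Python SDK, if I'm reading the docs right) rather than a hard-coded list.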
