OpenAI has released a shocking paper that reveals

"why AI hallucinates, and the mechanism behind it."

And it's free.

Here are the real reasons hallucinations occur, according to the paper, broken down into 6 points🧵


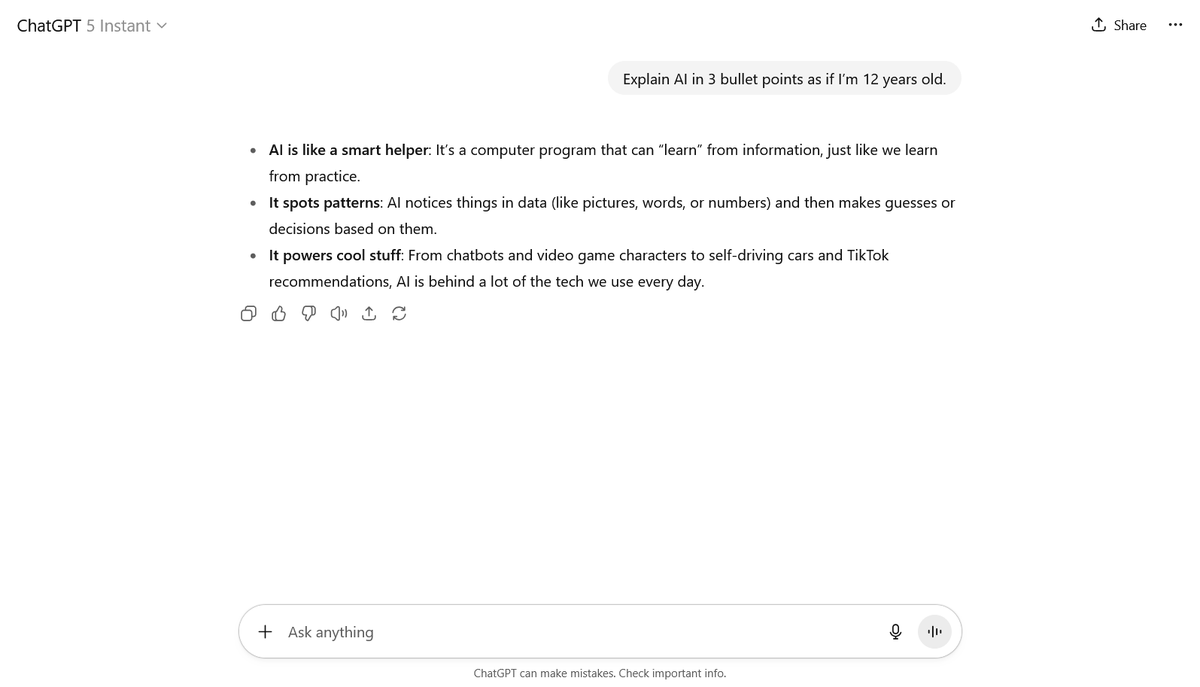

1. AI is trained in such a way that it cannot say "I don't know"

The biggest cause of hallucinations lies in the AI's training method itself.

Under current evaluation systems, a guess earns a higher expected score than answering "I don't know," even though the guess may be wrong, so the AI ends up learning to bluff (in effect, to lie).


2. "Accuracy Supremacy" Encourages Lying

Benchmarks that measure AI performance look almost exclusively at whether an answer is correct or incorrect.

Answering "I don't know" earns 0 points, the same as a wrong answer, so whenever there is any chance of being right, guessing has the higher expected score.

This "teaching to the test" setup has been producing AIs that confidently lie.
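To make the expected-value argument concrete, here is a minimal Python sketch. It assumes a plain accuracy-only grader (1 point for a correct answer, 0 for a wrong answer, 0 for "I don't know"); the function name is hypothetical and only for illustration.

```python
def expected_score_binary(p_correct: float, abstain: bool) -> float:
    """Expected score under (assumed) accuracy-only grading.

    Correct answer: 1 point. Wrong answer: 0 points. "I don't know": 0 points.
    """
    if abstain:
        return 0.0  # abstaining can never earn anything
    # A guess is right with probability p_correct and costs nothing when wrong.
    return p_correct * 1.0 + (1.0 - p_correct) * 0.0


for p in (0.9, 0.5, 0.1, 0.01):
    guess = expected_score_binary(p, abstain=False)
    idk = expected_score_binary(p, abstain=True)
    print(f"p={p:.2f}  guess={guess:.2f}  idk={idk:.2f}")
```

Even at a 1% chance of being right, guessing scores strictly higher than abstaining, so a model tuned against this kind of metric has no incentive to say "I don't know."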


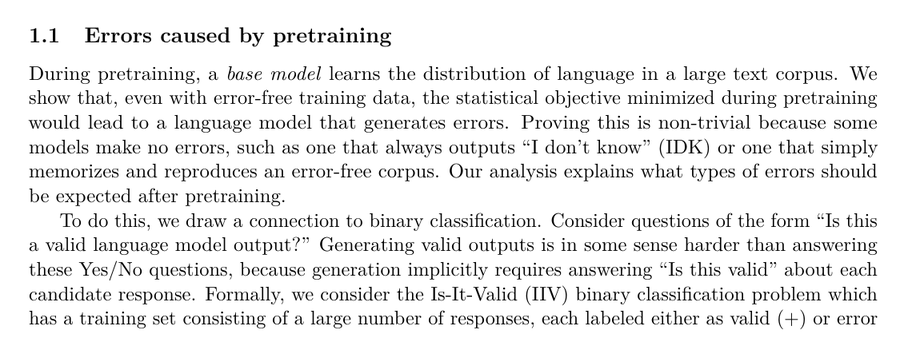

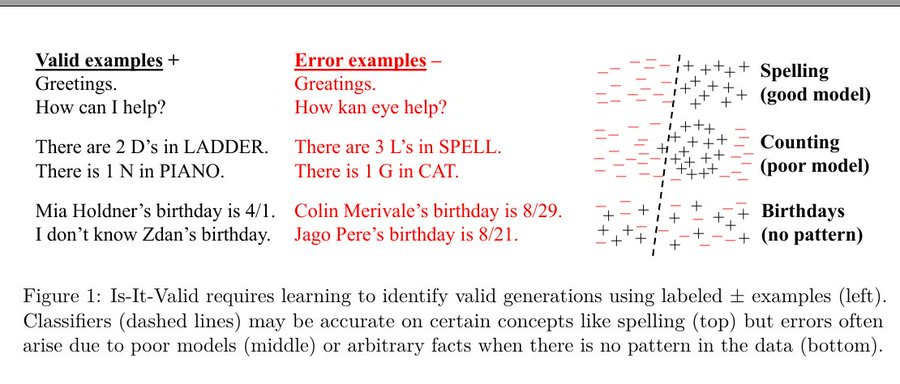

3. Hallucinations arise during the "pre-training" phase

Hallucinations originate in the model's initial training phase.

The model excels at learning general sentence patterns from vast amounts of text, but it struggles with information that follows no learnable pattern, such as rare, specific facts (an individual's birthday, for example). As a result, it generates plausible-sounding falsehoods.


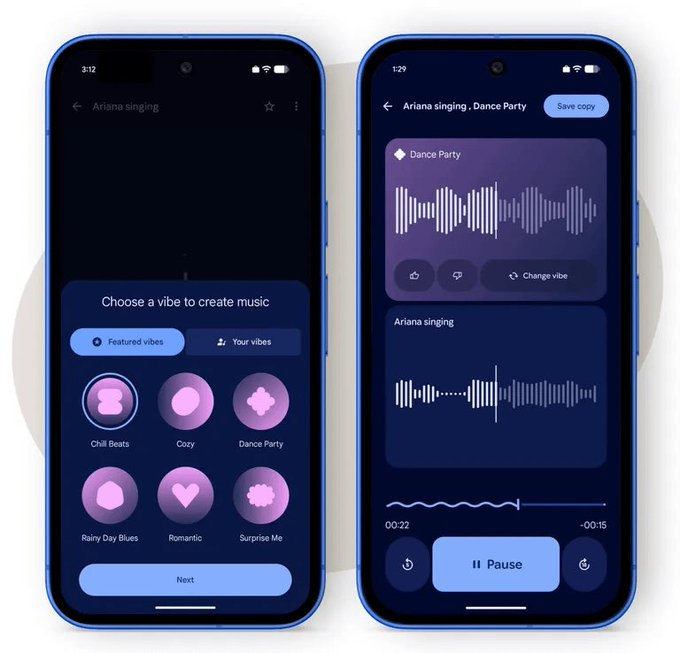

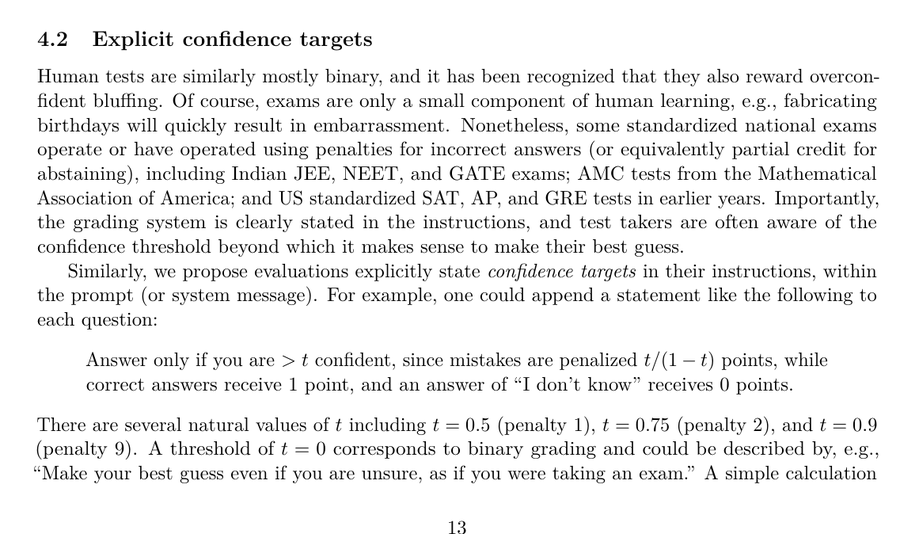

4. An Astonishingly Simple Solution

The solution presented in the paper involves changing the evaluation method.

Simply add a rule such as "only answer if you are more than 90% confident" and penalize incorrect answers more heavily (at a 90% threshold, the paper's example charges 9 points for a wrong answer versus 1 point for a correct one). This makes honestly responding "I don't know" the optimal strategy whenever the AI lacks confidence.
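The sketch below shows why a confidence threshold changes the optimal strategy. It assumes the scoring scheme the paper describes for a confidence target t: +1 for a correct answer, 0 for "I don't know", and a penalty of t/(1−t) for a wrong answer. The function names are hypothetical. Answering pays off only when p − (1−p)·t/(1−t) > 0, which rearranges to p > t, so the rule "answer only if you are more than t confident" becomes the score-maximizing policy.

```python
def wrong_answer_penalty(t: float) -> float:
    """Points deducted for a wrong answer at confidence target t.

    Assumed scheme: t / (1 - t), so t = 0.9 gives a 9-point penalty.
    """
    if not 0.0 <= t < 1.0:
        raise ValueError("t must be in [0, 1)")
    return t / (1.0 - t)


def expected_score(p_correct: float, t: float) -> float:
    """Expected score of answering: +1 if right, minus the penalty if wrong."""
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_answer_penalty(t)


def best_action(p_correct: float, t: float) -> str:
    """Answer only when answering beats the 0 points earned by "I don't know"."""
    return "answer" if expected_score(p_correct, t) > 0.0 else "I don't know"


t = 0.9  # "only answer if you are more than 90% confident"
print(f"penalty for a wrong answer: {wrong_answer_penalty(t):.1f}")
for p in (0.95, 0.6, 0.3):
    print(f"p={p:.2f}  expected={expected_score(p, t):+.2f}  ->  {best_action(p, t)}")
```

Setting t = 0 makes the penalty zero and collapses this back to accuracy-only grading, where any nonzero chance of being right makes guessing worthwhile.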


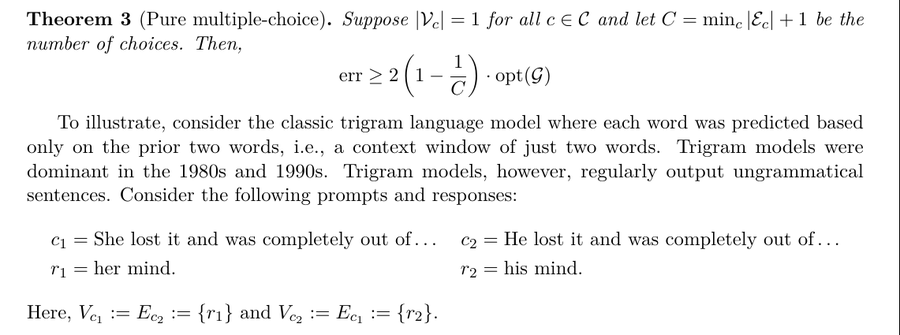

5. Computational Limits and Model Limitations

Hallucinations also occur on computationally hard problems, where no model can answer correctly better than chance, or because of structural limitations of the model itself (e.g., older models confusing "he" and "she").


6. Singleton Problem

When much of the training data consists of facts that appear only once (singletons), it is statistically impossible for the AI to reach 100% accuracy on them.

With no repeated pattern to learn from, the model has no choice but to guess.
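A minimal sketch of the singleton idea, assuming the training data can be reduced to a list of fact identifiers (a hypothetical simplification). The paper's heuristic is roughly that if, say, 20% of birthday facts appear exactly once in pretraining data, a base model can be expected to hallucinate on at least about 20% of them. The code below only computes a singleton rate; it uses the Good-Turing-style ratio N1/N (examples whose fact occurs once, divided by all examples), and that exact definition is an assumption here.

```python
from collections import Counter


def singleton_rate(training_facts: list[str]) -> float:
    """Fraction of training examples whose fact occurs exactly once (N1 / N).

    Good-Turing-style estimate of how much probability mass sits on facts
    the model has effectively seen only once.
    """
    if not training_facts:
        return 0.0
    counts = Counter(training_facts)
    singletons = sum(1 for c in counts.values() if c == 1)
    return singletons / len(training_facts)


# Hypothetical toy corpus: each string stands for one mention of a birthday fact.
corpus = [
    "alice:03-14", "alice:03-14", "alice:03-14",  # well-known, repeated
    "bob:07-02", "bob:07-02",                     # seen twice
    "carol:11-30",                                # singleton
    "dave:01-09",                                 # singleton
    "erin:05-21",                                 # singleton
]
print(f"singleton rate: {singleton_rate(corpus):.3f}")  # singleton rate: 0.375
```

Read under that heuristic, a corpus like this leaves the model guessing on a sizable share of birthday questions, because a single mention gives it nothing to generalize from.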


OpenAI concludes that

"hallucinations are not a mysterious phenomenon, but merely a statistical classification error."

It argues that AI's lies are not bugs but the inevitable result of current evaluation systems, and that simply changing how models are evaluated can produce a more honest AI.


AI is shaping your future - discover the key developments, challenges, and opportunities in The AI Foreground.

aiforeground.beehiiv.com
